Prepared for:

Department of Homeland Security

Cyber Threat Modeling:

Survey, Assessment, and Representative Framework

April 7, 2018

Authors:

Deborah J. Bodeau

Catherine D. McCollum

David B. Fox

The Homeland Security Systems Engineering and Development Institute (HSSEDI)™

Operated by The MITRE Corporation

Approved for Public Release; Distribution Unlimited.

Case Number 18-1174 / DHS reference number 16-J-00184-01

This document is a product of the Homeland Security Systems Engineering and Development Institute (HSSEDI™).


Homeland Security Systems Engineering & Development Institute

The Homeland Security Act of 2002 (Section 305 of PL 107-296, as codified in 6 U.S.C. 185), herein

referred to as the “Act,” authorizes the Secretary of the Department of Homeland Security (DHS), acting

through the Under Secretary for Science and Technology, to establish one or more federally funded

research and development centers (FFRDCs) to provide independent analysis of homeland security issues.

The MITRE Corporation operates the Homeland Security Systems Engineering and Development

Institute (HSSEDI) as an FFRDC for DHS under contract HSHQDC-14-D-00006.

The HSSEDI FFRDC provides the government with the necessary systems engineering and development

expertise to conduct complex acquisition planning and development; concept exploration,

experimentation and evaluation; information technology, communications and cyber security processes,

standards, methodologies and protocols; systems architecture and integration; quality and performance

review, best practices and performance measures and metrics; and independent test and evaluation

activities. The HSSEDI FFRDC also works with and supports other federal, state, local, tribal, public and

private sector organizations that make up the homeland security enterprise. The HSSEDI FFRDC’s

research is undertaken by mutual consent with DHS and is organized as a set of discrete tasks. This report

presents the results of research and analysis conducted under:

HSHQDC-16-J-00184

Next Generation Cyber Infrastructure (NGCI) Apex Cyber Risk Metrics and Threat Model Assessment

This HSSEDI task order is to enable the DHS Science and Technology Directorate (S&T) to facilitate

improvement of cybersecurity within the Financial Services Sector (FSS). To support NGCI Apex use

cases and provide a common frame of reference for community interaction to supplement institution-

specific threat models, HSSEDI developed an integrated suite of threat models identifying attacker

methods from the level of a single FSS institution up to FSS systems-of-systems, and a corresponding

cyber wargaming framework linking technical and business views. HSSEDI assessed risk metrics and risk

assessment frameworks, provided recommendations toward development of scalable cybersecurity risk

metrics to meet the needs of the NGCI Apex program, and developed representations depicting the

interdependencies and data flows within the FSS.

The results presented in this report do not necessarily reflect official DHS opinion or policy.

For more information about this publication contact:

Homeland Security Systems Engineering & Development Institute

The MITRE Corporation

7515 Colshire Drive

McLean, VA 22102

Email:

HSSEDI_info@mitre.org

http://www.mitre.org/HSSEDI


Abstract

This report provides a survey of cyber threat modeling frameworks, presents a comparative

assessment of the surveyed frameworks, and extends an existing framework to serve as a basis

for cyber threat modeling for a variety of purposes. The Department of Homeland Security

(DHS) Science and Technology Directorate (S&T) Next Generation Cyber Infrastructure (NGCI)

Apex program will use threat modeling and cyber wargaming to inform the development and

evaluation of risk metrics, technology foraging, and the evaluation of how identified

technologies could decrease risks. A key finding of the assessment was that no existing

framework or model was sufficient to meet the needs of the NGCI Apex program. Therefore, this

paper also presents a threat modeling framework for the NGCI Apex program, with initial

population of that framework. The survey, assessment, and framework as initially populated are

general enough to be used by medium-to-large organizations in critical infrastructure sectors,

particularly in the Financial Services Sector, seeking to ensure that cybersecurity and resilience

efforts consider cyber threats in a rigorous, repeatable way.

Key Words

1. Cyber Threat Models

2. Next Generation Cyber Infrastructure (NGCI)

3. Cyber Risk Metrics

4. Cybersecurity

5. Threat Modeling Framework


Table of Contents

1 Introduction

1.1 Key Concepts and Terminology

1.2 Uses of Threat Modeling

1.2.1 Risk Management and Risk Metrics

1.2.2 Cyber Wargaming

1.2.3 Technology Profiling and Technology Foraging

1.3 Survey and Assessment Approach

2 Threat Modeling Frameworks and Methodologies

2.1 Frameworks for Cyber Risk Management

2.1.1 NIST Framework for Improving Critical Infrastructure Cybersecurity

2.1.2 Publications Produced by the Joint Task Force Transformation Initiative

2.1.3 CBEST Intelligence-Led Cyber Threat Modelling

2.1.4 COBIT 5 and Risk IT

2.1.5 Topic-Focused Frameworks and Methodologies

2.2 Threat Modeling to Support Design Analysis and Testing

2.2.1 Draft NIST Special Publication 800-154, Guide to Data-Centric System Threat Modeling

2.2.2 STRIDE

2.2.3 DREAD

2.2.4 Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE)

2.2.5 Intel’s Threat Agent Risk Assessment (TARA) and Threat Agent Library (TAL)

2.2.6 IDDIL/ATC

2.3 Threat Modeling to Support Information Sharing and Security Operations

2.3.1 STIX™

2.3.2 OMG Threat / Risk Standards Initiative

2.3.3 PRE-ATT&CK™

2.3.4 Cyber Threat Framework

3 Specific Threat Models

3.1 Enterprise-Neutral, Technology-Focused

3.1.1 Adversarial Tactics, Techniques, and Common Knowledge (ATT&CK™)

3.1.2 Common Attack Pattern Enumeration and Classification (CAPEC™)

3.1.3 Web Application Threat Models

3.1.4 Invincea Threat Modeling

3.1.5 Other Taxonomies and Attack Pattern Catalogs

3.1.6 Threat Modeling for Cloud Computing

3.2 Enterprise-Oriented, Technology-Focused

3.2.1 MITRE’s Threat Assessment and Remediation Analysis (TARA)

3.2.2 NIPRNet/SIPRNet Cyber Security Architecture Review (NSCSAR)

3.2.3 Notional Threat Model for a Large Financial Institution

4 Analysis and Assessment

4.1 Characterizing Threat Models

4.1.1 Characterizing Models in General

4.1.2 Characteristics of Cyber Threat Models

4.1.3 Cyber Threat Frameworks, Methodologies, and General Models

4.2 Assessment of Cyber Threat Models

4.2.1 Assessment Criteria

4.2.2 Assessment of Surveyed Models, Frameworks, and Methodologies

4.3 Relevance of Cyber Threat Modeling Constructs

4.4 Combining Cyber Threat Models for NGCI Apex

5 Initial Cyber Threat Model

5.1 Modeling Framework

5.1.1 Adversary Intent

5.1.2 Adversary Targeting

5.1.3 Adversary Capabilities

5.1.4 Behaviors or Threat Events

5.1.5 Threat Scenarios

5.2 Initial Representative Threat Model

5.2.1 Adversary Characteristics

5.2.2 Adversary Behaviors and Threat Events

5.3 Structuring Representative Threat Scenarios

6 Conclusion

Appendix A Modeling Constructs

List of Acronyms

Glossary

List of References

List of Figures

Figure 1. Threat Models Are Developed from a Variety of Perspectives

Figure 2. Scope of Risk Management Decisions to Be Supported by Threat Model

Figure 3. Threat Modeling Approaches

Figure 4. The Cyber Defense Matrix

Figure 5. Risk Management Implementation Tiers and Functions in the NIST Cybersecurity Framework

Figure 6. Risk Management Scope of Decision Making in the NIST Cybersecurity Framework

Figure 7. Cyber Prep Framework

Figure 8. Attributes of Adversary Capabilities

Figure 9. Cyber Attack Lifecycle

Figure 10. CAPEC™ Model

Figure 11. Example TARA Threat Matrix

Figure 12. Large Financial Institution Notional Threat Model

Figure 13. Characterizing a Model for Use in Evaluating Effectiveness

Figure 14. Uses of Cyber Threat Models in NGCI Apex

Figure 15. Threat Modeling Level of Detail Depends on Whether and How Assets and Systems Are Modeled

Figure 16. Key Constructs in Cyber Threat Modeling (Details for Adversarial Threats Not Shown)

Figure 17. Relationships Between Aspects of Adversary’s Intent and Other Key Constructs

List of Tables

Table 1. ODNI Cyber Threat Framework .................................................................................................. 25

Table 2. ATT&CK Categories of Tactics .................................................................................................. 27

Table 3. Characteristics of Threat Models and Frameworks .................................................................. 35

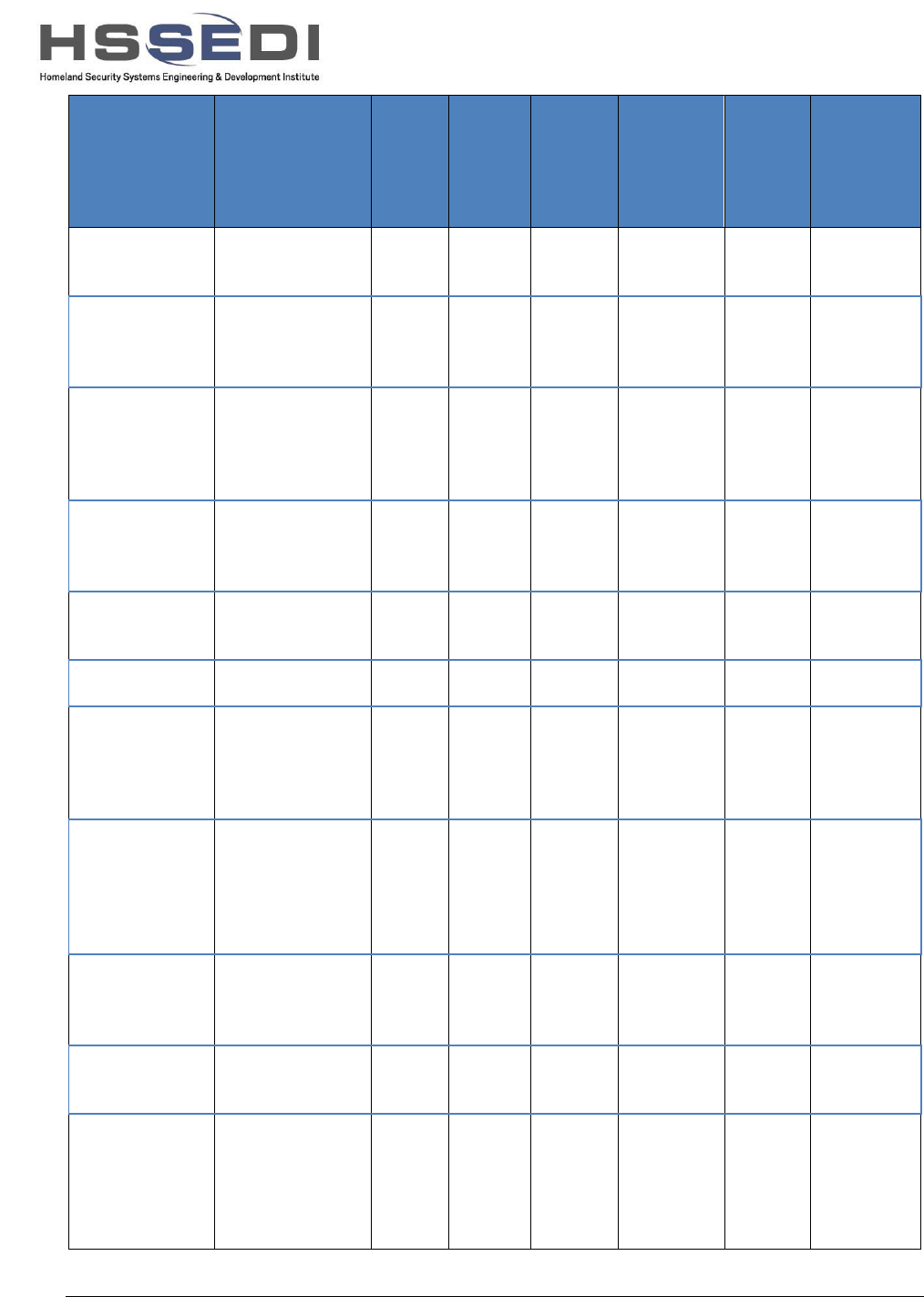

Table 4. Profiles of Surveyed Threat Models and Frameworks ............................................................. 38

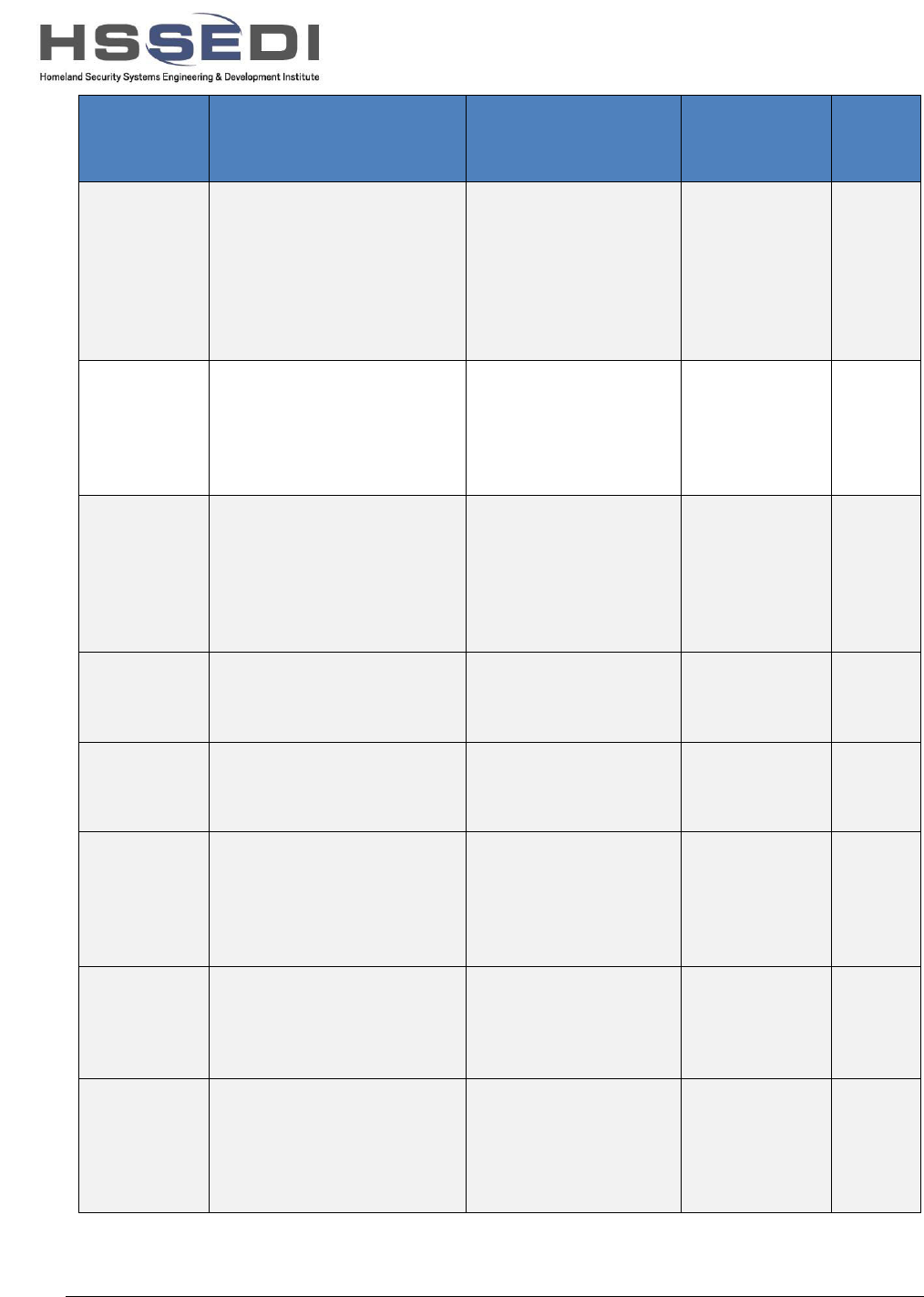

Table 5. Evaluation Attributes .................................................................................................................. 49

Table 6. Summary Assessment of Threat Models and Frameworks ..................................................... 50

Table 7. Profiles of Desired Characteristics of Threat Models for Different Purposes ........................ 51

Table 8. Uses of Cyber Threat Modeling Constructs .............................................................................. 53

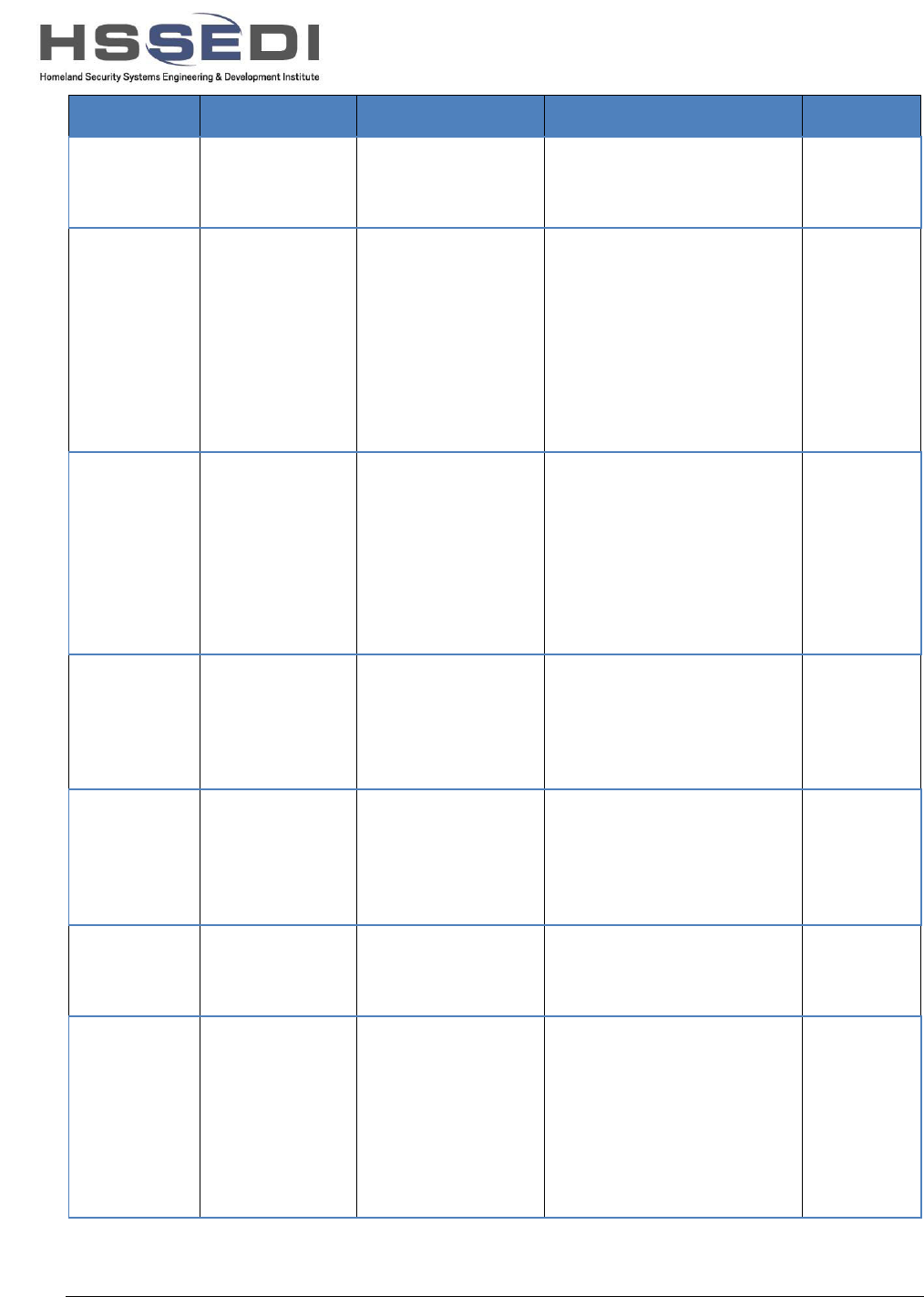

Table 9. Characteristics Related to Adversary Intent: Goals, Cyber Effects, and Organizational

Consequences ........................................................................................................................................... 63

Table 10. Characteristics Related to Adversary Intent: Timeframe, Persistence, Stealth, CAL Stages

.................................................................................................................................................................... 65

vii

Ta

ble 11. Scope or Scale of Effects ................................................................

......................................... 65

Table 12. Characteristics of Adversary Capabilities: Resources, Methods, and Attack Vectors ....... 67

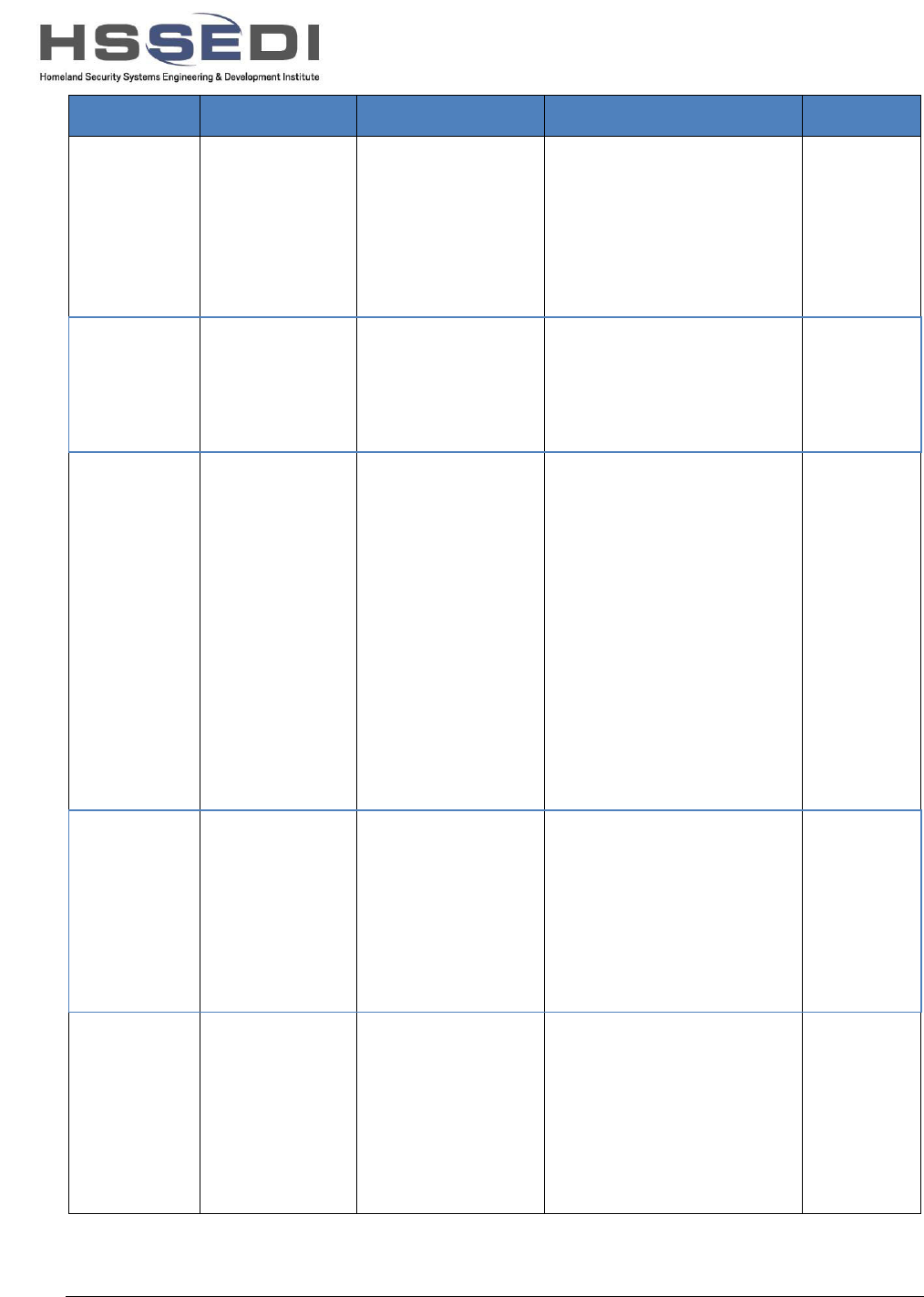

Table 13. Cyber Effects ............................................................................................................................. 69

Table 14. Adversary Goals, Typical Actors, and Targets ....................................................................... 70

Table 15. Adversary Behaviors and Threat Events ................................................................................. 72

Table 16. Building Blocks for Threat Scenarios ...................................................................................... 81

Table 17. Threat Modeling Constructs ..................................................................................................... 86

1 Introduction

This report provides a survey of cyber threat modeling frameworks, presents a comparative

assessment of the surveyed frameworks, and extends an existing framework to serve as a basis

for cyber threat modeling for a variety of purposes.

The work in this report was performed for the Department of Homeland Security (DHS) Science

and Technology Directorate (S&T) Next Generation Cyber Infrastructure (NGCI) Apex

Program. That program seeks to accelerate the adoption of effective information technology (IT)

security risk-mitigating cyber technologies by the Financial Services Sector (FSS). However,

while the NGCI Apex Program focuses on the FSS, cyber threat modeling is more broadly

applicable to medium-to-large organizations in other critical infrastructure sectors; it is also

applicable beyond individual organizations. Therefore, this report is offered as a general resource

for non-military use.¹

Cyber threat modeling is the process of developing and applying a representation of adversarial

threats (sources, scenarios, and specific events) in cyberspace. Such threats can target or affect a

device, an application, a system, a network, a mission or business function (and the system-of-

systems which support that mission or business function), an organization, a region, or a critical

infrastructure sector. The cyber threat modeling process can inform efforts related to

cybersecurity and resilience in multiple ways:

• Risk management. Cyber threat modeling is a component of cyber risk framing, analysis

and assessment, and evaluation of alternative responses (individually or in the context of

cybersecurity portfolio management), which are components of enterprise risk

management. While non-adversarial threats can, and must, also be considered in risk

management, this paper focuses on adversarial threat models for cybersecurity and

resilience. See Section 1.2.1.

• Cyber wargaming. Cyber threat modeling motivates and underlies the development of

threat scenarios used in cyber wargaming. In this context, cyber threat modeling is

strongly oriented toward the concerns of the stakeholders participating in or represented

in wargaming activities. See Section 1.2.2.

• Technology profiling and foraging. Cyber threat modeling can motivate the selection of

threat events or threat scenarios used to evaluate and compare the capabilities of

technologies, products, and services. That is, cyber threat modeling can enable technology

profiling, both to characterize existing technologies and to identify research gaps. It can

also support technology foraging, i.e., the process of scouting for and identifying

technologies of potential interest. See Section 1.2.3.

• Systems security engineering. Cyber threat modeling can be used throughout the system

development lifecycle (SDLC), including requirements definition, analysis and design,

implementation, testing, and operations and maintenance (O&M). However, it is

particularly important for design analysis and testing, where it motivates and underlies

the development of threat scenarios used to design and test devices, applications, and/or

systems [Kosten 2017]. Thus, cyber threat modeling can be oriented toward a specific

layer or set of layers in a notional layered architecture. While this purpose is not the

primary focus of this survey, some cyber threat modeling frameworks and approaches

oriented to this purpose are included in the survey.

• Security operations and analysis. Cyber threat modeling can focus the activities of cyber

defenders on specific types of threat events. These activities include threat hunting (searching

for indicators or evidence of adversary activities), continuous monitoring and security

assessment, and DevOps (rapid development and operational deployment of defense tools).

For this purpose, threat information sharing is crucial. To share threat information, a common

conception is needed of what constitutes such information: what information is relevant and

useful [NIST 2016c]. Some cyber threat modeling frameworks and approaches oriented to this

purpose are included in the survey.

¹ An excellent survey of the state of the art in cyber threat modeling for military purposes was prepared for Defence Research and

Development Canada (DRDC) by Bell Canada and Sphyrna Security [Magar 2016]. The framework developed in that report is

discussed in Section 2.

The process of cyber threat modeling involves selecting a cyber threat modeling framework and

populating that framework with specific values (e.g., adversary expertise, attack patterns and

attack events) as relevant to the intended scope (e.g., architectural layers or stakeholder

concerns). The populated framework can be used to construct threat scenarios (for risk

assessment, cyber wargaming, design analysis and testing); characterize controls, technologies,

or research efforts (for technology foraging); and/or share threat information and responses.
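The process just described (select a framework, populate it with values relevant to the intended scope, then construct threat scenarios) can be sketched in a few lines of Python. This is a minimal illustration only, assuming that a framework can be treated as a set of named dimensions, each with candidate values. The dimension names, the values, and the `scope` dictionary are hypothetical placeholders, not drawn from any of the surveyed frameworks.

```python
from itertools import product

# Hypothetical framework: each dimension lists the values a modeler may choose from.
FRAMEWORK = {
    "adversary_expertise": ["limited", "moderate", "advanced"],
    "attack_pattern": ["phishing", "credential_reuse", "supply_chain_insertion"],
    "architectural_layer": ["application", "network", "supply_chain"],
}


def populate(framework, scope):
    """Keep only the values the intended scope declares relevant.

    `scope` maps a dimension to its allowed values; dimensions absent
    from the scope keep all of their candidate values."""
    return {
        dim: [v for v in values if v in scope.get(dim, values)]
        for dim, values in framework.items()
    }


def candidate_scenarios(populated):
    """Enumerate one value per dimension as a candidate scenario skeleton,
    to be pruned and refined by analysts into full threat scenarios."""
    dims = list(populated)
    return [dict(zip(dims, combo)) for combo in product(*(populated[d] for d in dims))]


scope = {"adversary_expertise": ["advanced"], "architectural_layer": ["application", "network"]}
populated = populate(FRAMEWORK, scope)
for scenario in candidate_scenarios(populated):
    print(scenario)
```

Even this toy scope yields six skeletons (1 × 3 × 2), some of them implausible; the combinatorial growth is one reason that scoping by architectural layer or stakeholder concern, followed by analyst review, matters before scenarios are built.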

This introductory section presents key concepts and terminology, discusses some uses of cyber

threat modeling, and provides background on the survey and assessment process used to develop

this report. Section 2 provides a survey of cyber threat modeling frameworks and methodologies.

Section 3 presents a survey of populated cyber threat models or sub-models. Figure 1 illustrates

the fact that cyber threat modeling frameworks and methods have been developed as part of risk

frameworks and modeling methods, as general approaches to cyber threat modeling, oriented

toward enterprise information technology (EIT), and for non-EIT environments. The figure gives

a sense of the range of modeling frameworks surveyed in Sections 2 and 3.

Figure 1. Threat Models Are Developed from a Variety of Perspectives

Section 4 presents the analysis and assessment of the surveyed materials with respect to the goals

of the NGCI Apex Program. Section 5 provides an initial and partially populated cyber threat

modeling framework for use by the NGCI Apex Program, which may also be of broader use.

Appendix A explains relevant threat modeling constructs. Finally, a glossary, list of acronyms,

and references are provided.

1.1 Key Concepts and Terminology

A model is an abstract representation of some domain of human experience, used (1) to structure

knowledge, (2) to provide a common language for discussing that knowledge, and (3) to perform

analyses in that domain.

A variety of terms are used in threat modeling, including threat, threat actor, threat event, threat

vector, threat scenario, campaign, attacker, attack, attack vector, attack activity, malicious cyber

activity, and intrusion. Different threat modeling approaches define these terms differently, due

to assumptions about the contexts and purposes for which they will be used. Terminology related

to threat is embedded in a larger setting of terminology about risk. Definitions therefore depend

on the larger understanding of risk, and on assumptions about the technological and operational

environment in which risk will be managed.

At a minimum, a few concepts are key. These concepts relate to undesirable events; the forces or

actors which could cause those events to occur; stories or structured accounts of how one or

more undesirable events could result in harm; and the harms which could result. In general, the

terms corresponding to these concepts are threat event, threat source, threat scenario, and

consequences. For the NGCI Apex program, the focus is on cyber attacks as defined by [OFR

2017]: “Cyberattacks are deliberate efforts to disrupt, steal, alter, or destroy data stored on IT

systems.”

The Federal Financial Institutions Examination Council (FFIEC) Information Security (IS)

Handbook on Risk Assessment² [FFIEC 2016] defines threats as events:

Threats are events that could cause harm to the confidentiality, integrity, or availability of

information or information systems, through unauthorized disclosure, misuse, alteration,

or destruction of information or information systems.

However, the term “threat” is also used more broadly, to include circumstances and to modify

other terms.

Risk assessment guidance is published by the National Institute of Standards and Technology

(NIST) in NIST Special Publication (SP) 800-30R1 [NIST 2012]. NIST SP 800-30R1 defines

several terms related to threat:

Threat: Any circumstance or event with the potential to adversely impact organizational

operations (including mission, functions, image, or reputation), organizational assets,

individuals, other organizations, or the Nation through an information system via

unauthorized access, destruction, disclosure, or modification of information, and/or denial of

service.

Threat event: An event or situation that has the potential for causing undesirable

consequences or impact.

Threat scenario: A set of discrete threat events, associated with a specific threat source or

multiple threat sources, partially ordered in time. Synonym for Threat Campaign.

² The first (2001) version of NIST SP 800-30 is also specifically referenced by the FFIEC Handbook for Information Security as

an example of elements that comprise a sound risk assessment process. The guide defines a threat model framework consisting of

threat sources, threat events, vulnerabilities, likelihood (susceptibility), and impact. The 2001 version, though superseded, can be

found for reference at http://csrc.nist.gov/publications/nistpubs/800-30/sp800-30.pdf.
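Because NIST SP 800-30R1 defines a threat scenario as a set of threat events that is only partially ordered in time, a scenario can be represented as a directed acyclic graph of "must precede" constraints. The sketch below uses Python's standard `graphlib` module (Python 3.9 or later) to check that the constraints are consistent and to produce one admissible sequence. It is an illustration under stated assumptions: the event names and their ordering are hypothetical, not taken from NIST SP 800-30R1.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical threat events, each mapped to the set of events that must occur before it.
scenario = {
    "perform_reconnaissance": set(),
    "deliver_phishing_email": {"perform_reconnaissance"},
    "harvest_credentials": {"deliver_phishing_email"},
    "exploit_public_web_server": {"perform_reconnaissance"},
    "exfiltrate_data": {"harvest_credentials", "exploit_public_web_server"},
}


def admissible_sequence(partial_order):
    """Return one total ordering consistent with the partial order, or raise
    ValueError if the 'must precede' constraints contain a cycle."""
    try:
        return list(TopologicalSorter(partial_order).static_order())
    except CycleError as err:
        # graphlib stores the detected cycle as the second element of args.
        raise ValueError(f"not a partial order; cycle: {err.args[1]}") from err


def respects(sequence, partial_order):
    """Check that every event appears after all of its required predecessors."""
    position = {event: i for i, event in enumerate(sequence)}
    return all(
        position[pred] < position[event]
        for event, preds in partial_order.items()
        for pred in preds
    )


order = admissible_sequence(scenario)
print(order)
print(respects(order, scenario))  # True
```

The phishing branch and the web-server branch have no ordering constraint between them, so either may appear first in a valid sequence; that freedom is exactly what "partially ordered in time" permits.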

In the FFIEC IS Handbook, threats come from agents (referred to in other references as threat

actors or adversaries) who are internal or external. They have different capabilities and

motivations, which require the use of different risk mitigation and control techniques. Note that

this characterization (unlike the one provided in NIST SP 800-30R1) does not consider threats

from nation-state sources, which might seek competitive intelligence but might also try to cause

harm as a national security matter, whether illicitly or openly in coordination with other forms of

international conflict.

NIST SP 800-30R1 identifies four types of threat sources: adversarial, accidental, structural, and

environmental. In Table D-2, NIST SP 800-30R1 describes adversarial threats (i.e., threat actors)

as:

Individuals, groups, organizations, or states that seek to exploit the organization’s

dependence on cyber resources (i.e., information in electronic form, information and

communications technologies, and the communications and information-handling capabilities

provided by those technologies).
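The NIST SP 800-30R1 taxonomy of threat source types can be captured as a simple enumeration, so that every threat source recorded in a model is assigned exactly one of the four types. This is a minimal sketch: the `ThreatSource` record and its example entries are hypothetical, and serve only to show how the adversarial sources on which this report focuses can be separated from the other three types.

```python
from dataclasses import dataclass
from enum import Enum


class ThreatSourceType(Enum):
    """The four threat source types identified in NIST SP 800-30R1."""
    ADVERSARIAL = "adversarial"
    ACCIDENTAL = "accidental"
    STRUCTURAL = "structural"
    ENVIRONMENTAL = "environmental"


@dataclass(frozen=True)
class ThreatSource:
    name: str  # hypothetical free-text label for the source
    source_type: ThreatSourceType

    @property
    def is_adversarial(self) -> bool:
        return self.source_type is ThreatSourceType.ADVERSARIAL


# Hypothetical examples, one per type.
sources = [
    ThreatSource("organized crime group", ThreatSourceType.ADVERSARIAL),
    ThreatSource("administrator misconfiguration", ThreatSourceType.ACCIDENTAL),
    ThreatSource("storage hardware failure", ThreatSourceType.STRUCTURAL),
    ThreatSource("regional flooding", ThreatSourceType.ENVIRONMENTAL),
]

# Keep only adversarial sources, the focus of this report.
print([s.name for s in sources if s.is_adversarial])  # ['organized crime group']
```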

An adversarial threat has two main aspects: characteristics (e.g., capabilities, intent, and targeting

[NIST 2012]) and behaviors (often referred to as malicious cyber activities [NSTC 2016] or

attack activities). Adversary characteristics related to capabilities can include methods, resources

that can be directed or allocated, and relationships [Bodeau 2013]. Intent can have multiple

aspects: (i) cyber goals or intended cyber effects (e.g., denial of service, data modification), (ii)

non-cyber goals (e.g., financial gain), and (iii) risk trade-offs [Bodeau 2014]. Behaviors can be

described as tactics, techniques, and procedures (TTPs):

“Tactics are high-level descriptions of behavior, techniques are detailed descriptions of

behavior in the context of a tactic, and procedures are even lower-level, highly detailed

descriptions in the context of a technique. TTPs could describe an actor’s tendency to use a

specific malware variant, order of operations, attack tool, delivery mechanism (e.g., phishing

or watering hole attack), or exploit.” [NIST 2016c]
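The two aspects of an adversarial threat described above, characteristics (capabilities, intent, and targeting) and behaviors organized as tactics, techniques, and procedures, map naturally onto nested records. The following is a hedged sketch: the field names follow the terms used in the text, but the structure itself and every example value (the adversary, the tactic and technique labels, the procedure text) are hypothetical, not taken from ATT&CK or any other cited source. It requires Python 3.9 or later for the built-in generic annotations.

```python
from dataclasses import dataclass, field


@dataclass
class Procedure:
    description: str  # lowest level: highly detailed description of behavior


@dataclass
class Technique:
    name: str  # detailed behavior in the context of a tactic
    procedures: list[Procedure] = field(default_factory=list)


@dataclass
class Tactic:
    name: str  # high-level description of behavior
    techniques: list[Technique] = field(default_factory=list)


@dataclass
class Intent:
    cyber_goals: list[str]      # (i) intended cyber effects, e.g., data modification
    non_cyber_goals: list[str]  # (ii) e.g., financial gain
    risk_tradeoffs: str         # (iii) e.g., how much exposure the adversary accepts


@dataclass
class Adversary:
    name: str
    capabilities: dict[str, str]  # e.g., methods, resources, relationships
    intent: Intent
    targeting: list[str]
    behaviors: list[Tactic] = field(default_factory=list)

    def procedures(self):
        """Flatten the TTP hierarchy into (tactic, technique, procedure) triples."""
        return [
            (tactic.name, tech.name, proc.description)
            for tactic in self.behaviors
            for tech in tactic.techniques
            for proc in tech.procedures
        ]


# Hypothetical adversary profile.
fraudster = Adversary(
    name="hypothetical financially motivated group",
    capabilities={"methods": "commodity malware", "resources": "moderate",
                  "relationships": "criminal forums"},
    intent=Intent(["data modification"], ["financial gain"], "prefers stealth over speed"),
    targeting=["payment processing staff"],
    behaviors=[Tactic("initial access",
                      [Technique("spearphishing",
                                 [Procedure("malicious invoice attachment")])])],
)
print(fraudster.procedures())
```

Keeping characteristics and behaviors in separate fields mirrors the distinction drawn in the text: characteristics inform risk framing, while the flattened TTP triples are the level at which threat events are typically shared and detected.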

Both the FFIEC IS Handbook and the NIST SP 800-30R1 definitions specifically enumerate the

types of consequences that a (cyber) threat could cause, in terms of effects on information and

information systems. These cyber effects, whether expressed as loss of confidentiality, integrity,

or availability, or expressed using a more nuanced vocabulary [Temin 2010], can be translated

into effects on: the organization; its customers, partners, or suppliers; its sector; mission or

business functions within the organization or across the sector; or the Nation.
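Both definitions list the means by which a threat harms information (disclosure, modification or alteration, destruction, denial of service, and so on). As a rough illustration of how such terms might be normalized to the confidentiality, integrity, and availability triad before being translated into organizational effects, the sketch below uses a lookup table of `Flag` values. The mapping is an assumption made for illustration (for example, treating destruction as affecting both integrity and availability); it is not specified by FFIEC or NIST, and the more nuanced vocabulary of [Temin 2010] is not modeled.

```python
from enum import Flag, auto


class SecurityProperty(Flag):
    CONFIDENTIALITY = auto()
    INTEGRITY = auto()
    AVAILABILITY = auto()


SP = SecurityProperty

# Assumed, illustrative mapping from harm terms in the FFIEC and NIST definitions.
HARM_TO_PROPERTIES = {
    "unauthorized disclosure": SP.CONFIDENTIALITY,
    "unauthorized access": SP.CONFIDENTIALITY,
    "alteration": SP.INTEGRITY,
    "modification": SP.INTEGRITY,
    "destruction": SP.INTEGRITY | SP.AVAILABILITY,
    "denial of service": SP.AVAILABILITY,
}


def properties_affected(harms):
    """Combine (bitwise OR) the security properties affected by a set of harm terms."""
    result = SP(0)
    for harm in harms:
        result |= HARM_TO_PROPERTIES[harm]
    return result


effect = properties_affected(["unauthorized disclosure", "destruction"])
print(SP.AVAILABILITY in effect)  # True
```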

The behaviors or actions of an adversarial threat actor can be characterized in terms of the threat

vector (or attack vector) they use:

Attack vectors or avenues of attack are general approaches to achieving cyber effects, and

can include cyber, physical or kinetic, social engineering, and supply chain attacks.

[Bodeau 2014]

Attack vectors are strongly influenced by the underlying model or set of assumptions about the

technical and operational environment in which an attack occurs. Thus, attack vectors are often

enumerated in the context of incident handling [NIST 2012b] or vulnerability remediation

[FIRST 2015].

Adversary behaviors can be organized, using a cyber attack lifecycle or cyber kill chain model,

into a threat scenario or attack scenario. Numerous variants of these models have been

developed. Examples include the Lockheed Martin cyber kill chain [Cloppert 2009] and the

structure of a threat campaign given in NIST SP 800-30R1. See Section 2.1.5 for a discussion

of cyber attack lifecycle models. Threat scenarios can be represented graphically (as attack

graphs) or using a tree structure (as attack trees), as well as verbally (e.g., in an exercise).
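An attack tree, one of the representations mentioned above, decomposes an adversary goal into sub-goals joined by AND nodes (all children required) or OR nodes (any child suffices). A minimal sketch follows, assuming each leaf is simply marked feasible or infeasible for a given adversary; the goal names and feasibility values are hypothetical. Practical analyses typically annotate leaves with cost, likelihood, or required capability rather than a single Boolean.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    goal: str
    kind: str = "LEAF"      # "AND" (all children needed), "OR" (any child suffices), or "LEAF"
    feasible: bool = False  # meaningful only for leaves: can this adversary perform the step?
    children: list["Node"] = field(default_factory=list)

    def achievable(self) -> bool:
        """Recursively decide whether the adversary can reach this (sub-)goal."""
        if self.kind == "LEAF":
            return self.feasible
        results = [child.achievable() for child in self.children]
        if self.kind == "AND":
            return all(results)
        if self.kind == "OR":
            return any(results)
        raise ValueError(f"unknown node kind: {self.kind}")


# Hypothetical tree for the goal "transfer funds fraudulently".
tree = Node("transfer funds fraudulently", "AND", children=[
    Node("obtain operator credentials", "OR", children=[
        Node("phish an operator", feasible=True),
        Node("guess a weak password", feasible=False),
    ]),
    Node("bypass transaction approval", "OR", children=[
        Node("abuse a dual-control gap", feasible=False),
        Node("compromise an approver", feasible=False),
    ]),
])

print(tree.achievable())  # False: no feasible way to bypass approval
```

Flipping a single leaf (for example, marking the dual-control gap as feasible) and re-evaluating is one simple way to see which defenses the adversary's success hinges on.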

1.2 Uses of Threat Modeling

As noted, cyber threat modeling can serve any of a variety of purposes. Three of these purposes

were identified as particularly relevant to the NGCI Apex Program: input into the definition and

evaluation of risk metrics, which support risk management; construction of threat scenarios to be

used in cyber wargaming; and technology foraging. These are discussed below.

1.2.1 Risk Management and Risk Metrics

Risk management can be described as consisting of four component processes: risk framing, risk

assessment, risk response, and risk monitoring [NIST 2011]. Risk framing involves stating

assumptions about the environment in which risk will be managed and defining a risk

management strategy (e.g., how alternative risk mitigations will be prioritized – what belongs in

a portfolio of cybersecurity solutions). Assumptions about threat sources (particularly adversary

characteristics) are central to risk framing, while characteristics or taxonomies of threat events

can be used in cybersecurity portfolio management. Risk assessment brings together all aspects

of the threat model with an environmental model (i.e., a representation of the operational and

technical environment in which threats could occur), so that the likelihood and consequence

severity of threat scenarios or individual threat events can be estimated or evaluated. Risk

response involves evaluation of potential alternative risk mitigations (ways to reduce likelihood

and/or severity of consequences), and thus focuses on the threat event and threat scenario

portions of a threat model. Risk monitoring involves searching for indications of change in the environment, particularly indicators of adversary activity within systems undergoing continuous monitoring and security assessment. The portion of risk monitoring focused on systems emphasizes threat events and relies on threat information sharing, while higher-level intelligence analysis looks for changes in adversary characteristics and feeds back into risk framing and risk assessment.

Cybersecurity risk can be managed at varying scopes. Within an organization, risk can be

managed at the organizational tier (or executive level), the mission/business function tier (or

business/process level), and the information system tier (or implementation/operations level)

[NIST 2011]. Beyond these, two additional levels can be identified: the sector, region, or

community-of-interest (COI) tier, and the national or transnational level [Bodeau 2014]. These

are illustrated in Figure 2.


Figure 2. Scope of Risk Management Decisions to Be Supported by Threat Model

A threat model can be oriented toward one or more of these levels:

• At the system implementation or operations level, a threat model – or threat intelligence

structured by a threat model – can motivate selection of specific security controls or

courses of action. Depending on the stage in the SDLC, a threat model can inform design

decisions or security operations.

• At the mission or business function level, a threat model can motivate elements of the

enterprise architecture, the organization’s information security architecture, and specific

mission or business function architectures.

• At the organizational level, a threat model reflects and expresses the organization’s

assumptions about its threat environment; these are an integral part of the organization’s

risk frame [NIST 2011]. Note that risk management at the organizational level can consider

not only a given organization’s cyber resources, but also those resources it obtains from

service providers (e.g., network telecommunications, cloud services, managed security

services). For example, an organization’s service level agreement (SLA) with a cloud

provider can be based on a shared or an organization-defined threat model.

• At levels above the enterprise, a threat model can provide a common structure for threat

intelligence information sharing and can support the development of multi-participant

exercises or cyber wargames.

The Procurement Requirements appendix of the Cyber Insurance Buying Guide [FSSCC 2016] identifies a risk assessment deliverable, which includes a threat model, as a standard requirement levied on suppliers of network-connectable software, systems, or devices.

Threat modeling for risk assessment can be approached from three directions: by first modeling

the threat, generally or specifically, and then applying it to a relevant environment; by first

modeling the systems, data, and boundaries in the environment and then determining what


threats are relevant; or by first identifying the organizational assets that could be affected by

threats, characterizing the threats that could affect or target those assets, and situating the assets

in terms of systems [Potteiger 2016] [NIST 2016]. These three approaches are illustrated in

Figure 3. Note that while each approach focuses on a different aspect of risk as a starting point,

assumptions about the other aspects are used implicitly to determine the scope of the primary

aspect.

Figure 3. Threat Modeling Approaches

Using any of these threat modeling approaches, risk is estimated by assessing identified threat

events or scenarios, in the context of relevant vulnerabilities and environmental assumptions, as

to likelihood of occurrence and severity of impact. The resulting measure reflects inherent risk minus the mitigation provided by implemented controls, and thus constitutes a measure of residual risk. This process may iterate as additional controls are identified and

implemented, and as evolving threat capabilities are identified and reported.
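A minimal numerical sketch of this estimate follows. It assumes a semi-quantitative convention that this document does not prescribe: likelihood as a probability in [0, 1], impact severity on a 0 to 10 scale, inherent risk as their product, and each implemented control removing a hypothetical fraction (its effectiveness) of the risk that remains. Assessments following NIST SP 800-30R1 may instead use qualitative or semi-quantitative scales.

```python
from typing import Iterable


def inherent_risk(likelihood: float, impact: float) -> float:
    """Inherent risk as likelihood (0-1) times impact severity (0-10)."""
    if not 0.0 <= likelihood <= 1.0:
        raise ValueError("likelihood must be in [0, 1]")
    if not 0.0 <= impact <= 10.0:
        raise ValueError("impact must be in [0, 10]")
    return likelihood * impact


def residual_risk(inherent: float, control_effectiveness: Iterable[float]) -> float:
    """Subtract the mitigation provided by each implemented control.

    Each effectiveness value (0-1) is the assumed fraction of the remaining
    risk that the control mitigates; controls are treated as independent.
    """
    risk = inherent
    for eff in control_effectiveness:
        if not 0.0 <= eff <= 1.0:
            raise ValueError("effectiveness must be in [0, 1]")
        mitigation = risk * eff
        risk -= mitigation
    return risk


# Hypothetical threat event: likelihood 0.5, impact 8, so inherent risk 4.0.
base = inherent_risk(0.5, 8.0)
# Two hypothetical controls mitigating 50% and 25% of the remaining risk.
print(residual_risk(base, [0.5, 0.25]))  # 1.5
```

Re-running the calculation as controls are added, or as likelihood estimates change with newly reported threat capabilities, corresponds to the iteration described above.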

Measuring risk levels and identifying operational processes that support ongoing mitigation of

cyber threats should result in a reporting capability for significant risk-based metrics.

Development of metrics is outside the scope of this document, but risk metrics are critical to

providing executive managers with oversight capabilities to establish a cyber program baseline to

manage acceptable residual risk to the institution.

1.2.2 Cyber Wargaming

Cyber wargaming is a method of exercising and examining, in a modeled environment, human

performance and decision-making or system characteristics and outcomes in the context of a

cyber attack scenario. Examples include tabletop exercises, red-team exercises, and hybrid

combinations of tabletop and red-team exercises. Red-team and hybrid exercises can simulate

attack and defense activities on an operational system, on a cyber range, in a testbed, or in a

laboratory. Modeling and simulation (M&S) can be used to develop scenarios for a cyber

wargame, or can support hybrid exercises and simulation experiments (SIMEX, [MITRE 2009]).


Cyber threat modeling supports cyber wargaming by creating an adversary profile which is enacted by the red team (or represented in the script for a tabletop exercise, or as a set of parameters in M&S), by identifying plausible threat events, and by developing threat scenarios.

1.2.3 Technology Profiling and Technology Foraging

Cybersecurity controls, technologies, and practices serve to mitigate risks. Any organization is resource-constrained, and thus cannot implement all possible risk mitigations. Potential risk

mitigations can be characterized in terms of the threats (typically, the types of threat events) they

address, as well as using other structuring frameworks. Threat models, consisting of verbal

summaries of adversary characteristics and typical behaviors, are commonplace in published

descriptions of research and development (R&D) efforts. A more detailed threat model,

consisting of a list of threat events, is included in the Security Problem Definition section of a

Protection Profile or of the Security Target for a product to be evaluated against the Common

Criteria (CC) under the National Information Assurance Partnership (NIAP).

3

To support technology foraging, categorizations such as matrix approaches can be used. By

placing risk mitigations and threat events in the cells of such matrices, analysts can develop

testable hypotheses about the effects of the mitigations. One structuring framework is derived in

part from the NIST Cybersecurity Framework (CSF, [NIST 2014]), described in more detail in

Section 2.1.1. In the Cyber Defense Matrix used by the Cyber Apex Review Team (CART) and

illustrated in Figure 4, technologies and products are mapped to the CSF functions they perform (columns, one for each of the five functions defined by the CSF) and to the classes of assets for which they perform those functions (rows). The Network-Detect cell, for instance, is at the intersection of the Detect function and

the Network asset class. The CART’s Cyber Defense Matrix has been elaborated, in some

contexts, with additional cells for areas not directly captured by the function-asset mapping. In

particular, cells are sometimes included for Analytics and Visualization and for Orchestration

and Automation. The CART has identified four cells as being of particular interest to the FSS:

Network-Identify, Network-Detect, Data-Protect, and Data-Detect.
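The matrix can be held as a mapping from (asset class, function) cells to the technologies placed in them, which makes coverage gaps easy to query. This sketch uses the five CSF functions as columns and assumes five asset classes as rows (Device, Application, Network, Data, User, as in the commonly published version of the Cyber Defense Matrix); the rows in Figure 4 may differ. The technology names are hypothetical placeholders, not products assessed by the CART.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

# The five NIST CSF functions (columns).
FUNCTIONS = ["Identify", "Protect", "Detect", "Respond", "Recover"]
# Assumed asset classes (rows); Figure 4 may label these differently.
ASSETS = ["Device", "Application", "Network", "Data", "User"]

Cell = Tuple[str, str]  # (asset class, function), e.g. ("Network", "Detect")


def build_matrix(placements: Dict[str, List[Cell]]) -> Dict[Cell, Set[str]]:
    """Invert technology -> cells into cell -> technologies."""
    matrix: Dict[Cell, Set[str]] = defaultdict(set)
    for tech, cells in placements.items():
        for asset, function in cells:
            if asset not in ASSETS or function not in FUNCTIONS:
                raise ValueError(f"unknown cell {asset}-{function}")
            matrix[(asset, function)].add(tech)
    return matrix


def gaps(matrix: Dict[Cell, Set[str]], cells: List[Cell]) -> List[str]:
    """Return the names of the given cells that no technology covers."""
    return [f"{a}-{f}" for a, f in cells if not matrix.get((a, f))]


# The four cells the CART identified as of particular interest to the FSS.
CELLS_OF_INTEREST = [("Network", "Identify"), ("Network", "Detect"),
                     ("Data", "Protect"), ("Data", "Detect")]

# Hypothetical placements of two placeholder technologies.
matrix = build_matrix({
    "network sensor (hypothetical)": [("Network", "Detect")],
    "encryption tool (hypothetical)": [("Data", "Protect")],
})
print(gaps(matrix, CELLS_OF_INTEREST))  # ['Network-Identify', 'Data-Detect']
```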

3

See https://www.niap-ccevs.org/.


Figure 4. The Cyber Defense Matrix

Since threats can be characterized in terms of the types of assets they affect, the cells in the

Cyber Defense Matrix can be viewed as characterizing the types of effects a given risk

mitigation could have on a threat.

Two related matrices are those provided by the U.S. Cyber Consequences Unit (US-CCU) [Borg

2016]. One matrix characterizes vulnerabilities (and can be used to characterize specific

adversary activities) in terms of an adversary’s attack action (columns) and the type of assets in

which the vulnerability is exploited (rows). Adversary attack actions include find, penetrate, co-opt, conceal, and make irreversible. (Note that this categorization of attack actions is, in effect, a cyber attack lifecycle.) Asset types include hardware, software, networks, automation, users, and

suppliers. The second matrix characterizes risk mitigations in terms of their effects on adversary

goals (e.g., harder to find, harder to penetrate) for each type of asset.

Another matrix approach characterizes risk mitigations in terms of the phases of the Lockheed Martin cyber kill chain (rows) and Department of Defense (DoD) effects on a military adversary

(columns). Those effects include detect, deny, disrupt, degrade, deceive, and destroy [Cloppert

2009, Bedell 2016]. By contrast, the Community Attack Model developed by the Center for

Internet Security (CIS) uses a matrix in which the rows correspond to the CSF functions, but the

columns correspond to attack stages in a nine-stage cyber attack lifecycle [CIS 2016]; the CIS

Critical Security Controls are mapped to cells in that matrix.
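The kill chain by effects matrix can be sketched the same way. Rows are assumed to be the seven phases of the Lockheed Martin kill chain as commonly published (Reconnaissance, Weaponization, Delivery, Exploitation, Installation, Command and Control, Actions on Objectives), columns are the six DoD effects listed above, and each cell holds mitigations. The mitigations placed below are hypothetical, included only to show how the matrix exposes phases with no planned course of action.

```python
from typing import Dict, List, Tuple

# Assumed phase names (commonly published Lockheed Martin kill chain).
KILL_CHAIN = ["Reconnaissance", "Weaponization", "Delivery", "Exploitation",
              "Installation", "Command and Control", "Actions on Objectives"]
# DoD effects on an adversary, as listed in the text.
EFFECTS = ["Detect", "Deny", "Disrupt", "Degrade", "Deceive", "Destroy"]

Matrix = Dict[str, Dict[str, List[str]]]


def coa_matrix(entries: List[Tuple[str, str, str]]) -> Matrix:
    """Build a phase x effect matrix from (phase, effect, mitigation) triples."""
    matrix = {phase: {effect: [] for effect in EFFECTS} for phase in KILL_CHAIN}
    for phase, effect, mitigation in entries:
        # A KeyError here flags a misspelled phase or effect.
        matrix[phase][effect].append(mitigation)
    return matrix


def uncovered_phases(matrix: Matrix) -> List[str]:
    """Phases, in kill chain order, for which no mitigation has any effect."""
    return [phase for phase in KILL_CHAIN
            if not any(matrix[phase][effect] for effect in EFFECTS)]


# Hypothetical mitigations.
m = coa_matrix([
    ("Delivery", "Deny", "email attachment filtering"),
    ("Delivery", "Detect", "network intrusion detection"),
    ("Command and Control", "Disrupt", "egress firewall rules"),
    ("Actions on Objectives", "Deceive", "decoy documents"),
])
print(uncovered_phases(m))
# ['Reconnaissance', 'Weaponization', 'Exploitation', 'Installation']
```

The same structure accommodates the CIS Community Attack Model by swapping the axes: CSF functions as rows and the nine attack stages as columns.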

1.3 Survey and Assessment Approach

The set of frameworks and models described in Sections 2 and 3 was identified by subject matter

experts (SMEs) within MITRE, the NGCI Apex program, and the CART. A few threat modeling

approaches specific to DoD or other military organizations were identified by SMEs as

potentially relevant to the FSS and used as inputs to the survey. The assessment was driven by

the scope of desired uses for a cyber threat model identified by the NGCI Apex program – risk

management, cyber wargaming within an organization and across a sector or sub-sector,

technology foraging, and technology evaluation.


The survey and assessment focused on cyber threats targeting or exploiting enterprise IT (EIT), since the FSS depends heavily on it. However, other critical infrastructure sectors depend heavily on operational technology (OT). Even organizations in the FSS depend, or will increasingly depend, on cyber-physical systems (CPS), such as automated teller machines (ATMs), and on OT. For example, convergence between EIT and building access and control systems (BACS) can increase efficiency and decrease operating costs. Cyber threat modeling for CPS, OT, and the Internet of Things (IoT) is an area of future work.


2 Threat Modeling Frameworks and Methodologies

This section summarizes a number of threat modeling frameworks and methodologies. Some

approaches to threat modeling are implicitly or explicitly included in risk management

approaches; these are presented in Section 2.1. Other approaches are intended to be integrated

into system design processes; these are discussed in Section 2.2. Finally, some threat modeling

frameworks are intended to support or leverage threat information sharing; these are presented in

Section 2.3. The frameworks and methodologies described in this section are either not

populated with threat events, or include only a representative starting set of threat events.

Populated threat models are described in Section 3.

2.1 Frameworks for Cyber Risk Management

Several frameworks for cyber risk management – management of risks due to dependence on cyber resources, given that cyberspace is contested or includes bad actors – assume an underlying threat model or threat modeling framework. In particular:

• Threat modeling is implicit in the NIST Framework for Improving Critical Infrastructure

Cybersecurity (see Section 2.1.1).

• Threat modeling is explicit in NIST SP 800-30R1, and is integral to the view of risk

management developed by the DoD’s Joint Task Force (JTF) Transformation Initiative

(described in Section 2.1.2) and represented by multiple NIST Special Publications (SPs).

• Threat modeling is integral to the assessment process in the Bank of England’s CBEST

framework (discussed in Section 2.1.3).

4

Further, many cyber threat modeling approaches have some elements in common. Cyber attack lifecycle models or cyber kill chain models inform many of them. Attack trees or attack graphs provide a structuring framework for the development of threat scenarios.

2.1.1 NIST Framework for Improving Critical Infrastructure Cybersecurity

NIST released Version 1.0 of its Framework for Improving Critical Infrastructure Cybersecurity in February 2014 [NIST 2014]. A revision was published in April 2018 [NIST 2018b]. The Cybersecurity Framework (CSF) defines a high-level approach to risk management, to complement the cybersecurity programs and risk management processes of organizations in

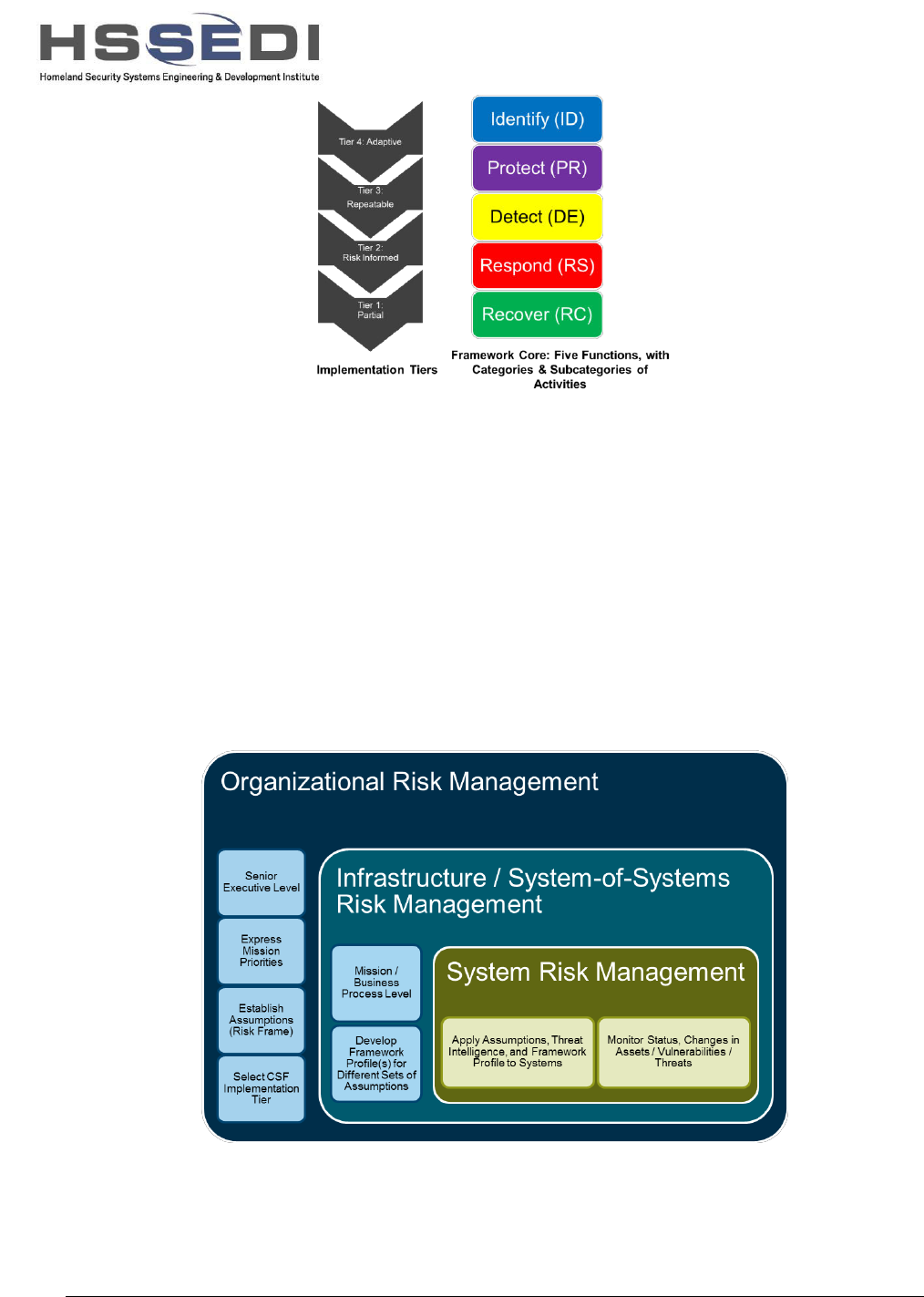

critical infrastructure sectors. As illustrated in Figure 5, two major components of the CSF are the four Implementation Tiers and the Framework Core.

4

CBEST is not an acronym.


Figure 5. Risk Management Implementation Tiers and Functions in the NIST Cybersecurity Framework

In the CSF approach, as illustrated in Figure 6, an organization implicitly or explicitly asserts its

assumptions about the risks to which it is subject, including assumptions about the threats it

faces. Senior executives establish mission priorities and determine the organization’s

Implementation Tier. Based on this senior-level direction, mission and business process owners

develop the organization’s Framework Profile – selections and refinements of categories and

sub-categories of activities under the five functions defined in the Framework Core, aligned with

the business requirements, risk tolerance, and resources of the organization. For the

organization’s systems, risk management involves applying the organization’s assumptions,

priorities, and Framework Profile, together with (if the Implementation Tier is high enough)

threat intelligence. Risk management at the system level also involves monitoring system status

as well as changes to assets, vulnerabilities, and/or threats.

Figure 6. Risk Management Scope of Decision Making in the NIST Cybersecurity Framework

The CSF states that:


“Cybersecurity threats exploit the increased complexity and connectivity of critical infrastructure systems, placing the Nation’s security, economy, and public safety and health at risk.”

The CSF does not define cyber threat modeling terms, but uses the following terms:

cybersecurity threats, threat exposure, threat environment, evolving and sophisticated threats,

and cyber threat intelligence.

It should be noted that the three levels at which risk management is performed in the NIST

framework are consistent with the three levels of risk management defined in NIST SP 800-39,

Managing Information Security Risk [NIST 2011]: organizational, mission / business function,

and system. NIST SP 800-39 provides the organizational context for NIST SP 800-30 and the

draft NIST SP 800-154.

In addition, the Framework’s Core Functions can be used to group and review mitigations for

identified threats. Coupled with NIST SP 800-30R1 [NIST 2012] and other risk processes, it

provides a common framework that is consistent with, and can be applied using, other

publications such as Control Objectives for IT (COBIT) (Section 2.1.4) and the Federal Financial

Institutions Examination Council’s Handbook for Information Security [FFIEC 2016].

2.1.2 Publications Produced by the Joint Task Force Transformation Initiative

The DoD, the Intelligence Community (IC), and Federal agencies (represented by NIST) created the Joint Task Force Transformation Initiative to move from a compliance-oriented approach to cybersecurity to one based on risk management. Several NIST publications support

this transition, including NIST SP 800-37 [NIST 2010], NIST SP 800-39 [NIST 2011], NIST SP

800-30R1 [NIST 2012], and NIST SP 800-53R4 [NIST 2013]. The Committee on National

Security Systems (CNSS) has provided additional publications, including CNSS Instruction

(CNSSI) 1253 [CNSS 2014].

5 6

The risk management process as defined in NIST SP 800-39 consists of four activities: risk

framing, risk assessment, risk response, and risk monitoring. NIST SP 800-39 defines a risk

frame as “the set of assumptions, constraints, risk tolerances, and priorities/trade-offs that shape

an organization’s approach for managing risk.” The assumptions about threat sources and threat

events – specifically including the types of adversarial tactics, techniques, and procedures (TTPs)

to be addressed, and adversarial characteristics (e.g., capability, intent, targeting) – implicitly or

explicitly define the organization’s threat model. This threat model is further refined and

5

In the context of the JTF publications, the phrase “risk management framework” (RMF) has various interpretations. As defined in CNSSI 4009 [CNSS 2015], the RMF is “a structured approach used to oversee and manage risk for an enterprise.” This high-level and general definition encompasses risk management at all levels (organization, mission / business process, and system) in the approach to risk management defined in NIST SP 800-39. The risk management approach defined in NIST SP 800-39 uses the term “tier” – organizational tier, mission / business process tier, system tier. To avoid confusion with Implementation Tiers as defined in the CSF, this paper – like the draft Implementation Guidance for Federal Agencies [NIST 2017] – uses the term “level.”

6

However, the term RMF has been widely interpreted in other ways. Some focus on its primary purpose: as a framework designed to help authorizing officials (AO) make near real-time, risk-informed decisions. Others use the term RMF as shorthand for the various documents (e.g., NIST SP 800-53, NIST SP 800-39, NIST SP 800-37, NIST SP 800-30R1, CNSSI 1253) that support and underlie the broader RMF construct. Still others use the term narrowly to refer to the six-step process defined in NIST SP 800-37. Each of these uses is valid; it is the context that matters.


populated when risk assessments are performed, and the populated values are updated as part of

risk monitoring.

NIST SP 800-30R1 provides a representative threat model as part of an overall risk assessment

methodology. That threat model includes:

• A taxonomy of threat sources (Table D-2), with accompanying characteristics for

adversarial threats (capability, intent, and targeting) and for non-adversarial threats (range

of effects).

• A representative set of adversarial threat events (Table E-2), organized using the structure

of a cyber campaign (i.e., a cyber attack lifecycle), and a representative set of non-

adversarial threat events (Table E-3).

• A taxonomy of predisposing conditions (i.e., environmental factors which affect the

likelihood of threat events occurring or resulting in adverse consequences) (Table F-4).

Because vulnerabilities are characterized in a wide variety of ways, NIST SP 800-30R1

does not include a taxonomy of vulnerabilities.
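One way to hold these components in code is sketched below. The class and field names are hypothetical. The five-level qualitative scale (Very Low through Very High) and the four top-level threat source types (adversarial, accidental, structural, environmental) follow NIST SP 800-30R1, but the example threat source and threat event are illustrative placeholders, not entries copied from Table D-2 or Table E-2.

```python
from dataclasses import dataclass, field
from enum import Enum, IntEnum
from typing import List, Optional


class Level(IntEnum):
    """Five-level qualitative scale, ordered so levels can be compared."""
    VERY_LOW = 1
    LOW = 2
    MODERATE = 3
    HIGH = 4
    VERY_HIGH = 5


class SourceType(Enum):
    ADVERSARIAL = "adversarial"
    ACCIDENTAL = "accidental"
    STRUCTURAL = "structural"
    ENVIRONMENTAL = "environmental"


@dataclass
class ThreatSource:
    name: str
    source_type: SourceType
    # Adversarial sources: capability, intent, and targeting.
    capability: Optional[Level] = None
    intent: Optional[Level] = None
    targeting: Optional[Level] = None
    # Non-adversarial sources: range of effects.
    range_of_effects: Optional[Level] = None

    def __post_init__(self) -> None:
        adversarial = self.source_type is SourceType.ADVERSARIAL
        rated = None not in (self.capability, self.intent, self.targeting)
        if adversarial and not rated:
            raise ValueError("adversarial sources need capability, intent, targeting")
        if not adversarial and self.range_of_effects is None:
            raise ValueError("non-adversarial sources need range_of_effects")


@dataclass
class ThreatEvent:
    description: str
    source: ThreatSource
    campaign_phase: Optional[str] = None  # lifecycle phase (adversarial events)
    predisposing_conditions: List[str] = field(default_factory=list)


# Illustrative placeholders, not entries from NIST SP 800-30R1.
crime_group = ThreatSource("organized crime group", SourceType.ADVERSARIAL,
                           capability=Level.HIGH, intent=Level.MODERATE,
                           targeting=Level.HIGH)
event = ThreatEvent("deliver malware via spear phishing", crime_group,
                    campaign_phase="deliver",
                    predisposing_conditions=["internet-facing email service"])
print(event.source.capability > Level.MODERATE)  # True
```

Vulnerabilities are deliberately absent, consistent with the observation above that NIST SP 800-30R1 does not include a vulnerability taxonomy.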

NIST SP 800-30R1 does not prescribe this threat model (nor the risk model of which it is a part).

However, NIST SP 800-30R1 states:

“To facilitate reciprocity of assessment results, organization-specific risk models include,

or can be translated into, the risk factors (i.e., threat, vulnerability, impact, likelihood, and

predisposing condition) defined in the appendices.”

2.1.3 CBEST Intelligence-Led Cyber Threat Modelling

The CBEST approach to threat modeling [BOE 2016] is a subcomponent of a framework for

cyber threat intelligence-driven system assessments and testing, published by the Bank of

England in 2016. It outlines an analytical model of cyber threat actors in terms of their goals,

capabilities used to pursue these goals, and methods and patterns of operation. The model is

intended to act as a template for conducting a cyber threat assessment to define a set of realistic

and threat-informed test scenarios. The CBEST approach focuses on identification of specific

threat actors and their common attack patterns to generate actionable cyber reconnaissance.

Using as much intelligence as is available, analysts using the CBEST approach analyze each

specific threat actor’s identity and motivations more deeply than in most models, for instance,

delving into geopolitical and socio-cultural factors affecting likely behavior. The approach

characterizes the threat actor’s capability in terms of resources, skill level and sophistication,

persistence, indicators of potential access to the target system being assessed, and evidence of

risk sensitivity. It models what is known about the threat actor’s phases of operation; TTPs;

countermeasures against discovery; timing; and coordination of activity. The CBEST approach is

intended to enable analysts, given adequate cyber threat intelligence data, to derive a model of

threat actors rigorous and precise enough to be predictive of likely threat events. Though this

level of threat intelligence may often not be available, the CBEST approach seeks to generate the

most realistic threat scenarios possible given the information at hand.

The CBEST approach includes clear guidance on the expected contents of a threat scenario. Key

modeling constructs in CBEST include threat entity goal orientation (including identity,

motivation, and intention), capabilities, and modus operandi.


2.1.4 COBIT 5 and Risk IT

Control Objectives for Information and Related Technologies (COBIT) is a framework for governance of IT environments with an extensive focus on controls. COBIT Version 5 was released by the Information Systems Audit and Control Association (ISACA) in April 2012 (http://www.isaca.org/cobit). COBIT is based on components of International Organization for Standardization (ISO) standards, incorporating the ISO 38500 model for corporate governance of IT and an ISO 15504-aligned COBIT Process Capability Assessment Model. Security controls are based on the ISO 27001 series of control objectives [ISO 2013].

This includes assessment considerations aligned with operational practice, implementation

guidance, measurement, and risk management.

COBIT is accompanied by the Risk IT framework for managing business risks of IT [ISACA

2009]. Risk IT consists of a risk model together with a process model; processes are defined for

the domains of risk governance, risk evaluation, and risk response. The model underlying risk

evaluation in Risk IT is not a security risk model, but does identify security risk as a class of risk

to be considered. A risk scenario is described in terms of threat type (which includes malicious

threats), actor, type of event (i.e., type of impact), asset or resource affected, and time. ISACA

offers guidance on developing risk scenarios, including 60 examples covering 20 categories of

risk [ISACA 2014]. In addition, the scenario planning approach in Risk IT’s risk assessment

framework allows for risk consideration beyond an individual organizational or system view.
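Because a Risk IT risk scenario is described along five dimensions, candidate scenarios can be enumerated by combining values for each dimension and then pruned by an analyst. The sketch below does this with hypothetical dimension values and a hypothetical pruning rule; it is not ISACA's guidance and does not reproduce any of ISACA's example scenarios.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class RiskScenario:
    threat_type: str  # e.g., malicious or accidental
    actor: str
    event: str        # type of event, i.e., type of impact
    asset: str        # asset or resource affected
    time: str         # when the event occurs or how long it lasts


def enumerate_scenarios(threat_types: Iterable[str], actors: Iterable[str],
                        events: Iterable[str], assets: Iterable[str],
                        times: Iterable[str],
                        keep: Callable[[RiskScenario], bool] = lambda s: True
                        ) -> List[RiskScenario]:
    """Combine dimension values and keep only plausible combinations."""
    candidates = (RiskScenario(*combo) for combo in
                  product(threat_types, actors, events, assets, times))
    return [s for s in candidates if keep(s)]


# Hypothetical dimension values.
scenarios = enumerate_scenarios(
    threat_types=["malicious", "accidental"],
    actors=["external attacker", "employee"],
    events=["disclosure", "interruption"],
    assets=["customer database"],
    times=["during month-end processing"],
    # Hypothetical pruning rule: only employees cause accidental events.
    keep=lambda s: s.threat_type == "malicious" or s.actor == "employee",
)
print(len(scenarios))  # 6
```

Of the eight raw combinations, the rule discards the two accidental events attributed to an external attacker.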

2.1.5 Topic-Focused Frameworks and Methodologies

As noted in Section 1.1, adversary characteristics and behaviors are key topics in discussions of

cyber threats. Some frameworks and methodologies focus on specific topics, rather than

representing all characteristics and behaviors. Characteristic-focused frameworks include the multi-tier threat model developed by the Defense Science Board (DSB) Task Force on Resilient Military Systems, and the Cyber Prep framework. Behavior-focused frameworks and methodologies include

cyber attack lifecycle or cyber kill chain models, attack tree or attack graph modeling, and

insider threat modeling.

2.1.5.1 DSB Six-Tier Threat Hierarchy

The DSB Task Force Report on Resilient Military Systems and the Advanced Cyber Threat

[DSB 2013] defines a threat hierarchy, based primarily on potential attackers’ capabilities. In

that hierarchy, Tiers I and II exploit known vulnerabilities; Tiers III and IV discover new

vulnerabilities; and Tiers V and VI create vulnerabilities. Other differentiators include attacker

knowledge or expertise, resources, scale of operations, use of proxies, timeframe, and alignment

with or sponsorship by criminal, terrorist, or nation-state entities.

The threat hierarchy is used to motivate and structure recommendations for risk management

strategies. Risk is represented as a function of threat, vulnerability, and consequence. Threat has

the characteristics of intent and capabilities; corresponding strategies are deter and disrupt.

Vulnerability has the characteristics of inherent and introduced; corresponding strategies are

defend and detect. Consequence has the characteristics of fixable and final, with corresponding

strategies of restore and discard.
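Both DSB structures reduce to simple lookups: the tier hierarchy as a function of how an attacker relates to vulnerabilities, and the risk decomposition as a table from each characteristic to its corresponding strategy, paired in the order the text lists them. This is a sketch using only the relationships stated above; it ignores the other differentiators (knowledge, resources, scale, proxies, timeframe, sponsorship) that also distinguish tiers, so it yields a pair of candidate tiers rather than a single tier.

```python
from typing import Dict, Tuple

# Tier pairs by how the attacker relates to vulnerabilities [DSB 2013].
TIER_BANDS: Dict[str, Tuple[str, str]] = {
    "exploit known": ("I", "II"),
    "discover new": ("III", "IV"),
    "create": ("V", "VI"),
}

# Risk factor -> characteristic -> corresponding strategy (paired in order).
STRATEGIES: Dict[str, Dict[str, str]] = {
    "threat": {"intent": "deter", "capabilities": "disrupt"},
    "vulnerability": {"inherent": "defend", "introduced": "detect"},
    "consequence": {"fixable": "restore", "final": "discard"},
}


def candidate_tiers(vulnerability_behavior: str) -> Tuple[str, str]:
    """Pair of tiers consistent with the attacker's vulnerability behavior."""
    return TIER_BANDS[vulnerability_behavior]


def strategy_for(factor: str, characteristic: str) -> str:
    """Strategy the DSB associates with a risk factor's characteristic."""
    return STRATEGIES[factor][characteristic]


print(candidate_tiers("discover new"))              # ('III', 'IV')
print(strategy_for("vulnerability", "introduced"))  # detect
```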


2.1.5.2 Cyber Prep Adversary Characterization Framework

The MITRE Corporation’s Cyber Prep methodology [Bodeau 2017] uses the characteristics of an

organization’s expected cyber adversaries to motivate recommendations for preparedness against

cyber threats. Cyber Prep is specifically oriented to the organizational level of risk management.

The Cyber Prep framework defines fourteen aspects of organizational preparedness, in three

areas: Governance, Operations, and Architecture & Engineering. Different adversary

characteristics motivate different aspects of preparedness. Adversary characteristics include

goals, scope or scale of operations, timeframe of operations, persistence, concern for stealth,

stages of the cyber attack lifecycle used, cyber effects sought or produced, and capabilities. In

addition to the modeling constructs indicated in Figure 7, Cyber Prep identifies a representative

set of high-level attack scenarios. Characteristics of an organization – its missions, assets, and

role in the larger cyber ecosystem – make different scenarios more or less attractive to

adversaries with different characteristics [Sheingold 2017].

Figure 7. Cyber Prep Framework

The Cyber Prep threat modeling framework builds on the Describing and Analyzing Cyber

Strategies (DACS) framework, which can be applied at any scope or scale [Bodeau 2014].

DACS provides additional detail on capabilities, as illustrated in Figure 8. A strategy for

developing intelligence about, or having effects on, adversary capabilities could focus on one or

more of these attributes.


Figure 8. Attributes of Adversary Capabilities

2.1.5.3 Cyber Attack Lifecycle or Cyber Kill Chain Models

7

The recognition that attacks or intrusions by advanced cyber adversaries against organizations or

missions are multistage, and occur over periods of months or years, has led to the development

of multistage models which can be used to “bin” or characterize attack events. Such a multistage

model is frequently referred to as a “cyber kill chain,” adapting military terminology; the phrase

“cyber attack lifecycle” is a non-military alternative. An initial cyber kill chain model was

developed by Lockheed Martin [Cloppert 2009].

Cyber attack lifecycle models are most commonly defined for external attacks on enterprise IT

and command and control (C2) systems. NIST SP 800-30R1 and the 2013 DoD Guidelines for

Cybersecurity Developmental Test and Evaluation (DT&E) [DoD 2013] use a seven-phase cyber

attack lifecycle model, as illustrated in Figure 9.

8
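Using a lifecycle model to “bin” attack events can be sketched as follows. The phase names assume the seven-phase model commonly presented in MITRE publications (Recon, Weaponize, Deliver, Exploit, Control, Execute, Maintain) and should be checked against Figure 9. The observed events, and the phase an analyst has assigned to each, are hypothetical.

```python
from typing import Dict, List, Tuple

# Assumed seven-phase lifecycle; verify the names against Figure 9.
PHASES = ["Recon", "Weaponize", "Deliver", "Exploit",
          "Control", "Execute", "Maintain"]

Event = Tuple[int, str, str]  # (day observed, description, assigned phase)


def bin_events(events: List[Event]) -> Dict[str, List[str]]:
    """Group event descriptions by phase: phases in lifecycle order,
    events within a phase in order of observation."""
    bins: Dict[str, List[str]] = {phase: [] for phase in PHASES}
    for _, description, phase in sorted(events):
        if phase not in bins:
            raise ValueError(f"unknown phase: {phase}")
        bins[phase].append(description)
    return bins


def furthest_phase(bins: Dict[str, List[str]]) -> str:
    """Latest lifecycle phase with at least one observed event."""
    observed = [phase for phase in PHASES if bins[phase]]
    return observed[-1] if observed else "none"


# Hypothetical observations from an intrusion investigation.
events = [
    (40, "beacon to unfamiliar domain", "Control"),
    (3, "port scan of web servers", "Recon"),
    (12, "spear-phishing email with attachment", "Deliver"),
    (13, "macro executes dropper", "Exploit"),
]
bins = bin_events(events)
print(furthest_phase(bins))                             # Control
print([phase for phase, evts in bins.items() if not evts])
# ['Weaponize', 'Execute', 'Maintain']
```

An empty bin is not necessarily a detection gap: some phases, such as weaponization, typically occur outside the defender's view.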

Variant attack lifecycles are common. Most focus on exfiltration of sensitive information as the

adversary’s objective. For example, an Advanced Research and Development Activity (ARDA)

Workshop designed a version to characterize activities by insiders [Maybury 2005]:

reconnaissance, access, entrenchment, exploitation, communication, manipulation, extraction &

exfiltration, and counterintelligence. Raytheon uses a six-phase model: Footprint, Scan,

Enumerate, Gain Access, Escalate Privileges, and Pilfer.

9

Dell SecureWorks identifies 12 stages:

define target, find and organize accomplices, build or acquire tools, research target

infrastructure/employees, test for detection, deployment, initial intrusion, outbound connection

initiated, expand access and obtain credentials, strengthen foothold, exfiltrate data, and cover

tracks and remain undetected [SecureWorks 2016].

Other cyber attack lifecycles, like the one shown in Figure 9, do not specify the adversary’s

objectives, and thus enable cyber attacks that directly impact organizations and their missions

(e.g., via denial of service, via data corruption or falsification) to be represented. Microsoft

researchers have identified a set of ten “base types” of actions: reconnaissance, commencement,

7

This section is adapted and updated from [Bodeau 2013].

8

Later versions of the DoD Cybersecurity Test and Evaluation Guidebook have used different variants. The current version

[DoD 2018] identifies four phases – Prepare, Gain Access, Propagate, and Affect – with two classes of activities (Reconnaissance

and C2) applying across all four phases.

9

The white paper in which this model was first presented is no longer accessible. However, the model is included in Patent US

8516596 B2, “Cyber attack analysis,” granted August 20, 2013. See http://www.google.com/patents/US8516596.


entry, foothold, lateral movement, acquire control, acquire target, implement / execute, conceal

& maintain, and withdraw [Espenschied 2012]. Mandiant [Mandiant 2013] describes an attack

lifecycle consisting of Initial Recon; Initial Compromise; Establish a Foothold; Escalate

Privileges, Internal Recon, Move Laterally, and Maintain Presence, which can repeat cyclically;

and Complete Mission. The CIS Community Attack Model defines nine stages: Initial Recon,

Acquire / Develop Tools, Delivery, Initial Compromise, Misuse / Escalate Privilege, Internal

Recon, Lateral Movement, Establish Persistence, and Execute Mission Objectives [CIS 2016].

The National Cyber Security Centre (NCSC) defines a four-stage process: Survey, Delivery,

Breach, and Affect [NCSC 2016]; this is similar to the four stages identified in the ODNI Cyber

Threat Framework discussed in Section 2.3.4. Payments UK identifies twelve steps: Define

target, Find and organize accomplices, Build or acquire tools, Research target infrastructures /

employees, Test for detection, Deployment, Initial intrusion, Outbound connection initiated,

Expand access and obtain credentials, Strengthen foothold, Exfiltrate data, and Cover tracks and

remain undetected [Payments UK 2014].

Figure 9. Cyber Attack Lifecycle
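The Mandiant lifecycle described above differs from strictly linear lifecycles because four of its stages can repeat. The following minimal Python sketch checks whether an observed sequence of stages fits that structure. It rests on two simplifying assumptions that are not part of [Mandiant 2013]: each pass through the repeating stages visits them in the listed order, and Complete Mission follows Maintain Presence. The function names are hypothetical.

```python
# Mandiant attack lifecycle stages, in the order listed in [Mandiant 2013].
STAGES = [
    "Initial Recon",
    "Initial Compromise",
    "Establish a Foothold",
    "Escalate Privileges",
    "Internal Recon",
    "Move Laterally",
    "Maintain Presence",
    "Complete Mission",
]

# Stages that can repeat cyclically; assumed to be visited in this order each pass.
CYCLE = STAGES[3:7]


def allowed_next(stage: str) -> set[str]:
    """Stages that may follow `stage` under the simplified model."""
    if stage == CYCLE[-1]:
        # Assumption: after Maintain Presence, start another pass or finish.
        return {CYCLE[0], "Complete Mission"}
    if stage == "Complete Mission":
        return set()
    return {STAGES[STAGES.index(stage) + 1]}


def conforms(sequence: list[str]) -> bool:
    """True if a stage sequence starts at Initial Recon and follows the model."""
    if not sequence or sequence[0] != STAGES[0]:
        return False
    return all(b in allowed_next(a) for a, b in zip(sequence, sequence[1:]))


two_passes = STAGES[:3] + CYCLE + CYCLE + ["Complete Mission"]
print(conforms(two_passes))                           # True
print(conforms(["Initial Recon", "Move Laterally"]))  # False
```

Real intrusions rarely follow such a tidy pattern. A model like this is most useful as a baseline for noticing where observed activity departs from the expected lifecycle.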

The concept of an attack lifecycle or kill chain has been extended beyond threats that exploit the exposure of an organization’s systems in cyberspace. It now also covers insider threats, threats to industrial control systems and other cyber-physical systems, and threats to the supply chain. The Federal Bureau of Investigation (FBI) defined a four-stage insider threat kill chain [Reidy 2013]: recruitment / tipping point, search / recon, acquisition / collection, and exfiltration / action. This kill chain has been adopted [TripWire 2015] and extended [ZoneFox 2015] more broadly. A version for industrial control systems (ICS) has been defined [Assante 2015] with two stages, Cyber Intrusion Preparation & Execution and ICS Attack Development & Execution, each of which includes multiple phases. A version for cyber-physical systems (CPS) has been developed [Hahn 2015] that takes into consideration the three layers of a CPS (cyber, control, and physical). The stages are recon (spanning all three layers), weaponize (spanning cyber and control), deliver (cyber), cyber execution (cyber), perturb control (control), and achieve physical objective (physical). The DSB Task Force on Cyber Supply Chain [DSB 2017] has developed a four-phase kill chain: Intelligence & Planning, Design & Create, Insert, and Achieve Effect. A more detailed supply chain attack lifecycle [Shackleford 2015] represents two different attack vectors: physical and virtual.
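The layer mapping in the CPS kill chain [Hahn 2015] can be captured as a simple lookup. The sketch below is illustrative only. The helper name `stages_touching` is hypothetical. The question it answers (which stages involve a given layer, and so might be observable by monitoring that layer) is an assumed use, not one stated in the source.

```python
# CPS kill chain stages [Hahn 2015] with the CPS layers each spans, as listed in the text.
CPS_KILL_CHAIN = [
    ("recon", {"cyber", "control", "physical"}),
    ("weaponize", {"cyber", "control"}),
    ("deliver", {"cyber"}),
    ("cyber execution", {"cyber"}),
    ("perturb control", {"control"}),
    ("achieve physical objective", {"physical"}),
]


def stages_touching(layer: str) -> list[str]:
    """Stages, in kill-chain order, that involve the given layer (hypothetical helper)."""
    return [stage for stage, layers in CPS_KILL_CHAIN if layer in layers]


print(stages_touching("control"))   # ['recon', 'weaponize', 'perturb control']
print(stages_touching("physical"))  # ['recon', 'achieve physical objective']
```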

2.1.5.4 Threat Modeling Using Attack Trees or Attack Graphs

Attack trees and attack graphs are well-established approaches to developing threat scenarios for risk assessment or cyber wargaming. Surveys of models and methodologies that use directed acyclic graphs can be found in [Kordy 2014] and in Appendix B.1.2 of [Bodeau 2013]. [Beyst 2016] describes other historical variants. Beyond these, attack trees or attack graphs can be generated with products (e.g., http://threatmodeler.com) or with prototype tools from a wide variety of research efforts. The Mission Oriented Risk and Design Analysis (MORDA) methodology [Buckshaw 2005] describes the use of attack trees, with consideration of adversary preferences, as part of risk assessment. NIST SP 800-30R1 accommodates, but does not require, the use of attack trees.
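The following sketch illustrates the general attack tree technique. It does not reproduce MORDA or any particular tool. It evaluates a small AND/OR tree to find the cheapest way an attacker could reach the root goal. The tree, its labels, and its cost values are hypothetical. OR nodes take the minimum child cost and AND nodes take the sum, which assumes that costs are additive and independent.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Attack tree node: a leaf action, or a goal refined by AND/OR children."""
    label: str
    kind: str = "LEAF"   # "LEAF", "AND", or "OR"
    cost: float = 0.0    # attacker cost; meaningful only for leaves
    children: list["Node"] = field(default_factory=list)


def min_cost(node: Node) -> float:
    """Cheapest attacker cost to achieve this node's goal.

    OR: any one child suffices, so take the minimum child cost.
    AND: every child is needed, so add the child costs.
    """
    if node.kind == "LEAF":
        return node.cost
    if not node.children:
        raise ValueError(f"{node.label}: {node.kind} node has no children")
    costs = [min_cost(child) for child in node.children]
    if node.kind == "OR":
        return min(costs)
    if node.kind == "AND":
        return sum(costs)
    raise ValueError(f"unknown node kind: {node.kind}")


# Hypothetical tree and costs, for illustration only.
root = Node("Read sensitive file", "OR", children=[
    Node("Phish administrator", "AND", children=[
        Node("Send convincing lure", cost=2.0),
        Node("Bypass multi-factor authentication", cost=8.0),
    ]),
    Node("Exploit unpatched web server", cost=6.0),
    Node("Bribe an insider", cost=20.0),
])

print(min_cost(root))  # 6.0
```

Cost is only one possible attribute. Approaches that weigh adversary preferences would replace the min/sum rules with ones that reflect those preferences.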

2.1.5.5 Threat Characterization Framework Developed for DRDC

A survey of the state of the art in cyber threat modeling was performed for Defence Research and Development Canada (DRDC) [Magar 2016]. That report (like this one) includes a proposed initial cyber threat modeling framework. The framework is intended to be used to develop a Canadian Armed Forces (CAF) cyber threat model, which will be demonstrated on the DRDC ARMOUR platform. The framework identifies four key elements: adversary, attack, asset, and effect. Adversary attributes include type, motivation, commitment, and resources. Attack attributes include delivery mechanisms (local access, remote delivery, distributed delivery, or social engineering), tools, automation, and actions. Asset attributes include profile, container (hardware, software, object, or people), and vulnerability. Effect attributes include cyber effects and effects on military activities.
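The four elements of the DRDC framework and their attributes map naturally onto a record structure. The sketch below shows one possible encoding. Where the source does not enumerate an attribute’s range, it assumes free-text values. Only delivery mechanism and container use the enumerated values given above. The class names, field names, and example values are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class Delivery(Enum):
    LOCAL_ACCESS = "local access"
    REMOTE_DELIVERY = "remote delivery"
    DISTRIBUTED_DELIVERY = "distributed delivery"
    SOCIAL_ENGINEERING = "social engineering"


class Container(Enum):
    HARDWARE = "hardware"
    SOFTWARE = "software"
    OBJECT = "object"
    PEOPLE = "people"


@dataclass
class Adversary:
    adversary_type: str
    motivation: str
    commitment: str
    resources: str


@dataclass
class Attack:
    delivery: Delivery
    tools: list[str]
    automation: str
    actions: list[str]


@dataclass
class Asset:
    profile: str
    container: Container
    vulnerabilities: list[str]


@dataclass
class Effect:
    cyber_effects: list[str]
    military_activity_effects: list[str]


@dataclass
class ThreatCharacterization:
    """One threat instance described by the four key elements."""
    adversary: Adversary
    attack: Attack
    asset: Asset
    effect: Effect


# Hypothetical example values.
example = ThreatCharacterization(
    adversary=Adversary("nation-state", "espionage", "high", "extensive"),
    attack=Attack(Delivery.SOCIAL_ENGINEERING, ["spear-phishing kit"], "low", ["credential theft"]),
    asset=Asset("logistics database", Container.SOFTWARE, ["weak authentication"]),
    effect=Effect(["loss of confidentiality"], ["exposure of movement plans"]),
)
print(example.attack.delivery.value)  # social engineering
```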

2.1.5.6 Insider Threat Modeling

Insider threat modeling includes models of insider behavior intended to help identify indicators of insider activity [Costa 2016]. Computational M&S is a key analytic approach [Moore 2016]. Insider threat modeling also includes models intended to predict whether and how an insider could become malicious, and to analyze and predict the effects of organizational actions on insider behavior. Such predictive analysis and modeling emphasize psychosocial factors [Greitzer 2013]. Insider threat modeling via M&S is outside the scope of this survey.

Insider threat modeling overlaps with cyber threat modeling, insofar as insiders act in and on an organization’s cyber resources. However, the two forms of modeling are distinct in two respects. First, cyber threat modeling considers external threat sources and malicious cyber activities at all layers in a layered architecture. Insider threat modeling, by contrast, considers external threat actors only with respect to their efforts to influence or suborn insiders, and it focuses on actions that an individual user can take. Second, insider threat modeling can include purely non-cyber threat scenarios (e.g., theft of physical goods or of information in non-electronic form).

10 ARMOUR is not an acronym, but refers to the DRDC Automated Computer Network Defence program.

2.2 Threat Modeling to Support Design Analysis and Testing

Several highly structured threat modeling approaches have been developed for use in the system design and development process, to motivate and support system design decisions. These include:

- the modeling approach in the draft NIST SP 800-154;
- the Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege (STRIDE) and Damage, Reliability, Exploitability, Affected Users, and Discoverability (DREAD) approaches created by Microsoft;
- Carnegie Mellon Software Engineering Institute’s Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE) methodology; and
- structured approaches used by Intel and Lockheed Martin.

Less structured brainstorming approaches are also in use [Steiger 2016, Shull 2016], but are not discussed below.

2.2.1 Draft NIST Special Publication 800-154, Guide to Data-Centric System Threat Modeling

In 2016, NIST released a draft of a new threat modeling guidance document [NIST 2016]. The document focuses on identifying and prioritizing threats against specific types of data within systems, in order to inform and assess approaches for securing that data. The guidance may change following a review and revision period. NIST SP 800-154 (DRAFT) lays out the following approach.

System boundaries are identified, and each specific type of data to be protected is identified and

characterized as to authorized locations, movement between locations in the course of legitimate

processing, security objectives to be met for the data, and applications, services, and classes of

users authorized to access the data in ways relevant to the security objectives.
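The data characterization step can be pictured as one record per data type. In this sketch the class and method names are hypothetical. The consistency check is also an assumption: it requires every legitimate movement to start and end at an authorized location. The (data type, location) pairs the record produces are the units for which attack vectors are listed in the next step.

```python
from dataclasses import dataclass


@dataclass
class DataTypeProfile:
    """Characterization of one data type to be protected (names are hypothetical)."""
    name: str
    authorized_locations: set[str]
    legitimate_movements: set[tuple[str, str]]  # (from location, to location)
    security_objectives: set[str]               # e.g., {"confidentiality", "integrity"}
    authorized_accessors: set[str]              # applications, services, user classes

    def __post_init__(self) -> None:
        # Assumed consistency check: movements stay within authorized locations.
        for src, dst in self.legitimate_movements:
            if not {src, dst} <= self.authorized_locations:
                raise ValueError(f"{self.name}: movement {src} -> {dst} leaves authorized locations")

    def analysis_points(self) -> list[tuple[str, str]]:
        """(data type, location) pairs for which attack vectors are listed next."""
        return [(self.name, loc) for loc in sorted(self.authorized_locations)]


records = DataTypeProfile(
    name="patient records",
    authorized_locations={"database server", "clinician laptop"},
    legitimate_movements={("database server", "clinician laptop")},
    security_objectives={"confidentiality", "integrity"},
    authorized_accessors={"records application", "clinicians"},
)
print(records.analysis_points())
# [('patient records', 'clinician laptop'), ('patient records', 'database server')]
```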

For each data type and location, a list of applicable attack vectors is then developed. Alterations

of security controls to improve the protection of the data are identified and their effectiveness

estimated against each of the attack vectors. Costs of security controls, in terms of resources or

effects on functionality, usability, and performance, are also characterized.

The attack vectors for the data types and countermeasures, as characterized, make up the threat

model, which is then analyzed as a whole to determine what set of countermeasures can best

reduce risk across the data types and attack vectors. Some scoring methods for analysis are

suggested but are not specified in detail.
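Because the draft suggests scoring methods without specifying them in detail, any concrete analysis involves assumptions. The sketch below makes four of them: each attack vector has a numeric baseline risk; each control has an effectiveness between 0 and 1 against each vector; combined controls reduce risk independently (multiplicatively); and total control cost is bounded by a budget. Under those assumptions, it searches exhaustively for the control set with the lowest residual risk. All data values are hypothetical, and exhaustive search is practical only for a small number of candidate controls.

```python
from itertools import combinations
from math import prod

# Hypothetical baseline risk per (data type, location, attack vector).
baseline_risk = {
    ("records", "laptop", "device theft"): 8.0,
    ("records", "laptop", "malware"): 6.0,
    ("records", "server", "SQL injection"): 9.0,
}

# Hypothetical controls: (cost, {attack vector: effectiveness from 0 to 1}).
controls = {
    "full-disk encryption": (3.0, {("records", "laptop", "device theft"): 0.9}),
    "endpoint protection": (2.0, {("records", "laptop", "malware"): 0.6}),
    "input validation": (4.0, {("records", "server", "SQL injection"): 0.8}),
    "no local copies": (5.0, {("records", "laptop", "device theft"): 1.0,
                              ("records", "laptop", "malware"): 1.0}),
}


def residual_risk(selected: tuple[str, ...]) -> float:
    """Total risk left after the selected controls, assuming independent reductions."""
    total = 0.0
    for vector, risk in baseline_risk.items():
        remaining = prod(1.0 - controls[c][1].get(vector, 0.0) for c in selected)
        total += risk * remaining
    return total


def best_selection(budget: float) -> tuple[tuple[str, ...], float]:
    """Exhaustively find the affordable control set with the lowest residual risk."""
    best: tuple[tuple[str, ...], float] = ((), residual_risk(()))
    names = list(controls)
    for k in range(1, len(names) + 1):
        for combo in combinations(names, k):
            if sum(controls[c][0] for c in combo) <= budget:
                risk = residual_risk(combo)
                if risk < best[1]:
                    best = (combo, risk)
    return best


chosen, risk = best_selection(budget=9.0)
print(chosen, round(risk, 2))  # ('input validation', 'no local copies') 1.8
```

In practice, the baseline risks and effectiveness estimates would come from the characterization of data types and attack vectors. The treatment of costs that affect functionality, usability, or performance is also left to the analyst.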

2.2.2 STRIDE

Spoofing, Tampering, Repudiation, Information Disclosure, Denial of Service, Elevation of Privilege (STRIDE) was developed for internal use at Microsoft [Kohnfelder 1999], as part of its push to produce more secure software. Consequently, it takes a software development perspective on threat. It has since been widely used within the community and embedded into a loose threat modeling methodology [Shostack 2014].

While STRIDE is sometimes referred to as a threat model or threat modeling framework, it serves primarily as a categorization of general types of threat vectors to be considered. It helps analysts develop a complete threat model, for example using attack tree analysis [Xin 2014]. STRIDE does not directly address level of detail or specific attack methods. It can be applied to software components, enterprise architectures, or particular assets to be protected.
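One way to use STRIDE as a categorization is to pair each category with the security property it threatens, as STRIDE literature commonly does. An analyst can then select the categories relevant to the properties an asset needs. The sketch below encodes that pairing. The function name `categories_for` is hypothetical.

```python
from enum import Enum


class Stride(Enum):
    """STRIDE categories, each valued by the security property it threatens."""
    SPOOFING = "authentication"
    TAMPERING = "integrity"
    REPUDIATION = "non-repudiation"
    INFORMATION_DISCLOSURE = "confidentiality"
    DENIAL_OF_SERVICE = "availability"
    ELEVATION_OF_PRIVILEGE = "authorization"


def categories_for(required_properties: set[str]) -> list[Stride]:
    """STRIDE categories that threaten any of the properties an asset requires."""
    return [category for category in Stride if category.value in required_properties]


# Example: a hypothetical asset that must stay confidential and unmodified.
print([c.name for c in categories_for({"confidentiality", "integrity"})])
# ['TAMPERING', 'INFORMATION_DISCLOSURE']
```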

Threat modeling using STRIDE begins by answering the question “what are you building?” in terms of components and trust boundaries. These are used to identify interactions that cross trust boundaries and therefore may pose opportunities for adversaries. Potential adversaries and their objectives are postulated. The attack vector categories of the STRIDE mnemonic are then applied to specific interfaces, functions, data objects, and software techniques that are part of the system or component being protected. Based on the findings, an analyst or software developer might identify bugs that need to be fixed. Alternatively, they might conclude that an attack vector needs to be mitigated in some other way, for example by adding a separate security component or product, changing a policy, or eliminating a feature.
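The boundary-focused steps above can be sketched as a worklist generator. The sketch assumes that a data flow diagram is given as element kinds, trust zones, and directed flows. It also uses the commonly cited STRIDE-per-element mapping, which lists the categories typically examined for external entities, processes, data stores, and data flows. That mapping and all names here are assumptions for illustration. Postulating adversaries and judging each worklist entry remain tasks for the analyst.

```python
# STRIDE-per-element: categories typically examined for each kind of data flow
# diagram element. This commonly cited mapping is an assumption here; some
# versions examine repudiation for a data store only if it holds logs.
PER_ELEMENT = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TRID",
    "data_flow": "TID",
}


def crossing_flows(flows, zone_of):
    """Flows whose source and destination lie in different trust zones."""
    return [(src, dst) for src, dst in flows if zone_of[src] != zone_of[dst]]


def stride_worklist(flows, zone_of, kind_of):
    """(element, STRIDE letter) pairs to examine, in order and without duplicates.

    Only trust-boundary-crossing flows and the elements at their ends are included.
    """
    worklist = {}  # a dict preserves insertion order and drops duplicate keys
    for src, dst in crossing_flows(flows, zone_of):
        targets = [(f"{src} -> {dst}", "data_flow"), (src, kind_of[src]), (dst, kind_of[dst])]
        for name, kind in targets:
            for letter in PER_ELEMENT[kind]:
                worklist[(name, letter)] = None
    return list(worklist)


# Hypothetical web application.
zone_of = {"browser": "internet", "web app": "dmz", "logger": "dmz", "database": "internal"}
kind_of = {"browser": "external_entity", "web app": "process",
           "logger": "process", "database": "data_store"}
flows = [("browser", "web app"), ("web app", "logger"), ("web app", "database")]

items = stride_worklist(flows, zone_of, kind_of)
print(len(items))  # 18 (the web app -> logger flow stays inside one zone)
```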

2.2.3 DREAD

DREAD was also created at Microsoft, for use in its software development process to improve the security of its products [Howard 2003]. The acronym stands for Damage, Reliability (of an

attack – sometimes rendered as reproducibility), Exploitability, Affected Users, and