Written Statement

Jonathan Turley

Shapiro Professor of Public Interest Law

The George Washington University Law School

“Censorship Laundering:

How the U.S. Department of Homeland Security Enables the Silencing of Dissent”

Subcommittee on Oversight, Investigation, and Accountability

Committee on Homeland Security

United States House of Representatives

May 11, 2023

I. INTRODUCTION

Chairman Bishop, Ranking Member Ivey, members of the Subcommittee, my

name is Jonathan Turley, and I am a law professor at George Washington University,

where I hold the J.B. and Maurice C. Shapiro Chair of Public Interest Law.

1

It is an honor

to appear before you today to discuss free speech and government censorship.

For the purposes of background, I come to this subject as someone who has

written,

2

litigated,

3

and testified

4

in the areas of congressional oversight and the First

1

I appear today on my own behalf, and my views do not reflect those of my law school or the

media organizations that feature my legal analysis.

2

In addition to a blog with a focus on First Amendment issues (www.jonathanturley.org), I have

written on First Amendment issues as an academic for decades. See, e.g., Jonathan Turley, THE

INDISPENSABLE RIGHT: FREE SPEECH IN THE AGE OF RAGE (forthcoming 2024); Jonathan

Turley, The Unfinished Masterpiece: Speech Compulsion and the Evolving Jurisprudence of Religious

Speech 82 MD L. REV. (forthcoming 2023); Jonathan Turley, Rage Rhetoric and the Revival of American

Sedition, 65 William & Mary Law Review (forthcoming 2023), Jonathan Turley, The Right to Rage in

American Political Discourse, GEO. J.L. & PUB. POL’Y (forthcoming 2023); Jonathan Turley, Harm and

Hegemony: The Decline of Free Speech in the United States, 45 HARV. J.L. & PUB. POL’Y 571 (2022);

Jonathan Turley, The Loadstone Rock: The Role of Harm in The Criminalization of Plural Unions, 64

EMORY L.J. 1905 (2015); Jonathan Turley, Registering Publicus: The Supreme Court and Right to

Anonymity, 2002 SUP. CT. REV. 57-83.

3

See, e.g., Eugene Volokh, The Sister Wives Case and the Criminal Prosecution of Polygamy,

WASH. POST, Aug. 28, 2015 (discussing challenge on religious, speech, and associational rights); Jonathan

Turley, Thanks to the Sister Wives Litigation, We Have One Less Morality Law, WASH. POST, Dec. 12,

2013.

4

See, e.g., “Hearing on the Weaponization of the Federal Government,” United States House of

Representatives, House Judiciary Committee, Select Subcommittee on the Weaponization of the Federal

Government, February 9, 2023 (statement of Jonathan Turley); Examining the ‘Metastasizing’ Domestic

Terrorism Threat After the Buffalo Attack: Hearing Before the S. Comm. on the Judiciary, 117th Cong.

(2022) (statement of Jonathan Turley); Secrecy Orders and Prosecuting Leaks: Potential Legislative

Responses to Deter Prosecutorial Abuse of Power: Hearing Before H. Comm. on the Judiciary, 117th

Cong. (2021) (statement of Jonathan Turley); Fanning the Flames: Disinformation and Extremism in the

Media: Hearing Before the Subcomm. on Commc’n & Tech. of the H. Comm. on Energy & Com., 117th

Cong. (2021) (statement of Jonathan Turley); The Right of The People Peacefully to Assemble: Protecting

Amendment for decades. I have also represented the United States House of

Representatives in litigation.

5

My testimony today obviously reflects that past work and I

hope to offer a fair understanding of the governing constitutional provisions, case law,

and standards that bear on this question.

As I recently testified before the House Judiciary Committee, the growing

evidence of censorship and blacklisting efforts by the government raises serious and

troubling questions over our protection of free speech.

6

There are legitimate

disagreements on how Congress should address the role of the government in such

censorship. The first step, however, is to fully understand the role played in prior years

and to address the deep-seated doubts of many Americans concerning the actions of the

government to stifle or sanction speech.

The Twitter Files and other recent disclosures raise serious questions of whether

the United States government is now a partner in what may be the largest censorship

system in our history. That involvement cuts across the Executive Branch, with

confirmed coordination with agencies ranging from Homeland Security to the State

Department to the Federal Bureau of Investigation (FBI). Even based on our limited

knowledge, the size of this censorship system is breathtaking, and we only know of a

fraction of its operations through the Twitter Files, congressional hearings, and pending

litigation. Most of the information has come from the Twitter Files after the purchase of

the company by Elon Musk. Notably, Twitter has 450 million active users

7

but it is still

only ranked 15th in the number of users, after companies such as Facebook, Instagram,

TikTok, Snapchat, and Pinterest.

8

The assumption is that the government censorship

program dovetailed with these other companies, which continue to refuse to share past

communications or work with the government. Assuming these efforts extended to the

larger platforms, we have a government-supported censorship system that is unparalleled

in history.

We now have undeniable evidence of a comprehensive system of censorship that

stretches across governmental, academic, and corporate realms. Through disinformation

offices, grants, and other means, an array of federal agencies has been active

“stakeholders” in this system. This includes Homeland Security, the State Department, the

FBI and other federal agencies actively seeking the censorship of citizens and groups.

The partners in this effort extend across social media platforms. The goal is not just to

remove dissenting views, but also to isolate those citizens who voice them. We recently

Speech By Stopping Anarchist Violence: Hearing Before the Subcomm. on the Const. of the S. Comm. on

the Judiciary, 116th Cong. (2020) (statement of Jonathan Turley); Respect for Law Enforcement and the

Rule of Law: Hearing Before the Commission on Law Enforcement and the Administration of Justice,

(2020) (statement of Jonathan Turley); The

Media and The Publication of Classified Information: Hearing

Before the H. Select Comm. on Intelligence, 109th Cong. (2006) (statement of Jonathan Turley).

5

See U.S. House of Representatives v. Burwell, 185 F. Supp. 3d 165 (D.D.C. 2016),

https://casetext.com/case/us-house-of-representatives-v-capacity-1.

6

Some of today’s testimony includes material from that earlier hearing. “Hearing on the

Weaponization of the Federal Government,” United States House of Representatives, House Judiciary

Committee, Select Subcommittee on the Weaponization of the Federal Government, February 9, 2023

(statement of Jonathan Turley).

7

Twitter Revenue and User Statistics, BUSINESS OF APPS, Jan. 31, 2023,

https://www.businessofapps.com/data/twitter-statistics/.

8

Most Popular Social Networks, STATISTA, https://www.statista.com/statistics/272014/global-

social-networks-ranked-by-number-of-users/.

learned that this effort extended even to companies like LinkedIn.

9

New emails

uncovered in the Missouri v. Biden litigation reportedly show that the Biden

Administration’s censorship efforts extended to pressing Facebook to censor private

communications on its WhatsApp messaging service.

10

The effort to limit access, even to

professional sites like LinkedIn, creates a chilling effect on those who would challenge

majoritarian or official views. It was the same chilling effect experienced by scientists

who tried to voice alternative views on vaccines, school closures, masks, or the origins of

Covid. The success of this partnership may surpass anything achieved by direct state-run

systems in countries like Russia or China.

The recent disclosures involving the Cybersecurity and Infrastructure Security

Agency (CISA) are chillingly familiar. The agency is part of an ever-expanding complex of

government programs and grants directed toward the censorship or blacklisting of

citizens and groups. In just a matter of weeks, the size of this complex has come into

greater focus and has confirmed the fears held by many of us over the use of private

actors to do indirectly what the government is prohibited from doing directly. I have

called it “censorship by surrogate” and CISA appears to be the latest agency to enlist

private proxy actors.

The focus of this hearing is particularly welcome, as it reminds us that the cost

of censorship is not just the loss of the right to free expression. Those costs can include

the impact of reducing needed public debate and scrutiny in areas like public health. For

years, government and corporate figures worked to silence scientists and researchers who

opposed government policies on mask efficacy, universal vaccinations, school closures,

and even the origin of Covid-19. Leading experts Drs. Jayanta Bhattacharya (Stanford

University) and Martin Kulldorff (Harvard University), as well as a host of others, faced

overwhelming attacks for questioning policies or views that later proved questionable or

downright wrong. Those doctors were the co-authors of the Great Barrington

Declaration, which advocated for a more focused Covid response that targeted the most

vulnerable populations, rather than widespread lockdowns and mandates.

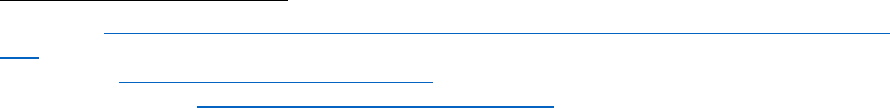

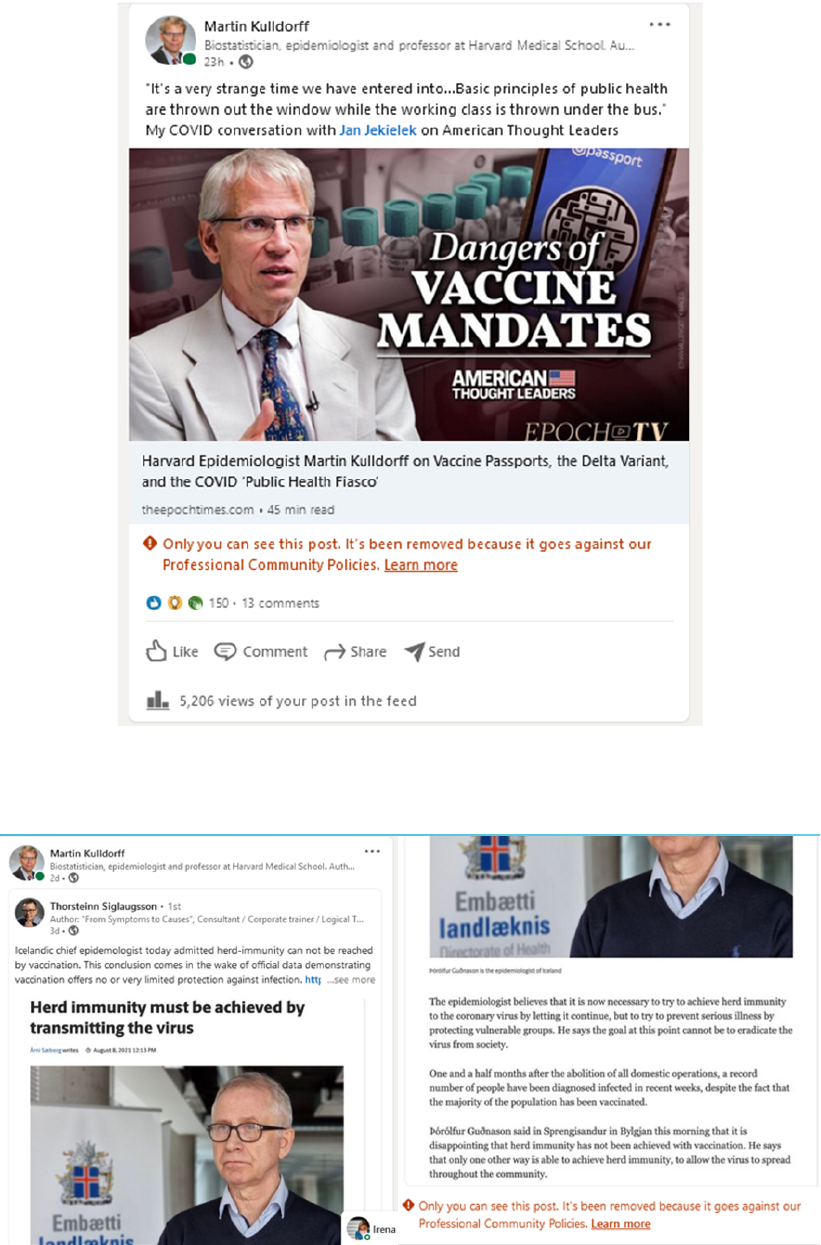

Dr. Kulldorff was censored in March 2021 when he tweeted “Thinking that

everyone must be vaccinated is as scientifically flawed as thinking that nobody should.

COVID vaccines are important for older high-risk people and their care-takers. Those

with prior natural infection do not need it. Nor children.” Every aspect of that tweet was

worthy of scientific and public debate. However, with the support of political, academic,

and media figures, such views were suppressed at the very moment in which they could

have made the most difference. For example, if we had a true and open debate, we might

have followed other countries in keeping schools open for young children. Agencies and

the media now recognize that these objections had merit. We are now experiencing an

educational and mental health crisis associated with a lockdown that might have been

avoided or reduced (as in other countries). Millions died as government agencies enlisted

9

Jonathan Turley, “Connect to Opportunity”: State Department Pushed LinkedIn to Censor

“Disinformation,” Res Ipsa Blog (www.jonathanturley.org), Apr. 12, 2023,

https://jonathanturley.org/2023/04/12/connect-to-opportunity-new-evidence-shows-state-department-

pushing-linkenin-to-censor-disinformation/.

10

Jonathan Turley, New Documents Expose Government Censorship Efforts at Facebook and

WhatsApp, Res Ipsa Blog (www.jonathanturley.org), March 26, 2023,

https://jonathanturley.org/2023/03/26/new-documents-expose-government-censorship-efforts-at-facebook-

and-whatsapp/.

companies to silence dissenting viewpoints on best practices and approaches. We do not

know how many of those deaths or costs might have been avoided because this debate

was delayed until after the pandemic had largely subsided.

The purpose of my testimony today is to address the legal question of when

government support for censorship systems becomes a violation of the First Amendment

and, more broadly, when it contravenes free speech principles. To that end, I hope to briefly

explore what we know, what we do not know, and why we must know much more about

the government’s efforts to combat speech deemed misinformation, disinformation, and

malinformation (MDM).

Regardless of how one comes out on the constitutional ramifications of the

government’s role in the censorship system, there should be no serious debate over the

dangers that government-supported censorship presents to our democracy. The United

States government may be outsourcing censorship, but the impact is still inimical to the

free speech values that define this country. This should not be a matter that divides our

political parties. Free speech is the core article of faith of all citizens in our constitutional

system. It should transcend politics and, despite our deepening divisions, unite us all in a

common cause to protect what Justice Louis Brandeis once called “the indispensable

right.”

11

II. MDM AND CENSORSHIP BY SURROGATE

It is a common refrain among many supporters of corporate censorship that the

barring, suspension, or shadow banning of individuals on social media is not a free

speech problem. The reason is that the First Amendment applies to the government, not

private parties. As a threshold matter, it is important to stress that free speech values are

neither synonymous with, nor contained exclusively within, the First Amendment. The

First Amendment addressed the most prevalent danger of the time in the form of direct

government regulation and censorship of free speech and the free press. Yet, free speech

in society is impacted by both public and private conduct. Indeed, the massive censorship

system employed by social media companies presents the greatest loss of free speech in

our history. These companies, not the government, now control access to the

“marketplace of ideas.” That is also a free speech threat that needs to be taken seriously

by Congress. While the Washington Post has shown that the Russian trolling operations

had virtually zero impact on our elections,

12

the corporate censorship by companies like

Twitter and Facebook clearly had an impact by suppressing certain stories and viewpoints

in our public discourse. It was the response to alleged disinformation, not the

disinformation itself, that manipulated the debate and issues for voters.

The First Amendment addresses actions by the government, but there are certainly

actions taken by these agencies to censor the views of citizens. While one can debate

whether social media executives became effective government agents, public employees

are government agents. Their actions must not seek to abridge the freedom of speech. It is

possible that a systemic government program supporting a privately-run censorship

11

Whitney v. California, 274 U.S. 357, 375-76 (1927) (Brandeis, J., concurring).

12

Tim Starks, Russian Trolls on Twitter Had Little Influence on 2016 Election, WASH. POST, Jan. 9,

2023, https://www.washingtonpost.com/politics/2023/01/09/russian-trolls-twitter-had-little-influence-2016-

voters/.

system is sufficient to justify injunctive relief based on the actions of dozens of federal

employees to target and seek the suspension of citizens due to their viewpoints. However,

this program can also run afoul of the First Amendment if the corporate counterparts in

the system are considered effective government agents themselves. The most common

example occurs under the Fourth Amendment where the government is sometimes

viewed as acting through private security guards or snitches performing tasks at its

request.

The same agency relationship can occur under the First Amendment, particularly

on social media. The “marketplace of ideas” is now largely digital. The question is

whether the private bodies engaging in censorship are truly acting independently of the

government. There is now ample reason to question that separation. Social media

companies operate under statutory conditions and agency review. That relationship can

allow or encourage private parties to act as willing or coerced agents in the denial of free

speech. Notably, in 1946, the Court dealt with a town run by a private corporation in

Marsh v. Alabama.

13

It was that corporation, rather than a government unit, that

prevented citizens from distributing religious literature on a sidewalk. However, the

Court still found that the First Amendment was violated because the corporation was

acting as a governing body. The Court held that, while the denial of free speech rights

“took place, [in a location] held by others than the public, [it] is not sufficient to justify

the State’s permitting a corporation to govern a community of citizens so as to restrict

their fundamental liberties.”

14

Congress has created a curious status for social media companies in granting

immunity protections in Section 230. That status and immunity have been repeatedly

threatened by members of Congress unless social media companies expanded censorship

programs in a variety of different areas. The demands for censorship have been

reinforced by letters threatening congressional action. Many of those threats have

centered around removing Section 230 immunity, pursuing antitrust measures, or other

vague regulatory responses. Many of these threats have focused on conservative sites or

speakers. The language of the Section itself is problematic in giving these companies

immunity “to restrict access to or availability of material that the provider or user

considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or

otherwise objectionable, whether or not such material is constitutionally protected.”

15

As

Columbia Law professor Phil Hamburger has noted, the statute appears to permit what is

made impermissible under the First Amendment:

16

“Congress makes explicit that it is

immunizing companies from liability for speech restrictions that would be

unconstitutional if lawmakers themselves imposed them.”

17

As Hamburger notes, that

does not mean that the statute is unconstitutional, particularly given the judicial rule

favoring narrow constructions to avoid unconstitutional meanings.

18

However, there is

13

Marsh v. Alabama, 326 U.S. 501 (1946).

14

Id. at 509.

15

47 U.S.C. § 230(c).

16

Philip Hamburger, The Constitution Can Crack Section 230, WALL STREET JOURNAL (Jan. 30,

2021).

17

Id.

18

Id. See, e.g., Republican Party of Hawaii v. Mink, 474 U.S. 1301, 1302 (1985) (narrowly

interpreting the recall provisions of the Honolulu City Charter).

another lingering issue raised by the use of this power to carry out the clear preference on

“content moderation” of one party.

The Court has recognized that private actors can be treated as agents of the

government under a variety of theories. Courts have found such agency exists when the

government exercises “coercive power” or “provided such significant encouragement,

either overt or covert, that the choice must in law be deemed to be that of the

State.”

19

The Court has also held that the actions of a private party can be attributed to the

government where there exists a “close nexus between the State and the

challenged action” such that the private action “may be fairly treated as that of the State itself.”

20

I will return to the case law below, but first it is useful to consider what is currently

known about the government-corporate coordination revealed by the Twitter Files.

I will not lay out the full array of communications revealed by Twitter and recent

litigation, but some are worth noting as illustrative of a systemic and close coordination

between the company and federal officials, including dozens reportedly working within

the FBI. The level of back-channel communications at one point became so

overwhelming that a Twitter executive complained that the FBI was “probing & pushing

everywhere.” Another official referred to managing the government censorship referrals

as a “monumental undertaking.” At the same time, dozens of ex-FBI employees were

hired, including former FBI General Counsel James Baker. There were so many FBI

employees that they set up a private Slack channel and a crib sheet to allow them to

translate FBI terms into Twitter terms more easily. The Twitter Files have led groups

from the right to the left of our political spectrum to raise alarms over a censorship

system maintained by a joint government-corporate effort.

21

Journalist Matt Taibbi was

enlisted by Elon Musk to present some of these files and reduced his findings to a simple

header: “Twitter, the FBI Subsidiary.”

As discussed today, these disclosures show that the FBI is not alone among the

federal agencies in systemically targeting posters for censorship. Indeed, emails reveal

FBI figures, like San Francisco Assistant Special Agent in Charge Elvis Chan, asking

Twitter executives to “invite an OGA” (or “Other Government Agency”) to an

upcoming meeting. A week later, Stacia Cardille, a senior Twitter legal executive,

indicated the OGA was the CIA, an agency under strict limits regarding domestic

activities. Much of this work apparently was done through the multi-agency Foreign

Influence Task Force (FITF), which operated secretly to censor citizens. Cardille

referenced her “monthly (soon to be weekly) 90-minute meeting with FBI, DOJ, DHS,

ODNI [Office of the Director of National Intelligence], and industry peers on election

threats.” She detailed long lists of tasks sent to Twitter by government officials. The

censorship efforts reportedly included “regular meetings” with intelligence officials. This

included an effort to warn Twitter about a “hack-and-leak operation” by state actors

targeting the 2020 presidential election. That occurred just before the New York Post

19

Blum v. Yaretsky, 457 U.S. 991, 1004 (1982).

20

Jackson v. Metro. Edison Co., 419 U.S. 345, 351 (1974).

21

Compare Yes, You Should Be Worried About the FBI’s Relationship with Twitter, THE FIRE, Dec. 23,

2022, https://www.thefire.org/news/yes-you-should-be-worried-about-fbis-relationship-twitter with Branko

Marcetic, Why the Twitter Files Are In Fact a Big Deal, JACOBIN, Dec. 29, 2022,

https://jacobin.com/2022/12/twitter-files-censorship-content-moderation-intelligence-agencies-surveillance.

story on Hunter Biden’s laptop was published and then blocked by Twitter. It was also

blocked by other social media platforms like Facebook.

22

The files also show the staggering size of government searches and demands. The

FBI reportedly did keyword searches to flag large numbers of postings for possible

referral to Twitter. On November 3, 2020, Cardille told Baker that the FBI has “some

folks in the Baltimore field office and at HQ that are just doing keyword searches for

violations. This is probably the 10th request I have dealt with in the last 5 days.” Baker

responded that it was “odd that they are searching for violations of our policies.” But it

was not odd at all. Twitter had integrated both current and former FBI officials into its

network and the FBI was using the company’s broadly defined terms of service to target

a wide array of postings and posters for suspensions and deletions.

At one point, the coordination became so tight that, in July 2020, Chan offered to

grant temporary top-secret clearance to Twitter executives to allow for easier

communications and incorporation into the government network.

23

This close working

relationship also allowed the government to use accounts covertly, reportedly with the

knowledge of Twitter. One 2017 email sent by an official from United States Central

Command (CENTCOM) requested that Twitter “whitelist” Arabic-language Twitter

accounts that the government was using to “amplify certain messages.” The government

also asked that these accounts be granted the “verified” blue checkmark.

The range of available evidence on government coordination with censorship

extends beyond the Twitter Files and involves other agencies. For example, recent

litigation brought by various states over social media censorship revealed a back-channel

exchange between defendant Carol Crawford, the CDC’s Chief of digital media, and a

Twitter executive.

24

The request for the meeting was made on March 18,

2021. Twitter senior manager for public policy Todd O’Boyle asked Crawford to help

identify tweets to be censored and emphasized that the company was “looking forward to

setting up regular chats.” However, Crawford said that the timing that week was “tricky.”

Notably, that week, Dorsey and other CEOs were to appear at a House hearing to discuss

“misinformation” on social media and their “content moderation” policies. I had

just testified on the use of private censorship to circumvent the First Amendment as a type of

censorship by surrogate.

25

Dorsey and the other CEOs were asked at the March 25, 2021,

hearing about my warning of a “little brother problem, a problem which private entities

do for the government which it cannot legally do for itself.”

26

Dorsey insisted that there

was no such censorship office or program.

22

Mark Zuckerberg has also stated that the FBI clearly warned about the Hunter Biden laptop as

Russian disinformation. David Molloy, Zuckerberg Tells Rogan that FBI Warning Prompted Biden Laptop

Story Censorship, BBC, August 26, 2022, https://www.bbc.com/news/world-us-canada-62688532.

23

Gadde and Roth both testified that they do not know if anyone took up this offer for clearances.

24

The lawsuit addresses how experts, including Drs. Jayanta Bhattacharya (Stanford University) and

Martin Kulldorff (Harvard University), have faced censorship on these platforms.

25

Fanning the Flames: Disinformation and Extremism in the Media: Hearing Before the Subcomm.

on Commc’n & Tech. of the H. Comm. on Energy & Com., 117th Cong. (2021) (statement of Jonathan

Turley, Shapiro Professor of Public Interest Law, The George Washington University Law School).

26

Misinformation and Disinformation on Online Platforms: Hearing Before the Subcomm. on

Commc’n & Tech. and Subcomm. on Consumer Protection of the H. Comm. on Energy & Com., 117th

Cong. (2021).

The pressure to censor Covid-related views was also coming from the White

House, which targeted Alex Berenson, a former New York Times reporter, who had

contested agency positions on vaccines and underlying research. Rather than push

information to counter Berenson’s views, the White House wanted him banned. Berenson

was eventually suspended.

These files show not just a massive censorship system but a coordination and

integration of the government to a degree that few imagined before the release of the

Twitter Files. Congressional hearings have only deepened the alarm for many in the free

speech community. At one hearing, former Twitter executive Anika Collier Navaroli

testified on what she called the “nuanced” standard used by her and her staff on

censorship, including the elimination of “dog whistles” and “coded” messaging. She then

said that they balanced free speech against safety and explained that they sought a

different approach:

“Instead of asking just free speech versus safety to say free speech for whom and

public safety for whom. So whose free expression are we protecting at the expense

of whose safety and whose safety are we willing to allow to go the winds so that

people can speak freely?”

The statement echoed that of former CEO Parag Agrawal. After

taking over as CEO, Agrawal pledged to regulate content as “reflective of things that we

believe lead to a healthier public conversation.” Agrawal said the company would “focus

less on thinking about free speech” because “speech is easy on the internet. Most people

can speak. Where our role is particularly emphasized is who can be heard.”

The sweeping standards revealed at these hearings were defended by members as

necessary to avoid “insurrections” and other social harms. What is particularly distressing

is to hear members repeatedly defending censorship by citing Oliver Wendell Holmes’

famous statement on “shouting fire in a crowded theater.” This mantra has been grossly

misused as a justification for censorship. From statements on the pandemic to climate

change, anti-free speech advocates are claiming that opponents are screaming “fire” and

causing panic. The line comes from Schenck v. United States, a case that discarded the

free speech rights of citizens opposing the draft. Charles Schenck and Elizabeth Baer

were leading socialists in Philadelphia who opposed the draft in World War I. Fliers were

distributed that encouraged men to “assert your rights” and stand up for their right to

refuse such conscription as a form of involuntary servitude. Writing for the Court, Justice

Oliver Wendell Holmes dismissed the free speech interests in protecting the war and the

draft. He then wrote one of the most regrettable and misunderstood judicial soundbites in

history: “the character of every act depends on the circumstances in which it is done . . .

The most stringent protection of free speech would not protect a man in falsely shouting

fire in a theater and causing a panic.” “Shouting fire in a crowded theater” quickly

became a mantra for every effort to curtail free speech.

Holmes sought to narrow his clear and present danger test in his dissent

in Abrams v. United States. He warned that “we should be eternally vigilant against

attempts to check the expression of opinions that we loathe and believe to be frought (sic)

with death, unless they so imminently threaten immediate interference with the lawful

and pressing purposes of the law that an immediate check is required to save the

country.” Holmes’ reframing of his view would foreshadow the standard

in Brandenburg v. Ohio, where the Supreme Court ruled that even calling for violence is

protected under the First Amendment unless there is a threat of “imminent lawless

action and is likely to incite or produce such action.” However, members are still

channeling the standard from Schenck, which is a curious choice for most Democrats

given that it was used against socialists and anti-war protesters.

Even more unnerving is the fact that Navaroli’s standard and those referencing

terms like “delegitimization” make the Schenck standard look like a model of clarity.

Essentially, they add that you also have to consider the theater, movie, and audience to

decide what speech to allow. What could be treated as crying “Fire!” by any given person

or in any given circumstances would change according to their “nuanced” judgment.

III. CISA WITHIN THE GOVERNMENT-CORPORATE ALLIANCE

The role of CISA in this complex of government-corporate programs only

recently came under closer scrutiny. The Department of Homeland Security was previously

the focus of public controversy with the disclosure of the creation of the Department’s

Disinformation Governance Board and the appointment of Nina Jankowicz as its head.

Jankowicz was a longtime advocate for censorship in the name of combating disinformation.

At the time, White House press secretary Jen Psaki described the board as intended “to

prevent disinformation and misinformation from traveling around the country in a range

of communities.”

27

While the Department ultimately yielded to the public outcry over the

board and disbanded it, the public was never told of a wide array of other offices doing

much of the same work in targeting citizens and groups for possible censorship.

In January 2017, the Department of Homeland Security declared that election infrastructure

would be treated as “critical infrastructure.” CISA took a lead in supporting election

infrastructure integrity and countering election misinformation. In 2018, CISA and its

Countering Foreign Influence Task Force (CFITF) reportedly assumed a greater role in

monitoring and counteracting foreign interference in U.S. elections. In 2020, this work

appears to have expanded further to pursue allegations of “switch boarding” by domestic

actors, or individuals thought to be acting as conduits for information undermining

elections or critical infrastructure. Much about this work remains unclear and I am no

expert on CISA or its operational profile. However, the expanding mandate of CISA

follows a strikingly familiar pattern.

The Twitter Files reference CISA participation in these coordination meetings.

Given a mandate to help protect election integrity, CISA plunged into the monitoring and

targeting of those accused of disinformation. Infrastructure was interpreted to include

speech. Its director, Jen Easterly, declared that “the most critical infrastructure is our

cognitive infrastructure” and that “building that resilience to misinformation

and disinformation, I think, is incredibly important.”

28

She pledged to continue that work

with the private sector including social media companies on that effort. We do not need

the government in the business of building our “cognitive infrastructure.” Like content

27

Press Briefing by Press Secretary Jen Psaki, April 28, 2022, https://www.whitehouse.gov/briefing-

room/press-briefings/2022/04/28/press-briefing-by-press-secretary-jen-psaki-april-28-2022/.

28

Maggie Miller, Cyber Agency Beefing Up Disinformation, Misinformation Team, THE HILL, Nov.

10, 2021, https://thehill.com/policy/cybersecurity/580990-cyber-agency-beefing-up-disinformation-

misinformation-team/.

moderation, the use of this euphemism does not disguise the government’s effort to direct

and control what citizens may read or say on public platforms.

Over the years, the range of information deemed harmful has expanded to the

point that even true information is now viewed as harmful for the purposes of censorship.

Some of the recent disclosures from Twitter highlighted the work of Stanford’s Virality

Project which insisted “true stories … could fuel hesitancy” over taking the vaccine or

other measures.

29

It is reminiscent of the sedition prosecutions under the Crown before

the American Revolution, where truth was no defense. Even true statements could be

viewed as seditious and criminal. Once the government gets into the business of speech

regulation, the appetite for censorship becomes insatiable as viewpoints are deemed

harmful, even if true. CISA shows the same broad range of suspect speech:

• “Misinformation is false, but not created or shared with the intention of causing

harm.

• Disinformation is deliberately created to mislead, harm, or manipulate a person,

social group, organization, or country.

• Malinformation is based on fact, but used out of context to mislead, harm, or

manipulate. An example of malinformation is editing a video to remove important

context to harm or mislead.”

30

Regulations targeting misinformation, disinformation, and malinformation (MDM) offer the government the maximal space for censorship based on how

information may be received or used. The inclusion of true material used to “manipulate”

others is particularly chilling as a rationale for speech controls.

According to the Election Integrity Partnership (EIP), “tickets” flag material for

investigation that can be “one piece of content, an idea or narrative, or hundreds of URLs

pulled in a data dump.”

31

These tickets reportedly include those suspected of

“delegitimization,” which includes speech that undermines or spreads distrust in the

political or electoral system. The ill-defined character of these categories is by design. It

allows for highly selective or biased “ticketing” of speech. The concern is that

conservative writers or sites are subjected to the greatest targeting or ticketing. This pattern

was evident in other recent disclosures from private bodies working with U.S. agencies.

For example, we recently learned that the U.S. State Department funding for the National

Endowment for Democracy (NED) included support for the Global Disinformation

Index (GDI).

32

The British group sought to discourage advertisers from supporting sites

29

Jonathan Turley, “True Stories … Could Fuel Hesitancy”: Stanford Project Worked to Censor Even

True Stories on Social Media, Res Ipsa Blog (www.jonathanturley.org), March 19, 2023, at

https://jonathanturley.org/2023/03/19/true-stories-could-fuel-hesitancy-stanford-project-worked-to-censor-

even-true-stories-on-social-media/.

30

Foreign Influence Operations and Disinformation, https://www.cisa.gov/topics/election-

security/foreign-influence-operations-and-disinformation.

31

ELECTION INTEGRITY PARTNERSHIP, THE LONG FUSE: MISINFORMATION AND THE

2020 ELECTION 9 (2021), https://stacks.stanford.edu/file/druid:tr171zs0069/EIP-Final-Report.pdf.

32

Jonathan Turley, Scoring Speech: How the Biden Administration has been Quietly Shaping Public

Discourse, Res Ipsa Blog (www.jonathanturley.org), Feb. 20, 2023,

https://jonathanturley.org/2023/02/20/scoring-speech-how-the-biden-administration-has-been-quietly-

shaping-speech/.

deemed dangerous due to disinformation. Companies were warned by GDI about “risky”

sites that pose “reputational and brand risk” and asked them to avoid “financially

supporting disinformation online.” All ten of the “riskiest” sites identified by the GDI are

popular with conservatives, libertarians, and independents, including Reason, a site

featuring legal analysis by conservative law professors. Liberal sites like HuffPost were

ranked as the most trustworthy. The categories were as ill-defined as those used by CISA.

RealClearPolitics was blacklisted due to what GDI considers “biased and sensational

language.” The New York Post was blacklisted because “content sampled from the Post

frequently displayed bias, sensationalism and clickbait, which carries the risk of

misleading the site’s reader.” After the biased blacklisting was revealed, NED announced

that it would withdraw funding for the organization. However, as with the Disinformation

Board, the Disinformation Index was just one of a myriad of groups being funded or fed

information from federal agencies. These controversies have created a type of “Whack-a-

mole” challenge for the free speech community. Every time one censorship partnership is

identified and neutralized, another one pops up.

EIP embodies this complex of groups working with agencies. It describes itself as

an organization that “was formed between four of the nation’s leading institutions

focused on understanding misinformation and disinformation in the social media

landscape: the Stanford Internet Observatory, the University of Washington’s Center for

an Informed Public, Graphika, and the Atlantic Council’s Digital Forensic Research

Lab.” The EIP has referred to CISA as one of its “stakeholders” and CISA has used the

partnership to censor individuals or groups identified by the agency. We still do not know the

full extent of the coordination between CISA and other agencies with private and

academic groups in carrying out censorship efforts. However, the available evidence

raises legitimate questions over an agency relationship for the purposes of the First

Amendment.

IV. OUTSOURCING CENSORSHIP: THE NEED FOR GREATER

TRANSPARENCY AND ACCOUNTABILITY

In recent years, a massive censorship complex has been established with

government, academic, and corporate components. Millions of posts and comments are

now being filtered through this system in arguably the most sophisticated censorship

system in history. This partnership was facilitated by the demands of the First

Amendment, which bars the government from directly engaging in forms of prior

restraint and censorship. If “necessity is the mother of invention,” the censorship complex

shows how inventive motivated people can be in circumventing the Constitution. It has

been an unprecedented challenge for the free speech community. The First Amendment

was designed to deal with the classic threat to free speech of a government-directed

system of censorship. However, the traditional model of a ministry of information is now

almost quaint in comparison to the current system. It is possible to have an effective state

media by consent rather than coercion. There is no question that the work of these

academic and private groups limits free speech. Calling opposing views disinformation,

malinformation, or misinformation does not sanitize the censorship. It is still censorship

being conducted through a screen of academic and corporate entities. It may also

contravene the First Amendment.

The government can violate the Constitution through public employees or private

actors. As I testified recently before the Judiciary Committee, this agency relationship

can be established through consent or coercion. Indeed, the line can be difficult to discern

in many cases. There is an argument that this is a violation of the First Amendment.

Where the earlier debate over the status of these companies under Section 230 remained

mired in speculation, the recent disclosures of government involvement in the Twitter

censorship program present a more compelling and concrete case for arguing agency

theories. These emails refer to multiple agencies with dozens of employees actively

coordinating the blacklisting and blocking of citizens due to their public statements.

There is no question that the United States government is actively involved in a massive

censorship system. The only question is whether it is in violation of the First

Amendment.

Once again, the Twitter Files show direct action from federal employees to censor

viewpoints and individual speakers on social media. The government conduct is direct

and clear. That may alone be sufficient to satisfy courts that a program or policy abridges

free speech under the First Amendment. Even if a company like Twitter declined

occasionally, the federal government was actively seeking to silence citizens. Any

declinations only show that that effort was not always successful.

In addition to that direct action, the government may also be responsible for the

actions of third parties who are partnering with the government on censorship. The

government has long attempted to use private parties to evade direct limits imposed by

the Constitution. Indeed, this tactic has been part of some of the worst chapters in our

history. For example, in Lombard v. Louisiana,

33

the Supreme Court dealt with the refusal

of a restaurant to serve three black students and one white student at a lunch counter in

New Orleans reserved for white people. The Court acknowledged that there was no state

statute or city ordinance requiring racial segregation in restaurants. However, both the

Mayor and the Superintendent of Police had made public statements that “sit-in

demonstrations” would not be permitted. The Court held that the government cannot do

indirectly what it cannot do directly. In other words, it “cannot achieve the same result by

an official command which has at least as much coercive effect as an ordinance.”

34

As the Court said in Blum v. Yaretsky (where state action was not found), “a State

normally can be held responsible for a private decision only when it has exercised

coercive power or has provided such significant encouragement, either overt or covert,

that the choice must in law be deemed to be that of the State.”

35

Past cases (often dealing

with state action under the Fourteenth Amendment) have produced different tests for

establishing an agency relationship, including (1) public function; (2) joint action; (3)

governmental compulsion or coercion; and (4) governmental nexus.

36

Courts have noted

33

373 U.S. 267 (1963).

34

Id. at 273.

35

Blum v. Yaretsky, 457 U.S. 991, 1004-05 (1982).

36

Pasadena Republican Club v. W. Justice Ctr., 985 F.3d 1161, 1167 (9th Cir. 2021); Kirtley v.

Rainey, 326 F.3d 1088, 1092 (9th Cir. 2003). Some courts reduce this to three tests.

that these cases “overlap” in critical respects.

37

I will not go into each of these tests but

they show the highly contextual analysis performed by courts in finding private conduct

taken at the behest or direction of the government. The Twitter Files show a multilayered

incorporation of government information, access, and personnel in the censorship

program. One question is “whether the state has so far insinuated itself into a position of

interdependence with [the private entity] that it must be recognized as a joint participant

in the challenged activity.”

38

Nevertheless, the Supreme Court noted in Blum that “[m]ere

approval of or acquiescence in the initiatives of a private party is not sufficient to justify

holding the State responsible for those initiatives.”

39

Courts have previously rejected claims of agency by private parties over social

media.

40

However, these cases often cited the lack of evidence of coordination and

occurred before the release of the Twitter Files. For example, in Rogalinski v. Meta

Platforms, Inc.,

41

the court rejected a claim that Meta Platforms, Inc. violated the First

Amendment when it censored posts about COVID-19. However, the claim was based

entirely on a statement by the White House Press Secretary and “all of the alleged

censorship against Rogalinski occurred before any government statement.” It noted that

there was no evidence that there was any input of the government to challenge the

assertion that Meta’s message was “entirely its own.”

42

There is an interesting comparison to the decision of the United States Court of

Appeals for the Sixth Circuit in Paige v. Coyner, where the Court dealt with the

termination of an employee after a county official called her employer to complain about

comments made in a public hearing.

43

The court recognized that “[t]his so-called state-

actor requirement becomes particularly complicated in cases such as the present one

where a private party is involved in inflicting the alleged injury on the plaintiff.”

44

However, in reversing the lower court, it still found state action due to the fact that a

government official made the call to the employer, which prompted the termination.

Likewise, in Dossett v. First State Bank, the United States Court of Appeals for

the Eighth Circuit ruled that the termination of a bank employee was the result of state

action after school board members contacted her employer about comments made at a

public-school board meeting.

45

The Eighth Circuit ruled that the district court erred by

instructing a jury that it had to find that the school board members had “actual authority”

to make these calls. In this free speech case, the court held that you could have state

action under the color of law when the “school official who was purporting to act in the

performance of official duties but was acting outside what a reasonable person would

believe the school official was authorized to do.”

46

In this case, federal officials are

clearly acting in their official capacity. Indeed, that official capacity is part of the concern

37

Rogalinski v. Meta Platforms, Inc., 2022 U.S. Dist. LEXIS 142721 (August 9, 2022).

38

Gorenc v. Salt River Project Agr. Imp. & Power Dist., 869 F.2d 503, 507 (9th Cir. 1989).

39

Blum, 457 U.S. at 1004-05.

40

O’Handley v. Padilla, 579 F. Supp. 3d 1163, 1192-93 (N.D. Cal. 2022).

41

2022 U.S. Dist. LEXIS 142721 (August 9, 2022).

42

Id.

43

Paige v. Coyner, 614 F.3d 273, 276 (6th Cir. 2010).

44

Id.

45

399 F.3d 940 (8th Cir. 2005).

46

Id. at 948.

raised by the Twitter Files: the assignment of dozens of federal employees to support a

massive censorship system.

Courts have also ruled that there is state action where government officials use

their positions to intimidate or pressure private parties to limit free speech. In National

Rifle Association v. Vullo, the United States Court of Appeals for the Second Circuit

ruled that a free speech claim could be made on the basis of a state official’s pressuring

companies not to do business with the NRA.

47

The Second Circuit held “although

government officials are free to advocate for (or against) certain viewpoints, they may not

encourage suppression of protected speech in a manner that ‘can reasonably be

interpreted as intimating that some form of punishment or adverse regulatory action will

follow the failure to accede to the official’s request.’”

48

It is also important to note that

pressure is not required to establish an agency relationship under three of the prior tests.

It can be based on consent rather than coercion.

We have seen how censorship efforts began with claims of foreign interference

and gradually expanded into general efforts to target harmful or “delegitimizing” speech.

The Twitter Files show FBI officials warning Twitter executives that their platform was

being targeted by foreign powers, including a warning that an executive cited as a basis

for blocking postings related to the Hunter Biden laptop. At the same time, various

members of Congress have warned social media companies that they could face

legislative action if they did not continue to censor social media. Indeed, after Twitter

began to reinstate free speech protections and dismantle its censorship program, Rep.

Schiff (joined by Reps. André Carson (D-Ind.), Kathy Castor (D-Fla.) and Sen. Sheldon

Whitehouse (D-R.I.)) sent a letter to Facebook, warning it not to relax its censorship

efforts. The letter reminded Facebook that some lawmakers are watching the company

“as part of our ongoing oversight efforts” — and suggested they may be forced to

exercise that oversight into any move by Facebook to “alter or rollback certain

misinformation policies.” This is only the latest such warning. In prior hearings, social

media executives were repeatedly warned about any failure to remove viewpoints that were

considered “disinformation.” For example, in a November 2020 Senate hearing, then-

Twitter CEO Jack Dorsey apologized for censoring the Hunter Biden laptop story. But

Sen. Richard Blumenthal, D-Conn., warned that he and his Senate colleagues would not

tolerate any “backsliding or retrenching” by “failing to take action against dangerous

disinformation.”

49

Senators demanded increased censorship in areas ranging from the

pandemic to elections to climate change.

These warnings do not necessarily mean that a court would find that executives

were carrying out government priorities. An investigation is needed to fully understand

the coordination and the communications between the government and these companies.

In Brentwood Academy v. Tennessee Secondary School Athletic Assn.,

50

the Supreme

Court noted that state action decisions involving such private actors are highly case

specific:

47

National Rifle Association of America v. Vullo, 49 F.4th 700, 715 (2d Cir. 2022).

48

Id. (quoting Hammerhead Enters., Inc. v. Brezenoff, 707 F.2d 33, 39 (2d Cir. 1983)).

49

Misinformation and Disinformation on Online Platforms: Hearing Before the Subcomm. on

Commc’n & Tech. and Subcomm. on Consumer Protection of the H. Comm. on Energy & Com., 117th Cong.

(2021).

50

531 U.S. 288 (2001).

What is fairly attributable is a matter of normative judgment, and the criteria lack

rigid simplicity. From the range of circumstances that could point toward the

State behind an individual face, no one fact can function as a necessary condition

across the board for finding state action; nor is any set of circumstances

absolutely sufficient, for there may be some countervailing reason against

attributing activity to the government…

Our cases have identified a host of facts that can bear on the fairness of such an

attribution. We have, for example, held that a challenged activity may be state

action when it results from the State’s exercise of “coercive power,” …when the

State provides “significant encouragement, either overt or covert,” … or when a

private actor operates as a “willful participant in joint activity with the State or its

agents,” … We have treated a nominally private entity as a state actor when it is

controlled by an “agency of the State,” … when it has been delegated a public

function by the State, … when it is “entwined with governmental policies,” or

when government is “entwined in [its] management or control.”

51

Obviously, many of these elements appear present. However, the Twitter Files also show

executives occasionally declining to ban posters targeted by the government. They also

show such pressure coming from the legislative branch. For example, the Twitter Files

reveal that Twitter refused to carry out censorship requests from at least one member

targeting a columnist and critic. Twitter declined and one of its employees simply wrote,

“no, this isn’t feasible/we don’t do this.”

52

There were also requests from Republicans to

Twitter for action against posters, including allegedly one from the Trump White House

to take down content.

53

We simply do not know the extent of what companies like Twitter “did do,” nor

for whom. We do not know how demands were declined when flagged by CISA, the FBI,

or other agencies. The report from Twitter reviewers selected by Elon Musk suggests that

most requests coming from the Executive Branch were granted. That is one of the areas

that could be illuminated by this subcommittee. The investigation may be able to

supply the first comprehensive record of the government efforts to use these companies

to censor speech. It can pull back the curtain on America’s censorship system so that both

Congress and the public can judge the conduct of our government.

Whether the surrogate censorship conducted by social media companies is a form

of government action may be addressed by the courts in the coming years. However,

certain facts are well-established and warrant congressional action. First, while these

companies and government officials prefer to call it “content moderation,” these

companies have carried out the largest censorship system in history, effectively

51

Id. at 296.

52

Jonathan Turley, “We Don’t Do This”: Twitter Censors Rejected Adam Schiff’s Censorship

Request, THE HILL, Jan. 5, 2023, https://thehill.com/opinion/judiciary/3800380-we-dont-do-this-even-

twitters-censors-rejected-adam-schiffs-censorship-request/.

53

This included the Trump White House allegedly asking to take down derogatory tweets from the

wife of John Legend after the former president attacked the couple. Moreover, some Trump officials

supported efforts to combat foreign interference and false information on social media. It has been reported

that Twitter has a “database” of Republican demands. Adam Rawnsley and Asawin Suebsaeng, Twitter Kept

Entire “Database” of Republican Requests to Censor Posts, ROLLING STONE, Feb. 8, 2023,

https://www.rollingstone.com/politics/politics-news/elon-trump-twitter-files-collusion-biden-censorship-

1234675969/.

governing the speech of billions of people. The American Civil Liberties Union, for

example, maintains that censorship applies to both government and private actions. It is

defined as “the suppression of words, images, or ideas that are ‘offensive,’ [and] happens

whenever some people succeed in imposing their personal political or moral values on

others.”

54

Adopting Orwellian alternative terminology does not alter the fact that these

companies are engaging in the systemic censoring of viewpoints on social media.

Second, the government admits that it has supported this massive censorship

system. Even if the censorship is not deemed government action for the purposes of the

First Amendment, it is now clear that the government has actively supported and assisted

in the censorship of citizens. Objecting that the conduct of government officials may not

qualify under the First Amendment does not answer the question of whether members

believe that the government should be working for the censorship of opposing or

dissenting viewpoints. During the McCarthy period, the government pushed blacklists for

suspected communists and the term “fellow travelers” was rightfully denounced

regardless of whether it qualified as a violation of the First Amendment. Even before Joe

McCarthy launched his un-American activities hearings, the Justice Department created

an effective blacklist of organizations called the “Attorney General’s List of Subversive

Organizations” (AGLOSO) that was then widely distributed to the media and the public.

It became the foundation for individual blacklists.

55

The maintenance of the list fell to the

FBI. Ultimately, blacklisting became the norm with both legislative and executive

officials tagging artists, writers, and others. As Professor Geoffrey Stone observed,

“Government at all levels hunted down ‘disloyal’ individuals and denounced them.

Anyone so stigmatized became a liability to his friends and an outcast to society.”

56

At

the time, those who raised the same free speech objections were also attacked as “fellow

travelers” or “apologists” for communists. It was wrong then and it is wrong now. It was

an affront to free speech values that have long been at the core of our country. It is not

enough to say that the government is merely seeking the censorship of posters like any

other user. There are many things that are more menacing when done by the government

rather than individuals. Moreover, the government is seeking to silence certain speakers

in our collective name and using tax dollars to do so. The FBI and other agencies have

massive powers and resources to amplify censorship efforts. The question is whether

Congress and its individual members support censorship whether carried out by corporate

or government officials on social media platforms.

57

Third, the government is engaged in targeting users under the ambiguous

mandates of combating disinformation or misinformation. These are not areas

traditionally addressed by public affairs offices to correct false or misleading statements

54

American Civil Liberties Union, What is Censorship?, https://www.aclu.org/other/what-

censorship.

55

Robert Justin Goldstein, Prelude to McCarthyism, PROLOGUE MAGAZINE, Fall 2006,

https://www.archives.gov/publications/prologue/2006/fall/agloso.html. Courts pushed back on the listing to

require some due process for those listed.

56

Geoffrey R. Stone, Free Speech in the Age of McCarthy: A Cautionary Tale, 93 CALIF. L. REV.

1387, 1400 (2005).

57

The distinction between these companies and other corporate entities like the NFL or Starbucks

is important. There is no question that businesses can limit speech on their premises and by their own

employees. However, these companies constitute the most popular communication platforms in the

country. They are closer to AT&T than Starbucks in offering a system of communication.

made about an agency’s work. The courts have repeatedly said that agencies are allowed

to speak in their voices without viewpoint neutrality.

58

As the Second Circuit stated,

“[w]hen it acts as a speaker, the government is entitled to favor certain views over

others.”

59

This was an effort to secretly silence others. Courts have emphasized that “[i]t

is well-established that First Amendment rights may be violated by the chilling effect of

governmental action that falls short of a direct prohibition against speech.”

60

These public

employees were deployed to monitor and target users spreading “disinformation” on a

variety of subjects, from election fraud to government corruption. The Twitter Files show

how this mandate led to an array of abuses, from targeting jokes to barring opposing

scientific views.

These facts already warrant bipartisan action from Congress. Free speech

advocates have long opposed disinformation mandates as an excuse or invitation for

public or private censorship. I admittedly subscribe to the view that the solution to bad

speech is better speech, not speech regulation.

61

Justice Brandeis embraced the view of

the Framers that free speech was its own protection against false statements: “If there be

time to discover through discussion the falsehood and the fallacies, to avert the evil by

the processes of education, the remedy to be applied is more speech not enforced

silence.”

62

We have already seen how disinformation was used to silence dissenting

views on subjects like mask efficacy and Covid policies like school closures that are now

being recognized as legitimate.

We have also seen how claims of Russian trolling operations may have been

overblown in their size or their impact. Indeed, even some Twitter officials ultimately

concluded that the FBI was pushing exaggerated claims of foreign influence on social

media.

63

The Twitter Files refer to sharp messages from the FBI when Twitter failed to

find evidence supporting the widely reported foreign trolling operations. One Twitter

official referred to finding “no links to Russia.” This was not for want of trying. Spurred

on by the FBI, another official promised “I can brainstorm with [redacted] and see if we

can dig even deeper and try to find a stronger connection.” The pressure from the FBI led

Roth to tell his colleagues that he was “not comfortable” with the agenda of the FBI and

said that it seemed “more like something we’d get from a

congressional committee than the Bureau.”

The danger of censorship is not solely a concern of one party. To his great credit,

Rep. Ro Khanna (D-Cal.), in October 2020, said that he was appalled by the censorship

58

Pleasant Grove City v. Summum, 555 U.S. 460, 467-68 (2009); Johanns v. Livestock Mktg. Ass’n,

544 U.S. 550, 553 (2005).

59

Wandering Dago, Inc. v. Destito, 879 F.3d 20, 34 (2d Cir. 2018).

60

Zieper v. Metzinger, 474 F.3d 60, 65 (2d Cir. 2007).

61

See generally Jonathan Turley, Harm and Hegemony: The Decline of Free Speech in the United

States, 45 HARV. J.L. & PUB. POL’Y 571 (2022).

62

Whitney, 274 U.S. at 375, 377.

63

In his testimony, Roth stated that they found substantial Russian interference impacting the

election. Protecting Speech from Government Interference and Social Media Bias, Part 1: Twitter’s Role in

Suppressing the Biden Laptop Story: Hearing Before the H. Comm. on Oversight & Accountability, 118th

Cong. (2023) (statement of Yoel Roth, Former Head of Trust and Safety, Twitter). That claim stands in

conflict with other studies and reports, but it can also be addressed as part of the investigation into these

communications.

and was alarmed by the apparent “violation of the 1st Amendment principles.”

64

Congress can bar the use of federal funds for such disinformation offices. Such

legislation can require detailed reporting on agency efforts to ban or block public

comments or speech by citizens. Even James Baker told the House Oversight Committee

that there may be a need to pass legislation to limit the role of government officials in

their dealings with social media companies.

65

Legislation can protect the legitimate role

of agencies in responding to and disproving statements made about their own programs or

policies. It is censorship, not disinformation, that has damaged our nation in recent years.

Free speech, like sunshine, can be its own disinfectant. In Terminiello v. City of Chicago,

the Supreme Court declared that:

The right to speak freely and to promote diversity of ideas . . . is . . . one of the

chief distinctions that sets us apart from totalitarian regimes . . . [A] function

of free speech under our system of government is to invite dispute. . . . Speech is

often provocative and challenging. . . [F]reedom of speech, though not absolute, is

nevertheless protected against censorship.

66

Disinformation does cause divisions, but the solution is not to embrace government-

corporate censorship. The government effort to reduce speech does not solve the problem

of disinformation. It does not change minds but simply silences voices in national

debates.

V. CONCLUSION

There is obviously a deep division in Congress over censorship, with many

members supporting the efforts to blacklist and remove certain citizens or groups from

social media platforms. That is a debate that many of us in the free speech community

welcome. However, let it be an honest and open debate. The first step in securing such a

debate is to support transparency on the full extent of these efforts by federal agencies.

The second step is to allow these questions to be discussed without attacking journalists

and witnesses who come to Congress to share their own concerns over the threat to both

free press and free speech values. Calling reporters “so called journalists” or others

“Putin lovers” represents a return to the rhetoric used against free speech advocates during

the Red Scare.

67

We are better than that as a country and our Constitution demands more

from this body. If members want to defend censorship, then do so with the full record

before the public on the scope and standards of this government effort.

64

Democratic Rep. Ro Khanna Expressed Concerns Over Twitter’s Censorship of Hunter Biden

Laptop, FOX NEWS, Dec. 2, 2022, https://www.foxnews.com/politics/democratic-rep-ro-khanna-expressed-

concerns-twitters-censorship-hunter-biden-laptop-story.

65

Protecting Speech from Government Interference and Social Media Bias, Part 1: Twitter’s Role in

Suppressing the Biden Laptop Story: Hearing Before the H. Comm. on Oversight & Accountability, 118th

Cong. (2023) (statement of James Baker, Former General Counsel, FBI).

66

Terminiello v. City of Chicago, 337 U.S. 1, 4 (1949) (citations omitted).

67

Jonathan Turley, Is the Red Scare Turning Blue?, Res Ipsa Blog (www.jonathanturley.org), Feb. 12,

2023, https://jonathanturley.org/2023/02/12/is-the-red-scare-going-blue-democrats-accuse-government-

critics-of-being-putin-lovers-and-supporting-insurrectionists/.


The public understands the threat to free speech and strongly supports an

investigation into the FBI’s role in censoring social media. Despite the push for

censorship by some politicians and pundits, most Americans still want free-speech

protections. It is in our DNA. This country was founded on deep commitments to free

speech and limited government – and that constitutional tradition is no conspiracy theory.

Polls show that 73% of Americans believe that these companies censored material for

political purposes.

68

Another poll showed that 63% want an investigation into FBI

censorship allegations.

69

Adlai Stevenson famously warned of this danger: “Public confidence in the

integrity of the Government is indispensable to faith in democracy; and when we lose

faith in the system, we have lost faith in everything we fight . . . for.” Senator

Stevenson’s words should resonate on both sides of our political divide so that we

might, even now, find a common ground and common purpose. The loss of faith in our

government creates political instabilities and vulnerabilities in our system. Moreover,

regardless of party affiliation, we should all want answers to some of these questions. We

can differ on our conclusions, but the first step for Congress is to force greater

transparency on controversies ranging from bias to censorship. One of the greatest values of

oversight is to allow greater public understanding of the facts behind government actions.

Greater transparency is the only course that can help resolve the doubts that many have

over the motivations and actions of their government. I remain an optimist that it is still

possible to have a civil and constructive discussion of these issues. Regardless of our

political affiliations and differences, everyone in this room is here because of a deep love

and commitment to this country. It was what brought us from vastly different

backgrounds and areas in our country. We share a single article of faith in our

Constitution and the values that it represents. We are witnessing a crisis of faith today

that must be healed for the good of our entire nation. The first step toward that healing is

an open and civil discussion of the concerns that the public has with our government. We

can debate what measures are warranted in light of any censorship conducted with

government assistance. However, we first need to get a full and complete understanding

of the relationship between federal agencies and these companies in the removal or

suspension of individuals from social media. At a minimum, that should be a position that

both parties can support in the full disclosure of past government conduct and

communications with these companies.

Once again, thank you for the honor of appearing before you to discuss these

important issues, and I would be happy to answer any questions from the Committee.

Jonathan Turley

J.B. & Maurice C. Shapiro Chair of Public Interest Law

George Washington University

68

Sean Burch, Nearly 75% of Americans Believe Twitter, Facebook Censor Posts Based on

Viewpoints, Pew Finds, THE WRAP, Aug. 19, 2020, https://www.thewrap.com/nearly-75-percent-twitter-

facebook-censor/.

69

63% Want FBI’s Social Media Activity Investigated, RASMUSSEN REPORTS, Dec. 26, 2022,

https://www.rasmussenreports.com/public_content/politics/partner_surveys/twittergate_63_want_fbi_s_soc

ial_media_activity_investigated.

CENSORSHIP CAN BE DEADLY

Censorship can be deadly. Freedom of speech is always important, but it is especially important during a

national emergency such as a pandemic. No authority is infallible, and when a new virus emerges, it is

impossible for politicians and public health officials to get things right without listening to discussions

between a wide cast of scientists with different areas of expertise and thoughts.

I am an epidemiologist, a biostatistician and a professor of medicine at Harvard, on leave. For over two

decades I have done research on the detection and monitoring of infectious disease outbreaks and on the

safety evaluation of vaccines and drugs. I helped build the nation’s disease surveillance systems. Despite

this, I was censored and blacklisted during the pandemic by Twitter, LinkedIn, YouTube and Facebook.

In early 2020, we already knew from Wuhan data that there is more than a thousand-fold difference in

Covid mortality between the old and the young

1

. During the pandemic, we failed to adequately protect

older Americans while school closures and other lockdown measures generated enormous collateral

public health damage that we now must live with, and die from, for years to come.

During the spring of 2020, Sweden was the only major Western country to keep schools and daycare open

for children ages 1 to 15. Among those 1.8 million children, there were zero covid deaths and the covid

risk for teachers was less than the average of other professions

2

. This showed that it was safe to keep

schools open. It was important for America to know that, but a July 2020 New England Journal of Medicine

article on school closure did not even mention Sweden

3

. That’s like reporting on a new medical treatment

without including information from the comparison control group.

Unable to publish my thoughts about the pandemic in US English language media, in the summer of 2020

I used my Twitter account to share the Swedish data and argue for open schools. But in July 2020, Twitter

put me on their “trends blacklist” to limit the reach of my open school posts

4

.

In October 2020, I authored the Great Barrington Declaration with two fellow epidemiologists, Dr. Sunetra

Gupta at Oxford and Dr. Jay Bhattacharya at Stanford

5

. We argued for better protection of high-risk older

people while keeping schools open and letting young people live more normal lives. This was shadow

banned by Google and censored by Reddit

6

. After posting in favor of prioritizing the elderly for vaccination,

our Facebook page was “unpublished”. (Figure 1)

In March 2021, Twitter censored a post when I wrote that “Thinking that everyone must be vaccinated is

as scientifically flawed as thinking that nobody should. COVID vaccines are important for older high-risk

people and their care-takers. Those with prior natural infection do not need it. Nor children.” Twitter falsely

claimed that the tweet was misleading, and it could not be replied to, shared or liked. We have known

about infection-acquired immunity since the Athenian Plague in 430 BC, and the questioning, denial and

censoring of such natural immunity is the most stunning denial of scientific facts during the pandemic.

1

Kulldorff M. COVID-19 Counter Measures Should be Age Specific. LinkedIn, April 10, 2020.

2

Public Health Agency of Sweden. Covid-19 in schoolchildren – A comparison between Finland and Sweden, July 7,

2020.

3

Levinson M, Cevik M, Lipsitch M. Reopening Primary schools during the Pandemic. New England Journal of

Medicine, July 29, 2020.

4

Bhattacharya J. What I discovered at Twitter headquarters, UnHerd, December 22, 2022.

5

Bhattacharya J, Gupta S, Kulldorff M. Great Barrington Declaration, October 4, 2020.

6

Young T. Why can’t we talk about the Great Barrington Declaration? The Spectator, October 17, 2020.

With deadly consequences. At a time when vaccines were in short supply, we were vaccinating young

adults and people with natural immunity, who did not need it, before many older Americans whose

lives could have been saved by it. (Figure 2)

Through randomized studies and reviews

7

8

, we know that face masks provide only marginal or no

protection against Covid. A randomized study in Denmark showed no significant benefit

9

while a Yale

University study conducted in Bangladesh showed a reduction between 0 and 18 percent

10

. It is then

dangerous to make older high-risk Americans believe that masks will protect them when they will not, as

they may go to crowded restaurants or supermarkets thinking that their mask is keeping them safe. In

May 2021 I was temporarily suspended by Twitter for three weeks for writing that: “Naively fooled to think