July 25, 2012 19:03 ffirs Sheet number 4 Page number iv cyan black

WileyPLUS builds students’ confidence because it takes the guesswork

out of studying by providing students with a clear roadmap:

It offers interactive resources along with a complete digital textbook that help

students learn more. With WileyPLUS, students take more initiative so you’ll have

greater impact on their achievement in the classroom and beyond.

ALL THE HELP, RESOURCES, AND PERSONAL

SUPPORT YOU AND YOUR STUDENTS NEED!

Technical Support 24/7

FAQs, online chat,

and phone support

www.wileyplus.com/support

Student support from an

experienced student user

Collaborate with your colleagues,

find a mentor, attend virtual and live

events, and view resources

2-Minute Tutorials and all

of the resources you and your

students need to get started

Your WileyPLUS Account Manager,

providing personal training

and support

www.WhereFacultyConnect.com

Pre-loaded, ready-to-use

assignments and presentations

created by subject matter experts

Elementary Differential

Equations and Boundary

Value Problems

TENTH EDITION

Elementary Differential

Equations and

Boundary Value

Problems

William E. Boyce

Edward P. Hamilton Professor Emeritus

Richard C. DiPrima

formerly Eliza Ricketts Foundation Professor

Department of Mathematical Sciences

Rensselaer Polytechnic Institute

PUBLISHER Laurie Rosatone

ACQUISITIONS EDITOR David Dietz

MARKETING MANAGER Melanie Kurkjian

SENIOR EDITORIAL ASSISTANT Jacqueline Sinacori

FREELANCE DEVELOPMENT EDITOR Anne Scanlan-Rohrer

SENIOR PRODUCTION EDITOR Kerry Weinstein

SENIOR CONTENT MANAGER Karoline Luciano

SENIOR DESIGNER Madelyn Lesure

SENIOR PRODUCT DESIGNER Tom Kulesa

EDITORIAL OPERATIONS MANAGER Melissa Edwards

ASSOCIATE CONTENT EDITOR Beth Pearson

MEDIA SPECIALIST Laura Abrams

ASSISTANT MEDIA EDITOR Courtney Welsh

PRODUCTION SERVICES Carol Sawyer/The Perfect Proof

COVER ART Norm Christiansen

This book was set in Times Ten by MPS Limited, Chennai, India and printed and bound by R.R.

Donnelley/Willard. The cover was printed by R.R. Donnelley/Willard.

This book is printed on acid free paper. ∞

The paper in this book was manufactured by a mill whose forest management programs include

sustained yield harvesting of its timberlands. Sustained yield harvesting principles ensure that the

number of trees cut each year does not exceed the amount of new growth.

Copyright © 2012 John Wiley & Sons, Inc. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or

by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as

permitted under Sections 107 and 108 of the 1976 United States Copyright Act, without either the prior

written permission of the Publisher, or authorization through payment of the appropriate per-copy fee

to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400,

fax (978) 750-4470. Requests to the Publisher for permission should be addressed to the Permissions

Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011,

fax (201) 748-6008, E-mail: PERMREQ@WILEY.COM. To order books or for customer service,

call 1 (800)-CALL-WILEY (225-5945).

ISBN 978-0-470-45831-0

Printed in the United States of America

10 9 8 7 6 5 4 3 2 1

To Elsa and in loving memory of Maureen

To Siobhan, James, Richard, Jr., Carolyn, and Ann

And to the next generation:

Charles, Aidan, Stephanie, Veronica, and Deirdre

The Authors

William E. Boyce received his B.A. degree in Mathematics from Rhodes College,

and his M.S. and Ph.D. degrees in Mathematics from Carnegie-Mellon University.

He is a member of the American Mathematical Society, the Mathematical Associ-

ation of America, and the Society for Industrial and Applied Mathematics. He is

currently the Edward P. Hamilton Distinguished Professor Emeritus of Science Ed-

ucation (Department of Mathematical Sciences) at Rensselaer. He is the author

of numerous technical papers in boundary value problems and random differential

equations and their applications. He is the author of several textbooks including

two differential equations texts, and is the coauthor (with M.H. Holmes, J.G. Ecker,

and W.L. Siegmann) of a text on using Maple to explore Calculus. He is also coau-

thor (with R.L. Borrelli and C.S. Coleman) of Differential Equations Laboratory

Workbook (Wiley 1992), which received the EDUCOM Best Mathematics Curricu-

lar Innovation Award in 1993. Professor Boyce was a member of the NSF-sponsored

CODEE (Consortium for Ordinary Differential Equations Experiments) that led to

the widely-acclaimed ODE Architect. He has also been active in curriculum inno-

vation and reform. Among other things, he was the initiator of the “Computers in

Calculus” project at Rensselaer, partially supported by the NSF. In 1991 he received

the William H. Wiley Distinguished Faculty Award given by Rensselaer.

Richard C. DiPrima (deceased) received his B.S., M.S., and Ph.D. degrees in

Mathematics from Carnegie-Mellon University. He joined the faculty of Rensselaer

Polytechnic Institute after holding research positions at MIT, Harvard, and Hughes

Aircraft. He held the Eliza Ricketts Foundation Professorship of Mathematics at

Rensselaer, was a fellow of the American Society of Mechanical Engineers, the

American Academy of Mechanics, and the American Physical Society. He was also

a member of the American Mathematical Society, the Mathematical Association of

America, and the Society for Industrial and Applied Mathematics. He served as the

Chairman of the Department of Mathematical Sciences at Rensselaer, as President

of the Society for Industrial and Applied Mathematics, and as Chairman of the Executive

Committee of the Applied Mechanics Division of ASME. In 1980, he was the

recipient of the William H. Wiley Distinguished Faculty Award given by Rensselaer.

He received Fulbright fellowships in 1964–65 and 1983 and a Guggenheim fellow-

ship in 1982–83. He was the author of numerous technical papers in hydrodynamic

stability and lubrication theory and two texts on differential equations and boundary

value problems. Professor DiPrima died on September 10, 1984.

PREFACE

This edition, like its predecessors, is written from the viewpoint of the applied

mathematician, whose interest in differential equations may be sometimes quite

theoretical, sometimes intensely practical, and often somewhere in between. We

have sought to combine a sound and accurate (but not abstract) exposition of the

elementary theory of differential equations with considerable material on methods

of solution, analysis, and approximation that have proved useful in a wide variety of

applications.

The book is written primarily for undergraduate students of mathematics, science,

or engineering, who typically take a course on differential equations during their

first or second year of study. The main prerequisite for reading the book is a working

knowledge of calculus, gained from a normal two- or three-semester course sequence

or its equivalent. Some familiarity with matrices will also be helpful in the chapters

on systems of differential equations.

To be widely useful, a textbook must be adaptable to a variety of instructional

strategies. This implies at least two things. First, instructors should have maximum

flexibility to choose both the particular topics they wish to cover and the order in

which they want to cover them. Second, the book should be useful to students who

have access to a wide range of technological capability.

With respect to content, we provide this flexibility by making sure that, so far as

possible, individual chapters are independent of each other. Thus, after the basic

parts of the first three chapters are completed (roughly Sections 1.1 through 1.3, 2.1

through 2.5, and 3.1 through 3.5), the selection of additional topics, and the order and

depth in which they are covered, are at the discretion of the instructor. Chapters 4

through 11 are essentially independent of each other, except that Chapter 7 should

precede Chapter 9 and that Chapter 10 should precede Chapter 11. This means that

there are multiple pathways through the book, and many different combinations

have been used effectively with earlier editions.

With respect to technology, we note repeatedly in the text that computers are ex-

tremely useful for investigating differential equations and their solutions, and many

of the problems are best approached with computational assistance. Nevertheless,

the book is adaptable to courses having various levels of computer involvement,

ranging from little or none to intensive. The text is independent of any particular

hardware platform or software package.

Many problems are marked with the symbol

to indicate that we consider them

to be technologically intensive. Computers have at least three important uses in a

differential equations course. The first is simply to crunch numbers, thereby gen-

erating accurate numerical approximations to solutions. The second is to carry out

symbolic manipulations that would be tedious and time-consuming to do by hand.

Finally, and perhaps most important of all, is the ability to translate the results of

numerical or symbolic computations into graphical form, so that the behavior of

solutions can be easily visualized. The marked problems typically involve one or

more of these features. Naturally, the designation of a problem as technologically

intensive is a somewhat subjective judgment, and the

is intended only as a guide.

Many of the marked problems can be solved, at least in part, without computa-

tional help, and a computer can also be used effectively on many of the unmarked

problems.

From a student’s point of view, the problems that are assigned as homework and

that appear on examinations drive the course. We believe that the most outstanding

feature of this book is the number, and above all the variety and range, of the prob-

lems that it contains. Many problems are entirely straightforward, but many others

are more challenging, and some are fairly open-ended and can even serve as the basis

for independent student projects. There are far more problems than any instructor

can use in any given course, and this provides instructors with a multitude of choices

in tailoring their course to meet their own goals and the needs of their students.

The motivation for solving many differential equations is the desire to learn some-

thing about an underlying physical process that the equation is believed to model.

It is basic to the importance of differential equations that even the simplest equa-

tions correspond to useful physical models, such as exponential growth and decay,

spring–mass systems, or electrical circuits. Gaining an understanding of a complex

natural process is usually accomplished by combining or building upon simpler and

more basic models. Thus a thorough knowledge of these basic models, the equations

that describe them, and their solutions is the first and indispensable step toward the

solution of more complex and realistic problems. We describe the modeling process

in detail in Sections 1.1, 1.2, and 2.3. Careful constructions of models appear also in

Sections 2.5 and 3.7 and in the appendices to Chapter 10. Differential equations re-

sulting from the modeling process appear frequently throughout the book, especially

in the problem sets.

The main reason for including fairly extensive material on applications and math-

ematical modeling in a book on differential equations is to persuade students that

mathematical modeling often leads to differential equations, and that differential

equations are part of an investigation of problems in a wide variety of other fields.

We also emphasize the transportability of mathematical knowledge: once you mas-

ter a particular solution method, you can use it in any field of application in which an

appropriate differential equation arises. Once these points are convincingly made,

we believe that it is unnecessary to provide specific applications of every method

of solution or type of equation that we consider. This helps to keep this book to

a reasonable size, and in any case, there is only a limited time in most differential

equations courses to discuss modeling and applications.

Nonroutine problems often require the use of a variety of tools, both analytical

and numerical. Paper-and-pencil methods must often be combined with effective

use of a computer. Quantitative results and graphs, often produced by a computer,

serve to illustrate and clarify conclusions that may be obscured by complicated ana-

lytical expressions. On the other hand, the implementation of an efficient numerical

procedure typically rests on a good deal of preliminary analysis—to determine the

qualitative features of the solution as a guide to computation, to investigate limit-

ing or special cases, or to discover which ranges of the variables or parameters may

require or merit special attention. Thus, a student should come to realize that investi-

gating a difficult problem may well require both analysis and computation; that good

judgment may be required to determine which tool is best suited for a particular task;

and that results can often be presented in a variety of forms.

We believe that it is important for students to understand that (except perhaps

in courses on differential equations) the goal of solving a differential equation is

seldom simply to obtain the solution. Rather, we seek the solution in order to obtain

insight into the behavior of the process that the equation purports to model. In

other words, the solution is not an end in itself. Thus, we have included in the text

a great many problems, as well as some examples, that call for conclusions to be

drawn about the solution. Sometimes this takes the form of finding the value of the

independent variable at which the solution has a certain property, or determining

the long-term behavior of the solution. Other problems ask for the effect of variations

in a parameter, or for the determination of a critical value of a parameter at which

the solution experiences a substantial change. Such problems are typical of those

that arise in the applications of differential equations, and, depending on the goals

of the course, an instructor has the option of assigning few or many of these problems.

Readers familiar with the preceding edition will observe that the general structure

of the book is unchanged. The revisions that we have made in this edition are in

many cases the result of suggestions from users of earlier editions. The goals are

to improve the clarity and readability of our presentation of basic material about

differential equations and their applications. More specifically, the most important

revisions include the following:

1. Sections 8.5 and 8.6 have been interchanged, so that the more advanced topics appear at

the end of the chapter.

2. Derivations and proofs in several chapters have been expanded or rewritten to provide

more details.

3. The fact that the real and imaginary parts of a complex solution of a real problem are also

solutions now appears as a theorem in Sections 3.2 and 7.4.

4. The treatment of generalized eigenvectors in Section 7.8 has been expanded both in the

text and in the problems.

5. There are about twenty new or revised problems scattered throughout the book.

6. There are new examples in Sections 2.1, 3.8, and 7.5.

7. About a dozen figures have been modified, mainly by using color to make the essen-

tial feature of the figure more prominent. In addition, numerous captions have been

expanded to clarify the purpose of the figure without requiring a search of the

surrounding text.

8. There are several new historical footnotes, and some others have been expanded.

The authors have found differential equations to be a never-ending source of in-

teresting, and sometimes surprising, results and phenomena. We hope that users of

this book, both students and instructors, will share our enthusiasm for the subject.

William E. Boyce

Grafton, New York

March 13, 2012

Supplemental Resources for Instructors and Students

An Instructor’s Solutions Manual, ISBN 978-0-470-45834-1, includes solutions for all

problems not contained in the Student Solutions Manual.

A Student Solutions Manual, ISBN 978-0-470-45833-4, includes solutions for se-

lected problems in the text.

A Book Companion Site, www.wiley.com/college/boyce, provides a wealth of re-

sources for students and instructors, including

• PowerPoint slides of important definitions, examples, and theorems from the book, as well as graphics for presentation in lectures or for study and note taking.

• Chapter Review Sheets, which enable students to test their knowledge of key concepts. For further review, diagnostic feedback is provided that refers to pertinent sections in the text.

• Mathematica, Maple, and MATLAB data files for selected problems in the text providing opportunities for further exploration of important concepts.

• Projects that deal with extended problems normally not included among traditional topics in differential equations, many involving applications from a variety of disciplines. These vary in length and complexity, and they can be assigned as individual homework or as group assignments.

A series of supplemental guidebooks, also published by John Wiley & Sons, can be

used with Boyce/DiPrima in order to incorporate computing technologies into the

course. These books emphasize numerical methods and graphical analysis, showing

how these methods enable us to interpret solutions of ordinary differential equa-

tions (ODEs) in the real world. Separate guidebooks cover each of the three major

mathematical software formats, but the ODE subject matter is the same in each.

• Hunt, Lipsman, Osborn, and Rosenberg, Differential Equations with MATLAB, 3rd ed., 2012, ISBN 978-1-118-37680-5

• Hunt, Lardy, Lipsman, Osborn, and Rosenberg, Differential Equations with Maple, 3rd ed., 2008, ISBN 978-0-471-77317-7

• Hunt, Outing, Lipsman, Osborn, and Rosenberg, Differential Equations with Mathematica, 3rd ed., 2009, ISBN 978-0-471-77316-0

WileyPLUS

WileyPLUS is an innovative, research-based online environment for effective teach-

ing and learning.

WileyPLUS builds students’ confidence because it takes the guesswork out of

studying by providing students with a clear roadmap: what to do, how to do it, if they

did it right. Students will take more initiative so you’ll have greater impact on their

achievement in the classroom and beyond.

WileyPLUS is loaded with all of the supplements above, and it also features

• The E-book, which is an exact version of the print text but also features hyperlinks to questions, definitions, and supplements for quicker and easier support.

• Guided Online (GO) Exercises, which prompt students to build solutions step-by-step. Rather than simply grading an exercise answer as wrong, GO problems show students precisely where they are making a mistake.

• Homework management tools, which enable instructors easily to assign and grade questions, as well as to gauge student comprehension.

• QuickStart pre-designed reading and homework assignments. Use them as is, or customize them to fit the needs of your classroom.

• Interactive Demonstrations, based on figures from the text, which help reinforce and deepen understanding of the key concepts of differential equations. Use them in class or assign them as homework. Worksheets are provided to help guide and structure the experience of mastering these concepts.

ACKNOWLEDGMENTS

It is a pleasure to express my appreciation to the many people who have generously

assisted in various ways in the preparation of this book.

To the individuals listed below, who reviewed the manuscript and/or provided

valuable suggestions for its improvement:

Vincent Bonini, California Polytechnic State University, San Luis Obispo

Fengxin Chen, University of Texas San Antonio

Carmen Chicone, University of Missouri

Matthew Fahy, Northern Arizona University

Isaac Goldbring, University of California at Los Angeles

Anton Gorodetski, University of California Irvine

Mansoor Haider, North Carolina State University

David Handron, Carnegie Mellon University

Thalia D. Jeffres, Wichita State University

Akhtar Khan, Rochester Institute of Technology

Joseph Koebbe, Utah State University

Ilya Kudish, Kettering University

Tong Li, University of Iowa

Wen-Xiu Ma, University of South Florida

Aldo Manfroi, University of Illinois Urbana-Champaign

Will Murray, California State University Long Beach

Harold R. Parks, Oregon State University

William Paulsen, Arkansas State University

Shagi-Di Shih, University of Wyoming

John Starrett, New Mexico Institute of Mining and Technology

David S. Torain II, Hampton University

George Yates, Youngstown State University

Nung Kwan (Aaron) Yip, Purdue University

Yue Zhao, University of Central Florida

To my colleagues and students at Rensselaer, whose suggestions and reactions

through the years have done much to sharpen my knowledge of differential equa-

tions, as well as my ideas on how to present the subject.

To those readers of the preceding edition who called errors or omissions to my

attention.

To Tamas Wiandt (Rochester Institute of Technology), who is primarily responsi-

ble for the revision of the Instructor’s Solutions Manual and the Student Solutions

Manual, and to Charles Haines (Rochester Institute of Technology), who assisted in

this undertaking.

To Tom Polaski (Winthrop University), who checked the answers in the back of

the text and the Instructor’s Solutions Manual for accuracy.

To David Ryeburn (Simon Fraser University), who carefully checked the entire

manuscript and page proofs at least four times and is responsible for many corrections

and clarifications.

To Douglas Meade (University of South Carolina), who gave indispensable assis-

tance in a variety of ways: by reading the entire manuscript at an early stage and

offering numerous suggestions; by materially assisting in expanding the historical

footnotes and updating the references; and by assuming the primary responsibility

for checking the accuracy of the page proofs.

To the editorial and production staff of John Wiley & Sons, who have always been

ready to offer assistance and have displayed the highest standards of professionalism.

Finally, and most important, to my wife Elsa for discussing questions both math-

ematical and stylistic, and above all for her unfailing support and encouragement

during the revision process. In a very real sense, this book is a joint product.

William E. Boyce

CONTENTS

Chapter 1 Introduction 1

1.1 Some Basic Mathematical Models; Direction Fields 1

1.2 Solutions of Some Differential Equations 10

1.3 Classification of Differential Equations 19

1.4 Historical Remarks 26

Chapter 2

First Order Differential Equations 31

2.1 Linear Equations; Method of Integrating Factors 31

2.2 Separable Equations 42

2.3 Modeling with First Order Equations 51

2.4 Differences Between Linear and Nonlinear Equations 68

2.5 Autonomous Equations and Population Dynamics 78

2.6 Exact Equations and Integrating Factors 95

2.7 Numerical Approximations: Euler’s Method 102

2.8 The Existence and Uniqueness Theorem 112

2.9 First Order Difference Equations 122

Chapter 3

Second Order Linear Equations 137

3.1 Homogeneous Equations with Constant Coefficients 137

3.2 Solutions of Linear Homogeneous Equations; the Wronskian 145

3.3 Complex Roots of the Characteristic Equation 158

3.4 Repeated Roots; Reduction of Order 167

3.5 Nonhomogeneous Equations; Method of Undetermined Coefficients 175

3.6 Variation of Parameters 186

3.7 Mechanical and Electrical Vibrations 192

3.8 Forced Vibrations 207

Chapter 4

Higher Order Linear Equations 221

4.1 General Theory of nth Order Linear Equations 221

4.2 Homogeneous Equations with Constant Coefficients 228

4.3 The Method of Undetermined Coefficients 236

4.4 The Method of Variation of Parameters 241

Chapter 5

Series Solutions of Second Order Linear Equations 247

5.1 Review of Power Series 247

5.2 Series Solutions Near an Ordinary Point, Part I 254

July 19, 2012 22:53 ftoc Sheet number 2 Page number xviii cyan black

xviii Contents

5.3 Series Solutions Near an Ordinary Point, Part II 265

5.4 Euler Equations; Regular Singular Points 272

5.5 Series Solutions Near a Regular Singular Point, Part I 282

5.6 Series Solutions Near a Regular Singular Point, Part II 288

5.7 Bessel’s Equation 296

Chapter 6

The Laplace Transform 309

6.1 Definition of the Laplace Transform 309

6.2 Solution of Initial Value Problems 317

6.3 Step Functions 327

6.4 Differential Equations with Discontinuous Forcing Functions 336

6.5 Impulse Functions 343

6.6 The Convolution Integral 350

Chapter 7

Systems of First Order Linear Equations 359

7.1 Introduction 359

7.2 Review of Matrices 368

7.3 Systems of Linear Algebraic Equations; Linear Independence, Eigenvalues,

Eigenvectors 378

7.4 Basic Theory of Systems of First Order Linear Equations 390

7.5 Homogeneous Linear Systems with Constant Coefficients 396

7.6 Complex Eigenvalues 408

7.7 Fundamental Matrices 421

7.8 Repeated Eigenvalues 429

7.9 Nonhomogeneous Linear Systems 440

Chapter 8

Numerical Methods 451

8.1 The Euler or Tangent Line Method 451

8.2 Improvements on the Euler Method 462

8.3 The Runge–Kutta Method 468

8.4 Multistep Methods 472

8.5 Systems of First Order Equations 478

8.6 More on Errors; Stability 482

Chapter 9

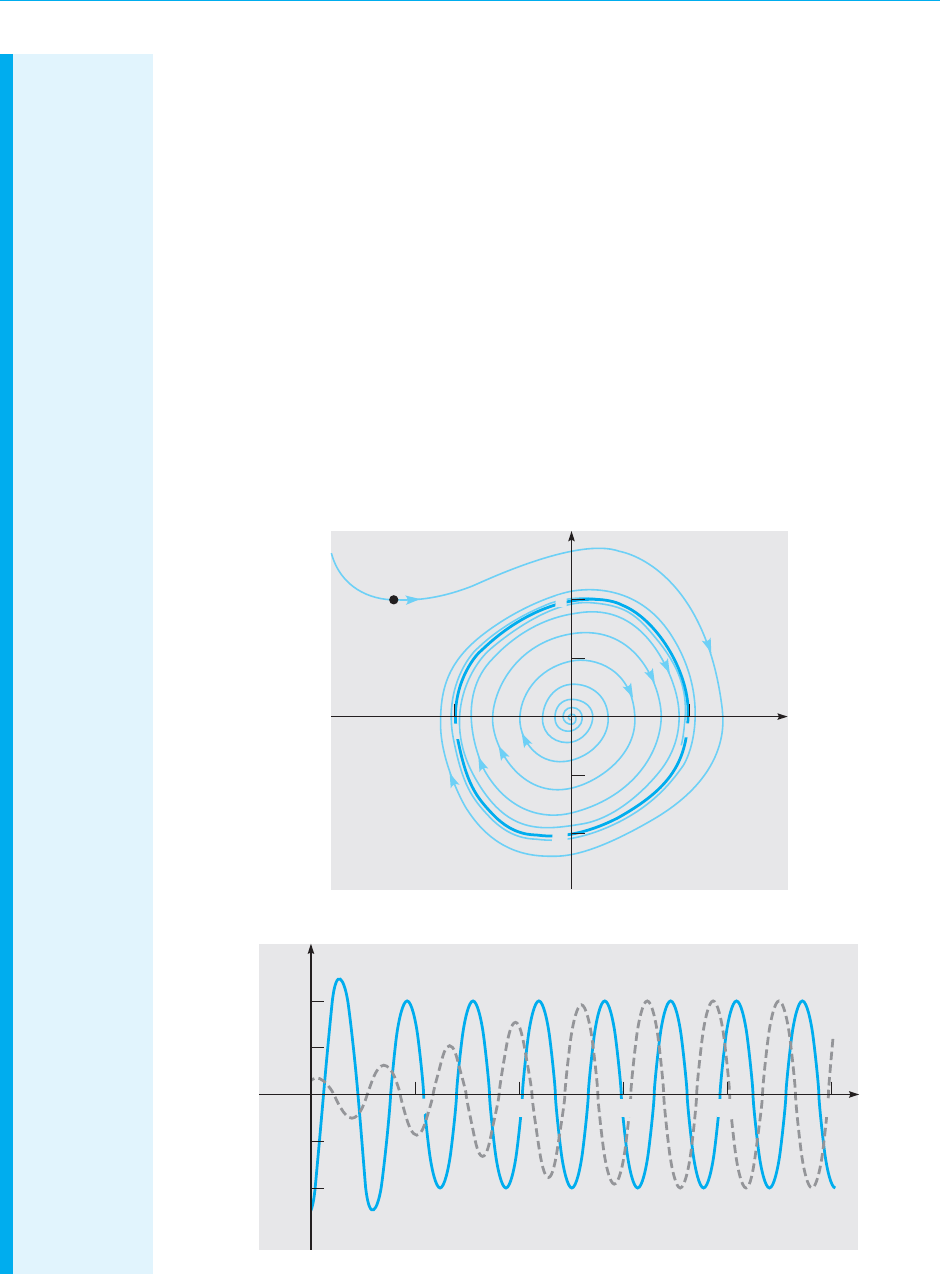

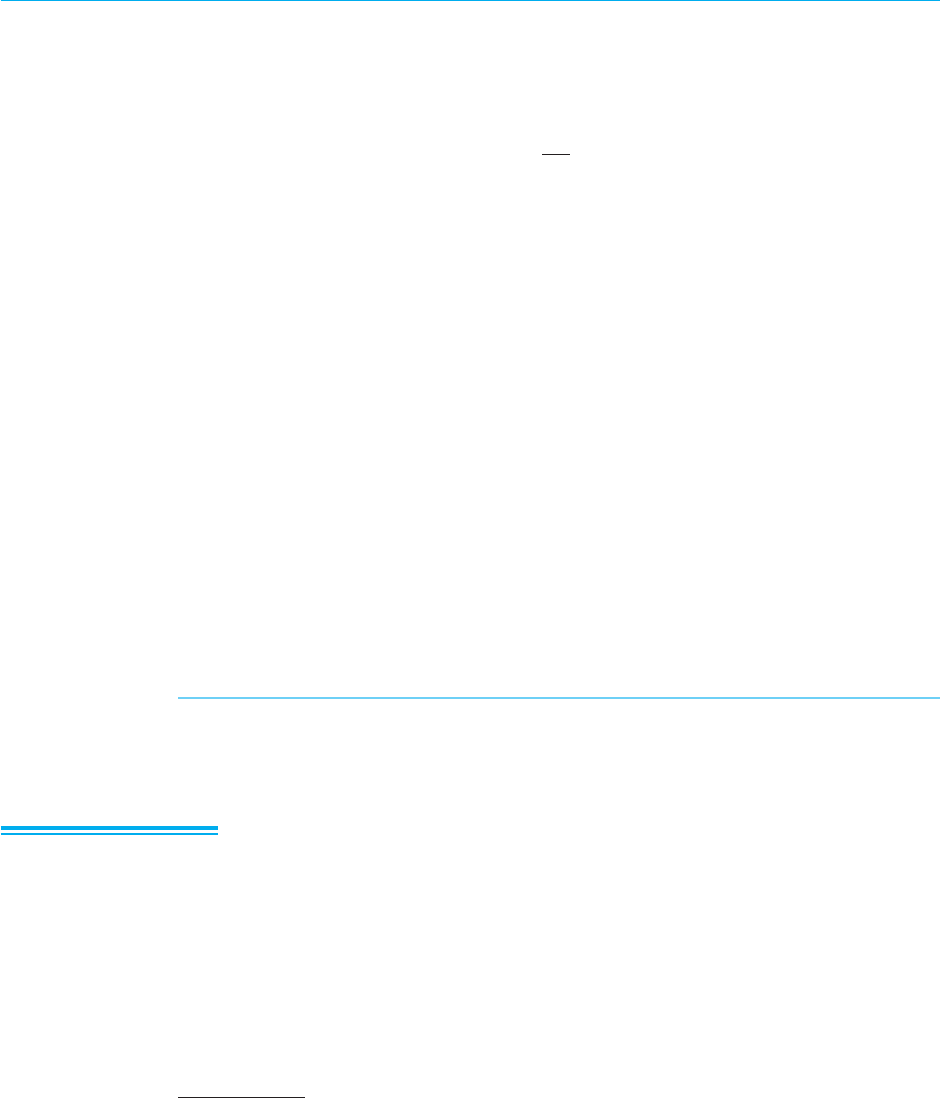

Nonlinear Differential Equations and Stability 495

9.1 The Phase Plane: Linear Systems 495

9.2 Autonomous Systems and Stability 508

9.3 Locally Linear Systems 519

9.4 Competing Species 531

9.5 Predator–Prey Equations 544

July 19, 2012 22:53 ftoc Sheet number 3 Page number xix cyan black

Contents xix

9.6 Liapunov’s Second Method 554

9.7 Periodic Solutions and Limit Cycles 565

9.8 Chaos and Strange Attractors: The Lorenz Equations 577

Chapter 10

Partial Differential Equations and Fourier Series 589

10.1 Two-Point Boundary Value Problems 589

10.2 Fourier Series 596

10.3 The Fourier Convergence Theorem 607

10.4 Even and Odd Functions 614

10.5 Separation of Variables; Heat Conduction in a Rod 623

10.6 Other Heat Conduction Problems 632

10.7 The Wave Equation: Vibrations of an Elastic String 643

10.8 Laplace’s Equation 658

Appendix A Derivation of the Heat Conduction Equation 669

Appendix B Derivation of the Wave Equation 673

Chapter 11

Boundary Value Problems and Sturm–Liouville Theory 677

11.1 The Occurrence of Two-Point Boundary Value Problems 677

11.2 Sturm–Liouville Boundary Value Problems 685

11.3 Nonhomogeneous Boundary Value Problems 699

11.4 Singular Sturm–Liouville Problems 714

11.5 Further Remarks on the Method of Separation of Variables: A Bessel

Series Expansion 721

11.6 Series of Orthogonal Functions: Mean Convergence 728

Answers to Problems 739

Index 799

CHAPTER 1

Introduction

In this chapter we give perspective to your study of differential equations in several

different ways. First, we use two problems to illustrate some of the basic ideas that we

will return to, and elaborate upon, frequently throughout the remainder of the book.

Later, to provide organizational structure for the book, we indicate several ways

of classifying differential equations. Finally, we outline some of the major trends

in the historical development of the subject and mention a few of the outstanding

mathematicians who have contributed to it. The study of differential equations has

attracted the attention of many of the world’s greatest mathematicians during the

past three centuries. Nevertheless, it remains a dynamic field of inquiry today, with

many interesting open questions.

1.1 Some Basic Mathematical Models; Direction Fields

Before embarking on a serious study of differential equations (for example, by read-

ing this book or major portions of it), you should have some idea of the possible

benefits to be gained by doing so. For some students the intrinsic interest of the

subject itself is enough motivation, but for most it is the likelihood of important

applications to other fields that makes the undertaking worthwhile.

Many of the principles, or laws, underlying the behavior of the natural world are

statements or relations involving rates at which things happen. When expressed

in mathematical terms, the relations are equations and the rates are derivatives.

Equations containing derivatives are differential equations. Therefore, to understand

and to investigate problems involving the motion of fluids, the flow of current in elec-

tric circuits, the dissipation of heat in solid objects, the propagation and detection of

seismic waves, or the increase or decrease of populations, among many others, it is

necessary to know something about differential equations.

A differential equation that describes some physical process is often called a math-

ematical model of the process, and many such models are discussed throughout this

book. In this section we begin with two models leading to equations that are easy to

solve. It is noteworthy that even the simplest differential equations provide useful

models of important physical processes.

EXAMPLE 1

A Falling Object

Suppose that an object is falling in the atmosphere near sea level. Formulate a differential

equation that describes the motion.

We begin by introducing letters to represent various quantities that may be of interest in this

problem. The motion takes place during a certain time interval, so let us use t to denote time.

Also, let us use v to represent the velocity of the falling object. The velocity will presumably

change with time, so we think of v as a function of t; in other words, t is the independent

variable and v is the dependent variable. The choice of units of measurement is somewhat

arbitrary, and there is nothing in the statement of the problem to suggest appropriate units,

so we are free to make any choice that seems reasonable. To be specific, let us measure time

t in seconds and velocity v in meters/second. Further, we will assume that v is positive in the

downward direction—that is, when the object is falling.

The physical law that governs the motion of objects is Newton’s second law, which states

that the mass of the object times its acceleration is equal to the net force on the object. In

mathematical terms this law is expressed by the equation

F = ma, (1)

where m is the mass of the object, a is its acceleration, and F is the net force exerted on the

object. To keep our units consistent, we will measure m in kilograms, a in meters/second², and
F in newtons. Of course, a is related to v by a = dv/dt, so we can rewrite Eq. (1) in the form

F = m(dv/dt). (2)

Next, consider the forces that act on the object as it falls. Gravity exerts a force equal to

the weight of the object, or mg, where g is the acceleration due to gravity. In the units we have

chosen, g has been determined experimentally to be approximately equal to 9.8 m/s² near
the earth’s surface. There is also a force due to air resistance, or drag, that is more difficult to

model. This is not the place for an extended discussion of the drag force; suffice it to say that it

is often assumed that the drag is proportional to the velocity, and we will make that assumption
here. Thus the drag force has the magnitude γv, where γ is a constant called the drag coefficient.

The numerical value of the drag coefficient varies widely from one object to another; smooth

streamlined objects have much smaller drag coefficients than rough blunt ones. The physical

units for γ are mass/time, or kg/s for this problem; if these units seem peculiar, remember that

γv must have the units of force, namely, kg·m/s².

In writing an expression for the net force F , we need to remember that gravity always acts

in the downward (positive) direction, whereas, for a falling object, drag acts in the upward

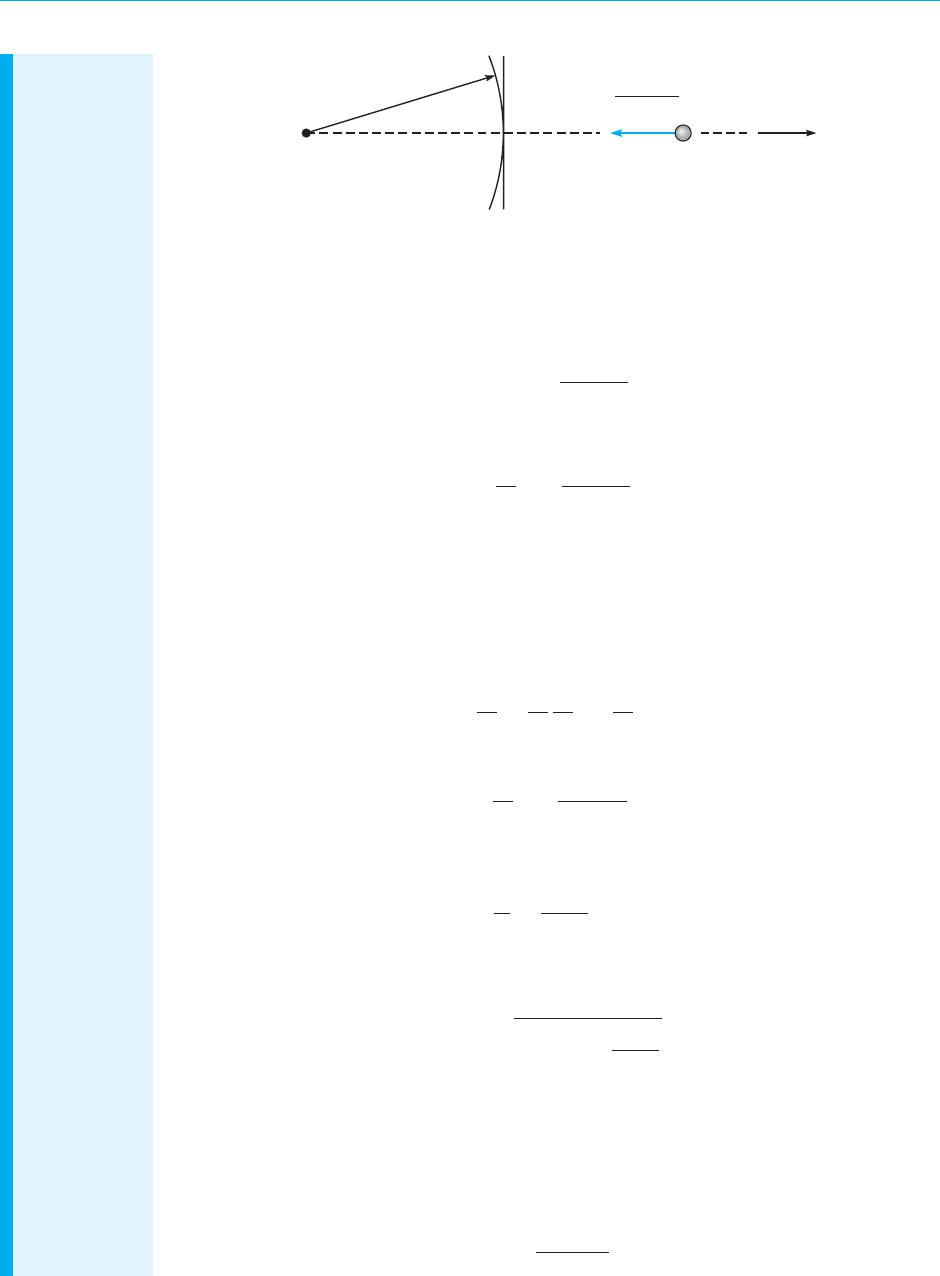

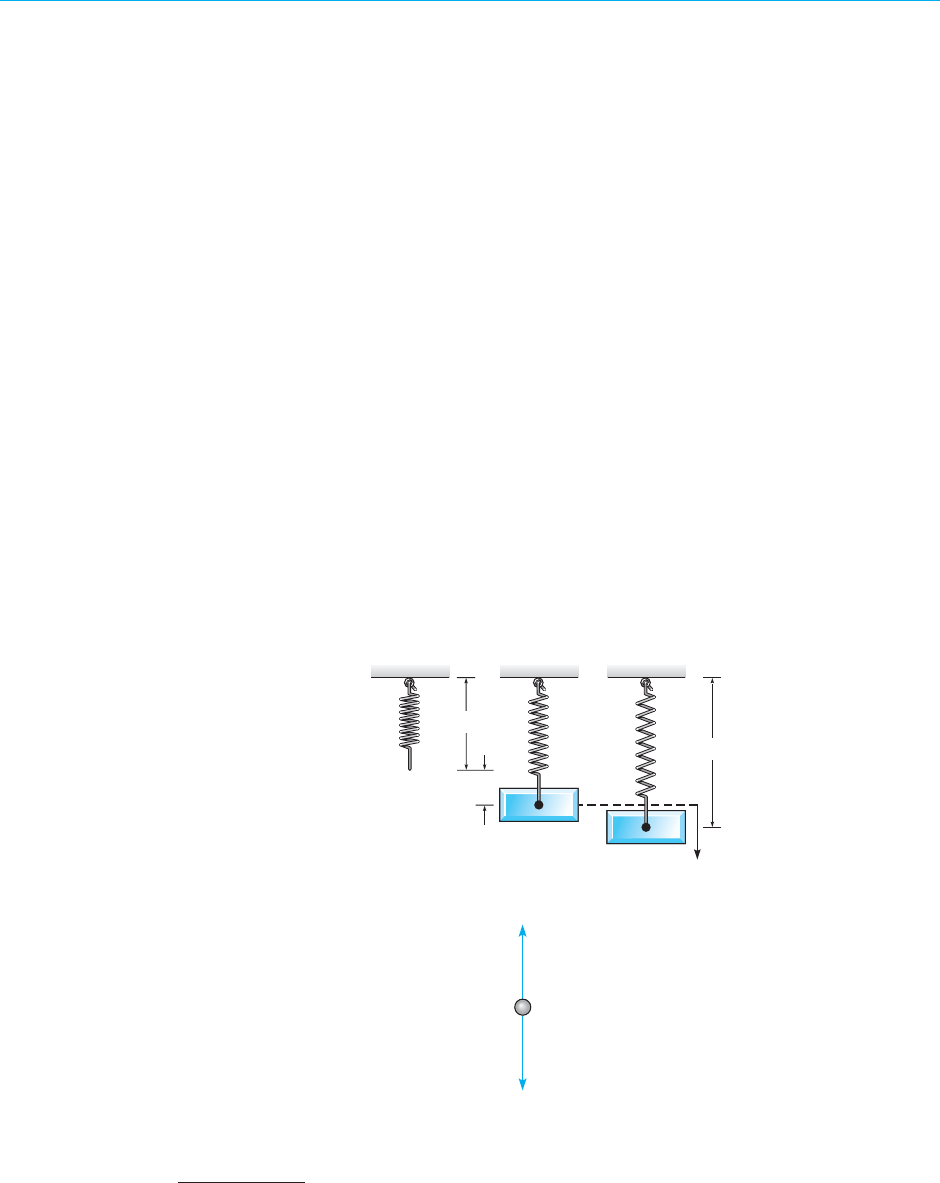

(negative) direction, as shown in Figure 1.1.1. Thus

F = mg − γv (3)

and Eq. (2) then becomes

$$m\,\frac{dv}{dt} = mg - \gamma v. \tag{4}$$

Equation (4) is a mathematical model of an object falling in the atmosphere near sea level.

Note that the model contains the three constants m, g, and γ. The constants m and γ depend


very much on the particular object that is falling, and they are usually different for different

objects. It is common to refer to them as parameters, since they may take on a range of values

during the course of an experiment. On the other hand, g is a physical constant, whose value

is the same for all objects.

γ υ

m

mg

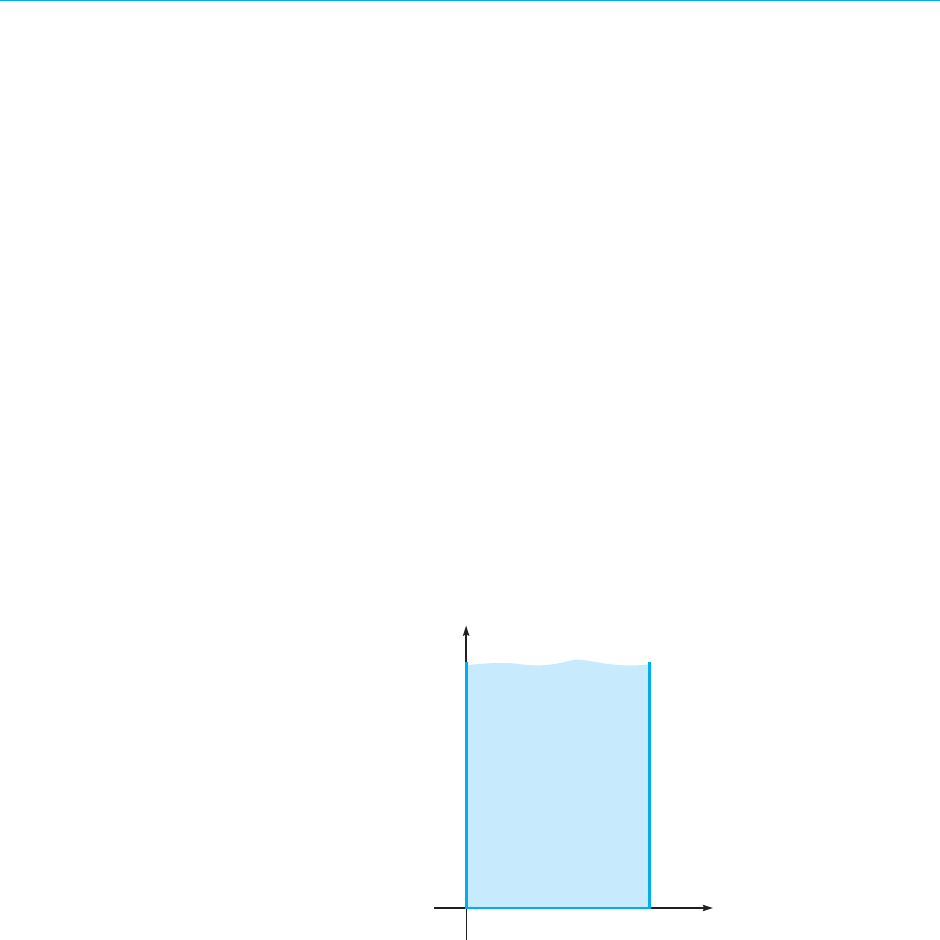

FIGURE 1.1.1 Free-body diagram of the forces on a falling object.

To solve Eq. (4), we need to find a function v = v(t) that satisfies the equation. It

is not hard to do this, and we will show you how in the next section. For the present,

however, let us see what we can learn about solutions without actually finding any of

them. Our task is simplified slightly if we assign numerical values to m and γ, but the

procedure is the same regardless of which values we choose. So, let us suppose that

m = 10 kg and γ = 2 kg/s. Then Eq. (4) can be rewritten as

$$\frac{dv}{dt} = 9.8 - \frac{v}{5}. \tag{5}$$

EXAMPLE 2. A Falling Object (continued)

Investigate the behavior of solutions of Eq. (5) without solving the differential equation.

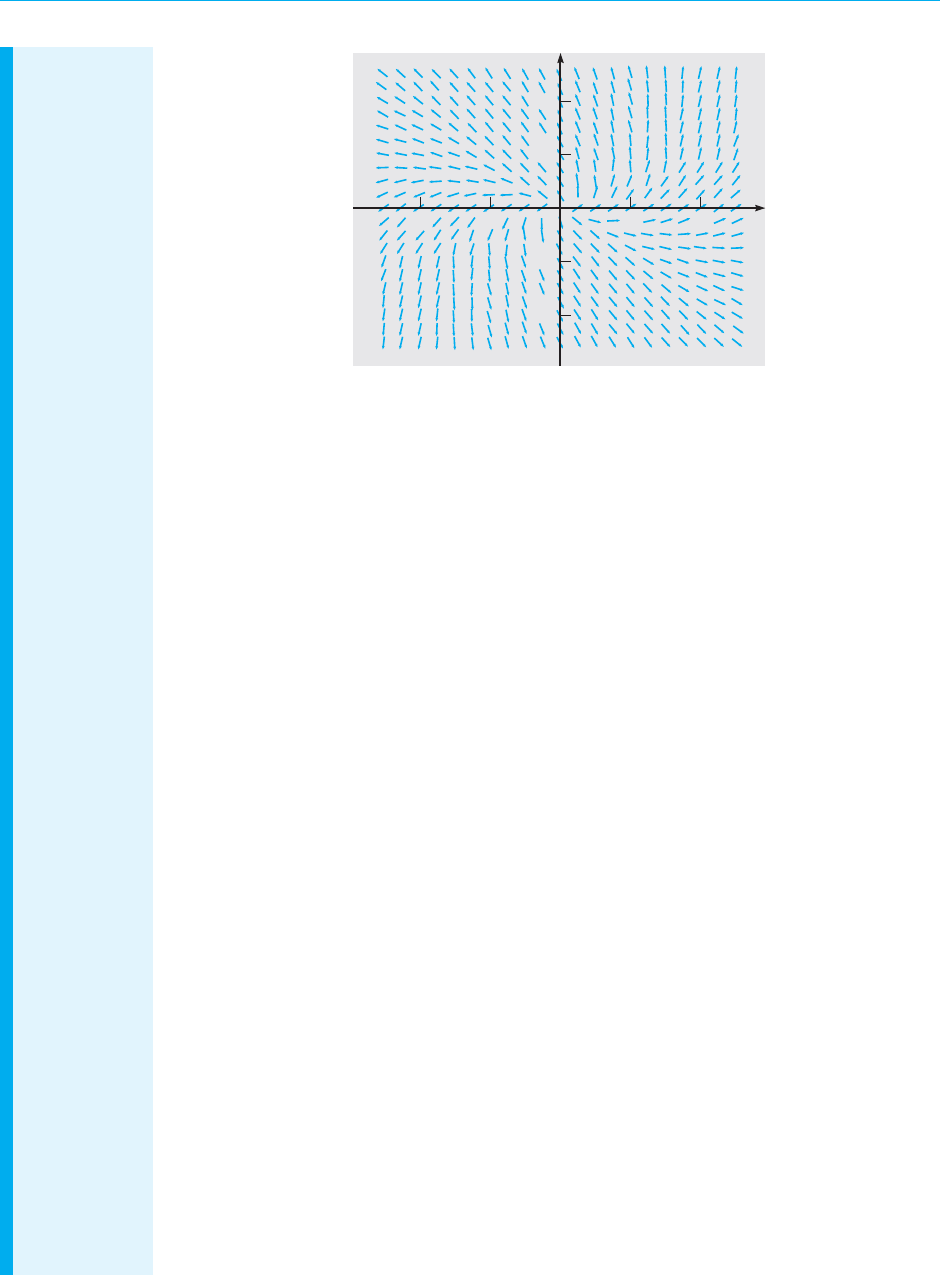

First let us consider what information canbe obtained directlyfrom the differentialequation

itself. Suppose that the velocity v has a certain given value.Then, by evaluating the right side of

Eq. (5),we can find the corresponding value of d v/dt. For instance,if v = 40,then dv/dt = 1.8.

This means that the slope of a solution v = v(t) has the value 1.8 at any point where v = 40.

We can display this information graphically in the tv-plane by drawing short line segments

with slope 1.8 at several points on the line v = 40. Similarly, if v = 50, then dv/dt =−0.2, so

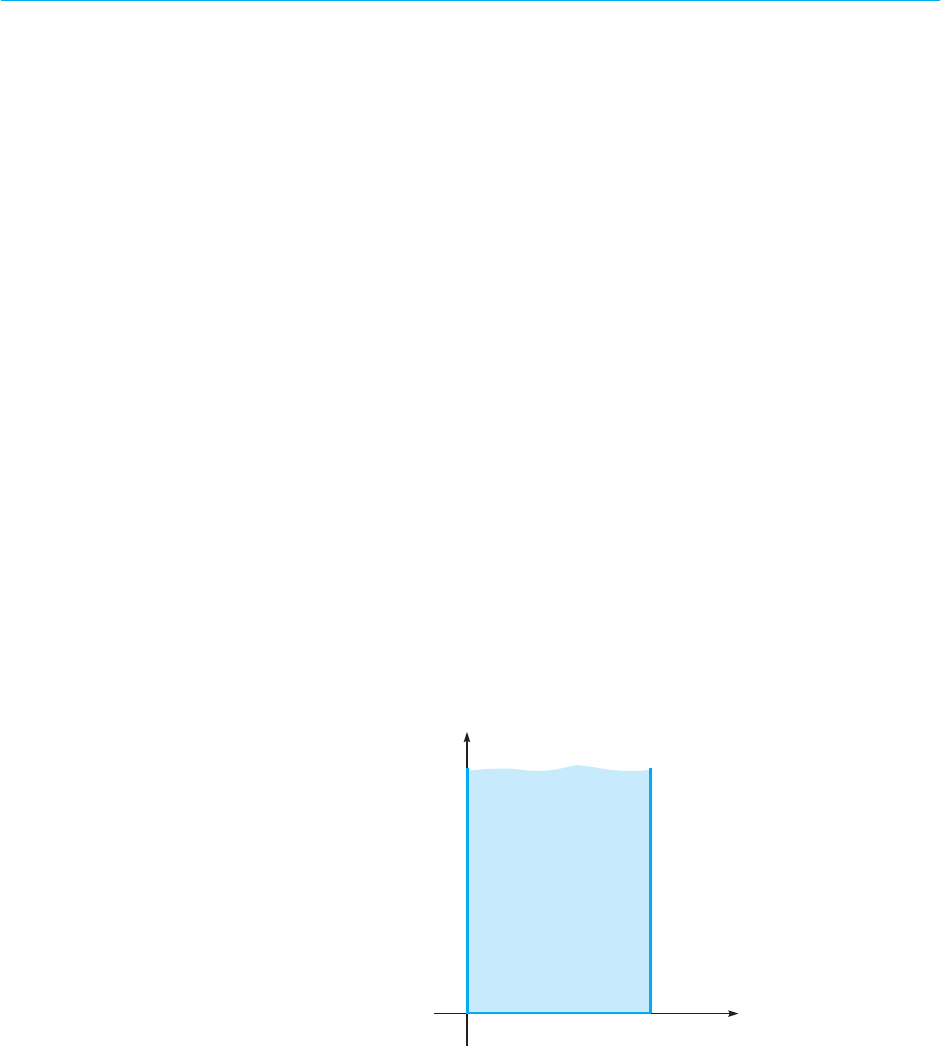

we draw line segments with slope −0.2 at several points on the line v = 50. We obtain Figure

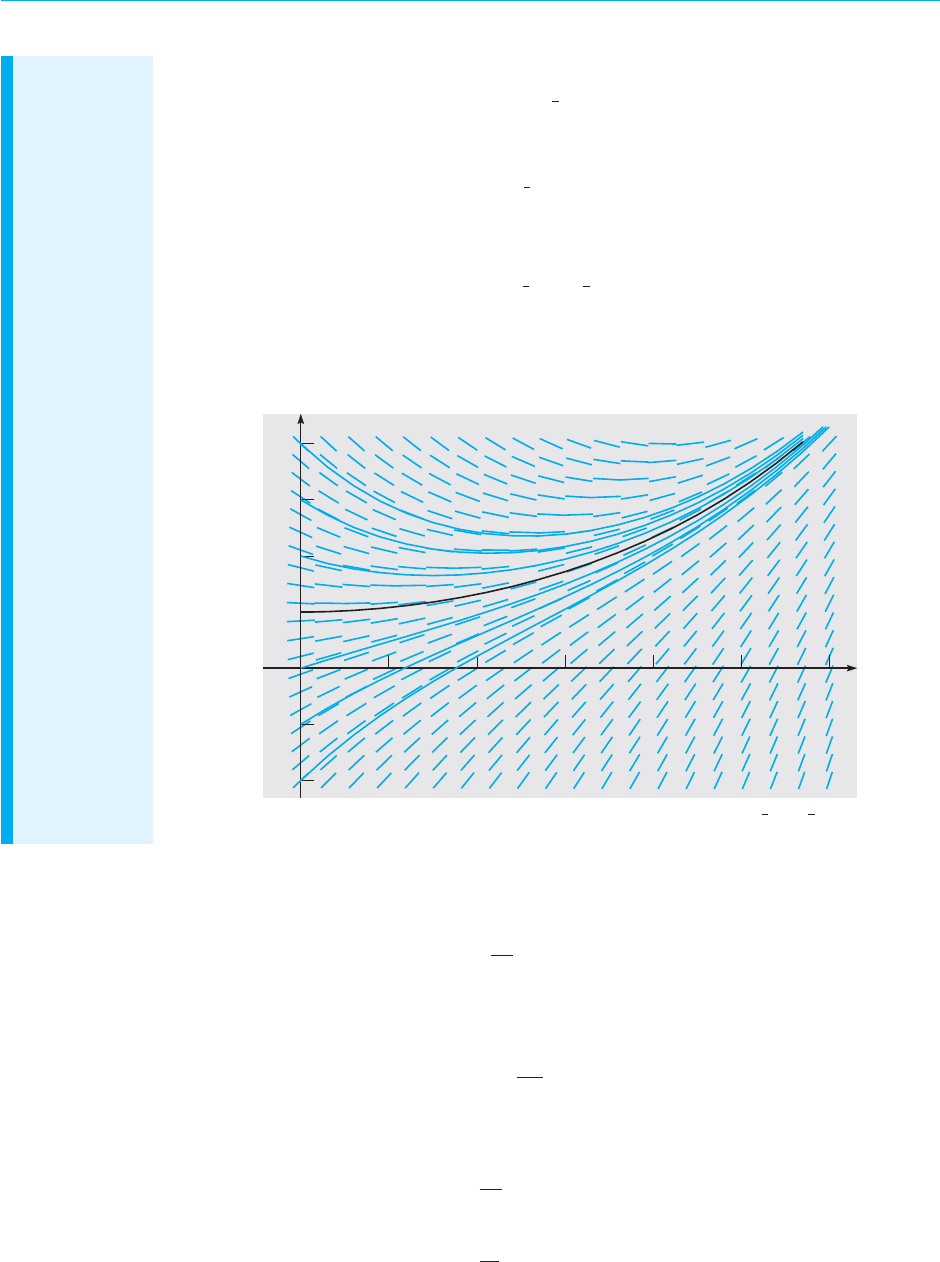

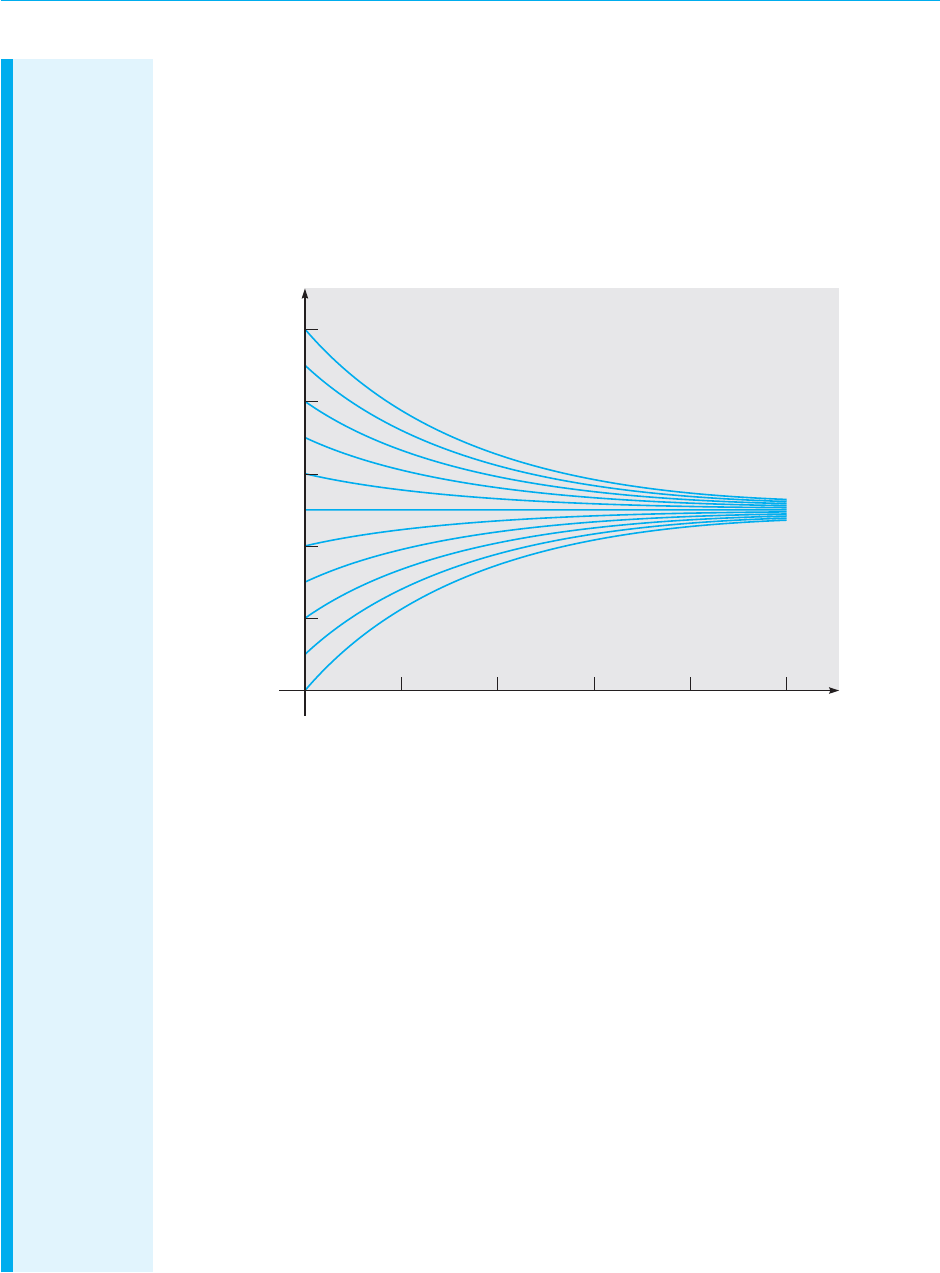

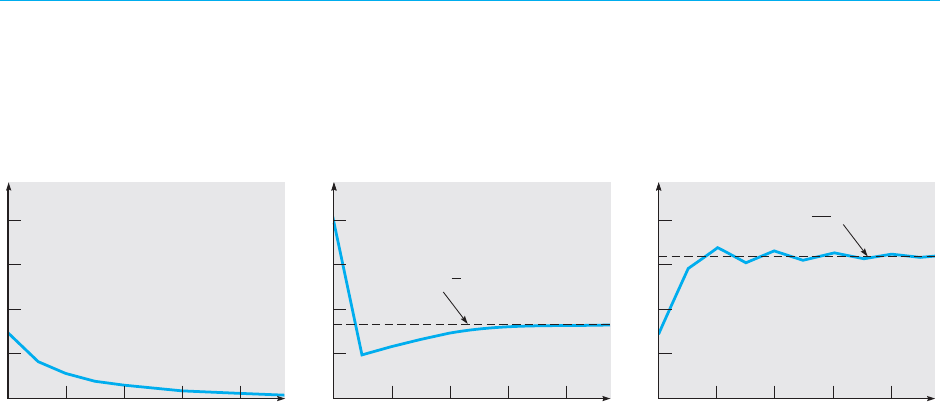

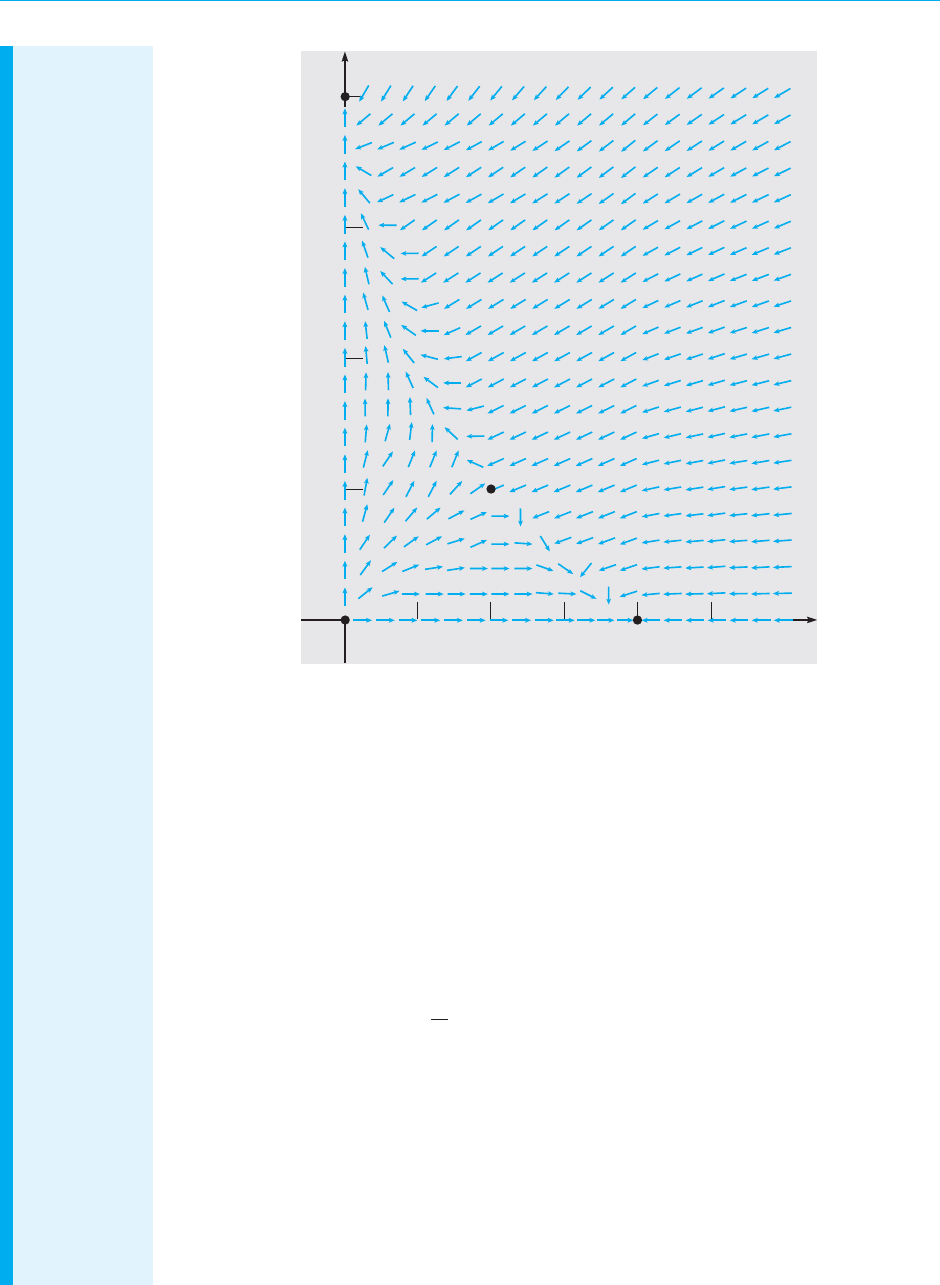

1.1.2 by proceeding in the same way with other values of v. Figure 1.1.2 is an example of what

is called a direction field or sometimes a slope field.
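The slope calculation just described is easy to tabulate. The following is a minimal sketch in Python; the function name `slope` and the particular list of v values are illustrative choices, not part of the text.

```python
def slope(v):
    """Right side of Eq. (5): dv/dt = 9.8 - v/5 (v in m/s, result in m/s^2)."""
    return 9.8 - v / 5


# Slopes of the line segments drawn along several horizontal lines v = constant.
for v in (40, 44, 48, 49, 50, 52, 56, 60):
    print(f"v = {v:2d}  ->  dv/dt = {slope(v):+.1f}")
```

Because the right side of Eq. (5) does not involve t, every segment on a given horizontal line v = constant has the same slope.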

Remember that a solution of Eq. (5) is a function v = v(t) whose graph is a curve in the

tv-plane. The importance of Figure 1.1.2 is that each line segment is a tangent line to one

of these solution curves. Thus, even though we have not found any solutions, and no graphs of

solutions appear in the figure, we can nonetheless draw some qualitative conclusions about

the behavior of solutions. For instance, if v is less than a certain critical value, then all the line

segments have positive slopes, and the speed of the falling object increases as it falls. On the

other hand, if v is greater than the critical value, then the line segments have negative slopes,

and the falling object slows down as it falls. What is this critical value of v that separates objects
whose speed is increasing from those whose speed is decreasing? Referring again to Eq. (5),
we ask what value of v will cause dv/dt to be zero. The answer is v = (5)(9.8) = 49 m/s.

In fact, the constant function v(t) = 49 is a solution of Eq. (5). To verify this statement,
substitute v(t) = 49 into Eq. (5) and observe that each side of the equation is zero. Because
it does not change with time, the solution v(t) = 49 is called an equilibrium solution. It is

the solution that corresponds to a perfect balance between gravity and drag. In Figure 1.1.3


we show the equilibrium solution v(t) = 49 superimposed on the direction field. From this

figure we can draw another conclusion, namely, that all other solutions seem to be converging

to the equilibrium solution as t increases. Thus, in this context, the equilibrium solution is often

called the terminal velocity.

FIGURE 1.1.2 A direction field for Eq. (5): dv/dt = 9.8 − (v/5). (Horizontal axis: t; vertical axis: v.)

FIGURE 1.1.3 Direction field and equilibrium solution for Eq. (5): dv/dt = 9.8 − (v/5). (Horizontal axis: t; vertical axis: v.)

The approach illustrated in Example 2 can be applied equally well to the more

general Eq. (4), where the parameters m and γ are unspecified positive numbers.

The results are essentially identical to those of Example 2. The equilibrium solution

of Eq. (4) is v(t) = mg/γ. Solutions below the equilibrium solution increase with time,

those above it decrease with time, and all other solutions approach the equilibrium

solution as t becomes large.


Direction Fields. Direction fields are valuable tools in studying the solutions of

differential equations of the form

$$\frac{dy}{dt} = f(t, y), \tag{6}$$

where f is a given function of the two variables t and y, sometimes referred to as the

rate function. A direction field for equations of the form (6) can be constructed by

evaluating f at each point of a rectangular grid. At each point of the grid, a short line

segment is drawn whose slope is the value of f at that point. Thus each line segment

is tangent to the graph of the solution passing through that point. A direction field

drawn on a fairly fine grid gives a good picture of the overall behavior of solutions of

a differential equation. Usually a grid consisting of a few hundred points is sufficient.

The construction of a direction field is often a useful first step in the investigation of

a differential equation.

Two observations are worth particular mention. First, in constructing a direction

field, we do not have to solve Eq. (6); we just have to evaluate the given function

f(t, y) many times. Thus direction fields can be readily constructed even for equations

that may be quite difficult to solve. Second, repeated evaluation of a given function

is a task for which a computer is well suited, and you should usually use a computer

to draw a direction field. All the direction fields shown in this book, such as the one

in Figure 1.1.2, were computer-generated.
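As one illustration of how a computer might carry out this construction, here is a minimal sketch in Python. The function name `direction_field`, its parameters, and the 20 × 20 grid are assumptions made for this example; it only computes the endpoints of the line segments, which could then be passed to any plotting tool.

```python
import math


def direction_field(f, t_range, y_range, n_t=20, n_y=20, length=0.2):
    """Return segments ((t0, y0), (t1, y1)) for dy/dt = f(t, y).

    Each segment is centered on a point of a rectangular grid and has
    slope f(t, y) at that point. `length` is measured in data units.
    """
    t_min, t_max = t_range
    y_min, y_max = y_range
    segments = []
    for i in range(n_t):
        t = t_min + i * (t_max - t_min) / (n_t - 1)
        for j in range(n_y):
            y = y_min + j * (y_max - y_min) / (n_y - 1)
            s = f(t, y)                   # slope at the grid point
            norm = math.hypot(1.0, s)     # length of the direction (1, s)
            dt = 0.5 * length / norm      # half-segment offsets
            dy = 0.5 * length * s / norm
            segments.append(((t - dt, y - dy), (t + dt, y + dy)))
    return segments


# Eq. (5) on the window 0 <= t <= 10, 40 <= v <= 60.
segments = direction_field(lambda t, v: 9.8 - v / 5, (0, 10), (40, 60))
print(len(segments))  # 400 grid points, i.e. "a few hundred"
```

Note that the function never solves the differential equation; it only evaluates f once per grid point, which is the first observation made above.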

Field Mice and Owls. Now let us look at another, quite different example. Consider

a population of field mice who inhabit a certain rural area. In the absence of

predators we assume that the mouse population increases at a rate proportional

to the current population. This assumption is not a well-established physical law

(as Newton’s law of motion is in Example 1), but it is a common initial hypothesis¹
in a study of population growth. If we denote time by t and the mouse population by
p(t), then the assumption about population growth can be expressed by the equation

$$\frac{dp}{dt} = rp, \tag{7}$$

where the proportionality factor r is called the rate constant or growth rate. To be
specific, suppose that time is measured in months and that the rate constant r has the
value 0.5/month. Then each term in Eq. (7) has the units of mice/month.

Now let us add to the problem by supposing that several owls live in the same

neighborhood and that they kill 15 field mice per day. To incorporate this information

into the model, we must add another term to the differential equation (7), so that it

becomes

$$\frac{dp}{dt} = 0.5p - 450. \tag{8}$$

Observe that the predation term is −450 rather than −15 because time is measured
in months, so the monthly predation rate is needed: 15 mice/day × 30 days/month = 450 mice/month, taking a month to be 30 days.

¹ A better model of population growth is discussed in Section 2.5.


EXAMPLE

3

Investigate the solutions of Eq. (8) graphically.

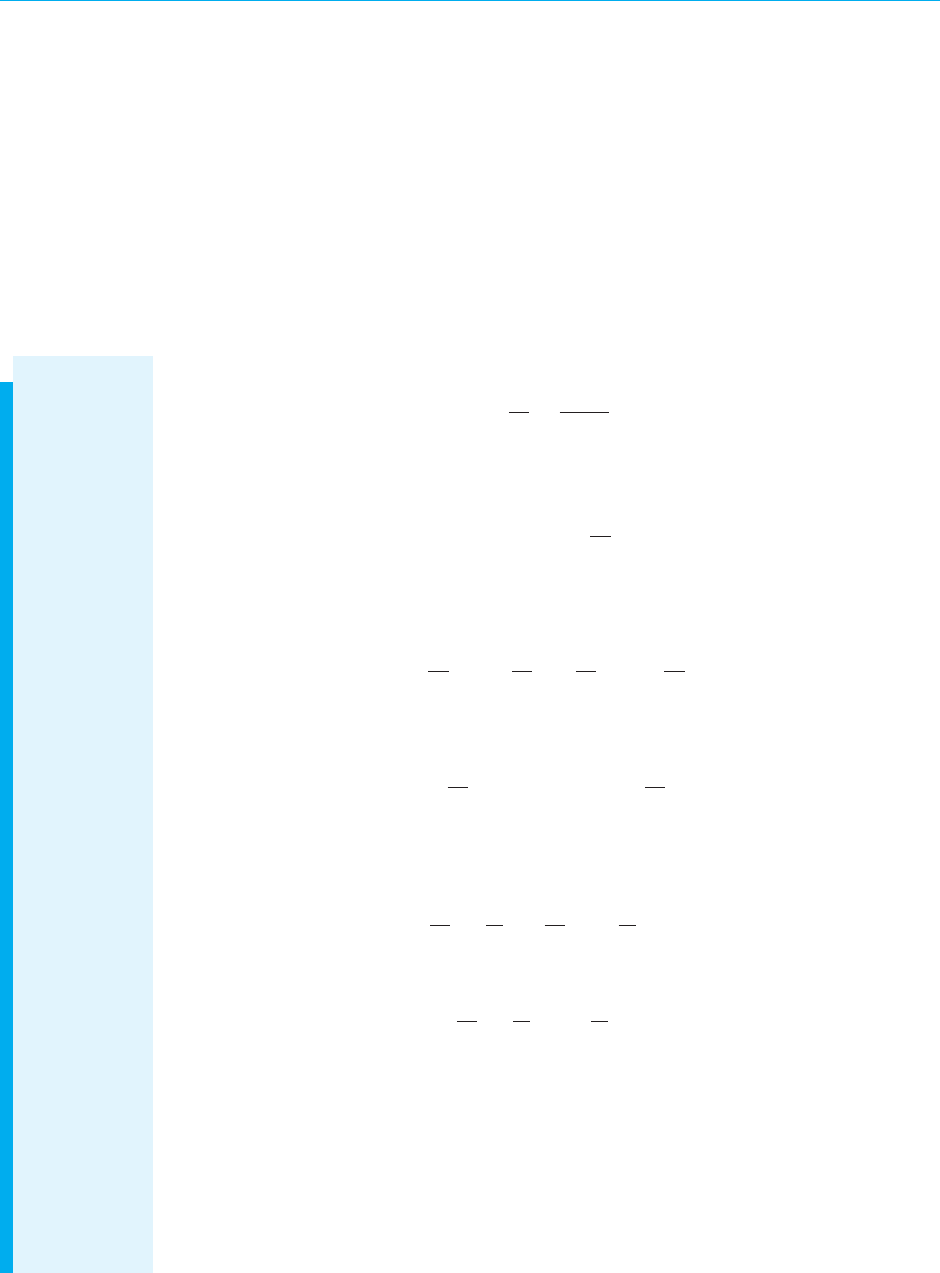

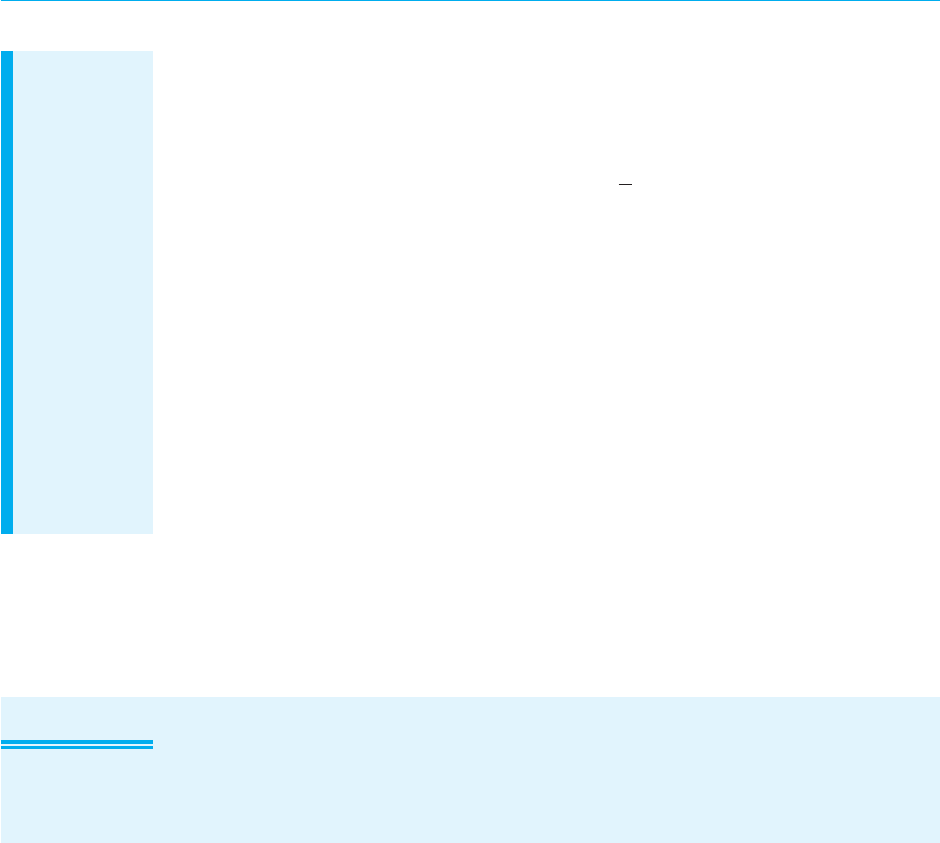

A direction field for Eq. (8) is shown in Figure 1.1.4. For sufficiently large values of p it can

be seen from the figure, or directly from Eq. (8) itself, that dp/dt is positive, so that solutions

increase. On the other hand, if p is small, then dp/dt is negative and solutions decrease. Again,

the critical value of p that separates solutions that increase from those that decrease is the

value of p for which dp/dt is zero. By setting dp/dt equal to zero in Eq. (8) and then solving

for p, we find the equilibrium solution p(t) = 900, for which the growth term and the predation

term in Eq. (8) are exactly balanced. The equilibrium solution is also shown in Figure 1.1.4.

FIGURE 1.1.4 Direction field and equilibrium solution for Eq. (8): dp/dt = 0.5p − 450. (Horizontal axis: t; vertical axis: p.)

Comparing Examples 2 and 3, we note that in both cases the equilibrium solution

separates increasing from decreasing solutions. In Example 2 other solutions converge
to, or are attracted by, the equilibrium solution, so that after the object falls far

enough, an observer will see it moving at very nearly the equilibrium velocity. On

the other hand, in Example 3 other solutions diverge from, or are repelled by, the

equilibrium solution. Solutions behave very differently depending on whether they

start above or below the equilibrium solution. As time passes, an observer might see

populations either much larger or much smaller than the equilibrium population, but

the equilibrium solution itself will not, in practice, be observed. In both problems,

however, the equilibrium solution is very important in understanding how solutions

of the given differential equation behave.

A more general version of Eq. (8) is

$$\frac{dp}{dt} = rp - k, \tag{9}$$

where the growth rate r and the predation rate k are unspecified. Solutions of this

more general equation are very similar to those of Eq. (8). The equilibrium solution

of Eq. (9) is p(t) = k/r. Solutions above the equilibrium solution increase, while

those below it decrease.
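The comparison between the two examples can be summarized in a few lines of Python. This is only a sketch: the helper names `equilibrium` and `is_attracting` are invented for illustration, and allowing r to be negative (so that the falling-object equation fits the same form) is an assumption that goes slightly beyond Eq. (9) as stated. The attraction test simply reads off the sign of rp − k on either side of p = k/r.

```python
def equilibrium(r, k):
    """Equilibrium of dp/dt = r*p - k, found by setting dp/dt = 0 (needs r != 0)."""
    return k / r


def is_attracting(r):
    """True if nearby solutions move toward the equilibrium.

    Above p = k/r the sign of r*p - k is the sign of r, so solutions
    there decrease (move back toward k/r) only when r < 0.
    """
    return r < 0


# Field mice, Eq. (8): r = 0.5, k = 450.
print(equilibrium(0.5, 450), is_attracting(0.5))

# Falling object, Eq. (5), rewritten as dv/dt = (-1/5) v - (-9.8).
print(equilibrium(-1 / 5, -9.8), is_attracting(-1 / 5))
```

The first call reports an equilibrium of 900 mice that repels other solutions; the second reports an equilibrium of about 49 m/s that attracts them, in agreement with Examples 2 and 3.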

You should keep in mind that both of the models discussed in this section have

their limitations. The model (5) of the falling object is valid only as long as the


object is falling freely, without encountering any obstacles. The population model

(8) eventually predicts negative numbers of mice (if p < 900) or enormously large
numbers (if p > 900). Both of these predictions are unrealistic, so this model becomes

unacceptable after a fairly short time interval.

Constructing Mathematical Models. In applying differential equations to any of the

numerous fields in which they are useful, it is necessary first to formulate the appropriate
differential equation that describes, or models, the problem being investigated.

In this section we have looked at two examples of this modeling process, one drawn

from physics and the other from ecology. In constructing future mathematical models
yourself, you should recognize that each problem is different, and that successful

modeling cannot be reduced to the observance of a set of prescribed rules. Indeed,

constructing a satisfactory model is sometimes the most difficult part of the problem.

Nevertheless, it may be helpful to list some steps that are often part of the process:

1. Identify the independent and dependent variables and assign letters to represent them.

Often the independent variable is time.

2. Choose the units of measurement for each variable. In a sense the choice of units is

arbitrary, but some choices may be much more convenient than others. For example, we

chose to measure time in seconds for the falling-object problem and in months for the

population problem.

3. Articulate the basic principle that underlies or governs the problem you are investigating.

This may be a widely recognized physical law, such as Newton’s law of motion, or it may be

a more speculative assumption that may be based on your own experience or observations.

In any case, this step is likely not to be a purely mathematical one, but will require you to

be familiar with the field in which the problem originates.

4. Express the principle or law in step 3 in terms of the variables you chose in step 1. This

may be easier said than done. It may require the introduction of physical constants or

parameters (such as the drag coefficient in Example 1) and the determination of appropriate
values for them. Or it may involve the use of auxiliary or intermediate variables

that must then be related to the primary variables.

5. Make sure that all terms in your equation have the same physical units. If this is not the

case, then your equation is wrong and you should seek to repair it. If the units agree, then

your equation at least is dimensionally consistent, although it may have other shortcomings

that this test does not reveal.

6. In the problems considered here, the result of step 4 is a single differential equation, which

constitutes the desired mathematical model. Keep in mind, though, that in more complex

problems the resulting mathematical model may be much more complicated, perhaps

involving a system of several differential equations, for example.

PROBLEMS

In each of Problems 1 through 6, draw a direction field for the given differential equation.

Based on the direction field, determine the behavior of y as t →∞. If this behavior depends

on the initial value of y at t = 0, describe the dependency.

1. $y' = 3 - 2y$
2. $y' = 2y - 3$
3. $y' = 3 + 2y$
4. $y' = -1 - 2y$
5. $y' = 1 + 2y$
6. $y' = y + 2$


In each of Problems 7 through 10, write down a differential equation of the form

dy/dt = ay + b whose solutions have the required behavior as t →∞.

7. All solutions approach y = 3. 8. All solutions approach y = 2/3.

9. All other solutions diverge from y = 2. 10. All other solutions diverge from y = 1/3.

In each of Problems 11 through 14, draw a direction field for the given differential equation.

Based on the direction field, determine the behavior of y as t →∞. If this behavior depends

on the initial value of y at t = 0, describe this dependency. Note that in these problems the
equations are not of the form y′ = ay + b, and the behavior of their solutions is somewhat

more complicated than for the equations in the text.

11.

y

′

= y(4 − y) 12. y

′

=−y(5 − y)

13.

y

′

= y

2

14. y

′

= y(y − 2)

2

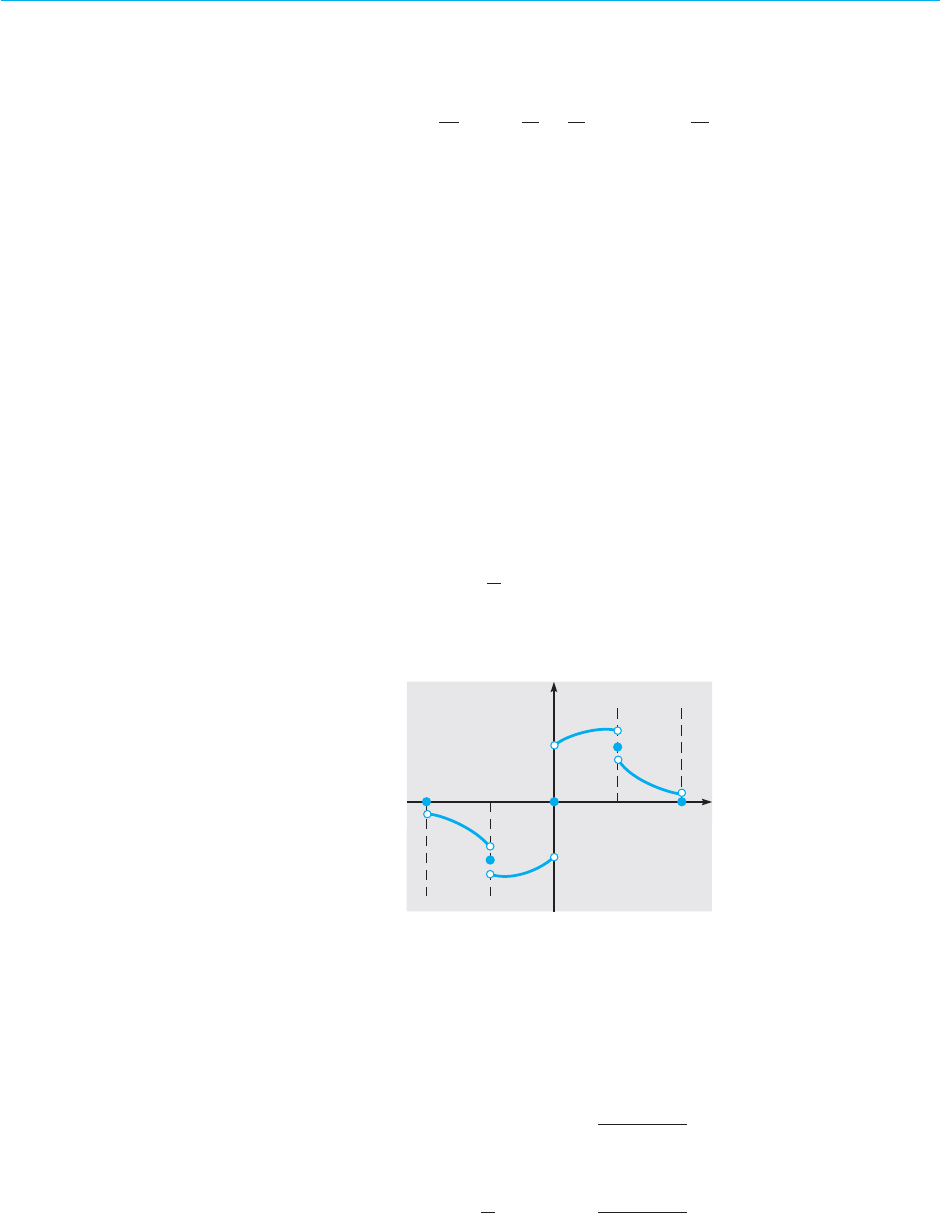

Consider the following list of differential equations, some of which produced the direction

fields shown in Figures 1.1.5 through 1.1.10. In each of Problems 15 through 20 identify the

differential equation that corresponds to the given direction field.

(a) $y' = 2y - 1$
(b) $y' = 2 + y$
(c) $y' = y - 2$
(d) $y' = y(y + 3)$
(e) $y' = y(y - 3)$
(f) $y' = 1 + 2y$
(g) $y' = -2 - y$
(h) $y' = y(3 - y)$
(i) $y' = 1 - 2y$
(j) $y' = 2 - y$

15. The direction field of Figure 1.1.5.

16. The direction field of Figure 1.1.6.

FIGURE 1.1.5 Problem 15. (Horizontal axis: t; vertical axis: y.)

FIGURE 1.1.6 Problem 16. (Horizontal axis: t; vertical axis: y.)

17. The direction field of Figure 1.1.7.

18. The direction field of Figure 1.1.8.

FIGURE 1.1.7 Problem 17. (Horizontal axis: t; vertical axis: y.)

FIGURE 1.1.8 Problem 18. (Horizontal axis: t; vertical axis: y.)


19. The direction field of Figure 1.1.9.

20. The direction field of Figure 1.1.10.

FIGURE 1.1.9 Problem 19. (Horizontal axis: t; vertical axis: y.)

FIGURE 1.1.10 Problem 20. (Horizontal axis: t; vertical axis: y.)

21. A pond initially contains 1,000,000 gal of water and an unknown amount of an undesirable

chemical. Water containing 0.01 g of this chemical per gallon flows into the pond at a rate

of 300 gal/h. The mixture flows out at the same rate, so the amount of water in the pond

remains constant. Assume that the chemical is uniformly distributed throughout the pond.

(a) Write a differential equation for the amount of chemical in the pond at any time.

(b) How much of the chemical will be in the pond after a very long time? Does this limiting

amount depend on the amount that was present initially?

22. A spherical raindrop evaporates at a rate proportional to its surface area. Write a

differential equation for the volume of the raindrop as a function of time.

23. Newton’s law of cooling states that the temperature of an object changes at a rate proportional
to the difference between the temperature of the object itself and the temperature
of its surroundings (the ambient air temperature in most cases). Suppose that the ambient
temperature is 70°F and that the rate constant is 0.05 (min)⁻¹. Write a differential equation

for the temperature of the object at any time. Note that the differential equation is the

same whether the temperature of the object is above or below the ambient temperature.

24. A certain drug is being administered intravenously to a hospital patient. Fluid containing

5 mg/cm³ of the drug enters the patient’s bloodstream at a rate of 100 cm³/h. The drug is
absorbed by body tissues or otherwise leaves the bloodstream at a rate proportional to
the amount present, with a rate constant of 0.4 (h)⁻¹.

(a) Assuming that the drug is always uniformly distributed throughout the bloodstream,

write a differential equation for the amount of the drug that is present in the bloodstream

at any time.

(b) How much of the drug is present in the bloodstream after a long time?

25. For small, slowly falling objects, the assumption made in the text that the drag force
is proportional to the velocity is a good one. For larger, more rapidly falling objects, it is
more accurate to assume that the drag force is proportional to the square of the velocity.²

(a) Write a differential equation for the velocity of a falling object of mass m if the magnitude
of the drag force is proportional to the square of the velocity and its direction is
opposite to that of the velocity.

² See Lyle N. Long and Howard Weiss, “The Velocity Dependence of Aerodynamic Drag: A Primer for
Mathematicians,” American Mathematical Monthly 106 (1999), 2, pp. 127–135.


(b) Determine the limiting velocity after a long time.

(c) If m = 10 kg, find the drag coefficient so that the limiting velocity is 49 m/s.

(d) Using the data in part (c), draw a direction field and compare it with Figure 1.1.3.

In each of Problems 26 through 33, draw a direction field for the given differential equation.

Based on the direction field, determine the behavior of y as t →∞. If this behavior depends

on the initial value of y at t = 0, describe this dependency. Note that the right sides of these

equations depend on t as well as y; therefore, their solutions can exhibit more complicated

behavior than those in the text.

26. $y' = -2 + t - y$
27. $y' = te^{-2t} - 2y$
28. $y' = e^{-t} + y$
29. $y' = t + 2y$
30. $y' = 3 \sin t + 1 + y$
31. $y' = 2t - 1 - y^2$
32. $y' = -(2t + y)/2y$
33. $y' = \frac{1}{6}y^3 - y - \frac{1}{3}t^2$

1.2 Solutions of Some Differential Equations

In the preceding section we derived the differential equations

$$m\,\frac{dv}{dt} = mg - \gamma v \tag{1}$$

and

$$\frac{dp}{dt} = rp - k. \tag{2}$$

Equation (1) models a falling object, and Eq. (2) models a population of field mice

preyed on by owls. Both of these equations are of the general form

$$\frac{dy}{dt} = ay - b, \tag{3}$$

where a and b are given constants. We were able to draw some important qualitative

conclusions about the behavior of solutions of Eqs. (1) and (2) by considering the

associated direction fields. To answer questions of a quantitative nature, however, we

need to find the solutions themselves, and we now investigate how to do that.

EXAMPLE 1. Field Mice and Owls (continued)

Consider the equation

$$\frac{dp}{dt} = 0.5p - 450, \tag{4}$$

which describes the interaction of certain populations of field mice and owls [see Eq. (8) of

Section 1.1]. Find solutions of this equation.

To solve Eq. (4), we need to find functions p(t) that, when substituted into the equation,

reduce it to an obvious identity. Here is one way to proceed. First, rewrite Eq. (4) in the form

$$\frac{dp}{dt} = \frac{p - 900}{2}, \tag{5}$$

or, if p ≠ 900,

$$\frac{dp/dt}{p - 900} = \frac{1}{2}. \tag{6}$$


By the chain rule the left side of Eq. (6) is the derivative of ln |p − 900| with respect to t, so we have

$$\frac{d}{dt} \ln |p - 900| = \frac{1}{2}. \tag{7}$$

Then, by integrating both sides of Eq. (7), we obtain

$$\ln |p - 900| = \frac{t}{2} + C, \tag{8}$$

where C is an arbitrary constant of integration. Therefore, by taking the exponential of both

sides of Eq. (8), we find that

$$|p - 900| = e^{(t/2)+C} = e^{C} e^{t/2}, \tag{9}$$

or

$$p - 900 = \pm e^{C} e^{t/2}, \tag{10}$$

and finally

$$p = 900 + ce^{t/2}, \tag{11}$$

where c =±e

C

is also an arbitrary (nonzero) constant. Note that the constant function p = 900

is also a solution of Eq. (5) and that it is contained in the expression (11) if we allow c to take

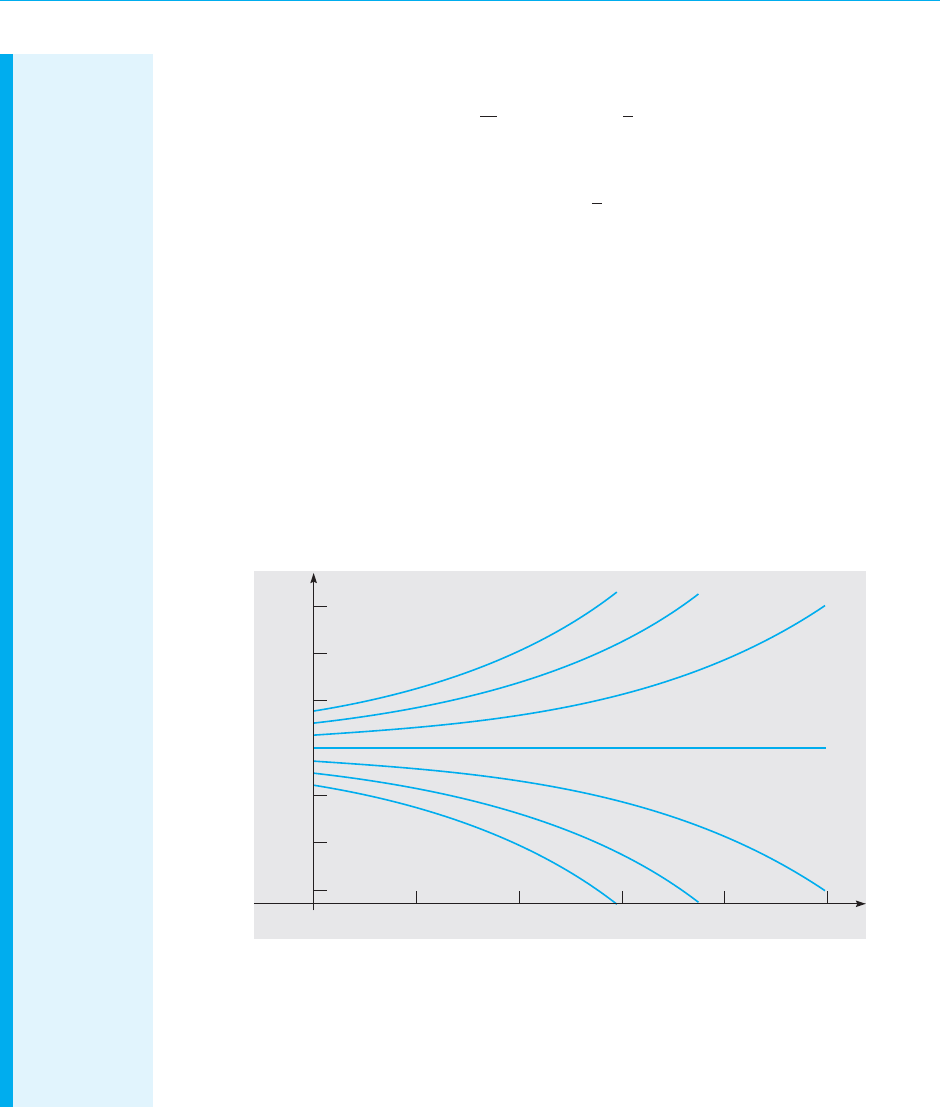

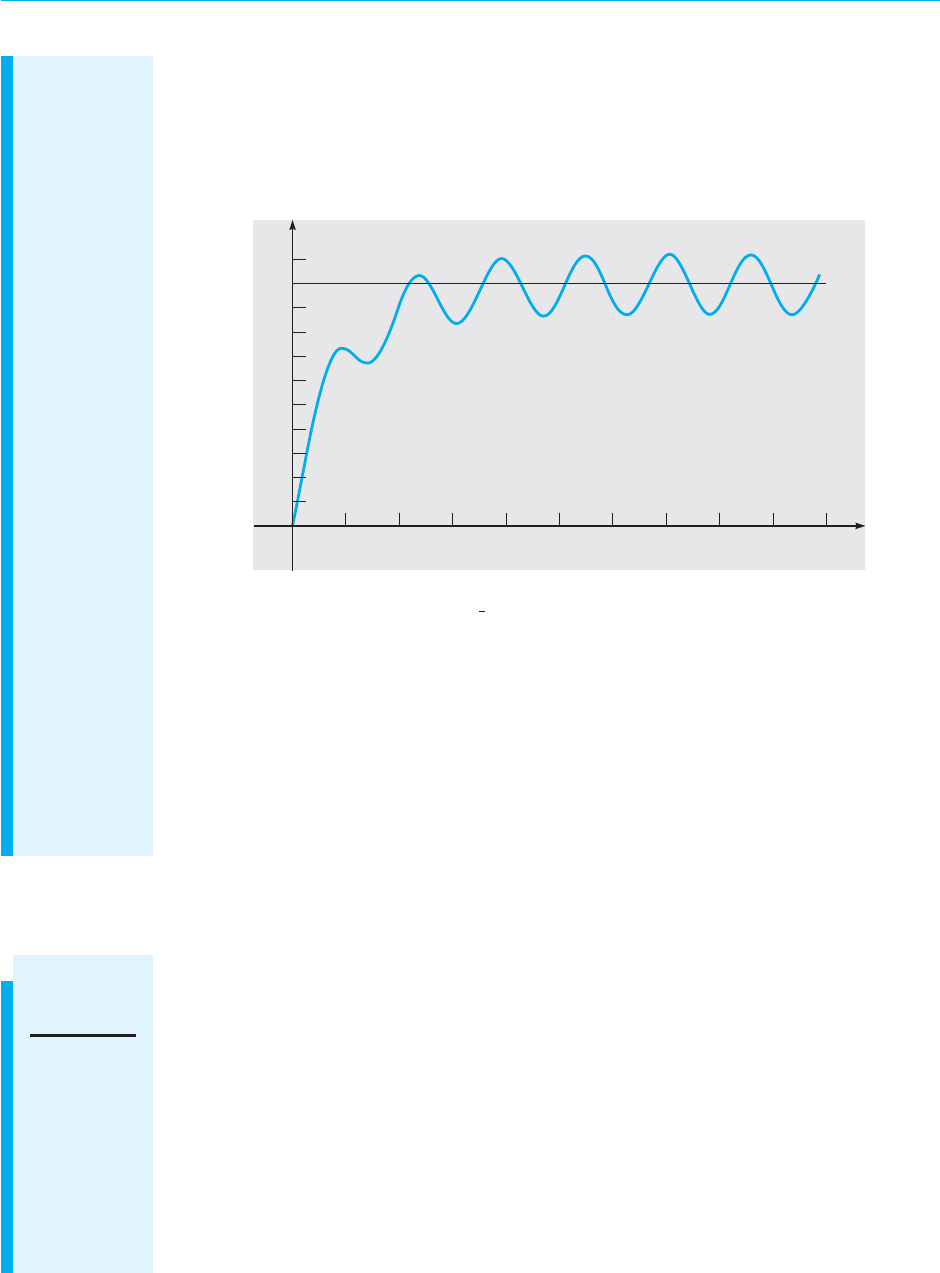

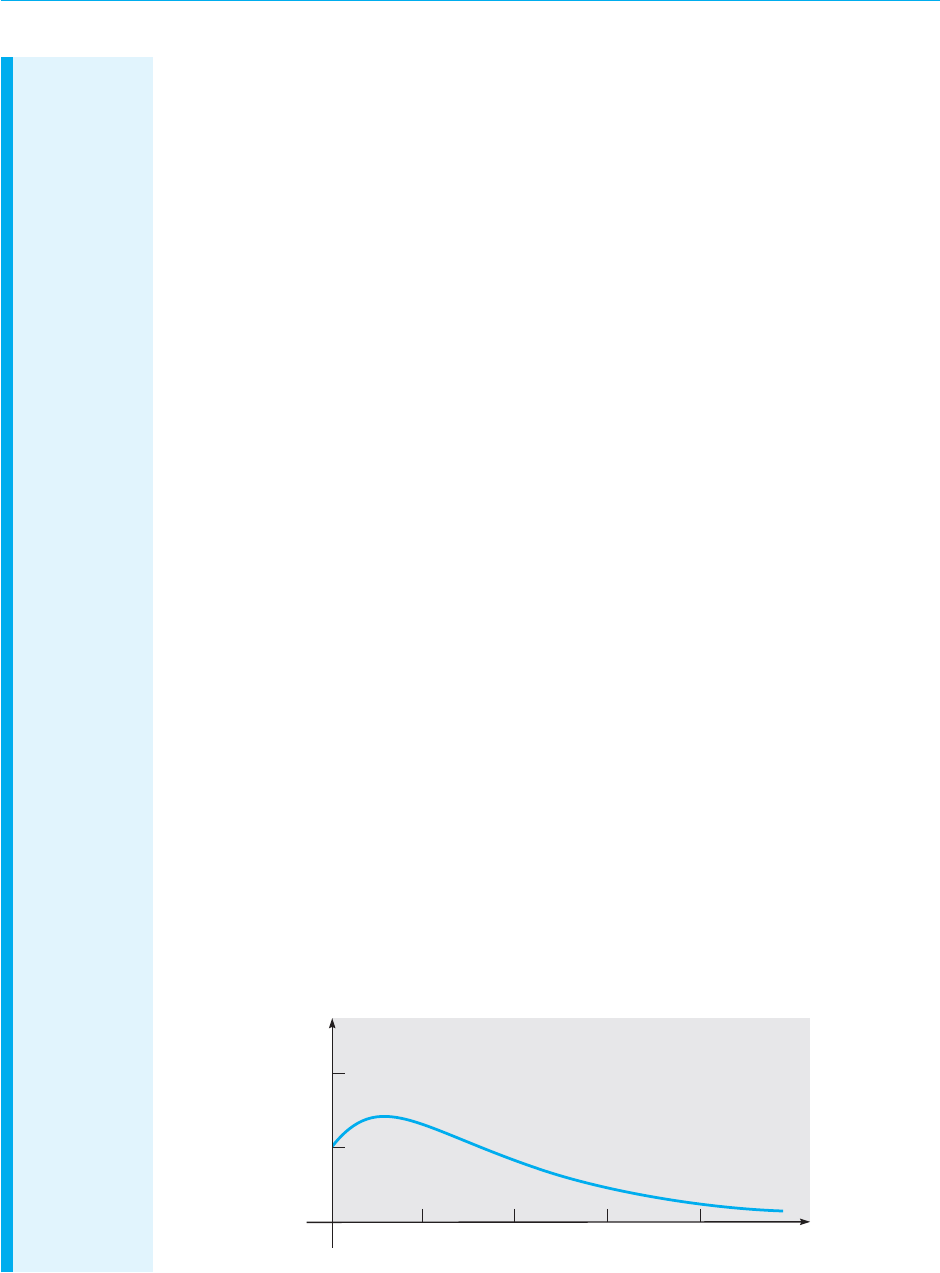

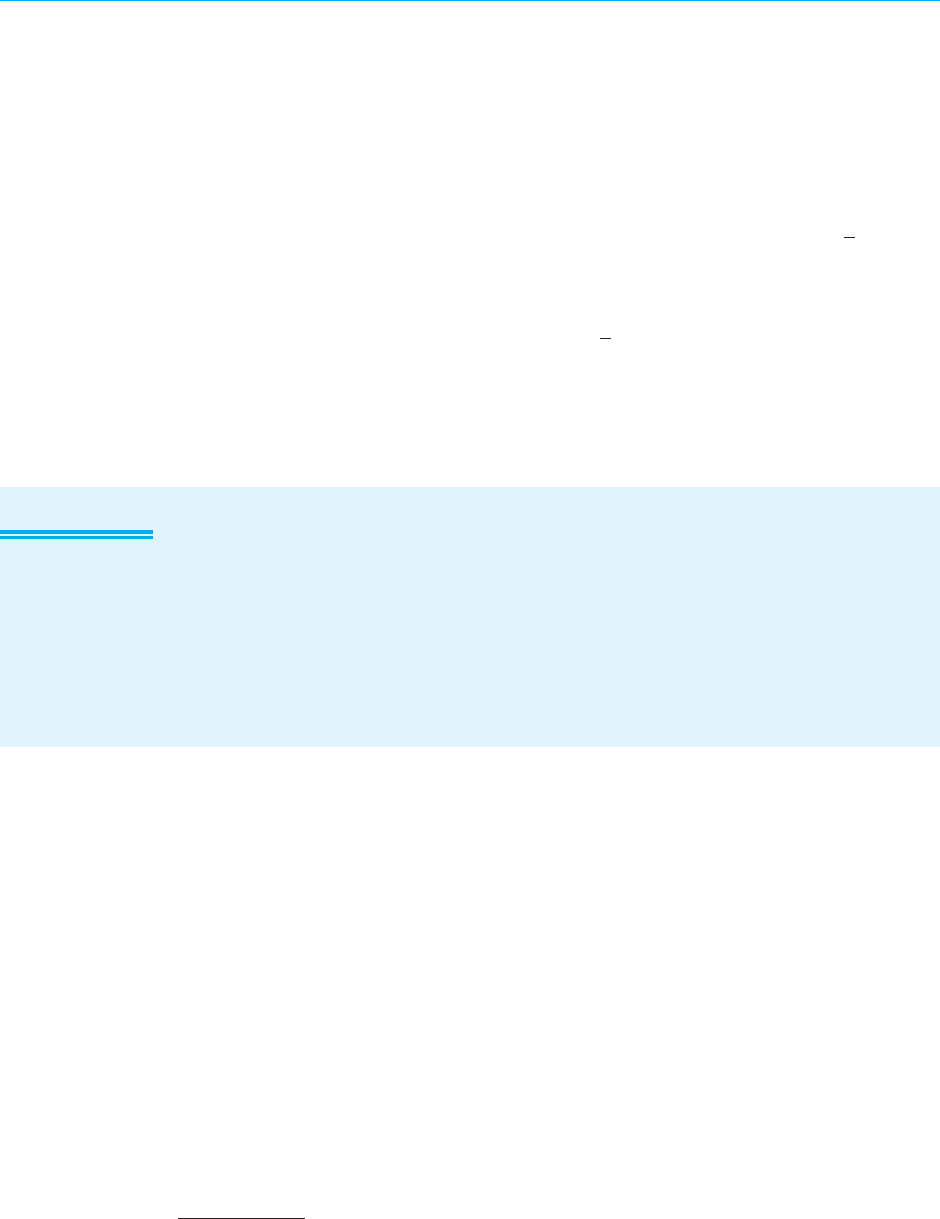

the value zero. Graphs of Eq. (11) for several values of c are shown in Figure 1.2.1.
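A quick numerical check can make the claim that Eq. (11) solves Eq. (4) more concrete. The sketch below is not part of the derivation: it approximates dp/dt by a central difference (an assumption of this example, with step size h chosen arbitrarily) and compares it with the right side 0.5p − 450 for a few values of c, including c = 0.

```python
import math


def p(t, c):
    """Candidate solution, Eq. (11): p = 900 + c*e^(t/2)."""
    return 900 + c * math.exp(t / 2)


def residual(t, c, h=1e-6):
    """dp/dt - (0.5*p - 450), with dp/dt estimated by a central difference."""
    dpdt = (p(t + h, c) - p(t - h, c)) / (2 * h)
    return dpdt - (0.5 * p(t, c) - 450)


for c in (-50, 0, 100):
    worst = max(abs(residual(t, c)) for t in (0.0, 1.0, 2.5, 5.0))
    print(f"c = {c:4d}: largest residual = {worst:.2e}")
```

The residuals are not exactly zero because the derivative is only approximated, but they should be tiny compared with the size of p itself, for every value of c tried.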

FIGURE 1.2.1 Graphs of $p = 900 + ce^{t/2}$ for several values of c. These are solutions of dp/dt = 0.5p − 450. (Horizontal axis: t; vertical axis: p.)

Note that they have the character inferred from the direction field in Figure 1.1.4. For

instance, solutions lying on either side of the equilibrium solution p = 900 tend to diverge

from that solution.

In Example 1 we found infinitely many solutions of the differential equation (4),

corresponding to the infinitely many values that the arbitrary constant c in Eq. (11)

might have. This is typical of what happens when you solve a differential equation. The

solution process involves an integration, which brings with it an arbitrary constant,

whose possible values generate an infinite family of solutions.


Frequently, we want to focus our attention on a single member of the infinite family

of solutions by specifying the value of the arbitrary constant. Most often, we do this

indirectly by specifying instead a point that must lie on the graph of the solution. For

example, to determine the constant c in Eq. (11), we could require that the population
have a given value at a certain time, such as the value 850 at time t = 0. In other words,

the graph of the solution must pass through the point (0, 850). Symbolically, we can

express this condition as

p(0) = 850. (12)

Then, substituting t = 0 and p = 850 into Eq. (11), we obtain

850 = 900 + c.

Hence c = −50, and by inserting this value into Eq. (11), we obtain the desired
solution, namely,

$$p = 900 - 50e^{t/2}. \tag{13}$$

The additional condition (12) that we used to determine c is an example of an

initial condition. The differential equation (4) together with the initial condition (12)

form an initial value problem.

Now consider the more general problem consisting of the differential equation (3)

$$\frac{dy}{dt} = ay - b$$

and the initial condition

$$y(0) = y_0, \tag{14}$$

where $y_0$ is an arbitrary initial value. We can solve this problem by the same method
as in Example 1. If a ≠ 0 and y ≠ b/a, then we can rewrite Eq. (3) as

$$\frac{dy/dt}{y - (b/a)} = a. \tag{15}$$

By integrating both sides, we find that

$$\ln |y - (b/a)| = at + C, \tag{16}$$

where C is arbitrary. Then, taking the exponential of both sides of Eq. (16) and solving
for y, we obtain

$$y = (b/a) + ce^{at}, \tag{17}$$

where $c = \pm e^{C}$ is also arbitrary. Observe that c = 0 corresponds to the equilibrium
solution y = b/a. Finally, the initial condition (14) requires that $c = y_0 - (b/a)$, so
the solution of the initial value problem (3), (14) is

$$y = (b/a) + [y_0 - (b/a)]e^{at}. \tag{18}$$

For a ≠ 0 the expression (17) contains all possible solutions of Eq. (3) and is called
the general solution.³ The geometrical representation of the general solution (17) is
an infinite family of curves called integral curves. Each integral curve is associated
with a particular value of c and is the graph of the solution corresponding to that
value of c. Satisfying an initial condition amounts to identifying the integral curve
that passes through the given initial point.

³ If a = 0, then the solution of Eq. (3) is not given by Eq. (17). We leave it to you to find the general solution
in this case.
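The formula (18) translates directly into a small function. The following Python sketch is illustrative only; the name `linear_ivp_solution` is hypothetical, and the case a = 0 is deliberately rejected because Eq. (18) does not apply there.

```python
import math


def linear_ivp_solution(a, b, y0):
    """Return the function y(t) solving dy/dt = a*y - b, y(0) = y0, via Eq. (18)."""
    if a == 0:
        raise ValueError("Eq. (18) requires a != 0")
    y_eq = b / a  # equilibrium solution y = b/a
    return lambda t: y_eq + (y0 - y_eq) * math.exp(a * t)


# Field mice: a = 0.5, b = 450, initial condition p(0) = 850, as in Eq. (12).
p = linear_ivp_solution(0.5, 450, 850)
print(p(0))  # 850.0, the prescribed initial value
print(p(2))  # agrees with Eq. (13): 900 - 50*e^(2/2)
```

Choosing a different value of y0 selects a different integral curve from the same family (17).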

To relate the solution (18) to Eq. (2), which models the field mouse population, we

need only replace a by the growth rate r and replace b by the predation rate k. Then

the solution (18) becomes

$$p = (k/r) + [p_0 - (k/r)]e^{rt}, \tag{19}$$

where $p_0$ is the initial population of field mice. The solution (19) confirms the conclusions
reached on the basis of the direction field and Example 1. If $p_0 = k/r$, then from
Eq. (19) it follows that p = k/r for all t; this is the constant, or equilibrium, solution.
If $p_0 \neq k/r$, then the behavior of the solution depends on the sign of the coefficient
$p_0 - (k/r)$ of the exponential term in Eq. (19). If $p_0 > k/r$, then p grows exponentially
with time t; if $p_0 < k/r$, then p decreases and eventually becomes zero, corresponding
to extinction of the field mouse population. Negative values of p, while possible for
the expression (19), make no sense in the context of this particular problem.

To put the falling-object equation (1) in the form (3), we must identify a with −γ/m
and b with −g. Making these substitutions in the solution (18), we obtain

$$v = (mg/\gamma) + [v_0 - (mg/\gamma)]e^{-\gamma t/m}, \tag{20}$$

where $v_0$ is the initial velocity. Again, this solution confirms the conclusions reached
in Section 1.1 on the basis of a direction field. There is an equilibrium, or constant,
solution v = mg/γ, and all other solutions tend to approach this equilibrium solution.

The speed of convergence to the equilibrium solution is determined by the exponent

−γ/m. Thus, for a given mass m, the velocity approaches the equilibrium value more

rapidly as the drag coefficient γ increases.
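To see the effect of γ on the speed of convergence, note that Eq. (20) gives |v − mg/γ| = |v₀ − mg/γ| e^(−γt/m), so the gap shrinks to a fraction ε of its initial size at time t = (m/γ) ln(1/ε). The Python sketch below evaluates this; the function names and the choice ε = 0.01 are assumptions made for illustration.

```python
import math


def velocity(t, m, gamma, v0=0.0, g=9.8):
    """Eq. (20): v = mg/gamma + (v0 - mg/gamma) * e^(-gamma*t/m)."""
    v_eq = m * g / gamma
    return v_eq + (v0 - v_eq) * math.exp(-gamma * t / m)


def time_to_fraction(m, gamma, eps=0.01):
    """Time at which |v - mg/gamma| has shrunk to eps times its initial value."""
    return (m / gamma) * math.log(1 / eps)


# Same mass, increasing drag coefficient: the waiting time gets shorter.
for gamma in (1.0, 2.0, 4.0):
    print(f"gamma = {gamma} kg/s: t = {time_to_fraction(10.0, gamma):.2f} s")
```

Doubling γ halves this time for a fixed mass, which is the sense in which larger drag coefficients give faster convergence. Keep in mind that the equilibrium value mg/γ itself also changes with γ.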

EXAMPLE 2. A Falling Object (continued)

Suppose that, as in Example 2 of Section 1.1, we consider a falling object of mass m = 10 kg

and drag coefficient γ = 2 kg/s. Then the equation of motion (1) becomes

$$\frac{dv}{dt} = 9.8 - \frac{v}{5}. \tag{21}$$

Suppose this object is dropped from a height of 300 m. Find its velocity at any time t. How

long will it take to fall to the ground, and how fast will it be moving at the time of impact?

The first step is to state an appropriate initial condition for Eq. (21). The word “dropped” in

the statement of the problem suggests that the initial velocity is zero, so we will use the initial

condition

v(0) = 0. (22)

The solution of Eq. (21) can be found by substituting the values of the coefficients into the

solution (20), but we will proceed instead to solve Eq. (21) directly. First, rewrite the equation as

$$\frac{dv/dt}{v - 49} = -\frac{1}{5}. \tag{23}$$

By integrating both sides, we obtain

$$\ln |v - 49| = -\frac{t}{5} + C, \tag{24}$$

and then the general solution of Eq. (21) is

$$v = 49 + ce^{-t/5}, \tag{25}$$


where c is arbitrary. To determine c, we substitute t = 0 and v = 0 from the initial condition

(22) into Eq. (25), with the result that c =−49. Then the solution of the initial value problem

(21), (22) is

v = 49(1 − e

−t/5

). (26)

Equation (26) gives the velocity of the falling object at any positive time (before it hits the

ground, of course).

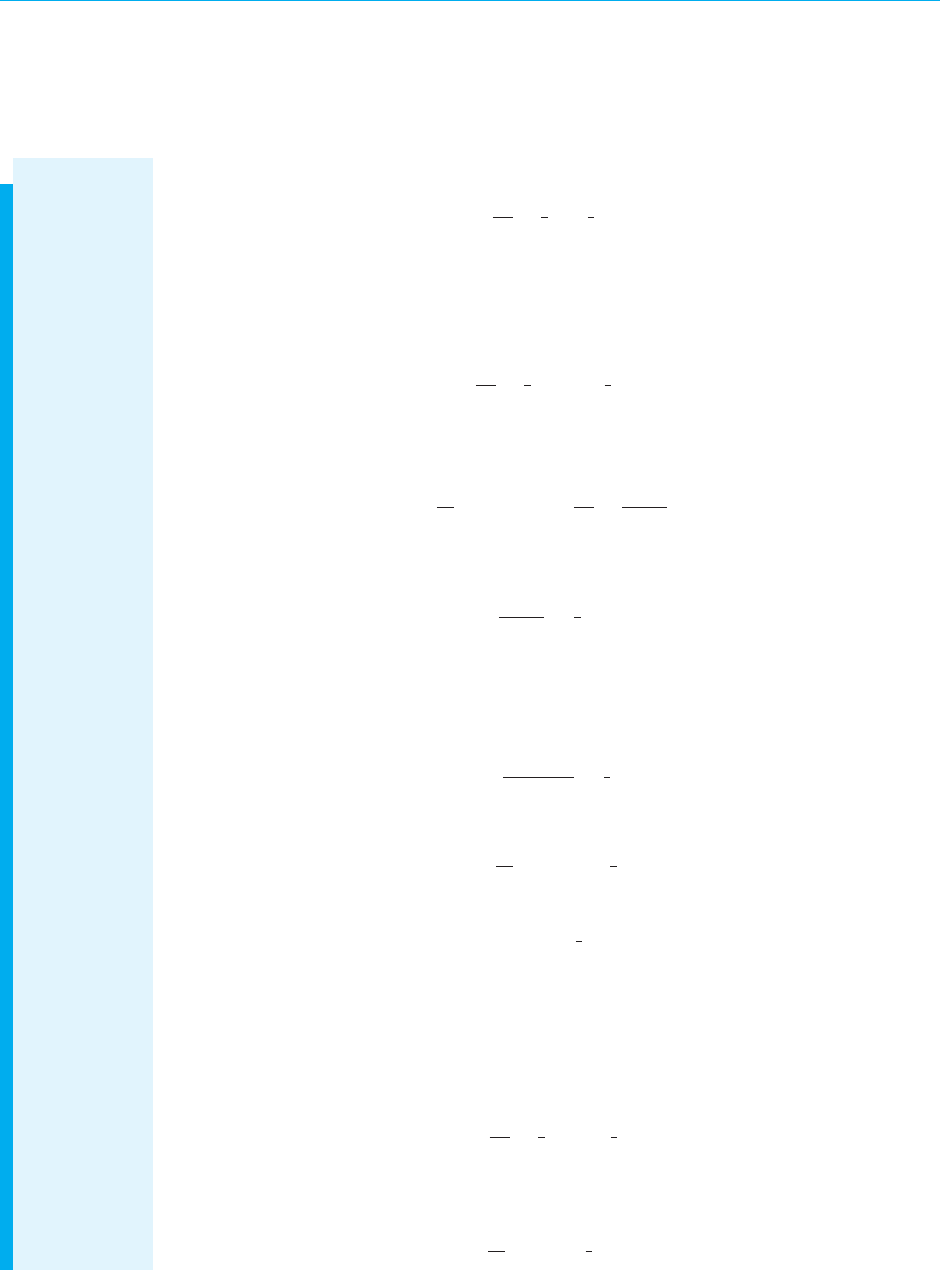

Graphs of the solution (25) for several values of c are shown in Figure 1.2.2,with the solution

(26) shown by the black curve. It is evident that all solutions tend to approach the equilibrium

solution v = 49. This confirms the conclusions we reached in Section 1.1 on the basis of the

direction fields in Figures 1.1.2 and 1.1.3.

FIGURE 1.2.2 Graphs of the solution (25), $v = 49 + ce^{-t/5}$, for several values of c. The black curve, $v = 49(1 - e^{-t/5})$, corresponds to the initial condition v(0) = 0; the point (10.51, 43.01) is marked on it. (Horizontal axis: t; vertical axis: v.)

To find the velocity of the object when it hits the ground, we need to know the time at which

impact occurs. In other words, we need to determine how long it takes the object to fall 300 m.

To do this, we note that the distance x the object has fallen is related to its velocity v by the

equation v = dx/dt, or

$$\frac{dx}{dt} = 49(1 - e^{-t/5}). \tag{27}$$

Consequently, by integrating both sides of Eq. (27), we have

$$x = 49t + 245e^{-t/5} + c, \tag{28}$$

where c is an arbitrary constant of integration. The object starts to fall when t = 0, so we know
that x = 0 when t = 0. From Eq. (28) it follows that c = −245, so the distance the object has
fallen at time t is given by

$$x = 49t + 245e^{-t/5} - 245. \tag{29}$$

Let T be the time at which the object hits the ground; then x = 300 when t = T. By substituting
these values in Eq. (29), we obtain the equation

$$49T + 245e^{-T/5} - 545 = 0. \tag{30}$$


The value of T satisfying Eq. (30) can be approximated by a numerical process⁴ using a scientific
calculator or computer, with the result that T ≅ 10.51 s. At this time, the corresponding velocity
$v_T$ is found from Eq. (26) to be $v_T$ ≅ 43.01 m/s. The point (10.51, 43.01) is also shown in
Figure 1.2.2.
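The text does not say which numerical process was used. As one possibility, the sketch below applies the bisection method, which is an assumption of this example, to the left side of Eq. (30). The bracket [0, 20] is chosen because the left side is negative at T = 0 and positive at T = 20.

```python
import math


def f(T):
    """Left side of Eq. (30): 49T + 245 e^(-T/5) - 545."""
    return 49 * T + 245 * math.exp(-T / 5) - 545


def bisect(func, lo, hi, tol=1e-10):
    """Approximate a root of func in [lo, hi], assuming func changes sign there."""
    f_lo = func(lo)
    if f_lo * func(hi) > 0:
        raise ValueError("func must change sign on [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        f_mid = func(mid)
        if f_lo * f_mid <= 0:
            hi = mid                 # root lies in the left half
        else:
            lo, f_lo = mid, f_mid    # root lies in the right half
    return 0.5 * (lo + hi)


T = bisect(f, 0.0, 20.0)
v_T = 49 * (1 - math.exp(-T / 5))  # Eq. (26) evaluated at t = T
print(f"T = {T:.2f} s, v_T = {v_T:.2f} m/s")  # T = 10.51 s, v_T = 43.01 m/s
```

Any other root-finding method would serve equally well here, since the left side of Eq. (30) is increasing for T > 0 and therefore has only one positive root.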

Further Remarks on Mathematical Modeling. Up to this point we have related our discussion
of differential equations to mathematical models of a falling object and of a

hypothetical relation between field mice and owls. The derivation of these models

may have been plausible, and possibly even convincing, but you should remember

that the ultimate test of any mathematical model is whether its predictions agree

with observations or experimental results. We have no actual observations or experimental

results to use for comparison purposes here, but there are several sources of

possible discrepancies.

In the case of the falling object, the underlying physical principle (Newton’s law

of motion) is well established and widely applicable. However, the assumption that

the drag force is proportional to the velocity is less certain. Even if this assumption is

correct, the determination of the drag coefficient γ by direct measurement presents

difficulties. Indeed, sometimes one finds the drag coefficient indirectly—for example,

by measuring the time of fall from a given height and then calculating the value of γ

that predicts this observed time.
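The indirect approach described above can be sketched numerically. The following is a minimal illustration, not a procedure taken from the text. It assumes the linear-drag model $m\,dv/dt = mg - \gamma v$ with v(0) = 0 and x(0) = 0, uses the closed-form distance that follows from that model, and finds by bisection the value of γ for which the predicted fall distance matches the height. The function names, the search interval for γ, and the "observed" fall time of 10.51 s (borrowed from Example 2) are illustrative assumptions.

```python
import math

G = 9.8  # gravitational acceleration in m/s^2, the value used in the text

def distance_fallen(t, mass, gamma):
    """Distance fallen after time t under m dv/dt = m g - gamma v, with v(0) = 0, x(0) = 0."""
    v_lim = mass * G / gamma   # limiting velocity m g / gamma
    tau = mass / gamma         # time constant m / gamma
    return v_lim * (t - tau * (1.0 - math.exp(-t / tau)))

def estimate_gamma(height, fall_time, mass, lo=1e-3, hi=100.0, tol=1e-10):
    """Bisect on gamma until the predicted fall distance equals the observed height."""
    def mismatch(gamma):
        return distance_fallen(fall_time, mass, gamma) - height
    if mismatch(lo) < 0 or mismatch(hi) > 0:
        raise ValueError("gamma is not bracketed by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mismatch(mid) > 0:   # predicted fall is too long: drag must be larger
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Hypothetical observation: a 10 kg object takes 10.51 s to fall 300 m.
gamma_est = estimate_gamma(height=300.0, fall_time=10.51, mass=10.0)
print(f"estimated drag coefficient: {gamma_est:.2f} kg/s")
```

With these inputs the estimate comes out close to 2 kg/s, which is consistent with the equation dv/dt = 9.8 − v/5 for a 10 kg object. The bisection relies on the fall distance decreasing as γ increases for a fixed fall time.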

The model of the field mouse population is subject to various uncertainties.

The determination of the growth rate r and the predation rate k depends on

observations of actual populations, which may be subject to considerable variation.

The assumption that r and k are constants may also be questionable. For example,

a constant predation rate becomes harder to sustain as the field mouse population

becomes smaller. Further, the model predicts that a population above the equilibrium

value will grow exponentially larger and larger. This seems at variance with the

behavior of actual populations; see the further discussion of population dynamics in

Section 2.5.

If the differences between actual observations and a mathematical model’s predictions

are too great, then you need to consider refining the model, making more

careful observations, or perhaps both. There is almost always a tradeoff between

accuracy and simplicity. Both are desirable, but a gain in one usually involves a loss

in the other. However, even if a mathematical model is incomplete or somewhat inaccurate,

it may nevertheless be useful in explaining qualitative features of the problem

under investigation. It may also give satisfactory results under some circumstances

but not others. Thus you should always use good judgment and common sense in

constructing mathematical models and in using their predictions.

⁴ A computer algebra system provides this capability; many calculators also have built-in routines for solving such equations.

PROBLEMS

1. Solve each of the following initial value problems and plot the solutions for several values of $y_0$. Then describe in a few words how the solutions resemble, and differ from, each other.

(a) $dy/dt = -y + 5$, $y(0) = y_0$

(b) $dy/dt = -2y + 5$, $y(0) = y_0$

(c) $dy/dt = -2y + 10$, $y(0) = y_0$

2. Follow the instructions for Problem 1 for the following initial value problems:

(a) $dy/dt = y - 5$, $y(0) = y_0$

(b) $dy/dt = 2y - 5$, $y(0) = y_0$

(c) $dy/dt = 2y - 10$, $y(0) = y_0$

3. Consider the differential equation

dy/dt = −ay + b,

where both a and b are positive numbers.

(a) Find the general solution of the differential equation.

(b) Sketch the solution for several different initial conditions.

(c) Describe how the solutions change under each of the following conditions:

i. a increases.

ii. b increases.

iii. Both a and b increase, but the ratio b/a remains the same.

4. Consider the differential equation dy/dt = ay − b.

(a) Find the equilibrium solution $y_e$.

(b) Let $Y(t) = y - y_e$; thus Y(t) is the deviation from the equilibrium solution. Find the

differential equation satisfied by Y(t).

5. Undetermined Coefficients. Here is an alternative way to solve the equation

dy/dt = ay − b. (i)

(a) Solve the simpler equation

dy/dt = ay. (ii)

Call the solution $y_1(t)$.

(b) Observe that the only difference between Eqs. (i) and (ii) is the constant −b in Eq. (i).

Therefore, it may seem reasonable to assume that the solutions of these two equations

also differ only by a constant. Test this assumption by trying to find a constant k such that

$y = y_1(t) + k$ is a solution of Eq. (i).

(c) Compare your solution from part (b) with the solution given in the text in Eq. (17).

Note: This method can also be used in some cases in which the constant b is replaced

by a function g(t). It depends on whether you can guess the general form that the solution

is likely to take. This method is described in detail in Section 3.5 in connection with second

order equations.

6. Use the method of Problem 5 to solve the equation

dy/dt = −ay + b.

7. The field mouse population in Example 1 satisfies the differential equation

dp/dt = 0.5p − 450.

(a) Find the time at which the population becomes extinct if p(0) = 850.

(b) Find the time of extinction if $p(0) = p_0$, where $0 < p_0 < 900$.

(c) Find the initial population $p_0$ if the population is to become extinct in 1 year.


8. Consider a population p of field mice that grows at a rate proportional to the current

population, so that dp/dt = rp.

(a) Find the rate constant r if the population doubles in 30 days.

(b) Find r if the population doubles in N days.

9. The falling object in Example 2 satisfies the initial value problem

dv/dt = 9.8 − (v/5), v(0) = 0.

(a) Find the time that must elapse for the object to reach 98% of its limiting velocity.

(b) How far does the object fall in the time found in part (a)?

10. Modify Example 2 so that the falling object experiences no air resistance.

(a) Write down the modified initial value problem.

(b) Determine how long it takes the object to reach the ground.

(c) Determine its velocity at the time of impact.

11. Consider the falling object of mass 10 kg in Example 2, but assume now that the drag force

is proportional to the square of the velocity.

(a) If the limiting velocity is 49 m/s (the same as in Example 2), show that the equation

of motion can be written as

$$dv/dt = \left[(49)^2 - v^2\right]/245.$$

Also see Problem 25 of Section 1.1.

(b) If v(0) = 0, find an expression for v(t) at any time.

(c) Plot your solution from part (b) and the solution (26) from Example 2 on the same

axes.

(d) Based on your plots in part (c), compare the effect of a quadratic drag force with that

of a linear drag force.

(e) Find the distance x(t) that the object falls in time t.

(f) Find the time T it takes the object to fall 300 m.

12. A radioactive material, such as the isotope thorium-234, disintegrates at a rate proportional

to the amount currently present. If Q(t) is the amount present at time t, then dQ/dt = −rQ,

where r > 0 is the decay rate.

(a) If 100 mg of thorium-234 decays to 82.04 mg in 1 week, determine the decay rate r.

(b) Find an expression for the amount of thorium-234 present at any time t.

(c) Find the time required for the thorium-234 to decay to one-half its original amount.

13. The half-life of a radioactive material is the time required for an amount of this material

to decay to one-half its original value. Show that for any radioactive material that decays

according to the equation $Q' = -rQ$, the half-life $\tau$ and the decay rate r satisfy the equation

$r\tau = \ln 2$.

14. Radium-226 has a half-life of 1620 years. Find the time period during which a given amount

of this material is reduced by one-quarter.

15. According to Newton’s law of cooling (see Problem 23 of Section 1.1), the temperature

u(t) of an object satisfies the differential equation

$$\frac{du}{dt} = -k(u - T),$$

where T is the constant ambient temperature and k is a positive constant. Suppose that

the initial temperature of the object is $u(0) = u_0$.

(a) Find the temperature of the object at any time.


(b) Let $\tau$ be the time at which the initial temperature difference $u_0 - T$ has been reduced

by half. Find the relation between k and $\tau$.

16. Suppose that a building loses heat in accordance with Newton’s law of cooling (see Problem 15) and that the rate constant k has the value 0.15 h$^{-1}$. Assume that the interior temperature is 70°F when the heating system fails. If the external temperature is 10°F, how long will it