Python Data Science Essentials

Third Edition

A practitioner's guide covering essential data science principles, tools, and techniques

Alberto Boschetti

Luca Massaron

BIRMINGHAM - MUMBAI

Copyright © 2018 Packt Publishing

All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form

or by any means, without the prior written permission of the publisher, except in the case of brief quotations

embedded in critical articles or reviews.

Every effort has been made in the preparation of this book to ensure the accuracy of the information presented.

However, the information contained in this book is sold without warranty, either express or implied. Neither the

authors, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to

have been caused directly or indirectly by this book.

Packt Publishing has endeavored to provide trademark information about all of the companies and products

mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy

of this information.

Commissioning Editor: Pravin Dhandre

Acquisition Editor: Namrata Patil

Content Development Editor: Snehal Kolte

Technical Editor: Dinesh Chaudhary

Copy Editor: Safis Editing

Project Coordinator: Manthan Patel

Proofreader: Safis Editing

Indexer: Tejal Daruwale Soni

Graphics: Jisha Chirayil

Production Coordinator: Deepika Naik

First published: April 2015

Second edition: October 2016

Third edition: September 2018

Production reference: 1260918

Published by Packt Publishing Ltd.

Livery Place

35 Livery Street

Birmingham

B3 2PB, UK.

ISBN 978-1-78953-786-4

www.packtpub.com

mapt.io

Mapt is an online digital library that gives you full access to over 5,000 books and videos, as

well as industry leading tools to help you plan your personal development and advance

your career. For more information, please visit our website.

Why subscribe?

Spend less time learning and more time coding with practical eBooks and Videos

from over 4,000 industry professionals

Improve your learning with Skill Plans built especially for you

Get a free eBook or video every month

Mapt is fully searchable

Copy and paste, print, and bookmark content

Packt.com

Did you know that Packt offers eBook versions of every book published, with PDF and

ePub files available? You can upgrade to the eBook version at www.packt.com and as a print

book customer, you are entitled to a discount on the eBook copy. Get in touch with us at

[email protected] for more details.

At www.packt.com, you can also read a collection of free technical articles, sign up for a

range of free newsletters, and receive exclusive discounts and offers on Packt books and

eBooks.

Contributors

About the authors

Alberto Boschetti is a data scientist with expertise in signal processing and statistics. He

holds a Ph.D. in telecommunication engineering and currently lives and works in London.

In his work projects, he faces challenges ranging from natural language processing (NLP)

and behavioral analysis to machine learning and distributed processing. He is very

passionate about his job and always tries to stay updated about the latest developments in

data science technologies, attending meet-ups, conferences, and other events.

I would like to thank my family, my friends, and my colleagues. Also, big thanks to the

open source community.

Luca Massaron is a data scientist and marketing research director specialized in

multivariate statistical analysis, machine learning, and customer insight, with over a decade

of experience in solving real-world problems and generating value for stakeholders by

applying reasoning, statistics, data mining, and algorithms. From being a pioneer of web

audience analysis in Italy to achieving the rank of a top-10 Kaggler, he has always been

very passionate about every aspect of data and its analysis, and also about demonstrating

the potential of data-driven knowledge discovery to both experts and non-experts.

Favoring simplicity over unnecessary sophistication, Luca believes that a lot can be

achieved in data science just by doing the essentials.

To Yukiko and Amelia, for their loving patience.

"Roads go ever ever on, under cloud and under star, yet feet that wandering have gone

turn at last to home afar."

About the reviewers

Pietro Marinelli has been working with artificial intelligence, text analytics and many other

data science techniques, and has more than 10 years of experience in designing products

based on data for different industries.

He produced a variety of algorithms, ranging from predictive modeling to advanced

simulation algorithms, to support top management's business decisions for different

multinational companies.

He has consistently been ranked among the top data scientists in the world in the Kaggle

rankings for years, reaching 3rd position among Italian data scientists.

Matteo Malosetti is a mathematical engineer working as a data scientist in insurance. He is

passionate about NLP applications and Bayesian statistics.

Packt is searching for authors like you

If you're interested in becoming an author for Packt, please visit authors.packtpub.com

and apply today. We have worked with thousands of developers and tech professionals,

just like you, to help them share their insight with the global tech community. You can

make a general application, apply for a specific hot topic that we are recruiting an author

for, or submit your own idea.

Table of Contents

Preface

1

Chapter 1: First Steps

6

Introducing data science and Python

7

Installing Python

9

Python 2 or Python 3?

9

Step-by-step installation

10

Installing the necessary packages

12

Package upgrades

14

Scientific distributions

15

Anaconda

15

Leveraging conda to install packages

16

Enthought Canopy

17

WinPython

18

Explaining virtual environments

18

Conda for managing environments

21

A glance at the essential packages

22

NumPy

23

SciPy

23

pandas

24

pandas-profiling

24

Scikit-learn

24

Jupyter

25

JupyterLab

26

Matplotlib

26

Seaborn

27

Statsmodels

27

Beautiful Soup

28

NetworkX

28

NLTK

29

Gensim

29

PyPy

29

XGBoost

30

LightGBM

32

CatBoost

33

TensorFlow

33

Keras

34

Introducing Jupyter

35

Fast installation and first test usage

39

Jupyter magic commands

41

Installing packages directly from Jupyter Notebooks

43

Checking the new JupyterLab environment

44

How Jupyter Notebooks can help data scientists

45

Alternatives to Jupyter

51

Datasets and code used in this book

52

Scikit-learn toy datasets

53

The MLdata.org and other public repositories for open source data

57

LIBSVM data examples

58

Loading data directly from CSV or text files

58

Scikit-learn sample generators

62

Summary

63

Chapter 2: Data Munging

64

The data science process

64

Data loading and preprocessing with pandas

67

Fast and easy data loading

67

Dealing with problematic data

71

Dealing with big datasets

74

Accessing other data formats

78

Putting data together

81

Data preprocessing

85

Data selection

92

Working with categorical and textual data

95

A special type of data – text

98

Scraping the web with Beautiful Soup

104

Data processing with NumPy

106

NumPy's n-dimensional array

107

The basics of NumPy ndarray objects

108

Creating NumPy arrays

111

From lists to unidimensional arrays

112

Controlling memory size

113

Heterogeneous lists

114

From lists to multidimensional arrays

115

Resizing arrays

117

Arrays derived from NumPy functions

118

Getting an array directly from a file

120

Extracting data from pandas

120

NumPy fast operation and computations

122

Matrix operations

124

Slicing and indexing with NumPy arrays

126

Stacking NumPy arrays

129

Working with sparse arrays

131

Summary

135

Chapter 3: The Data Pipeline

136

Introducing EDA

136

Building new features

141

Dimensionality reduction

144

The covariance matrix

145

Principal component analysis

146

PCA for big data – RandomizedPCA

151

Latent factor analysis

152

Linear discriminant analysis

153

Latent semantical analysis

154

Independent component analysis

154

Kernel PCA

155

T-SNE

157

Restricted Boltzmann Machine

158

The detection and treatment of outliers

159

Univariate outlier detection

160

EllipticEnvelope

163

OneClassSVM

168

Validation metrics

172

Multilabel classification

172

Binary classification

175

Regression

176

Testing and validating

177

Cross-validation

182

Using cross-validation iterators

185

Sampling and bootstrapping

188

Hyperparameter optimization

190

Building custom scoring functions

193

Reducing the grid search runtime

195

Feature selection

198

Selection based on feature variance

199

Univariate selection

199

Recursive elimination

201

Stability and L1-based selection

203

Wrapping everything in a pipeline

206

Combining features together and chaining transformations

206

Building custom transformation functions

209

Summary

211

Chapter 4: Machine Learning

212

Preparing tools and datasets

212

Linear and logistic regression

214

Naive Bayes

218

K-Nearest Neighbors

221

Nonlinear algorithms

223

SVM for classification

224

SVM for regression

226

Tuning SVM

227

Ensemble strategies

230

Pasting by random samples

231

Bagging with weak classifiers

231

Random Subspaces and Random Patches

232

Random Forests and Extra-Trees

233

Estimating probabilities from an ensemble

235

Sequences of models – AdaBoost

237

Gradient tree boosting (GTB)

238

XGBoost

239

LightGBM

243

CatBoost

248

Dealing with big data

253

Creating some big datasets as examples

253

Scalability with volume

254

Keeping up with velocity

257

Dealing with variety

258

An overview of Stochastic Gradient Descent (SGD)

260

A peek into natural language processing (NLP)

262

Word tokenization

262

Stemming

264

Word tagging

264

Named entity recognition (NER)

265

Stopwords

266

A complete data science example – text classification

267

An overview of unsupervised learning

269

K-means

269

DBSCAN – a density-based clustering technique

273

Latent Dirichlet Allocation (LDA)

276

Summary

281

Chapter 5: Visualization, Insights, and Results

282

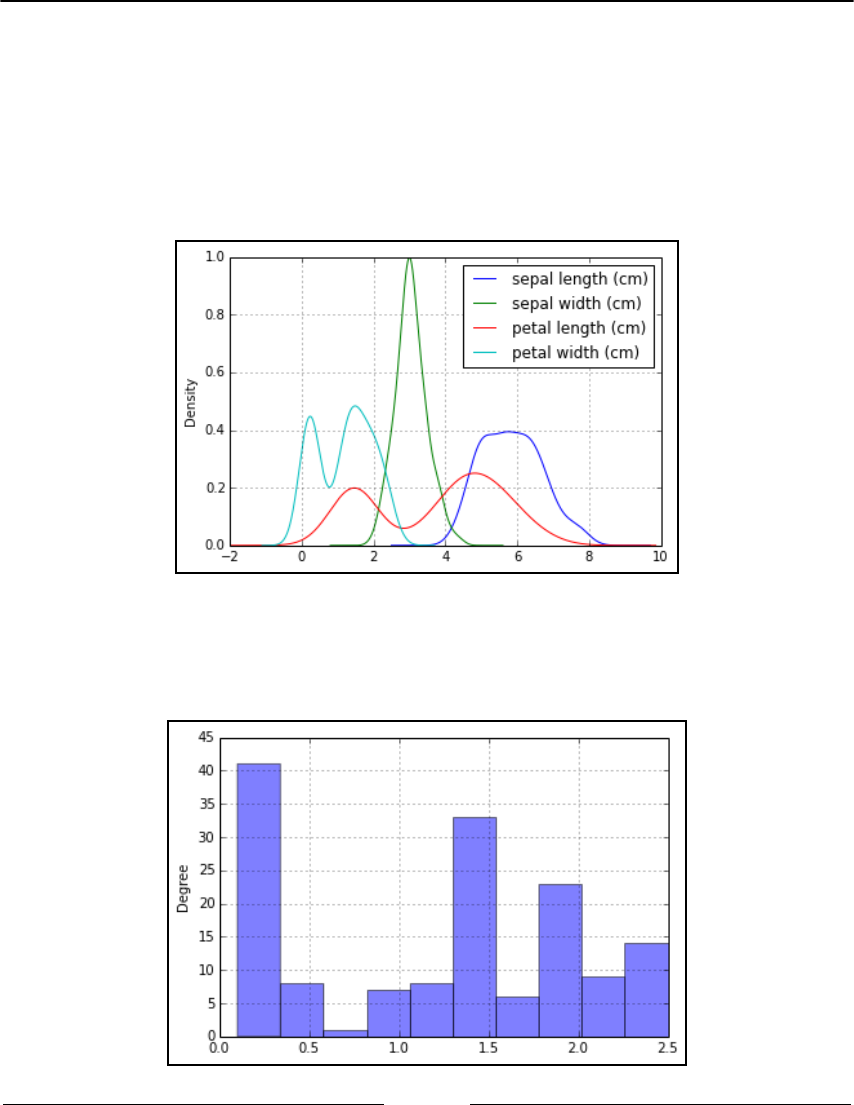

Introducing the basics of matplotlib

282

Trying curve plotting

284

Using panels for clearer representations

286

Plotting scatterplots for relationships in data

288

Histograms

289

Bar graphs

291

Image visualization

292

Selected graphical examples with pandas

295

Working with boxplots and histograms

296

Plotting scatterplots

299

Discovering patterns by parallel coordinates

302

Wrapping up matplotlib's commands

303

Introducing Seaborn

304

Enhancing your EDA capabilities

310

Advanced data learning representation

318

Learning curves

318

Validation curves

321

Feature importance for RandomForests

322

Gradient Boosting Trees partial dependence plotting

325

Creating a prediction server with machine-learning-as-a-service

326

Summary

331

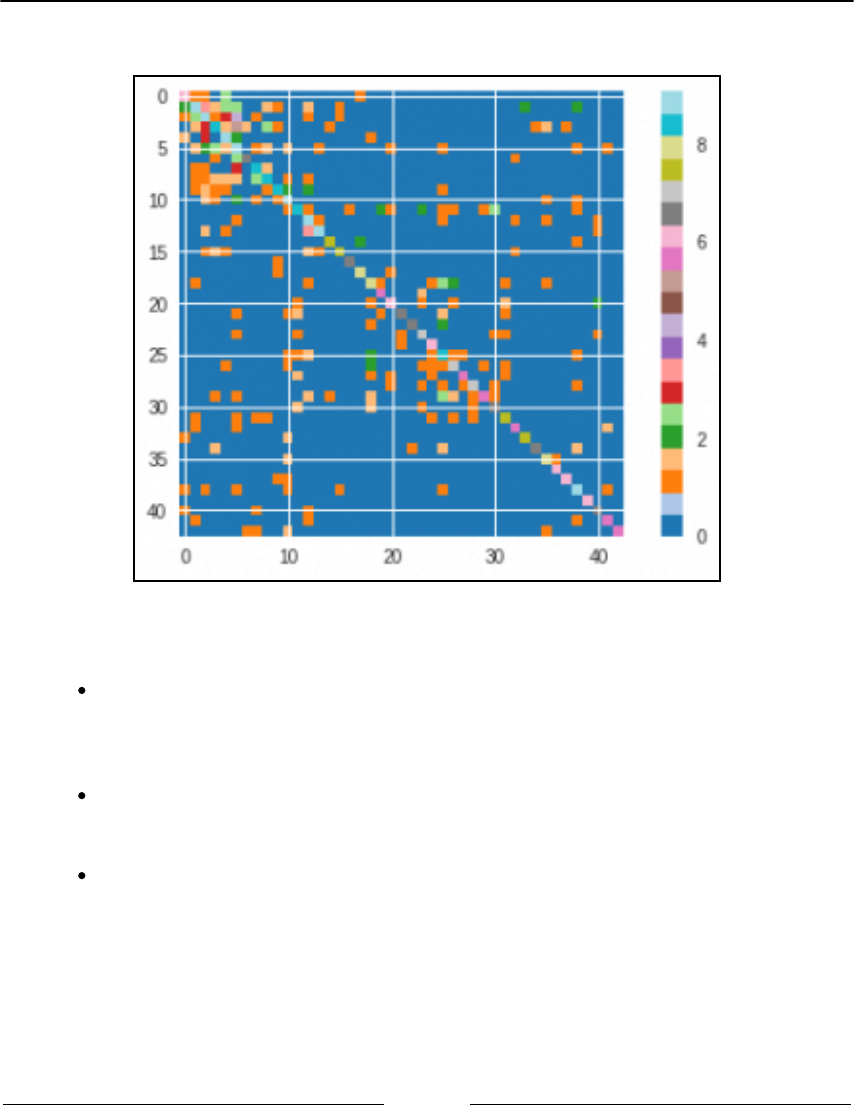

Chapter 6: Social Network Analysis

333

Introduction to graph theory

333

Graph algorithms

340

Types of node centrality

343

Partitioning a network

347

Graph loading, dumping, and sampling

350

Summary

354

Chapter 7: Deep Learning Beyond the Basics

355

Approaching deep learning

355

Classifying images with CNN

359

Using pre-trained models

371

Working with temporal sequences

375

Summary

378

Chapter 8: Spark for Big Data

379

From a standalone machine to a bunch of nodes

380

Making sense of why we need a distributed framework

381

The Hadoop ecosystem

382

Hadoop architecture

382

Hadoop Distributed File System

384

MapReduce

385

Introducing Apache Spark

387

PySpark

387

Starting with PySpark

388

Setting up your local Spark instance

388

Experimenting with Resilient Distributed Datasets

391

Sharing variables across cluster nodes

398

Read-only broadcast variables

398

Write-only accumulator variables

399

Broadcast and accumulator variables together—an example

400

Data preprocessing in Spark

403

CSV files and Spark DataFrames

403

Dealing with missing data

405

Grouping and creating tables in-memory

406

Writing the preprocessed DataFrame or RDD to disk

408

Working with Spark DataFrames

409

Machine learning with Spark

411

Spark on the KDD99 dataset

411

Reading the dataset

412

Feature engineering

415

Training a learner

418

Evaluating a learner's performance

420

The power of the machine learning pipeline

420

Manual tuning

422

Cross-validation

425

Final cleanup

427

Summary

428

Appendix A: Strengthen Your Python Foundations

429

Your learning list

430

Lists

430

Dictionaries

432

Defining functions

434

Classes, objects, and object-oriented programming

436

Exceptions

437

Iterators and generators

439

Conditionals

440

Comprehensions for lists and dictionaries

440

Learn by watching, reading, and doing

441

Massive open online courses (MOOCs)

441

PyCon and PyData

442

Interactive Jupyter

442

Don't be shy, take a real challenge

443

Other Books You May Enjoy

444

Index

447

Preface

"A journey of a thousand miles begins with a single step."

– Laozi (604 BC - 531 BC)

Data science is a relatively new knowledge domain that requires the successful integration

of linear algebra, statistical modeling, visualization, computational linguistics, graph

analysis, machine learning, business intelligence, and data storage and retrieval.

The Python programming language, having conquered the scientific community during the

last decade, is now an indispensable tool for the data science practitioner and a must-have

tool for every aspiring data scientist. Python will offer you a fast, reliable, cross-platform,

and mature environment for data analysis, machine learning, and algorithmic problem

solving. Whatever stopped you before from mastering Python for data science applications

will be easily overcome by our easy, step-by-step, and example-oriented approach, which

will help you apply the most straightforward and effective Python tools to both

demonstrative and real-world datasets.

As the third edition of Python Data Science Essentials, this book offers updated and

expanded content. Based on the recent Jupyter Notebook and JupyterLab interface

(incorporating interchangeable kernels, a truly polyglot data science system), this book

incorporates all the main recent improvements in NumPy, pandas, and scikit-learn.

Additionally, it offers new content in the form of new GBM algorithms (XGBoost,

LightGBM, and CatBoost), deep learning (by presenting Keras solutions based on

TensorFlow), beautiful visualizations (mostly due to seaborn), and web deployment (using

bottle).

This book starts by showing you how to set up your essential data science toolbox in

Python's latest version (3.6), using a single-source approach (implying that the book's code

will be easily reusable on Python 2.7 as well). Then, it will guide you across all the data

munging and preprocessing phases in a manner that explains all the core data science

activities related to loading data, transforming, and fixing it for analysis, and

exploring/processing it. Finally, the book will complete its overview by presenting you with

the principal machine learning algorithms, graph analysis techniques, and all the

visualization and deployment instruments that make it easier to present your results to an

audience of both data science experts and business users.

Who this book is for

If you are an aspiring data scientist and you have at least a working knowledge of data analysis and Python, this book will get you started in data science. Data analysts with experience in R or MATLAB/GNU Octave will also find the book to be a comprehensive reference to enhance their data manipulation and machine learning skills.

What this book covers

Chapter 1, First Steps, introduces Jupyter Notebook and demonstrates how you can access the data used in the tutorials.

Chapter 2, Data Munging, presents all the key data manipulation and transformation

techniques, highlighting best practices for munging activities.

Chapter 3, The Data Pipeline, discusses all the operations that can potentially improve data

science project results, rendering the reader capable of advanced data operations.

Chapter 4, Machine Learning, presents the most important learning algorithms available through the scikit-learn library. The reader will be shown practical applications, what is important to check, and which parameters to tune to get the best from each learning technique.

Chapter 5, Visualization, Insights, and Results, offers you basic and upper-intermediate

graphical representations, indispensable for representing and visually understanding

complex data structures and results obtained from machine learning.

Chapter 6, Social Network Analysis, provides the reader with practical and effective skills for

handling data representing social relations and interactions.

Chapter 7, Deep Learning Beyond the Basics, demonstrates how to build a convolutional

neural network from scratch, introduces all the tools of the trade to enhance your deep

learning models, and explains how transfer learning works, as well as how to use recurrent

neural networks for classifying text and predicting series.

Chapter 8, Spark for Big Data, introduces a new way to process data: scaling big data horizontally. This means running a cluster of machines on which the Hadoop and Spark frameworks are installed.

Appendix, Strengthen Your Python Foundations, covers a few Python examples and tutorials focused on the key features of the language that are indispensable for working on data science projects.

To get the most out of this book

In order to get the most out of this book, you will need the following:

A familiarity with the basic Python syntax and data structures (for example, lists

and dictionaries)

Some knowledge about data analysis, especially regarding descriptive statistics

You can build up both of these skills as you read the book, though the book does not go too deeply into the details; instead, it provides only the essentials for most of the techniques that a data scientist has to know in order to be successful in their projects.

You will also need the following:

A computer with a Windows, macOS, or Linux operating system and at least 8

GB of memory (if you have just 4 GB on your machine, you should be fine with

most examples anyway)

A GPU installed on your computer if you want to speed up the computations

you will find in Chapter 7, Deep Learning Beyond the Basics.

A Python 3.6 installation, preferably from Anaconda (https://www.anaconda.com/download/)
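
A quick way to confirm that a machine meets the Python requirement listed above is to ask the interpreter itself. The following is a minimal sketch rather than part of the book's code bundle: the function names python_is_recent_enough and describe_environment are hypothetical, and only standard library calls are used.

```python
import platform
import sys

# Minimum version taken from the requirement stated in the text (Python 3.6).
MIN_PYTHON = (3, 6)


def python_is_recent_enough(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True when the (major, minor) version meets the minimum."""
    return tuple(version_info[:2]) >= tuple(minimum)


def describe_environment():
    """Collect a few facts about the running interpreter and operating system."""
    return {
        "python": platform.python_version(),
        "system": platform.system(),    # e.g. 'Windows', 'Darwin' (macOS), 'Linux'
        "machine": platform.machine(),
        "ok": python_is_recent_enough(),
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```

The check compares only the major and minor numbers, so any 3.6.x or later release passes. Memory and GPU availability are not covered here, because the standard library offers no portable call for either.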

Download the example code files

You can download the example code files for this book from your account at

www.packt.com. If you purchased this book elsewhere, you can visit

www.packt.com/support and register to have the files emailed directly to you.

You can download the code files by following these steps:

1. Log in or register at www.packt.com.

2. Select the SUPPORT tab.

3. Click on Code Downloads & Errata.

4. Enter the name of the book in the Search box and follow the onscreen instructions.

Once the file is downloaded, please make sure that you unzip or extract the folder using the

latest version of:

WinRAR/7-Zip for Windows

Zipeg/iZip/UnRarX for Mac

7-Zip/PeaZip for Linux

The code bundle for the book is also hosted on GitHub at https://github.com/PacktPublishing/Python-Data-Science-Essentials-Third-Edition. In case there's an update to the code, it will be updated on the existing GitHub repository.

We also have other code bundles from our rich catalog of books and videos available

at https://github.com/PacktPublishing/. Check them out!

Download the color images

We also provide a PDF file that has color images of the screenshots/diagrams used in this book. You can download it here: http://www.packtpub.com/sites/default/files/downloads/9781789537864_ColorImages.pdf.

Conventions used

There are a number of text conventions used throughout this book.

CodeInText: Indicates code words in the text, database table names, folder names,

filenames, file extensions, pathnames, dummy URLs, user input, and Twitter handles. Here

is an example: "Mount the downloaded WebStorm-10*.dmg disk image file as another disk

in your system."

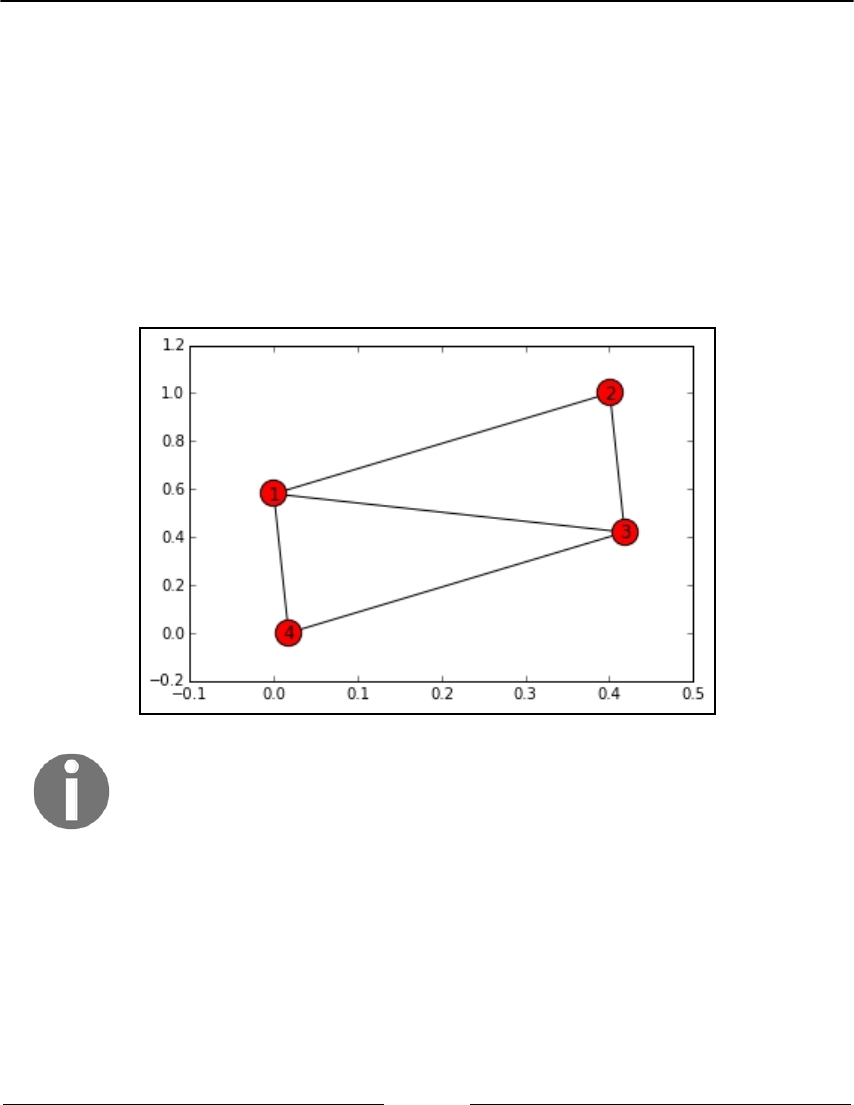

A block of code is set as follows:

In: G.add_edge(3,4)
    G.add_edges_from([(2, 3), (4, 1)])
    nx.draw_networkx(G)
    plt.show()

Bold: Indicates a new term, an important word, or words that you see onscreen. For

example, words in menus or dialog boxes appear in the text like this. Here is an example:

"Select System info from the Administration panel."

Warnings or important notes appear like this.

Tips and tricks appear like this.

Get in touch

Feedback from our readers is always welcome.

General feedback: If you have questions about any aspect of this book, mention the book

title in the subject of your message and email us at [email protected].

Errata: Although we have taken every care to ensure the accuracy of our content, mistakes

do happen. If you have found a mistake in this book, we would be grateful if you would

report this to us. Please visit www.packt.com/submit-errata, selecting your book, clicking

on the Errata Submission Form link, and entering the details.

Piracy: If you come across any illegal copies of our works in any form on the Internet, we

would be grateful if you would provide us with the location address or website name.

Please contact us at [email protected] with a link to the material.

If you are interested in becoming an author: If there is a topic that you have expertise in

and you are interested in either writing or contributing to a book, please visit

authors.packtpub.com.

Reviews

Please leave a review. Once you have read and used this book, why not leave a review on

the site that you purchased it from? Potential readers can then see and use your unbiased

opinion to make purchase decisions, we at Packt can understand what you think about our

products, and our authors can see your feedback on their book. Thank you!

For more information about Packt, please visit packt.com.

1

First Steps

Whether you are an eager learner of data science or a well-grounded data science

practitioner, you can take advantage of this essential introduction to Python for data

science. You can use it to the fullest if you already have at least some previous experience in

basic coding, in writing general-purpose computer programs in Python, or in some other

data-analysis-specific language such as MATLAB or R.

This book delves directly into Python for data science, providing you with a straight and fast route to solving various data science problems using Python and its powerful data analysis and machine learning packages. The code examples provided in this book don't require you to be a master of Python. However, they assume that you know at least the basics of Python scripting, including data structures such as lists and dictionaries, and the workings of class objects. If you don't feel confident about these subjects or have minimal knowledge of the Python language, we suggest that you take an online tutorial before reading this book. Good options include the Codecademy course at https://www.codecademy.com/learn/learn-python, Google's Python class at https://developers.google.com/edu/python/, and A Whirlwind Tour of Python by Jake VanderPlas (https://github.com/jakevdp/WhirlwindTourOfPython). All of these courses are free and, in a matter of a few hours of study, they should provide you with all the building blocks that will ensure you enjoy this book to the fullest. To complement these free courses, we have also prepared a tutorial of our own, which can be found in the appendix of this book.

In any case, don't be intimidated by our starting requirements; mastering Python enough

for data science applications isn't as arduous as you may think. It's just that we have to

assume some basic knowledge on the reader's part because our intention is to go straight to

the point of doing data science without having to explain too much about the general

aspects of the Python language that we will be using.

Are you ready, then? Let's get started!

In this short introductory chapter, we will work through the basics to set off in full swing

and go through the following topics:

How to set up a Python data science toolbox

Using Jupyter

An overview of the data that we are going to study in this book

Introducing data science and Python

Data science is a relatively new knowledge domain, though its core components have been

studied and researched for many years by the computer science community. Its

components include linear algebra, statistical modeling, visualization, computational

linguistics, graph analysis, machine learning, business intelligence, and data storage and

retrieval.

Data science is a new domain, and you have to take into consideration that, currently, its

frontiers are still somewhat blurred and dynamic. Since data science is made of various

constituent sets of disciplines, please also keep in mind that there are different profiles of

data scientists depending on their competencies and areas of expertise (for instance, you

may read the illustrative There’s More Than One Kind of Data Scientist by Harlan D Harris at

radar.oreilly.com/2013/06/theres-more-than-one-kind-of-data-scientist.html, or

delve into the discussion about type A or B data scientists and other interesting taxonomies

at https://stats.stackexchange.com/questions/195034/what-is-a-data-scientist).

In such a situation, what can be the best tool of the trade that you can learn and effectively

use in your career as a data scientist? We believe that the best tool is Python, and we intend

to provide you with all the essential information that you will need for a quick start.

Other programming languages, such as R and MATLAB, provide data scientists with specialized tools to solve specific problems in statistical analysis and matrix manipulation. However, only Python really completes your data scientist skill set with all the key techniques in a scalable and effective way. This multipurpose language is suitable for both development and production; it can handle small- to large-scale data problems, and it is easy to learn and grasp, no matter what your background or experience is.

Created in 1991 as a general-purpose, interpreted, and object-oriented language, Python has slowly and steadily conquered the scientific community and grown into a mature ecosystem of specialized packages for data processing and analysis. It allows you to run countless fast experiments, develop theories easily, and deploy scientific applications promptly.

At present, the core Python characteristics that render it an indispensable data science tool

are as follows:

It offers a large, mature system of packages for data analysis and machine

learning. It guarantees that you will get all that you may need in the course of a

data analysis, and sometimes even more.

Python can easily integrate different tools and offers a truly unifying ground for

different languages, data strategies, and learning algorithms that can be fitted

together easily and which can concretely help data scientists forge powerful

solutions. There are packages that allow you to call code in other languages (in

Java, C, Fortran, R, or Julia), outsourcing some of the computations to them and

improving your script performance.

It is very versatile. No matter what your programming background or style is

(object-oriented, procedural, or even functional), you will enjoy programming

with Python.

It is cross-platform; your solutions will work perfectly and smoothly on

Windows, Linux, and macOS systems. You won't have to worry all that much

about portability.

Although interpreted, it is fast compared to other mainstream data analysis languages such as R and MATLAB (though it is not comparable to C, Java, and the newly emerged Julia language). Moreover, there are static compilers such as Cython, which translates Python code into C, and just-in-time compilers such as PyPy, which compiles frequently executed code into machine code at runtime, for higher performance.

It can work with large in-memory data because of its minimal memory footprint

and excellent memory management. The memory garbage collector will often

save the day when you load, transform, dice, slice, save, or discard data using

various iterations and reiterations of data wrangling.

It is very simple to learn and use. After you grasp the basics, there's no better

way to learn more than by immediately starting with the coding.

Moreover, the number of data scientists using Python is continuously growing: the community releases new packages and improvements every day, making the Python ecosystem increasingly prolific and rich for data science.
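
The versatility mentioned in the list above can be illustrated with a small sketch that computes the same arithmetic mean in procedural, object-oriented, and functional styles. The sample values and all the names used here (mean_procedural, RunningMean, and so on) are made up for illustration and do not come from the book's code.

```python
from functools import reduce

values = [2.0, 4.0, 6.0, 8.0]  # illustrative sample data


# Procedural style: an explicit loop and an accumulator variable.
def mean_procedural(data):
    total = 0.0
    for x in data:
        total += x
    return total / len(data)


# Object-oriented style: state and behaviour bundled in a class.
class RunningMean:
    def __init__(self):
        self.total = 0.0
        self.count = 0

    def update(self, x):
        self.total += x
        self.count += 1
        return self

    @property
    def value(self):
        return self.total / self.count


def mean_object_oriented(data):
    tracker = RunningMean()
    for x in data:
        tracker.update(x)
    return tracker.value


# Functional style: a fold over the sequence, with no mutable state of our own.
def mean_functional(data):
    return reduce(lambda acc, x: acc + x, data, 0.0) / len(data)


print(mean_procedural(values), mean_object_oriented(values), mean_functional(values))
```

All three functions return the same result for the same input; which style to prefer is largely a matter of background and of how much state the surrounding program needs to keep.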

Installing Python

First, let's proceed and introduce all the settings you need in order to create a fully working

data science environment to test the examples and experiment with the code that we are

going to provide you with.

Python is an open source, object-oriented, and cross-platform programming language.

Compared to some of its direct competitors (for instance, C++ or Java), Python is very

concise. It allows you to build a working software prototype in a very short time, and yet it

has become the most used language in the data scientist's toolbox not just because of that. It

is also a general-purpose language, and it is very flexible due to a variety of available

packages that solve a wide spectrum of problems and necessities.

Python 2 or Python 3?

There are two main branches of Python: 2.7.x and 3.x. At the time of the revision of this third edition of the book, the Python Software Foundation (www.python.org/) is offering downloads for Python version 2.7.15 (release date May 1, 2018) and 3.6.5 (release date March 28, 2018). Although Python 3 is the newer branch, the older Python 2 was still in use in both scientific (20% adoption) and commercial (30% adoption) areas in 2017, as depicted in detail by this survey by JetBrains: https://www.jetbrains.com/research/python-developers-survey-2017. If you are still using Python 2, the situation could turn quite problematic soon, because Python 2 will be retired and its maintenance will cease in 2020 (pythonclock.org/ will provide you with the countdown, but for an official statement about this, just read https://www.python.org/dev/peps/pep-0373/). Moreover, only a handful of libraries are still incompatible with Python 3 (py3readiness.org/), which is not reason enough to stay with the older version.

In addition to all these reasons, there is no immediate backward compatibility between

Python 3 and 2. In fact, if you try to run some code developed for Python 2 with a Python 3

interpreter, it may not work. Major changes have been made to the newest version, and that

has affected past compatibility. Some data scientists, having built most of their work on

Python 2 and its packages, are reluctant to switch to the new version.

In this third edition of the book, we will continue to address the larger audience of data

scientists, data analysts, and developers, who do not have such a strong legacy with Python

2. Consequently, we will continue working with Python 3, and we suggest using a version

such as the most recently available Python 3.6. After all, Python 3 is the present and the

future of Python. It is the only version that will be further developed and improved by the

Python foundation, and it will be the default version of the future on many operating

systems.

First Steps Chapter 1

[ 10 ]

Anyway, if you are currently working with version 2 and you prefer to keep on working

with it, you can still use this book and all of its examples. In fact, for the most part, our code

will simply work on Python 2 after having the code itself preceded by these imports:

from __future__ import (absolute_import, division,
                        print_function, unicode_literals)
from builtins import *
from future import standard_library
standard_library.install_aliases()

The from __future__ import commands should always occur at the

beginning of your scripts, or else you may experience Python reporting an

error.

As described on the Python-future website (python-future.org), these imports will help

convert several Python 3-only constructs to a form that's compatible with both Python 3

and Python 2 (and in any case, most Python 3 code should just simply work on Python 2,

even without the aforementioned imports).
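To make the effect of two of these imports more concrete, here is a minimal sketch of the Python 3 behavior that division and print_function bring to Python 2. It runs unchanged on Python 3; on Python 2, the same results assume that the from __future__ import line is the first statement of the script. The variable names are illustrative only.

```python
# Python 3 semantics that `from __future__ import division, print_function`
# back-ports to Python 2 (assumption: on Python 2 that import comes first).

true_quotient = 7 / 2     # true division: always returns a float
floor_quotient = 7 // 2   # floor division: explicit integer result

# print is a function, so it accepts keyword arguments such as sep
print(true_quotient, floor_quotient, sep=" | ")
```

Without the division import, Python 2 would evaluate 7 / 2 as integer division, which is a common source of silent differences between the two branches.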

In order to run the preceding commands successfully, if the future package is not already
available on your system, you should install it (version >= 0.15.2) by using the following
command, which is to be executed from a shell:

$> pip install -U future

If you're interested in understanding the differences between Python 2 and Python 3

further, we recommend reading the wiki page offered by the Python foundation itself:

https://wiki.python.org/moin/Python2orPython3.

Step-by-step installation

Novice data scientists who have never used Python (who likely don't have the language

readily installed on their machines) need to first download the installer from the main

website of the project, www.python.org/downloads/, and then install it on their local

machine.

This section provides you with full control over what can be installed on

your machine. This is very useful when you have to set up single

machines to deal with different tasks in data science. Anyway, please be

warned that a step-by-step installation really takes time and effort.

Instead, installing a ready-made scientific distribution will lessen the

burden of installation procedures and it may be well-suited for first

starting and learning because it saves you time and sometimes even

trouble, though it will put a large number of packages (and we won't use

most of them) on your computer all at once. Therefore, if you want to start

immediately with an easy installation procedure, just skip this part and

proceed to the next section, Scientific distributions.

Since Python is a multiplatform programming language, you'll find installers for machines
that run either Windows or Unix-like operating systems.

Remember that some of the latest versions of most Linux distributions (such as CentOS,
Fedora, Red Hat Enterprise, and Ubuntu) have Python 2 packaged in the repository. In
such a case, and in the case that you already have a Python version on your computer,
you first have to check exactly which version you are running, since our examples run on
Python 3. To do such a check, just follow these instructions:

1. Open a Python shell: type python in the terminal, or click on any Python icon
you find on your system.

2. Then, after starting Python, to test the installation, run the following code in the
Python interactive shell or REPL:

>>> import sys
>>> print(sys.version_info)

3. If you can read that your Python version has the major=2 attribute, it means that
you are running a Python 2 instance. Otherwise, if the attribute is valued 3, or if
the print statement reports back to you something like v3.x.x (for instance,
v3.5.1), you are running the right version of Python, and you are ready to move
forward.
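The same check can also be placed at the top of a script, so that it stops early on the wrong interpreter. The following is only a sketch: the function name running_python3 and the error message are our own choices, not something prescribed by Python.

```python
import sys


def running_python3(version_info=None):
    """Return True when the major version is 3 or later."""
    if version_info is None:
        version_info = sys.version_info
    # version_info behaves like a tuple: (major, minor, micro, ...)
    return version_info[0] >= 3


if not running_python3():
    raise SystemExit("Python 3 is required to run the examples.")
```

Passing a tuple such as (2, 7, 15) to the function lets you try the logic without switching interpreters.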

To clarify the operations we have just mentioned, when a command is given in the terminal

command line, we prefix the command with $>. Otherwise, if it's for the Python REPL, it's

preceded by >>>.

Installing the necessary packages

Python won't come bundled with everything you need unless you take a specific pre-made
distribution. Therefore, to install the packages you need, you can use either pip or
easy_install. Both tools run in the command line and make the process of installing,
upgrading, and removing Python packages a breeze. To check which tools have been
installed on your local machine, run the following command:

$> pip

To install pip, follow the instructions given

at https://pip.pypa.io/en/latest/installing/.

Alternatively, you can also run the following command:

$> easy_install

If both of these commands end with an error, you need to install at least one of them. We
recommend pip because it is regarded as an improvement over easy_install. Moreover,
easy_install is going to be dropped in the future, and pip has important advantages
over it. It is preferable to install everything using pip for the following reasons:

It is the preferred package manager for Python 3. Starting with Python 2.7.9 and

Python 3.4, it is included by default with the Python binary installers

It provides an uninstall functionality

It rolls back and leaves your system clear if, for whatever reason, the package's

installation fails

Using easy_install in spite of the advantages of pip makes sense if you
are working on Windows, because pip won't always install pre-compiled
binary packages. Sometimes, it will try to build the package's extensions
directly from C source, thus requiring a properly configured compiler
(and that's not an easy task on Windows). This depends on whether the
package is distributed as an egg (pip cannot use egg binaries directly
and needs to build from source code) or as a wheel (in this case, pip
can install binaries if available, as explained
here: http://pythonwheels.com/). By contrast, easy_install will always
install available binaries from eggs and wheels. Therefore, if you are
experiencing unexpected difficulties installing a package, easy_install
can save your day (at some price, anyway, as we just mentioned in the
list).

The most recent versions of Python should already have pip installed by default.
Therefore, you may have it already installed on your system. If not, the safest way is to
download the get-pip.py script from https://bootstrap.pypa.io/get-pip.py and then
run it by using the following:

$> python get-pip.py

The script will also install setuptools (pypi.org/project/setuptools), which also
contains easy_install.

You're now ready to install the packages you need in order to run the examples provided in
this book. To install the <package-name> generic package, you just need to run the
following command:

$> pip install <package-name>

Alternatively, you can run the following command:

$> easy_install <package-name>

Note that, in some systems, pip might be named as pip3 and easy_install as

easy_install-3 to stress the fact that both operate on packages for Python 3. If you're

unsure, check the version of Python that pip is operating on with:

$> pip -V

For easy_install, the command is slightly different:

$> easy_install --version

After you run the installation command, the <package-name> package and all its
dependencies will be downloaded and installed. If you're not certain whether a library has
been installed or not, just try to import a module inside it. If the Python interpreter raises
an ImportError (reported as its subclass ModuleNotFoundError on Python 3.6 and later),
it can be concluded that the package has not been installed.

This is what happens when the NumPy library has been installed:

>>> import numpy

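This is what happens if it's not installed (the output shown is from Python 3.6, where the
error is ModuleNotFoundError, a subclass of ImportError):

>>> import numpy
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ModuleNotFoundError: No module named 'numpy'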

In the latter case, you'll need to first install it through pip or easy_install.
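If you prefer to test for a module without actually importing it, the standard library offers importlib.util.find_spec, which returns None when a top-level module cannot be located. The helper below is a small sketch built on it; the name is_installed is our own.

```python
import importlib.util


def is_installed(module_name):
    """Return True if a top-level module can be located, without importing it."""
    return importlib.util.find_spec(module_name) is not None


# json ships with Python; numpy is present only if you have installed it
for name in ("json", "numpy"):
    status = "available" if is_installed(name) else "missing"
    print(name, "is", status)
```

This approach is handy in setup scripts, where importing a large library just to see whether it exists would be wasteful.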

Take care that you don't confuse packages with modules. With pip, you

install a package; in Python, you import a module. Sometimes, the

package and the module have the same name, but in many cases, they

don't match. For example, the sklearn module is included in the package
named scikit-learn.
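One simple way to keep track of such mismatches in your own scripts is a small lookup table. The sketch below covers only the two mismatches mentioned in this chapter (scikit-learn and beautifulsoup4); the dictionary and the function name module_for are illustrative, and the fallback assumes that the package and module names coincide, which is not always true.

```python
# Package (pip) name -> importable module name, for the mismatches
# mentioned in this chapter; extend the dictionary as needed.
MODULE_FOR_PACKAGE = {
    "scikit-learn": "sklearn",
    "beautifulsoup4": "bs4",
}


def module_for(package_name):
    """Return the module to import for a package installed with pip."""
    # Assumption: when a package is not listed, its module has the same name
    return MODULE_FOR_PACKAGE.get(package_name.lower(), package_name)


print(module_for("scikit-learn"))
print(module_for("numpy"))
```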

Finally, to search and browse the packages available for Python, look at pypi.org.

Package upgrades

More often than not, you will find yourself in a situation where you have to upgrade a

package because either the new version is required by a dependency or it has additional

features that you would like to use. First, check the version of the library you have installed
by glancing at the __version__ attribute, as shown in the following example for numpy:

>>> import numpy

>>> numpy.__version__ # 2 underscores before and after

'1.11.0'
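When a script has to decide whether an upgrade is needed, comparing version strings directly can mislead, because strings are compared character by character. A minimal sketch, assuming purely numeric versions such as 1.11.0 (suffixes such as rc1 are not handled), converts them to tuples of integers first. The helper name version_tuple is our own.

```python
def version_tuple(version):
    """Turn a purely numeric version string such as '1.11.0' into (1, 11, 0)."""
    return tuple(int(part) for part in version.split("."))


installed = "1.11.0"   # the value reported by numpy.__version__ in the text
required = "1.12.1"

# Tuples compare number by number, so 11 < 12 is evaluated correctly
needs_upgrade = version_tuple(installed) < version_tuple(required)
print(needs_upgrade)

# As plain strings, "1.9.0" would wrongly look newer than "1.11.0"
print("1.9.0" < "1.11.0", version_tuple("1.9.0") < version_tuple("1.11.0"))
```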

Now, if you want to update it to a newer release, say the 1.12.1 version, you can run the

following command from the command line:

$> pip install -U numpy==1.12.1

Alternatively, you can use the following command:

$> easy_install --upgrade numpy==1.12.1

Finally, if you're interested in upgrading it to the latest available version, simply run the

following command:

$> pip install -U numpy

You can alternatively run the following command:

$> easy_install --upgrade numpy

Scientific distributions

As you've read so far, creating a working environment is a time-consuming operation for a

data scientist. You first need to install Python, and then, one by one, you can install all the

libraries that you will need (sometimes, the installation procedures may not go as smoothly

as you'd hoped for earlier).

If you want to save time and effort and want to ensure that you have a fully working
Python environment that is ready to use, you can just download, install, and use a
scientific Python distribution. Apart from Python, such distributions also include a variety
of preinstalled packages, and sometimes they even have additional tools and an IDE. A few
of them are very well-known among data scientists, and in the sections that follow, you will
find some of the key features of each of these distributions.

We suggest that you first promptly download and install a scientific distribution, such as

Anaconda (which is the most complete one), and after practicing the examples in this book,

decide whether or not to fully uninstall the distribution and set up Python alone, which can

be accompanied by just the packages you need for your projects.

Anaconda

Anaconda (https://www.anaconda.com/download/) is a Python distribution offered by
Anaconda, Inc. (formerly Continuum Analytics) that includes nearly 200 packages, among
them NumPy, SciPy, pandas, Jupyter, Matplotlib, Scikit-learn, and NLTK. It's a
cross-platform distribution (Windows, Linux, and macOS) that can be installed on machines
with other existing Python distributions and versions. Its base version is free, whereas
add-ons that contain advanced features are charged separately. Anaconda introduces conda,
a binary package manager, as a command-line tool to manage your package installations.

As stated on the website, Anaconda's goal is to provide an enterprise-ready Python
distribution for large-scale processing, predictive analytics, and scientific computing.

Leveraging conda to install packages

If you've decided to install an Anaconda distribution, you can take advantage of the conda

binary installer we mentioned previously. conda is an open source package management

system, and consequently, it can be installed separately from an Anaconda distribution. The

core difference from pip is that conda can be used to install any package (not just Python's

ones) in a conda environment (that is, an environment where you have installed conda and

you are using it for providing packages). There are many advantages in using conda over
pip, as described by Jake VanderPlas in this famous blog post of
his: jakevdp.github.io/blog/2016/08/25/conda-myths-and-misconceptions.

You can test immediately whether conda is available on your system. Open a shell and
type the following:

$> conda -V

If conda is available, your version will appear; otherwise, an error will be reported. If

conda is not available, you can quickly install it on your system by going to

http://conda.pydata.org/miniconda.html and installing the Miniconda software that's

suitable for your computer. Miniconda is a minimal installation that only includes conda

and its dependencies.
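The availability checks for conda, pip, and easy_install can also be done from Python itself. The sketch below relies on shutil.which from the standard library, which looks for an executable on the system PATH; the helper name tool_available is our own, and the result naturally depends on how your machine is configured.

```python
import shutil


def tool_available(command):
    """Return True if an executable with this name is found on the PATH."""
    return shutil.which(command) is not None


for command in ("pip", "easy_install", "conda"):
    print(command, "found" if tool_available(command) else "not found")
```

Note that this only confirms that a command exists; it does not tell you which Python installation the tool operates on.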

Conda can help you manage two tasks: installing packages and creating virtual
environments. In this section, we will explore how conda can help you easily install
most of the packages you may need in your data science projects.

Before starting, please check that you have the latest version of conda at hand:

$> conda update conda

Now you can install any package you need. To install the <package-name> generic

package, you just need to run the following command:

$> conda install <package-name>

You can also install a particular version of the package just by pointing it out:

$> conda install <package-name>=1.11.0

Similarly, you can install multiple packages at once by listing all their names:

$> conda install <package-name-1> <package-name-2>

If you just need to update a package that you previously installed, you can keep on using

conda:

$> conda update <package-name>

You can update all the available packages simply by using the --all argument:

$> conda update --all

Finally, conda can also uninstall packages for you:

$> conda remove <package-name>

If you would like to know more about conda, you can read its documentation at
http://conda.pydata.org/docs/index.html. In summary, its main advantage is that it
handles binaries even better than easy_install (on Windows, it installs pre-built
packages without any need to compile them from source) but without the problems and
limitations of easy_install. With conda, packages are easy to install, update, and even
uninstall. On the other hand, conda cannot install directly from a git server (so it cannot
access the latest version of many packages under development), and it doesn't cover all
the packages available on PyPI, as pip does.

Enthought Canopy

Enthought Canopy (https://www.enthought.com/products/canopy/) is a Python

distribution by Enthought Inc. It includes more than 200 preinstalled packages, such as

NumPy, SciPy, Matplotlib, Jupyter, and pandas. This distribution is targeted at engineers,

data scientists, quantitative and data analysts, and enterprises. Its base version, named
Canopy Express, is free, but if you need advanced features, you have to buy the full
version. It's a multi-platform distribution, and its command-line installation tool is
canopy_cli.

WinPython

WinPython (http://winpython.github.io/) is a free, open-source Python distribution

that's maintained by the community. It is designed for scientists and includes many

packages such as NumPy, SciPy, Matplotlib, and Jupyter. It also includes Spyder as an IDE.

It is also portable: you can put WinPython into any directory, or even onto a USB flash

drive, and at the same time maintain multiple copies and versions of it on your system. It

only works on Microsoft Windows, and its command-line tool is the WinPython Package

Manager (WPPM).

Explaining virtual environments

No matter whether you have chosen to install a standalone Python or instead used a

scientific distribution, you may have noticed that you are actually bound on your system to

the Python version you have installed. The only exception, for Windows users, is to use a

WinPython distribution, since it is a portable installation and you can have as many

different installations as you need.

A simple solution to breaking free of such a limitation is to use virtualenv, which is a tool

for creating isolated Python environments. That means, by using different Python

environments, you can easily achieve the following things:

Testing any new package installation or doing experimentation on your Python

environment without any fear of breaking anything in an irreparable way. In this

case, you need a version of Python that acts as a sandbox.

Having at hand multiple Python versions (both Python 2 and Python 3), geared

with different versions of installed packages. This can help you in dealing with

different versions of Python for different purposes (for instance, some of the

packages we are going to present on Windows OS only work when using Python

3.4, which is not the latest release).

Taking a replicable snapshot of your Python environment easily and having your

data science prototypes work smoothly on any other computer or in production.

In this case, your main concern is the immutability and replicability of your

working environment.

You can find documentation about virtualenv at

http://virtualenv.readthedocs.io/en/stable/, though we are going to provide you

with all the directions you need to start using it immediately. In order to take advantage of

virtualenv, you first have to install it on your system:

$> pip install virtualenv

After the installation completes, you can start building your virtual environments. Before

proceeding, you have to make a few decisions:

If you have more versions of Python installed on your system, you have to
decide which version to pick. Otherwise, virtualenv will take the Python
version that was used when virtualenv was installed on your system. In order
to set a different Python version, pass the argument -p followed by
the version of Python you want, or by the path of the Python executable to be
used (for instance, -p python2.7, or a path to a Python
executable such as -p c:\Anaconda2\python.exe).

With virtualenv, when required to install a certain package, it will install it

from scratch, even if it is already available at a system level (on the python

directory you created the virtual environment from). This default behavior

makes sense because it allows you to create a completely separated empty

environment. In order to save disk space and limit the time of installation of all

the packages, you may instead decide to take advantage of already available

packages on your system by using the argument --system-site-packages.

You may want to be able to later move around your virtual environment across

Python installations, even among different machines. Therefore, you may want

to make the functionality of all of the environment's scripts relative to the path it

is placed in by using the argument --relocatable.

After deciding on the Python version you wish to use, linking to existing global packages,

and the virtual environment being relocatable or not, in order to start, you just need to

launch the command from a shell. Declare the name you would like to assign to your new

environment:

$> virtualenv clone

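virtualenv will just create a new directory using the name you provided, in the path from
which you actually launched the command. To start using it, enter the directory and run
its activation script. On Windows, the script is in the Scripts subdirectory:

$> cd clone
$> Scripts\activate

On Linux and macOS, it is in the bin subdirectory and has to be sourced:

$> cd clone
$> source bin/activate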

At this point, you can start working on your separated Python environment, installing

packages, and working with code.
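If you would rather create environments from Python code, note that Python 3 ships a similar tool in its standard library, the venv module. It is a different tool from virtualenv, so the following is a sketch of the same idea and not of virtualenv itself. The function name create_environment is our own, and the system_site_packages parameter plays the role of the --system-site-packages argument described previously.

```python
import os
import venv


def create_environment(parent_dir, name="clone", system_site_packages=False):
    """Create an isolated environment with the standard venv module."""
    env_dir = os.path.join(parent_dir, name)
    # with_pip=False keeps the sketch fast; pass with_pip=True to bootstrap pip
    venv.create(env_dir, system_site_packages=system_site_packages,
                with_pip=False)
    return env_dir
```

For example, create_environment(".") builds a clone directory in the current path; it is activated in the same way as a virtualenv environment.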

If you need to install multiple packages at once, you can rely on a pip command,
pip freeze, which lists all the packages (and their versions) you have installed
on your system. You can record the entire list in a text file by using the following
command:

$> pip freeze > requirements.txt

After saving the list in a text file, just take it into your virtual environment and install all the

packages in a breeze with a single command:

$> pip install -r requirements.txt

Each package will be installed according to the order in the list (packages are listed in a

case-insensitive sorted order). If a package requires other packages that are later in the list,

that's not a big deal because pip automatically manages such situations. So, if your package

requires NumPy and NumPy is not yet installed, pip will install it first.
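The file produced by pip freeze is plain text, typically with one name==version entry per line, so it is easy to inspect from Python. The following sketch parses only that simple pinned form and skips anything else; the function name parse_requirements and the sample content (which reuses version numbers quoted in this chapter) are illustrative.

```python
def parse_requirements(text):
    """Map package names to pinned versions for 'name==version' lines."""
    pinned = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        if "==" in line:
            name, version = line.split("==", 1)
            pinned[name.strip()] = version.strip()
    return pinned


sample = """numpy==1.12.1
scipy==1.1.0
pandas==0.23.1
"""
print(parse_requirements(sample))
```

A dictionary like this makes it straightforward to compare the pinned versions of two environments.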

When you've finished installing packages and using your environment for scripting and

experimenting, in order to return to your system defaults, just issue the following

command:

$> deactivate

If you want to remove the virtual environment completely, after deactivating and getting

out of the environment's directory, you just have to get rid of the environment's directory

itself by performing a recursive deletion. For instance, on Windows, you just do the

following:

$> rd /s /q clone

On Linux and macOS, the command will be as follows:

$> rm -r -f clone
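If you want a single removal routine that works on Windows, Linux, and macOS alike, the recursive deletion can be sketched with shutil.rmtree from the standard library. The function name remove_environment is our own. As with the shell commands, the deletion is permanent, so double-check the path you pass.

```python
import os
import shutil


def remove_environment(env_dir):
    """Recursively delete an environment directory; return True if it existed."""
    if not os.path.isdir(env_dir):
        return False
    shutil.rmtree(env_dir)  # same effect as rd /s /q or rm -r -f
    return True
```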

If you are working extensively with virtual environments, you should

consider using virtualenvwrapper, which is a set of wrappers for

virtualenv in order to help you manage multiple virtual environments

easily. It can be found at bitbucket.org/dhellmann/virtualenvwrapper.

If you are operating on a Unix system (Linux or macOS), another solution
worth mentioning is pyenv, which can be found
at https://github.com/yyuu/pyenv. It lets you set your main Python
version, allows for the installation of multiple versions, and creates virtual
environments. Its distinctive feature is that it does not depend on Python
being installed and works perfectly at the user level (no need for sudo
commands).

Conda for managing environments

If you have installed the Anaconda distribution, or you have tried conda by using a

Miniconda installation, you can also take advantage of the conda command to run virtual

environments as an alternative to virtualenv. Let's see how to use conda for that in

practice. We can check what environments we have available like this:

$> conda info -e

This command will report to you what environments you can use on your system based on

conda. Most likely, your only environment will be root, pointing to your Anaconda

distribution folder.

As an example, we can create an environment based on Python Version 3.6, having all the

necessary Anaconda-packaged libraries installed. This makes sense, for instance, when

installing a particular set of packages for a data science project. In order to create such an

environment, just perform the following:

$> conda create -n python36 python=3.6 anaconda

The preceding command asks for a particular Python version, 3.6, and requires the

installation of all packages that are available on the Anaconda distribution (the argument

anaconda). It names the environment as python36 using the argument -n. The complete

installation should take a while, given a large number of packages in the Anaconda

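installation. After having completed all of the installations, you can activate the
environment (on Linux and macOS, the command is source activate python36; conda 4.4
and later also accept conda activate python36 on every platform):

$> activate python36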

If you need to install additional packages to your environment when activated, you just use

the following:

$> conda install -n python36 <package-name1> <package-name2>

That is, you make the list of the required packages follow the name of your environment.

Naturally, you can also use pip install, as you would do in a virtualenv environment.

You can also use a file instead of listing all the packages by name yourself. You can create a

list in an environment using the list argument and pipe the output to a file:

$> conda list -e > requirements.txt

Then, in your target environment, you can install the entire list by using the following:

$> conda install --file requirements.txt

You can even create an environment, based on a requirements list:

$> conda create -n python36 python=3.6 --file requirements.txt

Finally, after having used the environment, to close the session, you simply use the

following command:

$> deactivate

Contrary to virtualenv, there is a specialized argument in order to completely remove an

environment from your system:

$> conda remove -n python36 --all

A glance at the essential packages

We mentioned previously that the two most relevant characteristics of Python are its ability

to integrate with other languages and its mature package system, which is well embodied

by PyPI (the Python Package Index: pypi.org), a common repository for the majority of

Python open source packages that are constantly maintained and updated.

The packages that we are now going to introduce are strongly analytical, and together they
constitute a complete data science toolbox. All of the packages are made up of extensively
tested functions that are highly optimized for both memory usage and performance. A
walkthrough on how to install them is provided in the following section.

Partially inspired by similar tools present in R and MATLAB environments, we will explore

how a few selected Python commands can allow you to efficiently handle data and then

explore, transform, experiment, and learn from the same without having to write too much

code or reinvent the wheel.

NumPy

NumPy, which is Travis Oliphant's creation, is the true analytical workhorse of the Python
language. It provides the user with multidimensional arrays, along with a large set of
functions to perform a multiplicity of mathematical operations on these arrays. Arrays are
blocks of data that are arranged along multiple dimensions, which implement
mathematical vectors and matrices. Characterized by optimal memory allocation, arrays are
useful not just for storing data, but also for fast matrix operations (vectorization), which
are indispensable when you wish to solve ad hoc data science problems:

Website: http://www.numpy.org/

Version at the time of print: 1.12.1

Suggested install command: pip install numpy

As a convention largely adopted by the Python community, when importing NumPy, it is

suggested that you alias it as np:

import numpy as np

We will be doing this throughout the course of this book.
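As a first taste of what vectorization means in practice, here is a minimal sketch that assumes NumPy is installed. The arrays and their values are invented purely for illustration.

```python
import numpy as np

prices = np.array([10.0, 20.0, 30.0])
quantities = np.array([1, 2, 3])

# Element-wise product computed without an explicit Python loop
revenue = prices * quantities

# Matrix-vector product between a 2 x 3 array and a vector of length 3
matrix = np.array([[1.0, 0.0, 2.0],
                   [0.0, 1.0, 1.0]])
projected = matrix.dot(prices)

print(revenue)
print(projected)
```

Both operations are executed by optimized compiled routines, which is why they are usually much faster than equivalent loops written in pure Python.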

SciPy

An original project by Travis Oliphant, Pearu Peterson, and Eric Jones, SciPy completes
NumPy's functionalities, offering a larger variety of scientific algorithms for linear
algebra, sparse matrices, signal and image processing, optimization, fast Fourier
transformation, and much more:

Website: http://www.scipy.org/

Version at time of print: 1.1.0

Suggested install command: pip install scipy

pandas

The pandas package deals with everything that NumPy and SciPy cannot do. Thanks to its

specific data structures, namely DataFrames and Series, pandas allows you to handle

complex tables of data of different types (which is something that NumPy's arrays cannot

do) and time series. Thanks to Wes McKinney's creation, you will be able to easily and

smoothly load data from a variety of sources. You can then slice, dice, handle missing

elements, add, rename, aggregate, reshape, and finally visualize your data at will:

Website: http://pandas.pydata.org/

Version at the time of print: 0.23.1

Suggested install command: pip install pandas

Conventionally, the pandas package is imported as pd:

import pandas as pd

pandas-profiling

This is a GitHub project that easily allows you to create a report from a pandas DataFrame.

The package will present the following measures in an interactive HTML report, which is

used to evaluate the data at hand for a data science project:

Essentials, such as type, unique values, and missing values

Quantile statistics, such as minimum value, Q1, median, Q3, maximum, range,

and interquartile range

Descriptive statistics such as mean, mode, standard deviation, sum, median

absolute deviation, the coefficient of variation, kurtosis, and skewness

Most frequent values

Histograms

Correlations highlighting highly correlated variables, and Spearman and
Pearson matrices

Here is all the information about this package:

Website: https://github.com/pandas-profiling/pandas-profiling

Version at the time of print: 1.4.1

Suggested install command: pip install pandas-profiling

Scikit-learn

Started as part of SciKits (SciPy Toolkits), Scikit-learn is the core of data science operations

in Python. It offers all that you may need in terms of data preprocessing, supervised and

unsupervised learning, model selection, validation, and error metrics. Expect us to talk at

length about this package throughout this book. Scikit-learn started in 2007 as a Google

Summer of Code project by David Cournapeau. Since 2013, it has been taken over by the

researchers at INRIA (Institut national de recherche en informatique et en automatique,
that is, the French Institute for Research in Computer Science and Automation):

Website: http://scikit-learn.org/stable

Version at the time of print: 0.19.1

Suggested install command: pip install scikit-learn

Note that the imported module is named sklearn.

Jupyter

A scientific approach requires the fast experimentation of different hypotheses in a

reproducible fashion. Initially named IPython and limited to working only with the Python

language, Jupyter was created by Fernando Perez in order to address the need for an

interactive Python command shell (which is based on shell, web browser, and the

application interface), with graphical integration, customizable commands, rich history (in

the JSON format), and computational parallelism for an enhanced performance. Jupyter is

our favored choice throughout this book; it is used to clearly and effectively illustrate

operations with scripts and data, and the consequent results:

Website: http://jupyter.org/

Version at the time of print: 4.4.0 (ipykernel = 4.8.2)

Suggested install command: pip install jupyter

JupyterLab

JupyterLab is the next user interface for the Jupyter project, and it is currently in beta. It
is an environment devised for interactive and reproducible computing that offers all the
usual tools (notebook, terminal, text editor, file browser, rich outputs, and so on) arranged
in a more flexible and powerful user interface. JupyterLab will eventually replace the classic
Jupyter Notebook after JupyterLab reaches Version 1.0. Therefore, we introduce
this package now in order to make you aware of it and of its functionalities:

Website: https://github.com/jupyterlab/jupyterlab

Version at the time of print: 0.32.0

Suggested install command: pip install jupyterlab

Matplotlib

Originally developed by John Hunter, matplotlib is a library that contains all the building

blocks that are required to create quality plots from arrays and to visualize them

interactively.

You can find all the MATLAB-like plotting frameworks inside the PyLab module:

Website: http://matplotlib.org/

Version at the time of print: 2.2.2

Suggested install command: pip install matplotlib

You can simply import what you need for your visualization purposes with the following

command:

import matplotlib.pyplot as plt

Seaborn

Working out beautiful graphics using matplotlib can be really time-consuming. For this
reason, Michael Waskom (http://www.cns.nyu.edu/~mwaskom/) developed Seaborn, a
high-level visualization package based on matplotlib and integrated with pandas data
structures (such as Series and DataFrames), capable of producing informative and beautiful
statistical visualizations:

Website: http://seaborn.pydata.org/

Version at the time of print: 0.9.0

Suggested install command: pip install seaborn

You can simply import what you need for your visualization purposes with the following

command:

import seaborn as sns

Statsmodels

Previously a part of SciKits, statsmodels was conceived as a complement to SciPy's

statistical functions. It features generalized linear models, discrete choice models, time

series analysis, and a series of descriptive statistics, as well as parametric and non-

parametric tests:

Website: http://statsmodels.sourceforge.net/

Version at the time of print: 0.9.0

Suggested install command: pip install statsmodels


Beautiful Soup

Beautiful Soup, a creation of Leonard Richardson, is a great tool to scrape data from

HTML and XML files that are retrieved from the internet. It works incredibly well, even in

the case of tag soups (hence the name), which are collections of malformed, contradictory,

and incorrect tags. After choosing your parser (the HTML parser included in Python's

standard library works fine), thanks to Beautiful Soup, you can navigate through the objects

in the page and extract text, tables, and any other information that you may find useful:

Website: http://www.crummy.com/software/BeautifulSoup

Version at the time of print: 4.6.0

Suggested install command: pip install beautifulsoup4

Note that the imported module is named bs4.
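As a minimal sketch of the navigation described above, assuming that beautifulsoup4 is installed, the following parses a small HTML string (made up for this illustration) with the parser from Python's standard library and extracts a heading and the cells of a table:

```python
from bs4 import BeautifulSoup

# A tiny page invented for this example
html = """
<html><body>
<h1>Prices</h1>
<table>
  <tr><td>apples</td><td>1.20</td></tr>
  <tr><td>pears</td><td>0.95</td></tr>
</table>
</body></html>
"""

# "html.parser" selects the parser shipped with Python's standard library
soup = BeautifulSoup(html, "html.parser")

title = soup.h1.get_text(strip=True)
rows = [
    [cell.get_text(strip=True) for cell in row.find_all("td")]
    for row in soup.find_all("tr")
]

print(title)
print(rows)
```

On real pages retrieved from the internet, the markup is rarely this tidy, so the extracted structure should always be checked before relying on it.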

NetworkX

Developed by the Los Alamos National Laboratory, NetworkX is a package specialized in

the creation, manipulation, analysis, and graphical representation of real-life network data

(it can easily operate with graphs made up of a million nodes and edges). Besides

specialized data structures for graphs and fine visualization methods (2D and 3D), it

provides the user with many standard graph measures and algorithms, such as the shortest

path, centrality, components, communities, clustering, and PageRank. We will mainly use

this package in Chapter 6, Social Network Analysis:

Website: http://networkx.github.io/

Version at the time of print: 2.1

Suggested install command: pip install networkx

Conventionally, NetworkX is imported as nx:

import networkx as nx
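To make the graph measures mentioned above a little more concrete, here is a minimal sketch on a toy graph. It assumes that NetworkX is installed; the node names and edges are invented for illustration:

```python
import networkx as nx

# A toy undirected graph; the node names are invented for illustration
G = nx.Graph()
G.add_edges_from([
    ("Ann", "Bob"),
    ("Bob", "Cid"),
    ("Cid", "Dee"),
    ("Eve", "Fay"),  # a separate component
])

# Shortest path between two nodes of the same component
path = nx.shortest_path(G, source="Ann", target="Dee")

# Degree centrality: fraction of the other nodes each node is connected to
centrality = nx.degree_centrality(G)

# Connected components, each returned as a set of nodes
components = list(nx.connected_components(G))

print(path)
print(centrality["Bob"])
print(len(components))
```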


NLTK

The Natural Language Toolkit (NLTK) provides access to corpora and lexical resources,

and to a complete suite of functions for Natural Language Processing (NLP), ranging from

tokenizers to part-of-speech taggers and from tree models to named-entity recognition.

Initially, Steven Bird and Edward Loper created the package as an NLP teaching

infrastructure for their course at the University of Pennsylvania. Now it is a fantastic tool

that you can use to prototype and build NLP systems:

Website: http://www.nltk.org/

Version at the time of print: 3.3

Suggested install command: pip install nltk

Gensim

Gensim, programmed by Radim Řehůřek, is an open source package that is suitable for the

analysis of large textual collections with the help of parallel distributable online algorithms.

Among advanced functionalities, it implements Latent Semantic Analysis (LSA), topic

modeling by Latent Dirichlet Allocation (LDA), and Google's word2vec, a powerful

algorithm that transforms text into vector features that can be used in supervised and

unsupervised machine learning:

Website: http://radimrehurek.com/gensim/

Version at the time of print: 3.4.0

Suggested install command: pip install gensim

PyPy

PyPy is not a package; it is an alternative implementation of Python 3.5.3 that supports

most of the commonly used Python standard packages (unfortunately, NumPy is currently

not fully supported). As an advantage, it offers enhanced speed and memory handling.

Thus, it is very useful for heavy-duty operations on large chunks of data, and it should be

part of your big data handling strategies:

Website: http://pypy.org/

Version at time of print: 6.0

Download page: http://pypy.org/download.html
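Since PyPy is an interpreter rather than a package, a script may need to know which implementation it is running on. The following is a small sketch that uses only the standard library; the helper name describe_interpreter is our own:

```python
import platform
import sys


def describe_interpreter():
    """Return (implementation name, language version) of the running interpreter."""
    implementation = platform.python_implementation()  # e.g. 'CPython' or 'PyPy'
    language_version = "{}.{}.{}".format(*sys.version_info[:3])
    return implementation, language_version


implementation, language_version = describe_interpreter()
print("Running on {}, Python language version {}".format(
    implementation, language_version))

if implementation == "PyPy":
    # sys.pypy_version_info is only defined when running under PyPy
    release = ".".join(str(part) for part in sys.pypy_version_info[:3])
    print("PyPy release:", release)
```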


XGBoost

XGBoost is a scalable, portable, and distributed gradient boosting library (a tree ensemble machine learning algorithm). Initially created by Tianqi Chen from the University of Washington, it has been enriched by a Python wrapper by Bing Xu and an R interface by Tong He (you can read the story behind XGBoost directly from its principal creator at http://homes.cs.washington.edu/~tqchen/2016/03/10/story-and-lessons-behind-the-evolution-of-xgboost.html). XGBoost is available for Python, R, Java, Scala, Julia, and C++, and it can work both on a single machine (leveraging multithreading) and in Hadoop and Spark clusters:

Website: https://xgboost.readthedocs.io/en/latest/

Version at the time of print: 0.80

Download page: https://github.com/dmlc/xgboost

Detailed instructions for installing XGBoost on your system can be found

at https://github.com/dmlc/xgboost/blob/master/doc/build.md.

Installing XGBoost on Linux and macOS is quite straightforward. It used to be a little trickier for Windows users, but the recent release of a pre-built binary wheel for Python has made the procedure a piece of cake for everyone. You simply have to type this in your shell:

$> pip install xgboost

If you want to install XGBoost from scratch because you need the most recent bug fixes or

GPU support, you need to first build the shared library from C++ (libxgboost.so for

Linux/macOS and xgboost.dll for Windows) and then install the Python package. On a

Linux/macOS system, you just have to build the library with the make command, but on

Windows, things are a little trickier.

Generally, refer to https://xgboost.readthedocs.io/en/latest/build.html#, which

provides the most recent instructions for building from scratch. For a quick reference, here,

we are going to provide specific installation steps to get XGBoost working on Windows:

1. First, download and install Git for Windows (https://git-for-windows.github.io/).

2. Then, you need a MinGW compiler present on your system. You can download it from http://www.mingw.org/ or http://tdm-gcc.tdragon.net/, according to the characteristics of your system.

3. From the command line, execute the following:

$> git clone --recursive https://github.com/dmlc/xgboost

$> cd xgboost

$> git submodule init

$> git submodule update

4. Then, still from the command line, copy the configuration for 64-bit systems to be the default one:

$> copy make\mingw64.mk config.mk

5. Alternatively, you can just copy the plain 32-bit version:

$> copy make\mingw.mk config.mk

6. After copying the configuration file, you can run the compiler, setting it to use four threads in order to speed up the compiling procedure:

$> mingw32-make -j4

7. In MinGW, the make command comes with the name mingw32-make. If you are using a different compiler, the previous command may not work. If so, you can simply try this:

$> make -j4

8. Finally, if the compiler completes its work without errors, you can install the package in your Python by using the following:

$> cd python-package

$> python setup.py install
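The two copy commands above are alternatives, and the right one depends on whether you are building for a 64-bit or a 32-bit system. As a rough sketch, one way to check from Python is to look at the pointer size of the running interpreter, since a compiled library has to match the interpreter that loads it. The helper mingw_config_for is hypothetical and only maps the result to the two file names mentioned above:

```python
import struct


def interpreter_bits():
    """Return the pointer size of the running interpreter in bits."""
    return struct.calcsize("P") * 8


def mingw_config_for(bits):
    """Map a bitness to the configuration file used in the copy commands above."""
    if bits == 64:
        return r"make\mingw64.mk"
    if bits == 32:
        return r"make\mingw.mk"
    raise ValueError("unsupported bitness: {}".format(bits))


bits = interpreter_bits()
print("Interpreter is {}-bit; copy {} to config.mk".format(
    bits, mingw_config_for(bits)))
```

Note that this reports the bitness of the Python interpreter, which may differ from that of the operating system (for example, a 32-bit Python on 64-bit Windows).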

After following all the preceding instructions, if you try to import XGBoost in Python and it fails to load with an error, it may well be that Python cannot find MinGW's g++ runtime libraries. You just need to find the location of MinGW's binaries on your computer (in our case, it was C:\mingw-w64\mingw64\bin; replace it with your own path in the following code) and place the following code snippet before importing XGBoost:

import os

# Raw string: in a regular string, '\b' would be read as a backspace character
mingw_path = r'C:\mingw-w64\mingw64\bin'

os.environ['PATH'] = mingw_path + ';' + os.environ['PATH']

import xgboost as xgb
