Department of Defense

Systems Engineering Plan (SEP)

Outline

Version 4.1

May 2023

Office of the Under Secretary of Defense for Research and Engineering

Washington, D.C.

Distribution Statement A. Approved for public release. Distribution is unlimited.

Department of Defense Systems Engineering Plan (SEP) Outline, Version 4.1

Please direct questions or comments to the Office of Primary Responsibility:

Office of the Under Secretary of Defense for Research and Engineering

3030 Defense Pentagon

Washington, DC 20301

https://www.cto.mil


DOPSR Case # 23-S-1904

Approved by

Thomas W. Simms

Acting Principal Deputy Director for Systems Engineering and Architecture

Office of the Under Secretary of Defense for Research and Engineering

Department of Defense Systems Engineering Plan (SEP) Outline Change Record

Date: May 2023

Change: Added section 3.2.7 on corrosion prevention and control.

Rationale: Requested by Deputy’s Management Action Group (DMAG) and required by DoD Instruction 5000.88, Engineering of Defense Systems.


Preface

The Department of Defense (DoD) Office of the Under Secretary of Defense for Research and

Engineering (OUSD(R&E)) prepared this Systems Engineering Plan (SEP) Outline for DoD

acquisition programs to use in preparing their SEPs. In accordance with DoD Instruction (DoDI)

5000.88, Engineering of Defense Systems, the SEPs for Major Defense Acquisition Programs,

Acquisition Category (ACAT) II, and ACAT III programs will contain the content described in this

outline unless the SEP approval authority waives the requirement.

Although the outline indicates required SEP content, the format is not prescribed. The

Component may use this document as a template or establish a SEP template that includes the

required content.

Passages labeled Expectation: indicate activities or content that the SEP approval authority will

evaluate as part of their review in approving or not approving the SEP. For example,

Expectation: At the start of the program, PMs will prepare a SEP to manage systems

engineering activities in accordance with DoDI 5000.88, Engineering of Defense

Systems.

Expectation passages stating the program “will . . . ” indicate the expectation is grounded in

and required by policy. Expectations stating the program “should . . . ” indicate OUSD(R&E)

highly recommends the item as a best practice even if it is not explicitly required by DoD policy.

CLASSIFICATION


[Program Name]

SYSTEMS ENGINEERING PLAN (SEP)

[DATE]

Publishing Organization

Distribution Statements as Needed


Office of Primary Responsibility (OPR):

Name

Address

Email


Contents

1 Introduction ............................................................................................................................. 8

2 Program Technical Definition .................................................................................................. 9

2.1 Requirements Development .............................................................................................. 9

2.2 Architectures and Interface Control ................................................................................. 11

2.3 Specialty Engineering ..................................................................................................... 12

2.4 Modeling Strategy ........................................................................................................... 13

2.5 Design Considerations .................................................................................................... 13

2.6 Technical Certifications ................................................................................................... 17

3 Program Technical Management .......................................................................................... 18

3.1 Technical Planning .......................................................................................................... 18

Technical Schedule ................................................................................................ 18

3.1.1.1 Schedule Management ...................................................................................... 19

3.1.1.2 Family of Systems/System of Systems Management ........................................ 20

Maturity Assessment Planning ............................................................................... 22

Technical Structure and Organization .................................................................... 22

3.1.3.1 Work Breakdown Structure ................................................................................ 22

3.1.3.2 Government Program Office Organization ........................................................ 22

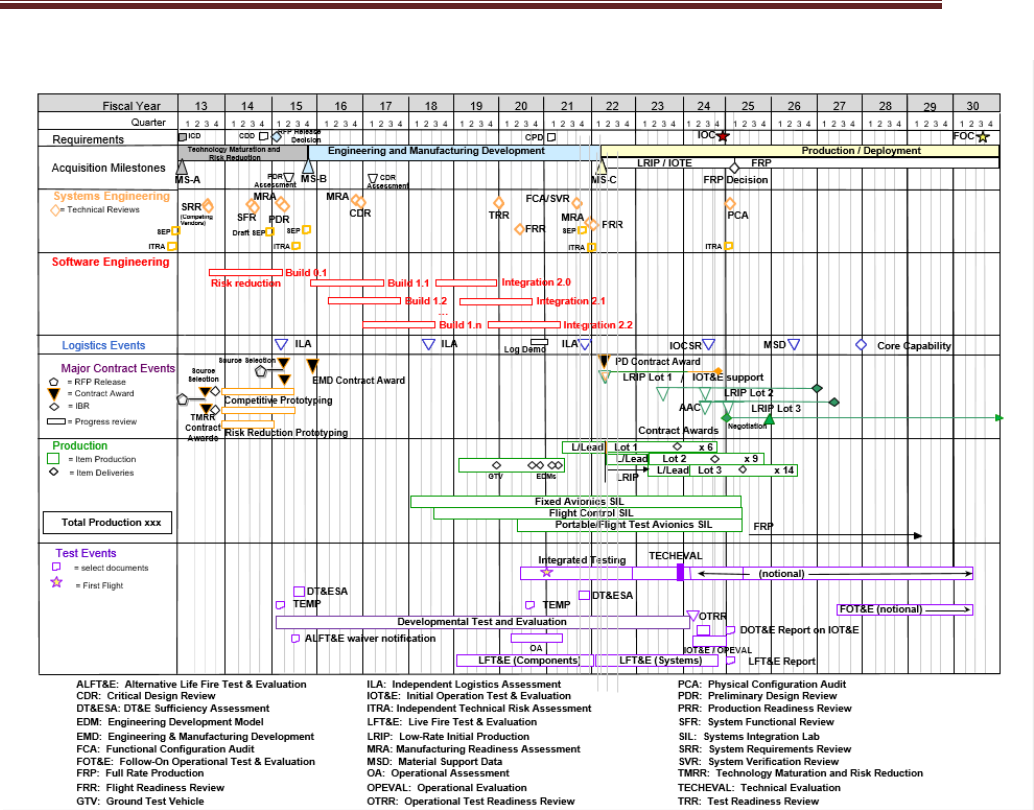

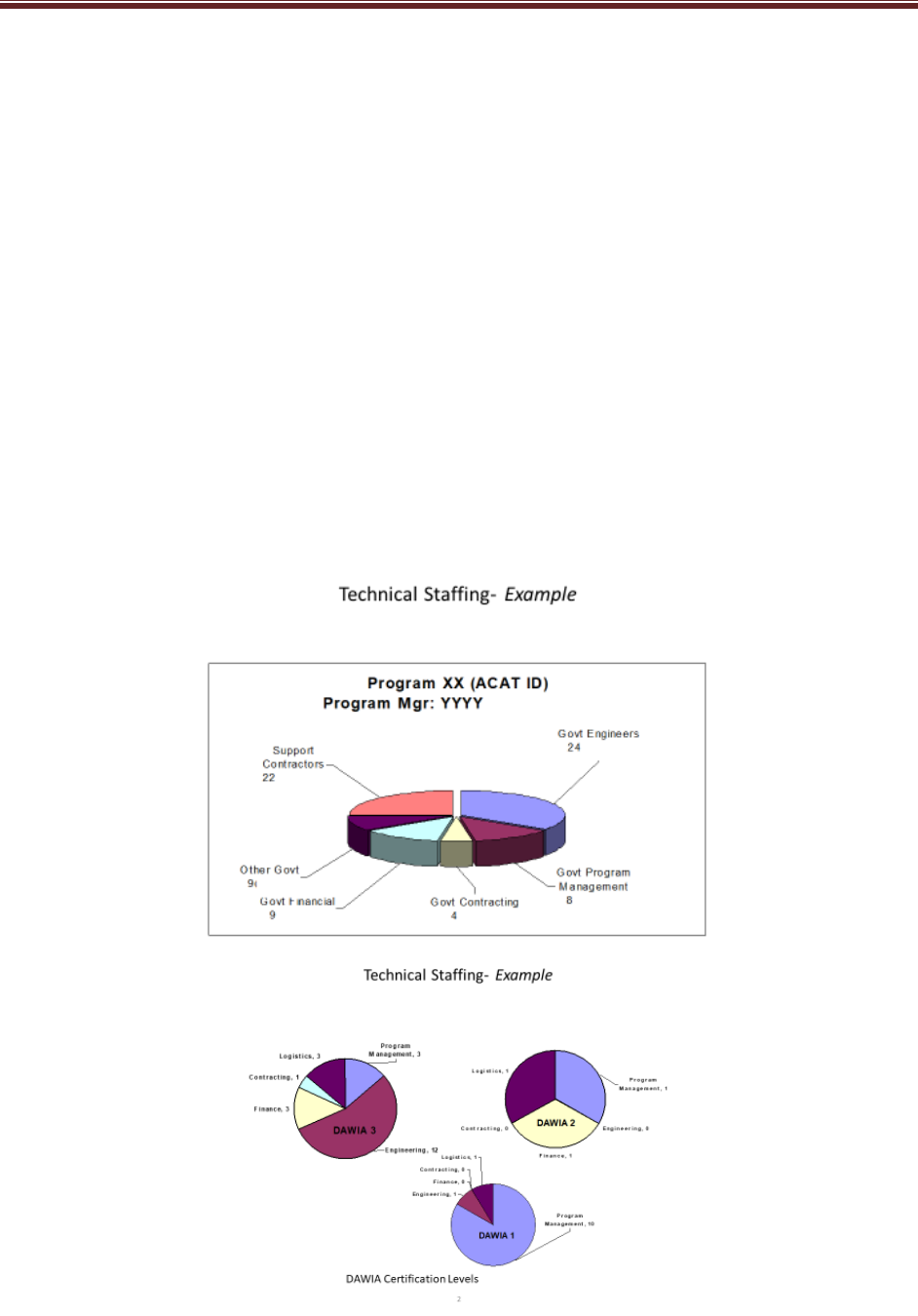

3.1.3.3 Program Office Technical Staffing Levels .......................................................... 23

3.1.3.4 Engineering Team Organization and Staffing .................................................... 26

3.2 Technical Tracking .......................................................................................................... 31

Technical Risk, Issue, and Opportunity Management ............................................ 31

Technical Performance Measures .......................................................................... 34

Reliability and Maintainability Engineering ............................................................. 39

3.2.3.1 Reliability and Maintainability Requirements and Engineering Activities ........... 39

3.2.3.2 Reliability Growth Planning ................................................................................ 40

Manufacturing and Quality Engineering ................................................................. 41

3.2.4.1 Manufacturing and Quality Requirements and Engineering Activities ............... 41

3.2.4.2 Manufacturing Maturity ...................................................................................... 42

Human Systems Integration ................................................................................... 42

System Safety ........................................................................................................ 43

Corrosion Prevention and Control .......................................................................... 45

Software Engineering ............................................................................................. 45

3.2.8.1 Software Engineering Overview ........................................................................ 45

CLASSIFICATION

Contents

4

3.2.8.2 Software Planning Phase .................................................................................. 46

3.2.8.3 Software Execution Phase ................................................................................. 47

3.2.8.4 Software Obsolescence ..................................................................................... 48

Technology Insertion and Refresh ......................................................................... 48

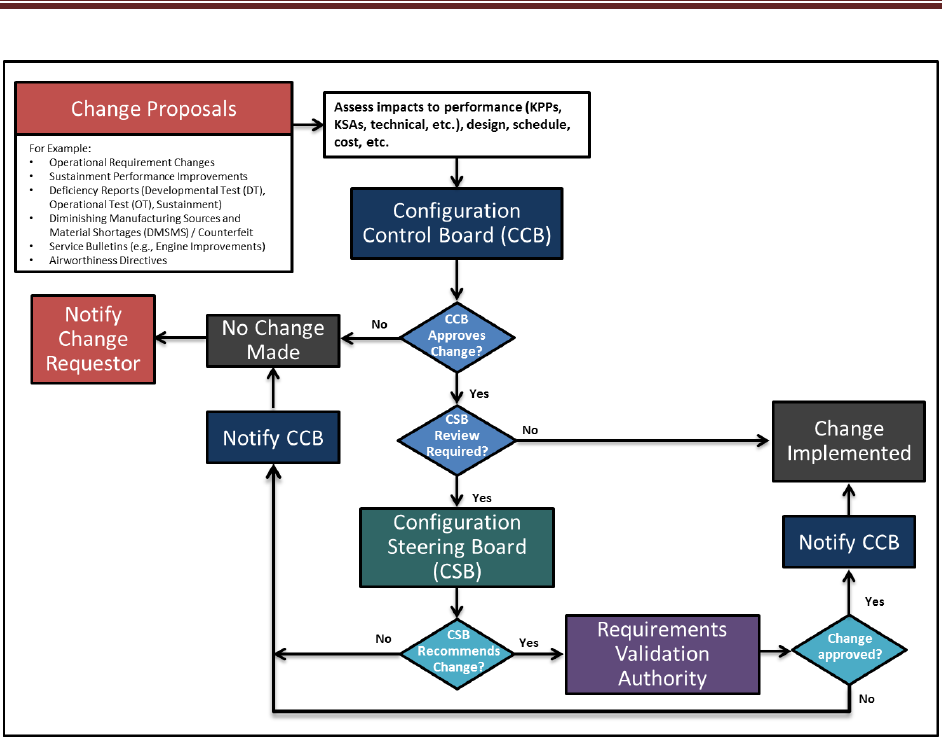

Configuration and Change Management ............................................................... 49

Technical Data Management ................................................................................. 51

System Security Engineering ................................................................................. 51

Technical Reviews, Audits and Activities ............................................................... 52

Appendix A – Acronyms .............................................................................................................. 54

Appendix B – Item Unique Identification Implementation Plan ................................................... 54

Appendix C – Agile and Development Security and Operations Software Development Metrics

54

Appendix D – Concept of Operations Description ...................................................................... 55

Appendix E – Digital Engineering Implementation Plan .............................................................. 56

References .................................................................................................................................. 58

Tables

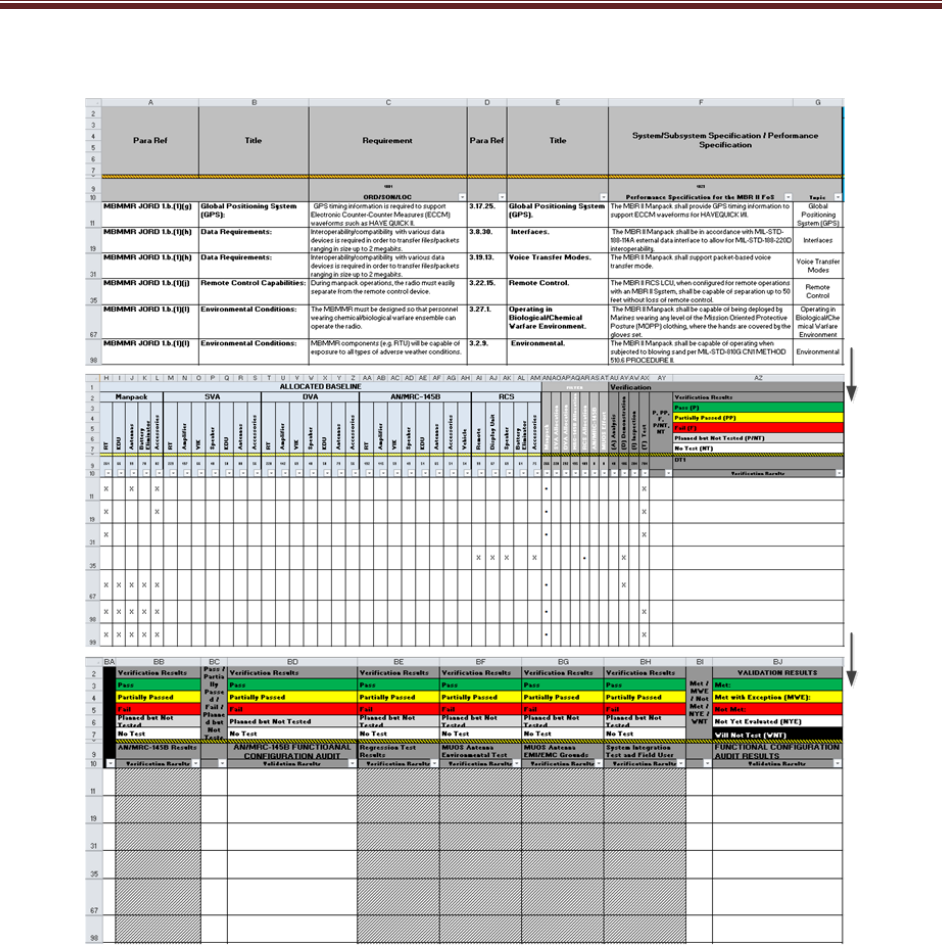

Table 2.1-1 Requirements Traceability Matrix (mandatory) (sample) ......................................... 11

Table 2.5-1 Design Considerations (mandatory) (sample) ......................................................... 14

Table 2.6-1 Certification Requirements (mandatory) (sample) ................................................... 17

Table 3.1-1 Integrated Product Team Details (mandatory unless charters are submitted)

(sample) ................................................................................................................................ 28

Table 3.2-1 Technical Performance Measures (mandatory) (sample) ........................................ 36

Figures

Figure 2.1-1 Specification Tree Illustrating Requirements Decomposition and Technical

Baselines............................................................................................................................... 10

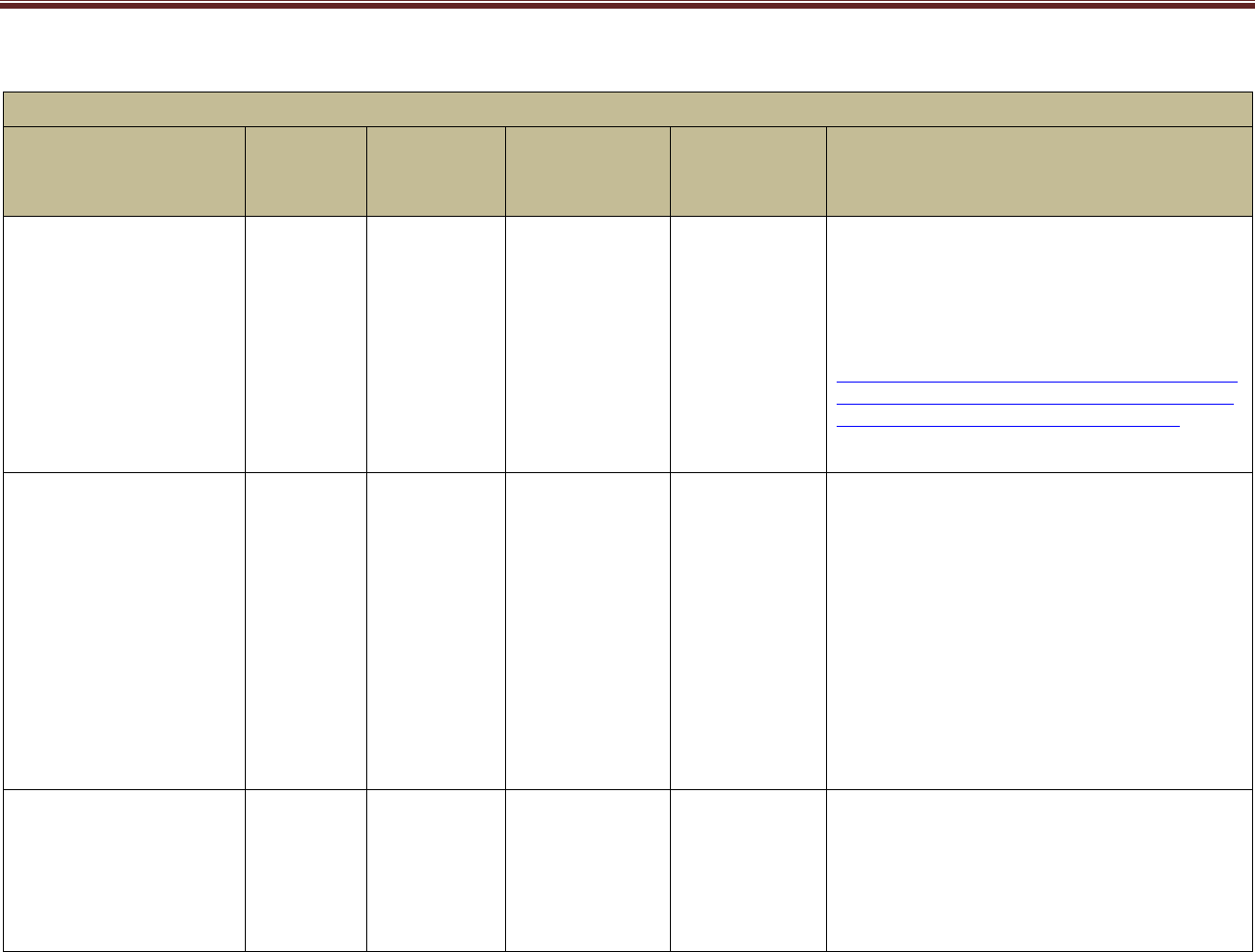

Figure 3.1-1 System Technical Schedule as of [Date] (mandatory) (sample) ............................ 19

Figure 3.1-2 System-of-Systems Schedule as of [Date] (mandatory) (sample) .......................... 21

Figure 3.1-3 Program Office Organization as of [Date] (mandatory) (sample) ........................... 23

Figure 3.1-4 Program Technical Staffing (mandatory) (sample) ................................................. 25

Figure 3.1-5 SEPM Budget (mandatory) (sample) ...................................................................... 26

Figure 3.1-6 IPT/WG Hierarchy (mandatory) (sample) ............................................................... 27

Figure 3.2-1 Risk Reporting Matrix as of [Date] (mandatory) (sample) ...................................... 32

Figure 3.2-2 Risk Burn-Down Plan as of [Date] (mandatory for high risks; others optional)

(sample) ................................................................................................................................ 33

Figure 3.2-3 Technical Performance Measure or Metric Graph (recommended) (sample) ........ 38

Figure 3.2-4 TPM Contingency Definitions ................................................................................. 39

Note: Additional tables and figures may be included at the Component or Program Manager’s

discretion.


[MANDATORY APPROVAL PAGE CONTENT]

PROGRAM NAME – ACAT LEVEL___

[ACQUISITION PATHWAY]

SYSTEMS ENGINEERING PLAN

VERSION ___

SUPPORTING ______DECISION

AND

SUPPORTING TRANSITION INTO______PHASE

[DATE]

*************************************************************************************

SEP APPROVAL AUTHORITY APPROVAL

______________________________________________

Approval Authority Name

Approval Authority Signature Block

__________________________

Date


SUBMITTED BY

__________________________

Name

Program Lead Systems Engineer

__________

Date

__________________________

Name

Program Manager

_________

Date

CONCURRENCE

__________________________

Name

Lead/Chief Systems Engineer

(System Center or Command)

__________

Date

__________________________

Name

Program Executive Officer or

Equivalent

_________

Date

COMPONENT APPROVAL

__________________________

Name

Title, Office

Component SEP Approval

Authority

__________

Date


Expectation: The following expectations apply to the Systems Engineering Plan (SEP) as a

whole:

• The Lead Systems Engineer/Chief Engineer (LSE/CE), under the direction of the Program

Manager (PM), will prepare a SEP to manage the systems engineering (SE) activities

starting at Milestone A (Department of Defense Instruction (DoDI) 5000.88, Engineering of

Defense Systems). The SEP should be a “living,” “go-to” technical planning document and

should serve as the blueprint for the conduct, management, and control of the technical

aspects of the government’s program from concept to disposal.

• The SEP is a planning and management tool, specific to the program and tailored to meet program needs. Although the SEP Outline mainly uses terminology from the Major Capability Acquisition pathway (DoDI 5000.85, Major Capability Acquisition) of the Adaptive Acquisition Framework (DoDI 5000.02, Operation of the Adaptive Acquisition Framework), the principles and practices described herein should be applied, as appropriate, to all DoD programs.

• The SEP defines the methods for implementing all system requirements having technical

content, technical staffing, and technical management.

• The SEP will include the engineering management approach, including technical baseline management; requirements traceability; linkage to the system architecture; configuration management (CM); risk, issue, and opportunity management; and technical trades and evaluation criteria (DoDI 5000.88, Para 3.4.a.(3).(b, d and l)).

• The SEP should include a digital ecosystem implementation plan that addresses the DoD Digital Engineering Strategy goals and defines six key digital engineering ecosystem attributes: infrastructure, environment, data, security, collaboration, and innovation. Applied elements of these attributes (e.g., requirements, models, digital artifacts, network hardware/software tools, data accessibility, and compatibility) will be evident in the planning of the digital ecosystem implementation that results in the authoritative source of truth (ASoT) for the program (DoDI 5000.88, Para 3.4.a.(3).(m)).

• The SEP will describe a data management approach consistent with the DoD Data Strategy. The approach should support maximizing the technical coherency of data as it is shared across engineering disciplines (DoDI 5000.88, Para 3.4.a.(3).(s)). At a minimum, the data management approach should also describe:

o The government’s ownership in, or intellectual property (IP) license rights it has acquired to, data it created or a contractor delivered to it, respectively;

o Digital artifact generation for reporting and distribution purposes;

o Expected data and method of delivery to the government from all models, simulations, designs, reviews, audits, analyses, and formal contract deliverables, and the expected level of data rights (DoDI 5000.88, Para 3.4.a.(3).(j)); and

o Sufficient data to support system testing and assessment of the system.

• Upon approval by the Milestone Decision Authority (MDA), the SEP provides authority and

empowers the LSE/CE to execute the program’s technical plan.

• The SEP should be updated following a technical review, before milestones or the

Development Request for Proposal (RFP) Release Decision Point, or as a result of SE

planning changes.


• The SEP should be updated after contract award to reflect (1) the winning contractor(s)’ technical approach as documented in the Systems Engineering Management Plan (SEMP) and (2) details not available before contract award. This post-award update should be completed within 120 days of contract award or no later than 30 days before the next technical review. The program should define and justify this update as either a minor or a major update, since that designation influences the associated staffing and approval risk.

1 Introduction

The introduction should:

• Summarize the program (ensure the description aligns with the program Acquisition

Strategy (AS)).

• Describe how the Program Management Office (PMO) has tailored the SEP to execute

the AS.

• Describe the program’s plan to align the Prime Contractor’s SEMP with the PMO SEP.

• Summarize how and when the SEP is updated and the criteria for doing so.

• Identify the phase of the program, its entry and exit criteria, and approval and updating

authority(ies).


2 Program Technical Definition

2.1 Requirements Development

Describe how technical requirements are defined, derived, and refined from the Joint

Capabilities Integration and Development System (JCIDS) or other applicable capability

requirements documents down to configuration item (CI) build-to specifications and verification

plans. (See SE Guidebook (2022), Requirements Analysis Process, for additional guidance).

Expectation: Program should maximize traceability and the use of models as an integral part of

the mission, concept, and technical baseline to trace measures of effectiveness, measures of

performance, and all requirements throughout the life cycle from JCIDS (or equivalent

requirements authoritative source(s)) into a verification matrix, equivalent artifact, or tool that

provides contiguous requirements traceability digitally. A decomposition/specification tree

provides a summary of the requirements traceability and technical baselines. The requirements

trace should not contain any orphan requirements. The requirements trace should also flag the requirements that the JCIDS documents identify as expected to change over the life of the program due to evolution of the threat or technology, so that they can be considered in the modular open systems approach (MOSA). Figure 2.1-1 shows a sample Requirements Decomposition/Specification Tree/Baseline (DoDI 5000.88, Para 3.4.a.(3).(l)).

Expectation: Program requirements documents for all acquisition programs with digital

components and interoperability requirements will have program protection, cybersecurity, cyber

survivability, and operational resilience requirements defined in the requirements source (see

DoDI 5000.82, Acquisition of Information Technology (IT)). Cybersecurity requirements are usually related to the Risk Management Framework (DoDI 8510.01, Risk Management Framework for DoD Information Technology) and federal laws. Cyber survivability requirements are specified using the Joint Staff Cyber Survivability Endorsement Implementation Guide and are threshold requirements in addition to the System Survivability (SS) Key Performance Parameter (KPP), even if the program does not have an SS KPP. Operational resilience is a specified requirement in DoDI 8500.01, Cybersecurity. Implied and derived cyber requirements (security, survivability, resilience) should be considered if the requirements source lacks these cyber requirements, as all digital acquisitions are susceptible to some cyber threats. Cyber requirements should be traced through decomposition in the traceability tools and models, as with all other requirements.

Expectation: System safety engineering principles and analyses are part of all requirements

development. Brief justification should be provided if system safety engineering principles and

analyses are not part of a requirement.


Source: Name Year if applicable. CLASSIFICATION

Figure 2.1-1 Specification Tree Illustrating Requirements Decomposition and Technical Baselines (mandatory) (sample)

Expectation: Program should trace all requirements from the highest level (JCIDS or equivalent

requirements sources) to the lowest level (e.g., component specification or user story). This

traceability should be captured and maintained in digital requirements management tools or

within model(s). The system Requirements Traceability Matrix (RTM) should be a model output

that can be embedded in or attached to the SEP, or the SEP should contain a tool reference

location. This matrix will grow as the system matures. The matrix should include the

verification method for each of the identified requirements and an indication whether each

requirement is expected to change over the life of the program. Table 2.1-1 shows a sample

RTM. If applicable, provide a link to a location where the current RTM is maintained that will

meet the expectation for requirements traceability.
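The RTM checks described above (no orphan requirements, a verification method for every requirement, and a flag for requirements expected to change) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a prescribed RTM format: the record fields (`req_id`, `parent_id`, `is_source`, `verification_method`, `expected_to_change`) and the `audit_rtm` function are hypothetical, and "orphan" is assumed here to mean a derived requirement with no parent in the matrix. A program would normally run equivalent queries inside its requirements management tool or model.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


# Hypothetical layout for one RTM row. Field names and verification method
# values are illustrative assumptions, not prescribed by the SEP Outline.
@dataclass
class Requirement:
    req_id: str
    parent_id: Optional[str]            # None only for authoritative-source requirements
    is_source: bool                     # True if taken directly from JCIDS or equivalent
    verification_method: Optional[str]  # e.g., "Test", "Analysis", "Inspection", "Demonstration"
    expected_to_change: bool = False    # flagged in capability documents as likely to evolve


def audit_rtm(reqs: List[Requirement]) -> Dict[str, List[str]]:
    """Return requirement IDs that fail each traceability check."""
    ids = {r.req_id for r in reqs}
    # Assumed orphan definition: a derived requirement with no parent,
    # or whose parent does not appear in the matrix.
    orphans = [
        r.req_id for r in reqs
        if not r.is_source and (r.parent_id is None or r.parent_id not in ids)
    ]
    # Every requirement should carry a verification method.
    unverified = [r.req_id for r in reqs if not r.verification_method]
    # Requirements expected to change are candidates for MOSA consideration.
    mosa_candidates = [r.req_id for r in reqs if r.expected_to_change]
    return {"orphans": orphans, "unverified": unverified, "mosa_candidates": mosa_candidates}


if __name__ == "__main__":
    rtm = [
        Requirement("KPP-1", None, True, "Test", expected_to_change=True),
        Requirement("SYS-10", "KPP-1", False, "Analysis"),
        Requirement("CI-100", "SYS-10", False, None),          # no verification method
        Requirement("CI-200", "SYS-99", False, "Inspection"),  # parent not in matrix
    ]
    print(audit_rtm(rtm))
```

Run against the sample rows, the audit reports CI-200 as an orphan (its parent SYS-99 is not in the matrix), CI-100 as lacking a verification method, and KPP-1 as a MOSA candidate.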

Expectation: Program cyber requirements trace should also flow to the lowest level (e.g.,

component specification for passive sensing or user story for software automated resilience

approaches). Use early and repeated or updated Mission-Based Cyber Risk Assessments

(MBCRAs) supported by cyber test representatives (contractor and government) to inform cyber

requirement flow down.


Table 2.1-1 Requirements Traceability Matrix (mandatory) (sample)

Source: Name Year if applicable. Classification: UNCLASSIFIED.

2.2 Architectures and Interface Control

Describe the architecture products, including the mandatory JCIDS architecture artifacts and

any additional architecture views or diagrams, the program will develop. Explain how those

mandatory architecture artifacts and additional architecture products are related to requirements

definition or how they support other SE activities. (See SE Guidebook (2022), Architecture

Design Process, for additional guidance).

The LSE should have all interfaces (including temporary interfaces related to mission

requirements) and dependencies clearly identified and accounted for in functional and physical

architectures (including but not limited to mechanical, electrical, thermal, data, control,

procedural, and other interactions). (See SE Guidebook (2022), Interface Management Process,

for additional guidance). Include as appropriate the following:

• List of the program’s planned suite of architecture products with status of each.


• Architecture diagrams and models (e.g., physical, functional, behavior model and software).

• All hardware-defined modular system interfaces that define shared boundaries between the

major system platform and major system components, modular systems, or both, residing

within that platform; or between those major system components, modular systems, or both,

and between major system platforms (e.g., Interface Control Documents (ICDs), Interface

Requirements Specification (IRS), Interface Design Description (IDD), and functional

descriptions of software-defined interfaces conveying semantic meaning of interface

elements (e.g., the function of a given interface field)).

• All software-defined modular system interfaces that define interface syntax and properties

specifically governing how values are validly passed and received between major

subsystems and components in machine-readable format and a machine-readable definition

of the relationship among the delivered interface and existing common standards or

interface repositories (e.g., Application Program Interfaces (APIs), Dynamic Link Libraries (DLLs)).

• The contractor’s Software Architecture Description.

• List of major external system (outside the authority/control of the program) interfaces (attach

or embed separate ICD).

• List of modular system interfaces with the interface requirement specifications necessary for

system operation, interface standards and standards profiles, and other documentation that

fully describe the physical and functional interfaces needed to ensure compatibility between

interfacing components, systems, and platforms.

• List and reference of all program Component-specific, joint, and coalition mission threads (JMT and CMT). (The Department of Defense Architecture Framework (DoDAF) CV-6 (Capability to Operational Activities Mapping) provides a list of JMTs.)

• Consistent with the program's acquisition strategy and Life Cycle Sustainment Plan, the

level(s) of indenture of the WBS (see section 3.1.3.1) and Software Architecture Description

(see section 3.2.8) for specific modular systems and major system components into which

functionality will be partitioned in discrete, cohesive, and self-contained units.
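To illustrate what a machine-readable definition of interface syntax and valid values might look like, the following Python sketch encodes one hypothetical message and checks a received message against it. The `NavStatus` message, its field names, units, and ranges, and the `FieldSpec` and `validate` names are illustrative assumptions only. In practice a program would capture such definitions in a standard interface definition language or schema referenced by its ICDs or IRS; this sketch does not represent any particular standard.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass(frozen=True)
class FieldSpec:
    """Syntax and valid-value properties for one interface field (hypothetical)."""
    name: str
    py_type: type
    units: str = ""
    min_value: Optional[float] = None
    max_value: Optional[float] = None


# Hypothetical "NavStatus" message between two subsystems; not from any real ICD.
NAV_STATUS = [
    FieldSpec("latitude_deg", float, "deg", -90.0, 90.0),
    FieldSpec("longitude_deg", float, "deg", -180.0, 180.0),
    FieldSpec("track_id", int),
]


def validate(message: Dict[str, Any], spec: List[FieldSpec]) -> List[str]:
    """Return a list of violations; an empty list means the message conforms."""
    errors: List[str] = []
    for f in spec:
        if f.name not in message:
            errors.append(f"missing field: {f.name}")
            continue
        value = message[f.name]
        if not isinstance(value, f.py_type):
            errors.append(f"{f.name}: expected {f.py_type.__name__}")
            continue
        # Range checks apply only when the spec defines bounds for the field.
        if f.min_value is not None and value < f.min_value:
            errors.append(f"{f.name}: below {f.min_value} {f.units}".strip())
        if f.max_value is not None and value > f.max_value:
            errors.append(f"{f.name}: above {f.max_value} {f.units}".strip())
    # Fields not defined by the interface are also reported.
    unknown = set(message) - {f.name for f in spec}
    errors.extend(f"unexpected field: {n}" for n in sorted(unknown))
    return errors


if __name__ == "__main__":
    print(validate({"latitude_deg": 95.0, "track_id": "7"}, NAV_STATUS))
```

Because the definition is data rather than prose, the same `NAV_STATUS` specification could in principle drive both producer and consumer checks, which is the property the bullets above ask the interface products to capture.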

Expectation: Architectures are generated to describe and understand the system and how the

subsystems join together, including internal and external interfaces (e.g., human-machine

interactions, role-based access), to form the system and also to inform interoperability and

cyber testing.

2.3 Specialty Engineering

Provide a summary of the program approach for the integration of Specialty Engineering (SpE)

disciplines (e.g., Reliability and Maintainability, Manufacturing and Quality, Human Systems

Integration (HSI), and System Safety) throughout systems engineering planning (e.g.,

requirements, schedule, staffing, Technical Performance Measures (TPMs), and technical

reviews and activities) (DoDI 5000.88, Para 3.4.a.(3).(t)). Summarize critical elements of the

SpE subsections of section 3.2, Technical Tracking. As part of the program’s digital engineering approach,

describe how models, simulations, the digital ecosystem, and digital artifacts will be used as

part of an integrated approach to supporting SpE activities and deliverables. (See

https://ac.cto.mil/specialty-engineering/).


2.4 Modeling Strategy

Define the modeling strategy to be used (model-supported, model-integrated, or model-centric).

Describe why the modeling strategy was chosen. Describe basic model components. The

modeling strategy may be included in the Digital Engineering Implementation Plan (See

Appendix E).

2.5 Design Considerations

As shown in Table 2.5-1, identify the design considerations that are critical to achieving the

program’s technical requirements. Ensure the design and architectural factors from DoDI

5000.88 are addressed. If additional documentation is required, those documents may need to

be embedded/attached in the SEP or located within the program’s digital ecosystem. (See SE

Guidebook (2022), Design Considerations, for a partial list of design considerations.) Not all are

equally relevant or critical to a given program, but all should be examined for relevance.


Table 2.5-1 Design Considerations (mandatory) (sample)

Mapping Key Design Considerations into Contracts

Columns: Name (Reference) | Cognizant PMO Org | Certification | Documentation (embedded or reference attached) | Contractual Requirements (CDRL #) | Describe how the program captures, integrates, and uses technology, models, simulations and data to support life cycle activities within a digital ecosystem

Chemical, Biological, Radiological, and Nuclear (CBRN) Survivability

Describe how the design incorporates the CBRN survivability requirements and how progress toward these requirements is tracked and documented over the life cycle. For additional information on CBRN Survivability, see https://www.dodtechipedia.mil/dodwiki/display/techipedia/Chemical%2C+Biological%2C+Radiological%2C+and+Nuclear+Survivability (Defense Technical Information Center (DTIC) account required).

Modular Open Systems Approach (MOSA)

List of applicable MOSA/Interface Standards and Reference Architectures

Describe how the program uses MOSA in the system design to enable affordable change, evolutionary acquisition, and interoperability. Describe how the system design considers the evolution of requirements identified in the capability documents. Describe how the architectural design accommodates the requirements. Provide rationale if MOSA is not feasible or cost-effective. List known key interfaces (with identification of spec), known/desired severable modules and modular system interfaces. Name MOSA-related controlling or guiding reference architectures and standards.

Digital Ecosystem

Describe how the program uses the digital ecosystem in the system’s design of life cycle activities to establish system performance validation capability through models, simulations, or digital twin instantiations. Describe how the digital ecosystem will be maintained through the sustainment phase of the system to facilitate enhancements, updates, and changes. Describe how the digital ecosystem or parts of it will be required to stay updated and maintained in order to support quick software updates and fast delivery to the field. Identify design considerations that (i) leverage the digital engineering implementation and digital representations of design products (e.g., digital threads, digital twin) and (ii) the program plans to use to support development activities, manufacturing activities, operations, and sustainment activities.

System Security Engineering

Program Protection Plan (PPP)

Describe how the design addresses protection, survivability, and resilience of DoD warfighting capability from foreign intelligence collection; from hardware (HW), software (SW), and firmware (FW) vulnerabilities, cyberspace attacks, cyber events, and supply chain exploitation; and from battlefield loss throughout the system life cycle, balancing security requirements, designs, testing, sustainment activities, and risk management in the respective trade spaces.

Diminishing Manufacturing Sources and Material Shortages (DMSMS)

DMSMS Management Contract Language

Describe how the design seeks to exhibit DMSMS resiliency by both minimizing the occurrence of obsolescence and enabling quicker, lower cost resolutions when obsolescence does occur. Describe how the design is adapted to meet any contract requirement so the product will have no DMSMS issues for a specified period of time. Describe how the part selection process avoids items with projected near-term obsolescence. Describe how the program is conducting monitoring and surveillance to identify issues as early as possible as well as the processes the program uses to mitigate those issues by changing the design before production.

Parts Management

Parts Management Contract language

Describe how the program implements contracts for standardization and parts management to reduce the costly proliferation of parts and equipment; enhance reliability, availability and maintainability; and mitigate counterfeit and DMSMS occurrences in support of life cycle management and sustainability through integrated program planning and systems engineering throughout the acquisition life cycle.

Intelligence

Life-Cycle Mission Data Plan (LMDP) (MS A, Dev RFP Rel, B, & C) (if program is Intelligence Mission Data (IMD) dependent)

Validated Online Lifecycle Threat (VOLT) Report

Summarize the plans to identify IMD requirements and need dates. Describe how the program plans to address the risk of unavailable IMD. Also, describe how the design will address current and future threat capabilities, specifically highlighting what will be done to manage risk to system performance in the event of a Critical Intelligence Parameter (CIP) breach.


Expectation: SEP demonstrates necessary design considerations as an integral part of the

design decision process, including trade study criteria.

2.6 Technical Certifications

Summarize in table format (Table 2.6-1) the system-level technical certifications to be obtained during the program’s life cycle. Review the following references and add or delete certifications in Table 2.6-1 as applicable to your program. (See AFPAM 63-128, Attachment 14, and AFI 63-101/20-101, para 5.1.5.)

Table 2.6-1 Certification Requirements (mandatory) (sample)

Certification | PMO Team/POC | Activities to Obtain Certification (1) | Certification Authority | Expected Certification Date
Airworthiness | Airframe Integrated Product Team (IPT) | | | ?Q FY?
Joint Interoperability Test Command (JITC) | Systems Engineering Integration and Test (SEIT) | Operational test demonstrates the system: • Is able to support military operations • Is able to be entered and managed on the network • Effectively exchanges information | JITC system interoperability test certification memorandum | ?Q FY?
Joint Weapons Safety Working Group | | Any weapon or laser systems used by two or more DoD components must be reviewed by the JWSWG | |
Weapon System Explosives Safety Review Board (WSESRB) | SEIT | | | ?Q FY?
Transportability | | | | ?Q FY?
Insensitive Munitions (IM) | Manufacturing IPT | Reference Document: Program Executive Office (PEO) IM Strategic Plan | | ?Q FY?
Etc. | | | | ?Q FY?

(1) Note: This entry should be specific, such as a specification compliance matrix; test, inspection, or analysis; or a combination. It can also reference a document such as the Test and Evaluation Master Plan (TEMP) for more information.

Expectation: Program should include the plans for required technical certification activities and

timing in the program Integrated Master Plan (IMP) and the Integrated Master Schedule (IMS).


3 Program Technical Management

3.1 Technical Planning

3.1.1 Technical Schedule

• List scheduling/planning assumptions and describe schedule risk assessment methodology and frequency (DoDI 5000.88, Para 3.4.a.(3).(e)).

• Describe how the IMP is maintained, where it is stored, and how to obtain access to it.

• Provide the current technical schedule derived from the IMP/IMS (Figure 3.1-1) for the program, including activities/tasks and event milestones such as:
o SE technical reviews and audits
o Program protection activities
o Technology on/off-ramps
o RFP release dates
o SW builds/releases
o Minimum Viable Product (MVP)/Minimum Viable Capability Release (MVCR)
o Hardware/Software (HW/SW) Integration phases
o Contract award (including bridge contracts)
o Testing events/phases
o System-level certifications
o Technology Readiness Assessments (TRAs)
o Manufacturing Readiness Assessments (MRAs)
o Logistics/sustainment events
o System Diminishing Manufacturing Sources and Material Shortages (DMSMS) health assessments
o Long-lead or advanced procurements
o Technology development efforts to include prototyping
o Production lot/phases
o Need dates for government-furnished equipment (GFE) deliveries
o HSI domain and management activities (e.g., HSI Plan, task analysis)
o Production Readiness Reviews (PRRs)
o Independent Technical Risk Assessments (ITRAs)
o Developmental Test and Evaluation Sufficiency Assessments
o Reliability growth testing
o Key modeling activities
o Model release dates


Source: Name Year [if applicable]. Classification: UNCLASSIFIED.

Figure 3.1-1 System Technical Schedule as of [Date] (mandatory) (sample)

Expectation: Program should properly phase activities and key events (competitive and risk

reduction prototyping, TRA, Preliminary Design Review (PDR), Critical Design Review (CDR),

etc.) to ensure a strong basis for financial commitments. Program schedules are event driven

and reflect adequate time for SE, integration, test, corrective actions, and contingencies. SEPs

for approval should include a current schedule, no more than 3 months old.
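The 3-month currency rule can be checked mechanically before a SEP is submitted for approval. The Python sketch below assumes that "no more than 3 months old" means the schedule's as-of date falls within three calendar months of the submission date; the helpers `add_months` and `schedule_is_current` are hypothetical names used only for illustration, not part of any DoD tool.

```python
import calendar
from datetime import date

MAX_AGE_MONTHS = 3  # "no more than 3 months old"; assumed here to mean calendar months


def add_months(d: date, months: int) -> date:
    """Shift a date by whole calendar months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def schedule_is_current(as_of: date, submitted: date, max_age_months: int = MAX_AGE_MONTHS) -> bool:
    """True if the schedule's as-of date is no more than max_age_months before submission."""
    if submitted < as_of:
        raise ValueError("submission date precedes the schedule as-of date")
    return submitted <= add_months(as_of, max_age_months)


print(schedule_is_current(date(2023, 2, 15), date(2023, 5, 15)))  # True: exactly 3 months old
print(schedule_is_current(date(2023, 2, 15), date(2023, 5, 16)))  # False: older than 3 months
```

The same check could be applied to the RIO management document, which is also expected to be no more than 3 months old.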

3.1.1.1 Schedule Management

• Provide a description of the program’s IMP and IMS process, to include definitions, updated

schedules, audits, baseline control, and the integration between program-level and

contractor detailed schedules (DoDI 5000.88, Para 3.4.a.(3).(f)).

• Provide the program-level IMP as an attachment to the SEP.

• Discuss the relationship of the program’s IMP to the contractor(s)’ IMS, how they are linked/interfaced, and what the primary data elements are.


• Identify who or what team (e.g., Integrated Product Team/Working Group (IPT/WG)) is

responsible for developing the IMP, when it is required, and whether it is a part of the

contract.

• Describe how identified technical risks are incorporated into and tracked in the program’s IMP, IMS, and digital ecosystem.

• If used, discuss how the program uses Earned Value Management (EVM) cost reporting to

track/monitor the status of IMS execution and performance to plan.

• If EVM is not used, state how often the IMS is tracked, and discuss how it is tracked according to contract requirements and how performance is tracked to budget.

• Summarize the program’s planned schedule risk analysis (SRA) products. Describe how

each product will help determine the level of risk associated with various tasks, determine

the readiness for technical reviews, and inform acquisition decisions. Identify who will

perform SRAs, methodologies used, and periodicity.

• Discuss how often the program conducts Defense Contract Management Agency (DCMA)

14-point schedule health checks on the IMS (Earned Value Management System (EVMS)

Program Analysis Pamphlet (PAP) (DCMA-EA PAM 200.1) October 2012:

http://www.dcma.mil/LinkClick.aspx?fileticket=0CBjAarXWZA%3d&portalid=31).

• Describe the process to resolve/correct deficiencies identified by the DCMA health check.

• Describe the impact of schedule constraints and dependencies.

• Describe initiated, completed, or planned actions to mitigate schedule drivers.

• Describe the periodicity for performing critical path analysis, identifying items on the critical

path with any risks and mitigations to meet schedule objectives.

• Describe how the PM will substantiate HW/SW schedule realism and the rigorous basis of

estimate used to develop the detailed hardware/software activities.

Expectation: Program should regularly check IMS health and conduct SRAs to inform program

decisions.
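Critical path analysis and the float-based DCMA 14-point checks both rest on a critical path method (CPM) forward and backward pass over the IMS logic. The sketch below is a minimal illustration, not a substitute for a scheduling tool. It assumes durations in working days and finish-to-start links with no lags, calendars, or date constraints. It also treats the 44-working-day high-float threshold with a 5% tolerance as the commonly cited DCMA value, which the program should confirm against DCMA-EA PAM 200.1. The function names and the five-task network are hypothetical.

```python
from collections import defaultdict, deque

HIGH_FLOAT_DAYS = 44  # assumed DCMA high-float threshold (working days); confirm in DCMA-EA PAM 200.1
MAX_HIGH_FLOAT_SHARE = 0.05  # assumed tolerance: no more than 5% of tasks with high float


def critical_path(durations, links):
    """CPM forward/backward pass.

    durations: dict task -> duration in working days
    links: list of (predecessor, successor) finish-to-start links, no lags or constraints
    Returns (project duration, total float per task, critical tasks in network order).
    """
    succs, preds = defaultdict(list), defaultdict(list)
    indegree = {t: 0 for t in durations}
    for p, s in links:
        succs[p].append(s)
        preds[s].append(p)
        indegree[s] += 1

    # Topological order (Kahn's algorithm); any leftover task means the logic has a loop.
    order, ready = [], deque(t for t in durations if indegree[t] == 0)
    while ready:
        t = ready.popleft()
        order.append(t)
        for s in succs[t]:
            indegree[s] -= 1
            if indegree[s] == 0:
                ready.append(s)
    if len(order) != len(durations):
        raise ValueError("schedule logic contains a circular dependency")

    # Forward pass: early start (ES) and early finish (EF).
    es, ef = {}, {}
    for t in order:
        es[t] = max((ef[p] for p in preds[t]), default=0)
        ef[t] = es[t] + durations[t]
    finish = max(ef.values())

    # Backward pass: late finish (LF) and late start (LS).
    lf, ls = {}, {}
    for t in reversed(order):
        lf[t] = min((ls[s] for s in succs[t]), default=finish)
        ls[t] = lf[t] - durations[t]

    total_float = {t: ls[t] - es[t] for t in order}
    critical = [t for t in order if total_float[t] == 0]
    return finish, total_float, critical


def high_float_check(total_float):
    """Share of tasks with total float above the threshold, and whether it is within tolerance."""
    share = sum(f > HIGH_FLOAT_DAYS for f in total_float.values()) / len(total_float)
    return share, share <= MAX_HIGH_FLOAT_SHARE


durations = {"design": 30, "procure": 20, "build": 40, "manuals": 10, "test": 25}
links = [("design", "build"), ("procure", "build"), ("design", "manuals"),
         ("build", "test"), ("manuals", "test")]
finish, total_float, critical = critical_path(durations, links)
print(finish, critical)               # 95 ['design', 'build', 'test']
print(high_float_check(total_float))  # (0.0, True)
```

Tasks with zero total float form the critical path. In this example "procure" and "manuals" carry 10 and 30 days of float, so neither trips the high-float check.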

3.1.1.2 Family of Systems/System of Systems Management

As part of the digital ecosystem implementation and within the ecosystem, describe the external organization integration plan. Identify the organization responsible for coordinating SE and ecosystem integration efforts associated with FoS/SoS and its authority to reallocate resources (funding and manpower). Describe methods used to document, facilitate, and manage interaction among SE team(s) and external-to-program government organizations (e.g., OUSD(R&E)) on technical tasks, activities, and responsibilities (e.g., requirements, technical baselines, and technical reviews). Address the following:

• Resolution of issues that cross PM, PEO, and Component lines

• Digital engineering implementation and how it interfaces with new starts and legacy

programs. Include how the digital ecosystem will be implemented to track and highlight

integration issues within the program and with other programs (SoS)

• ICDs and any interface control WGs (ICWGs)


• “Triggers” that require a FoS/SoS member to inform the others if there is a cost, schedule, or

performance deviation

• Description of who or what team (e.g., IPT/WG) is responsible for maintaining the alignment

of the IMP and IMS across the interdependent programs

• Planned linkage between HW and SW upgrade programs within the FoS/SoS, to include

modeling

• Any required GFE/government-furnished property/information (GFP/GFI) (e.g., test ranges,

integration laboratories, and special equipment)

• Any major system components and modular system interfaces shared from or used by other

programs (MOSA)

Include an SoS schedule (Figure 3.1-2) that shows FoS/SoS dependencies such as alignment

of technical reviews, major milestones, test phases, GFE/GFP/GFI, etc.

Note: If the system neither has nor will have any relationship with any external organization, the program may omit the content of 3.1.1.2 FoS/SoS Management and the associated Figure 3.1-2 SoS Schedule.

Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.1-2 System-of-Systems Schedule as of [Date] (mandatory) (sample)

Expectation: Program should

• Manage the internal program schedule and synchronize it with external program schedules.


• Identify external interfaces and clearly define dependencies. This information should

include interface control specifications or documents, which should be confirmed early on

and placed under strict configuration control. Compatibility with other interfacing systems

and common architectures should be maintained throughout the development/design

process.

• Identify any major system components, major system platforms, and modular system

interfaces (MOSA) with dependencies clearly defined (DoDI 5000.88, Para 3.4.a.(3).(r)).

This description should include all technical data and computer software (see Section 3.2.9)

that will be delivered with appropriate IP rights.

• Develop Memorandums of Agreement with interfacing organizations that include:

o Tripwires and notification to FoS/SoS members of any significant (nominally >10%)

variance in cost, schedule, or performance

o Mechanisms for FoS/SoS members to comment on proposed interface changes to

include program’s digital engineering implementation

o Fast-track issue identification and resolution process
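The MOA tripwire reduces to a relative-variance test against an agreed baseline. This sketch assumes each measure is a single number with a non-zero baseline and that a deviation in either direction counts as variance. The `Measure` class, the `tripped` function, and the example values are hypothetical.

```python
from dataclasses import dataclass

TRIPWIRE = 0.10  # "nominally >10%"; an MOA may set a different value


@dataclass
class Measure:
    name: str        # e.g., "cost", "schedule", "performance"
    baseline: float  # value agreed in the MOA baseline
    current: float   # latest estimate or actual, in the same units


def variance(m: Measure) -> float:
    """Relative deviation from baseline in either direction (0.13 means 13%)."""
    if m.baseline == 0:
        raise ValueError(f"baseline for {m.name!r} must be non-zero")
    return abs(m.current - m.baseline) / abs(m.baseline)


def tripped(measures, threshold: float = TRIPWIRE):
    """Names of measures whose variance exceeds the tripwire and should be reported to FoS/SoS members."""
    return [m.name for m in measures if variance(m) > threshold]


status = [
    Measure("cost", baseline=50.0, current=56.5),           # $M; 13% over
    Measure("schedule", baseline=24.0, current=25.0),       # months to IOC; about 4% late
    Measure("performance", baseline=400.0, current=380.0),  # range in nmi; 5% short
]
print(tripped(status))  # ['cost']
```

Counting favorable as well as unfavorable deviations is a deliberate choice here, since the MOA language refers to variance rather than shortfall; a program could restrict the test to one direction.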

3.1.2 Maturity Assessment Planning

Identify how the program will assess and document the technology maturity of all critical technologies and manufacturing processes consistent with the USD(R&E) guidance for technology readiness and Manufacturing Readiness Level (MRL) assessments. Identify the test results and artifacts, completed or planned (including any early cyber testing), that document the maturity of the technology and manufacturing processes.

Expectation: Programs will develop all critical technologies consistent with the USD(R&E)

guidance for assessing technology readiness and MRL and document the maturity of those

critical technologies and manufacturing processes. This documentation will be made available

to support Office of the Secretary of Defense (OSD)- and Service-conducted reviews and

assessments.

3.1.3 Technical Structure and Organization

3.1.3.1 Work Breakdown Structure

If a WBS exists, embed or attach it to the SEP. In addition, provide:

• WBS dictionary that is traceable from the IMS

• Explanation of the traceability between the system’s technical requirements and the WBS

• (Optional) A digital ecosystem support IPT that is resourced or is part of the SEIT IPT or

LSE/CE
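Traceability among requirements, the WBS dictionary, and the IMS can be spot-checked with simple set operations once each item carries its WBS code. The sketch below is illustrative only. The data layout, the `trace_gaps` name, and the sample WBS elements, task IDs, and requirement IDs are assumptions rather than a prescribed format.

```python
def trace_gaps(wbs_dictionary, ims_tasks, requirement_links):
    """Find breaks in requirement -> WBS -> IMS traceability.

    wbs_dictionary: dict WBS element id -> dictionary description
    ims_tasks: dict IMS task id -> WBS element id the task is coded to
    requirement_links: dict requirement id -> list of WBS element ids that implement it
    """
    wbs_ids = set(wbs_dictionary)
    return {
        # IMS tasks coded to a WBS element that is missing from the WBS dictionary
        "orphan_tasks": sorted(t for t, w in ims_tasks.items() if w not in wbs_ids),
        # Requirements with no WBS element, or linked to an element not in the dictionary
        "untraced_requirements": sorted(
            r for r, ws in requirement_links.items() if not ws or not set(ws) <= wbs_ids
        ),
        # WBS elements with no IMS work scheduled against them
        "unscheduled_wbs": sorted(wbs_ids - set(ims_tasks.values())),
    }


wbs = {"1.1": "Air Vehicle", "1.2": "Systems Engineering", "1.3": "System Test and Evaluation"}
ims = {"T100": "1.1", "T200": "1.2", "T300": "1.4"}
reqs = {"SYS-001": ["1.1"], "SYS-002": [], "SYS-003": ["1.3"]}
print(trace_gaps(wbs, ims, reqs))
# {'orphan_tasks': ['T300'], 'untraced_requirements': ['SYS-002'], 'unscheduled_wbs': ['1.3']}
```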

3.1.3.2 Government Program Office Organization

Provide the planned program office organizational structure (i.e., wiring diagram to illustrate

hierarchy and identify any positions that are not filled) with an as-of date, and include the

following elements (Figure 3.1-3):

• Organization to which the program office reports


• PM

• LSE/CE

• Functional Leads (e.g., test and evaluation (T&E), logistics, DMSMS, risk, production/quality,

reliability, SW, digital ecosystem, system safety).

FFRDC: Federally Funded Research and Development Center; KLP: Key Leadership Position

Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.1-3 Program Office Organization as of [Date] (mandatory) (sample)

3.1.3.3 Program Office Technical Staffing Levels

Summarize the program’s technical staffing plan, to include:

• Risks and increased demands on existing resources if staffing requirements are not met

• A figure (e.g., sand chart, Figure 3.1-4) to show the number of required PMO full-time

equivalent (FTE) positions (e.g., organic, matrix support, and contractor support) over time,

by key program events (e.g., milestones and technical reviews)

• Description of the basis of estimate for the staffing sand chart

• A figure to show the program’s budget for SE and program management (SEPM) over time

as a percentage of total program budget (Figure 3.1-5)

• Description of the adequacy of SW development staffing resources


o Describe the key PMO and contractor SWE position experience and qualification requirements (e.g., quantity and experience level).

• Description of the adequacy of staffing resources for the digital ecosystem

o Describe the key digital ecosystem position experience to include the skill set,

experience and qualification requirements applicable for model-based systems

engineering, simulation, software engineering (SWE), and information technology

positions (e.g., quantity and experience level).

• For programs still under competition, the approaches used to manage flow of information in

the competitive environment

• Description of the adequacy of cyber engineering development staffing resources

o The key PMO and contractor cyber engineering position experience and qualification

requirements (e.g., quantity and experience level), to include adversarial testing

• Description of the adequacy of system safety staffing resources

Expectation: Program should use a workload analysis tool to determine the adequate level of staffing, appropriate skill mix, and required amount of experience to properly staff, manage, and execute the program successfully.
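The sand chart and SEPM figures reduce to sums and ratios once the data is in hand. This sketch shows the arithmetic behind Figures 3.1-4 and 3.1-5 plus a simple shortfall flag. The function names and the FTE and budget figures are hypothetical, and the sketch is not the workload analysis tool the Expectation calls for.

```python
def sand_chart_totals(required_fte):
    """Total required FTEs per period across categories (e.g., organic, matrix, contractor)."""
    return {period: sum(by_category.values()) for period, by_category in required_fte.items()}


def staffing_shortfall(required_fte, onboard_fte):
    """Required minus on-board FTEs per period; positive values flag unmet staffing requirements."""
    totals = sand_chart_totals(required_fte)
    return {period: totals[period] - onboard_fte.get(period, 0) for period in totals}


def sepm_percentage(sepm_budget, total_budget):
    """SEPM budget as a percentage of total program budget per fiscal year."""
    return {fy: round(100.0 * sepm_budget[fy] / total_budget[fy], 1) for fy in total_budget}


required = {
    "FY24": {"organic": 12, "matrix": 8, "contractor": 10},
    "FY25": {"organic": 14, "matrix": 9, "contractor": 12},
}
onboard = {"FY24": 27, "FY25": 28}
print(staffing_shortfall(required, onboard))  # {'FY24': 3, 'FY25': 7}
print(sepm_percentage({"FY24": 18.0, "FY25": 21.0}, {"FY24": 150.0, "FY25": 175.0}))
# {'FY24': 12.0, 'FY25': 12.0}
```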


Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.1-4 Program Technical Staffing (mandatory) (sample)


Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.1-5 SEPM Budget (mandatory) (sample)

3.1.3.4 Engineering Team Organization and Staffing

• Integrated Product Team (IPT) Organization – Provide diagrams that show the

government and contractor (when available) IPTs and their associated working-level IPTs

(WIPTs) and WGs that illustrate the hierarchy and relationship among them (Figure 3.1-6).

Identify the government leadership for all teams.

• IPT Details – For government and contractor(s) (when available) IPTs and other key teams

(e.g., Level 1 and 2 IPTs and WGs), include the following details either by attaching

approved charters or in a table (Table 3.1-1, mandatory unless charters attached):

o IPT name

o Functional team membership (to include external program members and representation from all SpE disciplines (Section 2.3) and design consideration areas (Section 2.5))

o IPT roles, responsibilities, and authorities

o WBS tasks assigned to IPT

o IPT products (e.g., updated baselines, risks, etc.)

o IPT-specific TPMs and other metrics.


[Figure 3.1-6 sample content, teams shown in order: Systems Engineering IPT; Digital Engineering WG; Air Vehicle IPT; Airframe WG; Propulsion WG; Software WG; Security WG; Airworthiness WG; E3 WG; Flight Systems WG; T&E IPT; Live Fire WG; Survivability WG; Interoperability WG; R&M WG; Mission Equipment IPT; CNS/ATM; Mission Planning; Defensive Systems WG; Cyber WG.]

Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.1-6 IPT/WG Hierarchy (mandatory) (sample)

Expectation: Program should integrate SE activities with all appropriate functional and stakeholder organizations within the digital ecosystem. In addition, IPTs should include personnel responsible for each of the design consideration areas in Table 2.5-1. Note: Ensure the IPTs in Figure 3.1-6 match the IPTs in Table 3.1-1.


Table 3.1-1 Integrated Product Team Details (mandatory unless charters are submitted) (sample)

Columns: Team Name | Chair | Team Membership (by Function or Organization) | Team Role, Responsibility, and Authority | Products and Metrics

Team Name: SE IPT
Chair: Lead SE

Team Membership (by Function or Organization):
• Program Office
o Platform Lead
o Mission Equipment Lead
o Weapons Lead
o Test Lead
o Logistics Manager
o DMSMS Lead
o SW Lead
o Production/Quality Manager
o System Safety Lead
o Interoperability Lead
o R&M Lead
o System Security Engineering Lead
o Cyber Lead
• PEO and PM
• Service Representative
• OSD SE
• Key Subcontractor or Suppliers
• External programs
• Digital Ecosystem Lead
• Intelligence Lead
• Environmental Lead
• DCMA Engineers

Team Role, Responsibility, and Authority:
Role: IPT Purpose (e.g., Aircraft Design and Development)
Responsibilities: Integrate all technical efforts throughout the life cycle within an end-to-end digital ecosystem
• Manage and oversee design activities
• Oversee configuration management of requirements and their traceability
• System Safety
• Manage specialty engineering activities including the following disciplines: survivability/vulnerability, human systems integration, electromagnetic environmental effects (E3), reliability and maintainability (including availability), system security, and environmental impacts to system/subsystem performance
• Evaluate and mitigate counterfeit and DMSMS risk in design, production, and sustainment
• Manage safety and certification requirements
• Ensure compliance with applicable international, federal, state, and local environment, safety, and occupational health (ESOH) laws, regulations, and treaties
• Manage system manufacturing assessments, weight, and facilities management (System Integration Laboratory) planning
• Perform functional allocations and translate the system definition into WBS
• Ensure compliance with all specialty engineering specification requirements
• Support the Program Protection IPT and Program Protection System Engineering
• Manage SEIT performance through the digital ecosystem, EVMS, TPMs, and other metrics and risk assessments
• Identify and communicate SEIT issues to leadership
• Evaluate technical and performance content and cost/schedule impacts to support the Configuration Control Board (CCB) process
• Support test plan development and execution
• Support the T&E IPT in system verification requirements
• Support the Product Support IPT WGs and other Technical Interchange Meetings (TIMs)
• Develop and support the SEIT part of the incremental development and technology refresh processes
• Support Program Management Reviews (PMRs)
• Support program technical reviews and audits
• Perform SEIT trade studies to support affordability goals/caps
• Perform FAR mandatory engineering surveillance
• Ensure minimum essential data is acquired and managed.
Schedule and frequency of meetings
Date of signed IPT charter and signatory

Products and Metrics:
Products:
SEP/SEP updates
WBS, IMP/IMS input
Specifications
Digital Ecosystem
Architecture and Design Description
Metrics tracked by IPT:
• Cost
• Performance
• Schedule
• Engineering Infrastructure and Environment Utilization and Performance Metrics


Team Name: XXX IPT
Chair: XXX Lead

Team Membership (by Function or Organization):
• Program Office
o Lead SE
o Mission Equipment Lead
o Weapons Lead
o Test Manager
o Logistics Manager
o DMSMS Lead
o SW Lead
o R&M Lead
o Production/Quality Manager
o Safety Lead
o System Security Lead
o Interoperability Rep.
• Key Subcontractor or Suppliers

Team Role, Responsibility, and Authority:
Role: IPT Purpose
Responsibilities: Integrate all technical efforts
• Team member responsibilities
• Cost, performance, schedule goals
• Scope, boundaries of IPT responsibilities
Schedule and frequency of meetings
Date of signed IPT charter and signatory

Products and Metrics:
Products:
• Specification input
• SEP input
• TEMP input
• DMP input
• AS input
Metrics tracked by IPT:
• TPM 1
• TPM 2

CCB: Configuration Control Board; DMP: Data Management Program; FAR: Federal Acquisition Regulation; IPT: Integrated Product Team; SEP: Systems Engineering Plan; TEMP: Test and Evaluation Master Plan; TPM: Technical Performance Measure; etc.


3.2 Technical Tracking

3.2.1 Technical Risk, Issue, and Opportunity Management

• Technical Risk, Issue, and Opportunity (RIO) Management Process Diagrams

o Embed or attach to the SEP the latest (no more than 3 months old) RIO management

document including an as-of date.

• Risk Management Roles

o Determine roles, responsibilities, and authorities within the risk management process for

the following:

• Reporting/identifying risks or issues

• Criteria used to determine whether a “risk” submitted for consideration becomes a

risk or not (typically, criteria for likelihood and consequence)

• Adding/modifying risks

• Changing likelihood and consequence of a risk

• Closing/retiring a risk or issue

o If Risk Review Boards or Risk Management Boards are part of the process, identify the

chair and participants and state how often they meet.

o State how the process will be implemented using the digital ecosystem and digital

artifacts, establishing the risk ASoT while maximizing automated reporting, seamless

access, and accuracy of risk status.

• Risk/Issue Management

o Risk Tools – Describe the risk management and tracking tools the program office and

contractor(s) will use. If the program office and contractor(s) use different risk tools,

describe how information will be transferred or integrated without loss. Note: In general,

the same tool should be used. If the contractor’s tool is acceptable, the government may

opt to use it but must have direct, networked access to the tool.

o Technical Risk and Mitigation Planning – Summarize the key engineering, integration,

technology, SpE, and unique SW risks and planned mitigation measures for each risk

(DoDI 5000.88, Para 3.4.a.(3).(q)).

o Risk Reporting – Provide a risk reporting matrix (Figure 3.2-1) or a list of the current

system-level technical risks and issues with:

• As-of date

• Risk rating

• Risk statement and consequences, if realized

• Mitigation activities and expected closure date.

System safety risks can also be mapped on the risk cube and reporting matrix in Figure 3.2-1. However, the risk burn-down process shown in Figure 3.2-2 depends on the process to attain acceptance by the System Safety Risk Assessment Authority or mitigation through the system safety design order of precedence.


[Figure 3.2-1 sample content: a 5×5 risk cube plotting Likelihood (1-5) against Consequence (1-5), with cells rated High, Moderate, or Low and markers for Original Risk Analysis, Current Assessment, and Predicted Final, accompanied by risk entries in the following format.]

• Risk ID #82: Risk Statement…
• Consequences if Realized:
- Cost -
- Performance -
- Schedule -
• Mitigation Method: (Accept, Avoid, Transfer or Control) Summarize activities:
1. Summarize Key Activity 1
2. Summarize Key Activity 2
3. Etc.
• Planned Closure Date:

(The same entry format is repeated for Risk IDs #23, #45, #97, and #85.)

Level | Likelihood | Probability of Occurrence
5 | Near Certainty | > 80% to ≤ 99%
4 | Highly Likely | > 60% to ≤ 80%
3 | Likely | > 40% to ≤ 60%
2 | Low Likelihood | > 20% to ≤ 40%
1 | Not Likely | > 1% to ≤ 20%

Level | Cost | Schedule | Performance
5 Critical Impact | 10% or greater increase over APB objective values for RDT&E, PAUC, or APUC; cost increase causes program to exceed affordability caps | Schedule slip will require a major schedule rebaselining; precludes program from meeting its APB schedule threshold dates | Degradation precludes system from meeting a KPP or key technical/supportability threshold; will jeopardize program success; unable to meet mission objectives (defined in mission threads, ConOps, OMS/MP)
4 Significant Impact | 5% - <10% increase over APB objective values for RDT&E, PAUC, or APUC; costs exceed life cycle ownership cost KSA | Schedule deviations will slip program to within 2 months of approved APB threshold schedule date; schedule slip puts funding at risk; fielding of capability to operational units delayed by more than 6 months | Degradation impairs ability to meet a KSA; technical design or supportability margin exhausted in key areas; significant performance impact affecting System-of-Systems interdependencies; work-arounds required to meet mission objectives
3 Moderate Impact | 1% - <5% increase over APB objective values for RDT&E, PAUC, or APUC; manageable with PEO or Service assistance | Can meet APB objective schedule dates, but other non-APB key events (e.g., SETRs or other Tier 1 Schedule events) may slip; schedule slip impacts synchronization with interdependent programs by greater than 2 months | Unable to meet lower tier attributes, TPMs, or CTPs; design or supportability margins reduced; minor performance impact affecting System-of-Systems interdependencies; work-arounds required to achieve mission tasks
2 Minor Impact | Costs that drive unit production cost (e.g., APUC) increase of <1% over budget; cost increase, but can be managed internally | Some schedule slip, but can meet APB objective dates and non-APB key event dates | Reduced technical performance or supportability; can be tolerated with little impact on program objectives; design margins reduced, within trade space
1 Minimal Impact | Minimal impact; costs expected to meet approved funding levels | Minimal schedule impact | Minimal consequences to meeting technical performance or supportability requirements; design margins will be met; margin to planned tripwires

Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.2-1 Risk Reporting Matrix as of [Date] (mandatory) (sample)

(Note: Include an as-of date – time-sensitive figure.)

• Risk Burn-Down
o Describe how the program uses a risk burn-down plan to show how it will implement mitigation activities to control and retire risks. Also discuss how activities are linked to TPMs and to the project schedule for critical tasks. For each high technical risk, provide the risk burn-down plan. (Figure 3.2-2 contains a sample risk burn-down plan.)
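A burn-down plan can be tracked by comparing the risk level the plan expects on a given date with the current assessment. In this sketch the three activities reuse the labels from the sample Figure 3.2-2. The dates, the integer risk levels (assumed to run 1 to 5, higher meaning worse), and the `BurnDownStep` and `burn_down_status` names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class BurnDownStep:
    activity: str
    planned_complete: date
    planned_level_after: int  # risk level expected once the step is done (higher = worse)
    actual_complete: Optional[date] = None


def planned_level(initial_level, steps, as_of):
    """Risk level the burn-down plan expects on the as-of date."""
    level = initial_level
    for step in sorted(steps, key=lambda s: s.planned_complete):
        if step.planned_complete <= as_of:
            level = step.planned_level_after
    return level


def burn_down_status(initial_level, steps, as_of, current_level):
    """Compare the current assessment with the plan and list overdue mitigation steps."""
    expected = planned_level(initial_level, steps, as_of)
    overdue = [s.activity for s in steps
               if s.planned_complete <= as_of and s.actual_complete is None]
    return {"expected": expected, "current": current_level,
            "behind_plan": current_level > expected, "overdue": overdue}


plan = [
    BurnDownStep("Install higher efficiency magnets", date(2024, 2, 1), 3, date(2024, 2, 10)),
    BurnDownStep("Improve generator power output", date(2024, 3, 15), 2),
    BurnDownStep("Flight test of UAV", date(2024, 6, 1), 1),
]
print(burn_down_status(4, plan, as_of=date(2024, 4, 1), current_level=3))
# {'expected': 2, 'current': 3, 'behind_plan': True, 'overdue': ['Improve generator power output']}
```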

Expectation: Program should use hierarchical boards to address risks and integrate risk systems with contractors. The approach to identify risks is both top-down and bottom-up. Risks related to technology maturation, internal and external integration, modeling, and each design consideration indicated in Table 2.5-1 are considered in risk identification. SEPs submitted for approval contain a current, updated Risk Reporting Matrix and associated Risk Burn-Down plan for high technical risks. Risk reporting artifacts should be auto-generated from within the digital ecosystem on demand, depict real-time status, and be accessible to all program personnel.
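The numeric parts of the Figure 3.2-1 criteria translate directly into lookup functions, which is one way risk artifacts could be generated automatically from the digital ecosystem. The sketch encodes only the likelihood bands and the cost consequence column, because the schedule and performance criteria are qualitative. It simplifies the level 1 and level 2 cost criteria as noted in the docstring, and it stops at levels because the text does not tabulate which likelihood and consequence cells are rated High, Moderate, or Low. Function names are hypothetical.

```python
def likelihood_level(p: float) -> int:
    """Likelihood level (1-5) for a probability of occurrence, per the Figure 3.2-1 likelihood table."""
    if not 0.01 < p <= 0.99:
        raise ValueError("probability outside the >1% to <=99% range the table covers")
    for level, lower_bound in ((5, 0.80), (4, 0.60), (3, 0.40), (2, 0.20)):
        if p > lower_bound:  # each band runs from "> lower bound" to "<= upper bound"
            return level
    return 1


def cost_consequence_level(growth_pct: float, exceeds_affordability_cap: bool = False) -> int:
    """Cost consequence level from % growth over the APB objective (RDT&E, PAUC, or APUC).

    Simplification (assumption): any growth below 1% is treated as level 2 and no growth
    as level 1, although the level 2 criterion is stated against unit production cost budget.
    """
    if growth_pct >= 10 or exceeds_affordability_cap:
        return 5
    if growth_pct >= 5:
        return 4
    if growth_pct >= 1:
        return 3
    if growth_pct > 0:
        return 2
    return 1


print(likelihood_level(0.85), likelihood_level(0.80), likelihood_level(0.35))  # 5 4 2
print(cost_consequence_level(7.5), cost_consequence_level(0.4))                 # 4 2
```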

[Figure 3.2-2 sample content: a timeline from the initial date through month 6 showing planned and actual points, the current date, and three mitigation steps, each marked Complete or Pending: (1) Install higher efficiency magnets (static test results); (2) Improve generator power output (bench test); (3) Flight test of UAV.]

Source: Name Year if applicable. Classification: UNCLASSIFIED.

Figure 3.2-2 Risk Burn-Down Plan as of [Date] (mandatory for high risks; others optional) (sample)

• Opportunity Management – Discuss the program’s opportunity management plans to create, identify, model, analyze, plan, implement, and track initiatives (including technology investment planning and pollution prevention projects) that can yield improvements in the program’s cost, schedule, or performance baseline through reallocation of resources.
o If applicable, insert a chart or table that depicts the opportunities being pursued, and summarize the cost/benefit analysis and expected closure dates (Table 3.2-1).
o Address opportunities that would mitigate system safety risks and improve return on investment.


Table 3.2-1 Opportunity Register (if applicable) (sample)

Opportunity | Likelihood | Cost to Implement | Return on Investment: Monetary (RDT&E, Procurement, O&M), Schedule, Performance, System Safety Impact | Program Priority | Management Strategy | Owner | Expected Closure
Opportunity 1: Procure Smith rotor blades instead of Jones rotor blades. | Mod | $3.2M | Monetary: $4M; Schedule: 3-month margin; Performance: 4% greater lift | #2 | Reevaluate; summarize the plan | Mr. Bill Moran | March 2017
Opportunity 2: Summarize the opportunity activity. | Mod | $350K | Monetary: $25K, $375K | #3 | Reject | Ms. Dana Turner | N/A
Opportunity 3: Summarize the opportunity activity. | High | $211K | Monetary: $0.04M, $3.6M; Schedule: 4 months less long-lead time needed | #1 | Summarize the plan to realize the opportunity | Ms. Kim Johnson | January 2017
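The sample register can be ranked by a simple net monetary return. This sketch assumes the first dollar figure in each row of Table 3.2-1 is the cost to implement and the remaining figures are monetary returns that can be summed across the RDT&E, Procurement, and O&M columns. The `Opportunity` class is hypothetical, and all amounts are converted to $M.

```python
from dataclasses import dataclass


@dataclass
class Opportunity:
    name: str
    cost_to_implement: float       # $M
    monetary_returns: list[float]  # $M, assumed summable across RDT&E, Procurement, and O&M

    @property
    def net_return(self) -> float:
        return sum(self.monetary_returns) - self.cost_to_implement


register = [
    Opportunity("Opportunity 1", 3.2, [4.0]),
    Opportunity("Opportunity 2", 0.35, [0.025, 0.375]),
    Opportunity("Opportunity 3", 0.211, [0.04, 3.6]),
]
for opp in sorted(register, key=lambda o: o.net_return, reverse=True):
    print(f"{opp.name}: net ${opp.net_return:.3f}M")
# Opportunity 3: net $3.429M
# Opportunity 1: net $0.800M
# Opportunity 2: net $0.050M
```

With the sample data, ranking by net return happens to reproduce the table's priority order (#1 Opportunity 3, #2 Opportunity 1, #3 Opportunity 2). A real prioritization would also weigh likelihood and the schedule, performance, and system safety returns, which this sketch ignores.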

3.2.2 Technical Performance Measures

Summarize the program’s strategy for selecting the set of measures for tracking and reporting the maturation of system development, design, and production. TPMs are carefully chosen, and their values are collected over time to reveal trends and forecast program progress to plan. TPMs provide the ability for the PM, LSE, and senior decision makers to (1) gain quantifiable insight into technical progress, trends, and risks; (2) empirically forecast the impact on program cost, schedule, and performance; and (3) provide measurable feedback on changes made to program planning or execution to mitigate potentially unfavorable outcomes. TPMs are metrics that show how well a system is satisfying its requirements or meeting its goals. TPMs for cyber survivability and operational resilience should be defined. TPMs should not repeat Critical Risks, KPPs, Key System Attributes (KSAs), or Critical Technical Parameters (CTPs) but should trace to them. As the system matures, the program should add, update, or delete TPMs documented in the SEP.

(See SE Guidebook (2022), Technical Assessment Process, for category definitions and additional guidance.) This section should include:

• An overview of the measurement planning and selection process, including the approach to

monitor execution to the established plan, and identification of roles, responsibilities, and

authorities for this process

• A set of TPMs covering a broad range of core categories, rationale for tracking, intermediate goals, and the plan to achieve them with as-of dates (Table 3.2-2)
• SE leading indicators to provide insight into the system technical maturation relative to a baseline plan
• The maturation strategy, assumptions, reporting methodology, and maturation plans for each metric, with each performance metric traced to system requirements and mission capability characteristics

• The program’s process and documentation approach for adding or deleting TPMs and any

changes to the TPM goals

• Whether any contractual provisions relate to meeting TPM goals or objectives

• A description of how models, simulations, the digital ecosystem, and digital artifacts will be used to support TPM tracking and reporting
• A description of the traceability among KPPs; KSAs; key technical risks and identified TPMs; CTPs (listed in the TEMP); Critical Program Information (CPI); threats associated with the program’s Critical Intelligence Parameters (CIPs) (identified by Service Intelligence); vulnerabilities (listed in the Program Protection Plan (PPP)); and other measures:
o Identify how each KPP and KSA is covered by a TPM. If a KPP or KSA is not covered by a TPM, explain why.
o Identify how the achievement of each CTP is covered by a TPM. If a CTP is not covered by a TPM, explain why.
o Identify planned manufacturing measures, appropriate to the program phase, to track manufacturing readiness performance to plan.
o Identify SW measures for SW technical performance, process, progress, and quality (e.g., Table 3.2-2; Appendix C – Agile and Development, Security and Operations (DevSecOps) Software Development Metrics).
o Identify what threat information is being used and whether a Validated Online Lifecycle Threat (VOLT) from Service intelligence was used. The engineering team should use and review the VOLT and provide it to the prime contractor. If a VOLT is not being used, explain why.
o Indicate which CIPs have been defined for any threat-sensitive requirements per the JCIDS Manual, and identify how a CIP breach would affect the associated TPMs.
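The coverage checks in the sub-bullets above (every KPP, KSA, and CTP covered by a TPM, and the TPMs affected by a CIP breach) can be run as simple queries over the program's trace data. The following Python sketch assumes a hypothetical data model in which each TPM record lists the requirement IDs it traces to and the CIPs whose breach would affect it. All IDs and TPM names are hypothetical placeholders; a real program would draw them from its requirements and digital engineering repositories.

```python
# Illustrative sketch: find KPPs/KSAs/CTPs with no tracing TPM, and the TPMs
# affected by a CIP breach. The data model and all IDs are hypothetical.
from dataclasses import dataclass, field


@dataclass
class TPM:
    name: str
    traces_to: set[str] = field(default_factory=set)  # KPP/KSA/CTP IDs
    cips: set[str] = field(default_factory=set)  # CIPs whose breach affects this TPM


def uncovered(requirements: dict[str, str], tpms: list[TPM]) -> dict[str, str]:
    """Return {requirement ID: type} for every item no TPM traces to.
    Each of these needs a written explanation in the SEP."""
    covered = set().union(*(t.traces_to for t in tpms))
    return {rid: kind for rid, kind in requirements.items() if rid not in covered}


def affected_by(cip: str, tpms: list[TPM]) -> list[str]:
    """Return the names of TPMs linked to the given CIP."""
    return [t.name for t in tpms if cip in t.cips]


# Hypothetical example data.
REQUIREMENTS = {"KPP-1": "KPP", "KPP-2": "KPP", "KSA-1": "KSA", "KSA-2": "KSA", "CTP-1": "CTP"}
TPMS = [
    TPM("MTBOMF", {"KSA-1"}),
    TPM("Operating Weight", {"KPP-1", "CTP-1"}),
    TPM("Protection measures", {"KSA-2"}, {"CIP-1"}),
]

if __name__ == "__main__":
    print("Needs explanation:", uncovered(REQUIREMENTS, TPMS))  # {'KPP-2': 'KPP'}
    print("CIP-1 breach affects:", affected_by("CIP-1", TPMS))
```

Keeping the trace links on the TPM record means the same data answers both questions, and an empty result from `uncovered` is a quick check before SEP review.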

Table 3.2-2 provides sample TPMs in each of 15 core categories, with intermediate goals, as a best practice for effective technical management (DoDI 5000.88, Para 3.4.a.(3).(g)).
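To show how a TPM goal and its intermediate goals can be evaluated together, the following Python sketch checks the latest actual for three rows of the sample TPM table against both the final TPM goal and the planned value at the same milestone. Encoding each goal as a comparator and threshold (including treating the 100% ICD goal as ">= 100"), and treating "at or better than the intermediate plan value" as on plan, are assumptions made for this example rather than rules from this outline.

```python
# Illustrative sketch: check latest TPM actuals against the final goal and the
# intermediate (planned) value at the same milestone. Values come from the
# sample TPM table; the goal encoding is an assumption for this example.
import operator
from dataclasses import dataclass

MILESTONES = ["SRR", "SFR", "PDR", "MS B", "CDR", "SVR/FCA", "MS C", "FRP"]
COMPARATORS = {">": operator.gt, ">=": operator.ge, "<": operator.lt, "<=": operator.le}


@dataclass
class TPMStatus:
    name: str
    op: str  # comparator from the TPM Goal column, e.g. ">" for ">45"
    goal: float
    plan: list[float]  # intermediate goals, one per milestone
    actual: list[float]  # actuals reported so far

    def higher_is_better(self) -> bool:
        return self.op in (">", ">=")

    def meets_goal(self) -> bool:
        """Does the latest actual satisfy the final TPM goal?"""
        return COMPARATORS[self.op](self.actual[-1], self.goal)

    def on_plan(self) -> bool:
        """Is the latest actual at or better than the plan for that milestone?"""
        i = len(self.actual) - 1
        if self.higher_is_better():
            return self.actual[i] >= self.plan[i]
        return self.actual[i] <= self.plan[i]


ROWS = [
    TPMStatus("MTBOMF", ">", 45, [36, 36, 37, 38, 40, 45, 47, 50], [33, 34, 35, 37]),
    TPMStatus("Operating Weight (lb)", "<", 99_000,
              [98_999, 98_999, 98_000, 95_000, 85_540, 85_540, 85_540, 85_650],
              [97_001, 97_001, 102_950, 97_001]),
    TPMStatus("External ICDs approved (%)", ">=", 100,
              [0, 0, 10, 20, 40, 95, 98, 99], [0, 0, 15, 20]),
]

if __name__ == "__main__":
    for row in ROWS:
        milestone = MILESTONES[len(row.actual) - 1]
        print(f"{row.name}: at {milestone}, meets goal={row.meets_goal()}, on plan={row.on_plan()}")
```

For the Operating Weight row, for example, the MS B actual (97,001 lb) already satisfies the <99,000 lb goal but exceeds the 95,000 lb intermediate goal. The TPM is therefore flagged as behind plan even though the final threshold is met, which is why intermediate goals add insight beyond the goal alone.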

Table 3.2-1 Technical Performance Measures (mandatory) (sample)

| Technical Performance Measure | TPM Category | Responsible IPT | Requirement Trace (KPP(s), KSA(s), CTP(s)) | TPM Goal | Plan / Actual | SRR Status | SFR Status | PDR Status | MS B Status | CDR Status | SVR/FCA Status | MS C Status | FRP Status |
| Mean Time Between Operational Mission Failure (MTBOMF) | Reliability, Maintainability, Availability | R&M | KSA (Reliability) | >45 | Plan | 36 | 36 | 37 | 38 | 40 | 45 | 47 | 50 |
| | | | | | Actual | 33 | 34 | 35 | 37 | | | | |
| Operating Weight (lb) | System Performance | Air Vehicle | KPP (Effective Time on Station) | <99,000 | Plan | 98,999 | 98,999 | 98,000 | 95,000 | 85,540 | 85,540 | 85,540 | 85,650 |
| | | | | | Actual | 97,001 | 97,001 | 102,950 | 97,001 | | | | |
| Interface Definition - External ICDs Planned vs. Actual | Mission Integration Management | Configuration Management | Specification (% ICDs approved vs. planned) | 100% | Plan | 0 | 0 | 10 | 20 | 40 | 95 | 98 | 99 |
| | | | | | Actual | 0 | 0 | 15 | 20 | | | | |
| Time to perform mission-critical function | Mission (End to End) Performance | Mission Systems | KPP (Time to perform critical functions, sec) | <15s | Plan | 25 | 25 | 25 | 23 | 20 | 15 | 15 | 15 |
| | | | | | Actual | 25 | 25 | 25 | 25 | | | | |
| Risk-based supply chain, design, SWA, system function, component, part-level protection measures | System Security | Mission Systems | KSA (% IA detected and prevented) | >99.5% | Plan | 85 | 90 | 90 | 95 | 95 | 99.5 | 99.5 | 99.5 |
| | | | | | Actual | 80 | 81 | 86 | 92 | | | | |
| First pass yield (FPY) (%) | Manufacturing Quality | Manufacturing | Specification (% of 1st pass acceptance) | >=0.95 | Plan | | | | | | 0.95 | 0.96 | 0.97 |
| | | | | | Actual | | | | | | | | |
| Parts Delivery Performance | Manufacturing Management | Manufacturing | Specification (% of parts accepted) | >=98% | Plan | | | | | 95% | 97% | 98% | 99% |
| | | | | | Actual | | | | | | | | |
| Schedule Deviation | Schedule Management | System Engineering | Specification (% critical path variance) | <=5 | Plan | 5.3 | 5.3 | 5.3 | 5 | 5 | 5 | 5 | 5 |
| | | | | | Actual | 8 | 7 | 6.5 | 5.5 | | | | |
| Government Staffing Deviation | Staffing and Personnel Management | System Engineering | Specification (% variance plan vs. filled) | <=5 | Plan | 5.5 | 5.5 | 5.5 | 5 | 5 | 5 | 5 | 5 |
| | | | | | Actual | 6.5 | 6.5 | 7 | 5.2 | | | | |
| Average Production Unit Cost (APUC) | Resource Management | Cost | KSA (APUC) ($) | <150M | Plan | 170 | 170 | 170 | 167 | 160 | 155 | 150 | 150 |
| | | | | | Actual | 175 | 180 | 175 | 170 | | | | |
| Average % Requirements Change per Month | Requirements Management | System Engineering | KPP/CTP (Design Control and Stability) | 0% | Plan | 35 | 30 | 25 | 17 | 2 | 0 | 0 | 0 |
| | | | | | Actual | 33 | 29 | 26 | 24 | | | | |
| Software Size | Software Engineering | Software | Metric (e.g., SLOC, ESLOC, Story Points, Function Points) (% Estimating Uncertainty) | n/a | Plan | 500 FP | 500 FP | 500 FP | 500 FP | 500 FP | 500 FP | 500 FP | 500 FP |
| | | | | | Actual | 250 @ 70% | 350 @ 75% | 460 @ 80% | 480 @ 85% | | | | |
| Software Schedule / Duration | Software Schedule | Software | Metric (Project phase; e.g., Rqmts, High-level and Detailed Design, Code and Unit Test, Integration, System Test) (# months) (% Schedule Definition) | n/a | Plan | 70 | 70 | 80 | 90 | 95 | 100 | 100 | 100 |
| | | | | | Actual | 70 @ 70% | 70 @ 75% | 80 @ 80% | 95 @ 90% | | | | |
| Software Staffing | Software Resources | Software | Metric (Full-time Equivalent) | n/a | Plan | 70 | 90 | 100 | 110 | 110 | 80 | 70 | 50 |
| | | | | | Actual | 60 | 88 | 99 | 110 | | | | |
| Effort | Software Engineering | Software | Metric (Hours) (% Estimating Uncertainty) | n/a | Plan | 80 | 80 | 95 | 100 | 100 | 100 | 100 | 100 |
| | | | | | Actual | 75 @ 65% | 70 @ 75% | 90 @ 80% | 105 @ 90% | 110 @ 95% | | | |
| Software Defects | Software Quality | Software | Metric (Open critical Priority 1 and 2 defects, optionally by CSCI) | 0 | Plan | n/a | n/a | n/a | 20 | 10 | 0 | 0 | 0 |
| | | | | | Actual | n/a | n/a | n/a | 21 | 15 | 6 | 2 | 0 |

Phase

Containment

Software

Quality

Software