Information Processing and Management 61 (2024) 103672

Available online 3 February 2024

0306-4573/© 2024 The Authors. Published by Elsevier Ltd. This is an open access article under the CC BY license (http://creativecommons.org/licenses/by/4.0/).

Contents lists available at ScienceDirect

Information Processing and Management

journal homepage: www.elsevier.com/locate/ipm

Cognitive Biases in Fact-Checking and Their Countermeasures: A Review

Michael Soprano (a, ∗), Kevin Roitero (a), David La Barbera (a), Davide Ceolin (b), Damiano Spina (c), Gianluca Demartini (d), Stefano Mizzaro (a)

(a) University of Udine, Via Delle Scienze 206, Udine, Italy

(b) Centrum Wiskunde & Informatica (CWI), Science Park 123, Amsterdam, The Netherlands

(c) RMIT University, 124 La Trobe St, Melbourne, Australia

(d) The University of Queensland, St Lucia QLD 4072, Brisbane, Australia

ARTICLE INFO

Keywords: Cognitive bias; Misinformation; Fact-checking; Truthfulness assessment

ABSTRACT

The growing amount of misinformation spread online every day is a huge threat to society. Organizations and researchers are working to counter this plague of misinformation. In this setting, human assessors are indispensable to correctly identify, assess, and/or revise the truthfulness of information items, i.e., to perform the fact-checking activity. Being human, assessors are subject to systematic errors that might interfere with their fact-checking activity. Among such errors, cognitive biases are those due to the limits of human cognition. Although biases help to minimize the cost of making mistakes, they skew assessments away from an objective perception of information. Cognitive biases are therefore particularly frequent and critical. They can cause errors with a huge potential impact, as these errors propagate not only in the community but also in the datasets used to train automatic and semi-automatic machine learning models to fight misinformation. In this work, we present a review of the cognitive biases that might occur during the fact-checking process. In more detail, inspired by PRISMA (a methodology used for systematic literature reviews), we manually derive a list of 221 cognitive biases that may affect human assessors. Then, we select the 39 biases that might manifest during the fact-checking process, group them into categories, and describe each of them. Finally, we present a list of 11 countermeasures that can be adopted by researchers, practitioners, and organizations to limit the effect of the identified cognitive biases on the fact-checking activity.

1. Introduction

The amount of information generated daily by users on social media platforms, by news agencies, and on the web in general is rapidly increasing. As a result, organizations that fact-check information items are overwhelmed by the amount of material that requires inspection (Porter, 2020). Moreover, the processes and pipelines currently implemented by fact-checking organizations cannot cope with this trend, because they are not designed to scale up to the massive amount of information that requires attention. The problem is so serious that the director-general of the World Health Organization (WHO) used the neologism “infodemic” to refer to misinformation during the 2020 edition of the Munich Security Conference,¹ while the COVID-19 pandemic was still ongoing (Alam et al., 2021).

∗ Corresponding author.

Michael Soprano, LinkedIn: michaelsoprano (M. Soprano), David La Barbera, LinkedIn: david-la-barbera-8a1a646a (D. La Barbera), Davide Ceolin, LinkedIn: davideceolin (D. Ceolin), Damiano Spina, LinkedIn: damianospina (D. Spina), Gianluca Demartini, LinkedIn: gianlucademartini (G. Demartini), Stefano Mizzaro, LinkedIn: stefano-mizzaro-1234082 (S. Mizzaro).

E-mail addresses: [email protected] (M. Soprano), [email protected] (K. Roitero), [email protected] (D. La Barbera), [email protected] (D. Ceolin), [email protected] (D. Spina), [email protected] (G. Demartini), [email protected] (S. Mizzaro).

URLs: http://www.michaelsoprano.com (M. Soprano), http://www.kevinroitero.com (K. Roitero), http://www.cwi.nl/en/people/davide-ceolin/ (D. Ceolin), http://www.damianospina.com (D. Spina), http://www.gianlucademartini.net (G. Demartini), http://users.dimi.uniud.it/~stefano.mizzaro/ (S. Mizzaro).

https://doi.org/10.1016/j.ipm.2024.103672

Received 10 July 2023; Received in revised form 13 December 2023; Accepted 22 January 2024

People who perform fact-checking can be subject to systematic errors that harm the information assessment process. This holds whether they are experts (Drobnic Holan, 2018; FactCheck.org, 2020; The RMIT ABC Fact Check Team, 2021) or crowd workers (Demartini, Mizzaro, & Spina, 2020; Roitero, Soprano, Fan, et al., 2020; Roitero, Soprano, Portelli, et al., 2020; Soprano et al., 2021). Systematic errors due to the limits of human cognition are called cognitive biases. According to the Oxford Dictionary, a general definition of bias is “a strong feeling in favor of or against one group of people, or one side in an argument, often not based on fair judgment”. There are different types of biases: cognitive biases, conflicts of interest, statistical biases, and prejudices. In this paper, we focus on cognitive biases. They are systematic biases, due to limits in human cognition, that can unintentionally affect the effectiveness of fact-checking processes.

From a psychological point of view, evolutionary studies suggest that humans developed biases to minimize the cost of making mistakes in the long run, as biases can improve decision-making processes (Johnson, Blumstein, Fowler, & Haselton, 2013). Error management studies aim at explaining how humans make decisions. According to these studies, cognitive biases, defined as “the ones that skew our assessments away from an objective perception of information” (Johnson et al., 2013), have been favored by natural selection in order to minimize whichever error carried the greatest cost (Haselton & Nettle, 2006; Nesse, 2001, 2005). Decision-making processes are often complex, and we are not always able to keep our estimates of the error probabilities involved up to date and statistically correct. Natural selection might therefore have favored cognitive biases to simplify the overall decision process (Cosmides & Tooby, 1994; Todd & Gigerenzer, 2000). In summary, cognitive biases evolved because of the intrinsic limitations of humans when making decisions.

Cognitive biases play a major role in the way (mis)information and verified content are consumed (Draws et al., 2022). Different debiasing strategies have been proposed in relation to cognitive factors such as people’s memory for misinformation (Lewandowsky, Ecker, Seifert, Schwarz, & Cook, 2012). Debiasing is not the only possible choice, however: other proposals aim at managing bias instead of removing it (Demartini, Roitero, & Mizzaro, 2021).

Biases can have far-reaching consequences. In fact-checking, machine learning is an interesting potential solution to the obvious scalability issues of the approach based on human experts (Ciampaglia et al., 2015; Liu & Wu, 2020; Wang, 2017; Weiss & Taskar, 2010). Biases, however, do not only interfere with the human fact-checking activity in practice. They also create issues for automatic approaches, because they creep into the datasets that are then used to train machine learning systems, in some cases contributing to blatant errors. A well-known example is the “Gorilla Case” (Simonite, 2018), in which an image recognition algorithm misclassified black people as “gorillas”. Since biases introduce errors due to systematic limits in human cognition, and these limits are potentially shared among several individuals, bias prevention, management, and/or control are fundamental.

The contributions of this work are fourfold. First, we propose a comprehensive list of 221 cognitive biases by reviewing the available related literature. Next, we extract the subset of 39 biases that may manifest during the fact-checking activity. Furthermore, we categorize them and propose a list of countermeasures to limit their impact. Lastly, we outline the building blocks of a bias-aware assessment pipeline for fact-checking, with each countermeasure mapped to a constituent block of the pipeline.

The remainder of the paper is structured as follows. Section 2 provides background on the fact-checking process and on cognitive biases. Section 3 discusses the aims and motivations of our work, as well as its relations to and differences from other existing reviews. Section 4 describes the methodology used in this paper. We then present the list of cognitive biases selected in this work as relevant to fact-checking (Section 5) and their categorization (Section 6). Next, we propose a list of countermeasures (Section 7) and the building blocks of a bias-aware assessment pipeline for fact-checking (Section 8). Finally, we discuss the limitations of our approach (Section 9) and present the implications of the work, future work, and a summary of the contributions (Section 10).

2. Background

To contextualize our research within the existing body of work, Section 2.1 describes the fact-checking process by presenting how some notable organizations work in practice. Section 2.2 then examines the methodologies that researchers have developed, using crowdsourcing and machine learning, to evaluate the truthfulness of information items (i.e., the core process of the fact-checking activity). Finally, Section 2.3 recalls works on cognitive biases and briefly discusses existing literature reviews, comparing them with the scope of our paper.

2.1. The process of fact-checking

Fact-checking is a complex process that involves several activities (Mena, 2019; Vlachos & Riedel, 2014). An abstract and general fact-checking pipeline might include the following steps, not necessarily in this order:
- check-worthiness, i.e., ensuring that an information item is of great interest to a possibly large audience;
- evidence retrieval, i.e., retrieving the evidence needed to fact-check the information item;
- truthfulness assessment;
- discussion among the assessors to reach a consensus;
- assignment and publication of the final truthfulness score for the inspected information item.

With respect to this pipeline, this paper focuses mainly on the truthfulness assessment step.

¹ https://www.who.int/director-general/speeches/detail/munich-security-conference

It is also useful to briefly examine the fact-checking processes adopted in practice by three well-known organizations: PolitiFact, RMIT ABC Fact Check, and FactCheck.org. All three are verified signatories of the International Fact-Checking Network (IFCN, https://www.poynter.org/ifcn/), and they set a de facto standard for the pipeline required to perform fact-checking at scale.

PolitiFact² fact-checks information items by US politicians. Drobnic Holan (2018) details its process as follows.

For the truthfulness assessment step, the reporter in charge of the fact-check proposes a rating on a six-level ordinal scale: Pants On Fire, False, Mostly False, Half True, Mostly True, and True. This assessment is passed to an editor. The reporter and the editor work together to reach a consensus on the proposed rating, adding clarifications and details if needed.

The information item is then shown to two additional editors. They review the work of the editor and the reporter by answering four questions (Drobnic Holan, 2018, Section “How We Determine Truth-O-Meter Ratings”):
(1) Is the information item literally true?
(2) Is there another way to read the information item? Is the information item open to interpretation?
(3) Did the speaker provide evidence? Did the speaker prove the information item to be true?
(4) How have we handled similar information items in the past? What is PolitiFact’s jurisprudence?

The definitive rating of the item is then decided by majority vote over the ratings submitted by the editors. Final edits are made to ensure that everything is consistent, and the report is published.
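The final majority-vote step can be sketched as follows. The sketch rests on two hypothetical assumptions: each editor submits exactly one label from the six-level scale, and a label is adopted only when more than half of the editors chose it. The function name `majority_rating` and the choice to return `None` when no majority exists are illustrative. They are not part of PolitiFact's documented procedure (Drobnic Holan, 2018).

```python
from collections import Counter
from typing import Optional, Sequence

# PolitiFact's six-level Truth-O-Meter scale, from least to most truthful.
TRUTH_O_METER = ("Pants On Fire", "False", "Mostly False",
                 "Half True", "Mostly True", "True")


def majority_rating(votes: Sequence[str]) -> Optional[str]:
    """Return the rating chosen by a strict majority of editors, else None.

    Hypothetical helper: None stands for "no majority", a case that would
    presumably require further discussion among the editors.
    """
    if not votes:
        return None
    for vote in votes:
        if vote not in TRUTH_O_METER:
            raise ValueError(f"unknown rating: {vote!r}")
    rating, count = Counter(votes).most_common(1)[0]
    # A strict majority means more than half of all submitted votes.
    return rating if count > len(votes) / 2 else None


print(majority_rating(["Mostly False", "Half True", "Mostly False"]))  # Mostly False
print(majority_rating(["False", "Half True", "True"]))                 # None
```

With three editors, any label chosen by at least two of them wins. Three distinct labels produce no majority.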

RMIT ABC Fact Check³ focuses on information items made by Australian public figures, advocacy groups, and institutions. Its process works as follows (see The RMIT ABC Fact Check Team, 2021 for a more detailed description).

The information item to be checked must first be approved by the director, who assesses its check-worthiness. One of the researchers at RMIT ABC Fact Check then contacts experts in the field, and occasionally the claimant, to retrieve evidence and obtain data that can help the fact-check. The researcher writes up the data and information collected, and an expert fact-checker inspects and reviews this material. At this stage, the expert fact-checker identifies possible problems and questions the researcher about anything they might have missed (e.g., missing or non-exhaustive evidence). The expert fact-checker and the researcher revise the draft until the fact-checker is satisfied with the outcome. The whole team then discusses the final verdict for the item.

The final verdict is expressed on a fine-grained categorical scale, which is used in their publications. For documentation purposes, the verdict is further mapped onto a three-level ordinal scale defining its truthfulness value: False, In Between, True. This choice is based on previous work suggesting that a three-level scale may be the most suitable.

FactCheck.org⁴ fact-checks information items dealing with US politicians. Its process works as follows (see FactCheck.org, 2020 for a more detailed description).

For the check-worthiness step, FactCheck.org selects items made by the president of the United States and by other important politicians. It focuses on statements by presidential candidates during public appearances, on top Senate races, and on actions in Congress. For evidence retrieval, it searches through video transcripts and articles to identify possibly misleading or false information items. It then asks the organization or person who made the item to prove its truthfulness by providing supporting documentation. If no evidence is provided, FactCheck.org searches trusted sources for evidence that confirms or refutes the item. Finally, the verdict about the information item is published without a fine-grained truthfulness label.

At FactCheck.org, each item is in most cases reviewed by four people (FactCheck.org, 2020, Section “Editing”):
- a line editor, who reviews the content;
- a copy editor, who reviews style and grammar;
- a fact-checker, who is in charge of the fact-checking process;
- the director of the Annenberg Public Policy Center.

In summary, the fact-checking processes of the three organizations share both similarities and differences, as described in Table 1. For each organization, the table reports the country of the information items, the claimants considered, the truthfulness scale used, and the number of expert fact-checkers involved in the process.

All three organizations are committed to upholding the principles of the International Fact-Checking Network (IFCN) and focus on checking information items made by politicians and/or public figures. However, they differ in the specific processes followed for evidence retrieval, truthfulness assessment, and rating of the items:
- PolitiFact focuses on US politicians. It uses a six-level rating scale and a consensus-based process among editors and reporters to determine the final rating.
- RMIT ABC Fact Check targets Australian public figures. It relies on field experts and a collaborative review process in which fact-checking is performed by three experts and the whole team decides the final verdict.
- FactCheck.org also concentrates on US politicians. It seeks evidence from claimants and trusted sources, and a four-person team reviews each information item.

Despite these differences, all three organizations demonstrate a strong commitment to accuracy, transparency, and thoroughness in their fact-checking processes, and they provide valuable resources that give the public access to reliable information on political items. Moreover, since all three organizations rely exclusively on human judgment for their evaluations, their processes are potentially susceptible to the cognitive biases addressed in this paper.

2.2. Fact-checking: Crowdsourcing and machine learning

The process of fact-checking, as implemented by these organizations, has the clear limitation of not being scalable. It requires experts and, being rather time-consuming, cannot cope with the huge amount of information shared online every day (Das, Liu, Kovatchev, & Lease, 2023; Demartini et al., 2020; Spina et al., 2023). For this reason, the misinformation problem has gained attention among researchers, who have tried to develop alternative methodologies to help identify online information and evaluate its truthfulness. To this end, two main approaches have been proposed: crowdsourcing and machine learning.

² https://politifact.com/

³ https://www.abc.net.au/news/factcheck

⁴ https://www.factcheck.org/

Table 1
Statistics of the fact-checking process as performed by different organizations.

| Organization | Country | Claimants | Truthfulness scale | Experts involved |
|---|---|---|---|---|
| PolitiFact | USA | Politicians | Pants On Fire, False, Mostly False, Half True, Mostly True, True | 4 |
| RMIT ABC Fact Check | Australia | Public Figures, Advocacy Groups, Institutions | False, In Between, True | 3 |
| FactCheck.org | USA | Politicians | / (no fine-grained label) | 4 |

Fact-checking has been studied with the help of crowdsourcing techniques, which have been leveraged to obtain reliable labels at scale (Demartini, Difallah, Gadiraju, & Catasta, 2017; Demartini et al., 2020). The main contributions include the following:
- Draws, Rieger, Inel, Gadiraju, and Tintarev (2021) focused on debiasing strategies for crowdsourcing. They proposed a methodology that researchers and practitioners can follow to improve their task design and to report the limitations of the collected data.
- Eickhoff (2018) gathered labels to study and analyze assessor bias, finding that such biases have major effects on annotation quality.
- Li et al. (2020) worked in the setting of Twitter topic models.
- La Barbera, Roitero, Demartini, Mizzaro, and Spina (2020), Roitero, Demartini, Mizzaro, and Spina (2018), and Roitero, Soprano, Fan, et al. (2020) collected thousands of truthfulness labels, focusing on crowd workers’ effectiveness, agreement, and bias. They show that crowdsourced labels correlate with expert judgments, and that workers’ backgrounds and biases (e.g., political orientation) have a major impact on label quality.
- Roitero, Soprano, Portelli, et al. (2020) worked in a similar setting, focusing on information items related to the COVID-19 pandemic.

Other works focused on the credibility of, and trust in, information sources (Bhuiyan, Zhang, Sehat, & Mitra, 2020; Epstein, Pennycook, & Rand, 2020), and on echo chambers and filter bubbles (Eady, Nagler, Guess, Zilinsky, & Tucker, 2019; Nguyen, 2020).
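The finding that crowdsourced labels correlate with expert judgments can be made concrete with a small sketch. The sketch assumes, hypothetically, that each item's crowd labels are aggregated by their mean and that agreement with experts is measured with Spearman's rank correlation. The cited studies may use other aggregation functions and agreement measures. The item identifiers and all labels are toy values, not data from any of the cited works.

```python
from statistics import mean
from typing import Dict, List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 (smallest) upwards; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` over the run of values tied with the one at `start`.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # mean of the 1-based positions start..end
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant inputs")
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: the Pearson correlation of the (average) ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Toy labels on a 0-5 scale (0 = Pants On Fire, ..., 5 = True); not real data.
crowd: Dict[str, List[int]] = {
    "item-1": [0, 1, 0],
    "item-2": [2, 3, 2],
    "item-3": [4, 5, 5],
    "item-4": [3, 3, 4],
}
expert: Dict[str, int] = {"item-1": 0, "item-2": 2, "item-3": 5, "item-4": 1}

items = sorted(crowd)
crowd_scores = [mean(crowd[i]) for i in items]  # aggregate workers per item
expert_scores = [expert[i] for i in items]
print(round(spearman(crowd_scores, expert_scores), 3))  # 0.8
```

Ranking both score lists before correlating them makes the comparison depend only on the ordering of items. This suits ordinal truthfulness scales.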

Besides human-powered systems, other lines of research investigated the use of automatic machine learning techniques for fact-checking (Hassan et al., 2015; Thorne & Vlachos, 2018). These techniques train a machine learning algorithm on a labeled dataset, which is usually built by human assessors. Any biases present in the dataset might therefore affect the trained model (Caliskan, Bryson, & Narayanan, 2017). For this reason, we briefly report related work on machine learning techniques to cope with disinformation.

One set of works focused on the bias of the datasets used to train machine learning models:
- Vlachos and Riedel (2014) defined the setting and the challenges involved in creating a benchmark dataset for fact-checking.
- Ferreira and Vlachos (2016) described a dataset for stance classification.
- Wang (2017) created the LIAR dataset, which contains a large collection of fact-checked information items.
- Liu and Wu (2020) used a deep neural network architecture to detect misinformation and stop its spread before it becomes viral.

Another line of research focused not on the data but on the algorithms that can be employed to build a fully automatic fact-checking methodology:
- Weiss and Taskar (2010) developed a method based on adversarial networks.
- Ciampaglia et al. (2015) used an approach based on knowledge networks.
- Alhindi, Petridis, and Muresan (2018) leveraged justification modeling.
- Reis, Correia, Murai, Veloso, and Benevenuto (2019) and Wu, Rao, Yang, Wang, and Nazir (2020) discussed explainable machine learning algorithms for fake news detection.
- Evans, Edge, Larson, and White (2020) and Oeldorf-Hirsch and DeVoss (2020) considered information sources and their metadata.

2.3. Cognitive biases

The truthfulness assessment step of the fact-checking pipeline is driven by human judgments, either directly or through human-labeled data used to train machine learning models. It is therefore prone to cognitive biases. According to the literature, more than 180 cognitive biases exist (Caverni, Fabre, & Gonzalez, 1990; Haselton, Nettle, & Murray, 2015; Hilbert, 2012; Kahneman & Frederick, 2002). How to conceptualize or classify these biases is still debated (Gigerenzer & Selten, 2008; Hilbert, 2012). Nevertheless, many reproducible studies have confirmed the presence of bias in many domains (Thomas, 2018), for example in information seeking and retrieval (Azzopardi, 2021). Biases are often classified by their generative mechanism (Hilbert, 2012). Oeberst and Imhoff (2023), for instance, argue that multiple biases can be generated by the same fundamental belief. Research also agrees that multiple biases can occur at the same time (MacCoun, 1998; Nickerson, 1998).

Cognitive biases are well known to affect reasoning, decision-making, and human behavior in general. Their effect has been widely studied in multiple disciplines:
- Barnes (1984), Das and Teng (1999), Ehrlinger, Readinger, and Kim (2016), Hilbert (2012), and Swets, Dawes, and Monahan (2000) studied the effect of cognitive biases on decision processes and planning.
- Fisher and Statman (2000) focused on market forecasting.
- Draws et al. (2021) and Eickhoff (2018) analyzed cognitive biases in crowdsourcing.
- Baeza-Yates presented an overview of biases on the web (Baeza-Yates, 2018) and in search and recommendation systems (Baeza-Yates, 2020).
- Sylvia Chou, Gaysynsky, and Cappella (2020) studied the role of cognitive biases in social media platforms.
- Kiesel, Spina, Wachsmuth, and Stein (2021) studied biases related to presentation format in conversational interfaces for argument search.

All these works reported that bias is strongly correlated with data quality and with the effectiveness of the systems and models they describe.

Among studies of cognitive biases, one line of research has focused on the role of specific cognitive biases in misinformation.

Park, Park, and Kang (2021) discuss the fact-checking activity and argue that its effectiveness varies with multiple factors. They illustrate how statements that are neither entirely false nor entirely true, which often lead to borderline judgments, can trigger unexpected cognitive biases in human perception. Zhou and Zafarani (2020) survey and evaluate misinformation detection methods from four perspectives. They argue that people come to trust fake news when it confirms their preexisting political biases (i.e., particular cognitive biases), and they accordingly provide resources to evaluate the partisanship of news publishers. In a recent study, Mastroianni and Gilbert (2023) show how biased exposure to information and biased memory for information make people believe that morality has been declining for decades.

Zollo (2019) studied how information spreads across communities on Facebook, focusing on echo chambers and confirmation bias. That work provides empirical evidence of echo chambers and filter bubbles and shows that confirmation bias plays a crucial role in content selection and diffusion (Cinelli, Cresci, Quattrociocchi, Tesconi, & Zola, 2022; Cinelli, De Francisci Morales, Galeazzi, Quattrociocchi, & Starnini, 2021; Cinelli et al., 2020; Del Vicario et al., 2016; Zollo & Quattrociocchi, 2018). Wesslen et al. (2019) explored the role of visual anchors in decision-making about Twitter misinformation and found that these anchors significantly affect users’ activity, speed, confidence, and accuracy. Karduni et al. (2018) focused on uncertainty in truthfulness assessment when employing visual analysis. Acerbi (2019) analyzed a cognitive attraction phenomenon in online misinformation and identified a set of cognitive features that contribute to its spread.

Chou, Gaysynsky, and Vanderpool (2021) investigated factors, such as biases, that drive misinformation sharing and acceptance in the context of COVID-19. Traberg and Van Der Linden (2022) investigated the role of perceived source credibility in mitigating the effects of political bias. Zhou and Shen (2022) considered confirmation bias in misinformation about climate change. Ceci and Williams (2020) propose “adversarial fact-checking”, i.e., pairing fact-checkers from different sociopolitical backgrounds, as a mechanism to address biases that may occur when verifying political claims.

While the works cited above focus on specific aspects, some literature reviews address cognitive biases and the overall problem of misinformation spreading. Ruffo, Semeraro, Giachanou, and Rosso (2023) discuss the most important psychological effects that provide provisional explanations for empirical observations about how misinformation spreads on social networks. Wang, McKee, Torbica, and Stuckler (2019) review works specifically focused on the spread of health-related misinformation, but they explicitly choose to avoid the extensive literature on cognitive biases. Tucker et al. (2018) review research findings on cognitive biases in political discourse, with a focus on the detection of computational propaganda.

More generally, the literature includes recent reviews of cognitive biases in fields of study unrelated to misinformation and fact-checking. Armstrong et al. (2023) specifically explore biases that may affect surgical events and discuss strategies to mitigate their effects. Similarly, Vyas, Murphy, and Greenberg (2023) investigate biases affecting military personnel. Eberhard (2023) reviews strategies to mitigate the effects of cognitive biases resulting from visualization strategies on judgment and decision-making. Additionally, Gomroki, Behzadi, Fattahi, and Fadardi (2023) collect data on cognitive biases in information retrieval.

3. Aims & motivations

Various studies have addressed the challenges that cognitive biases pose to the fact-checking activity, each focusing on different aspects of fact-checking. However, no review has yet examined comprehensively how cognitive biases specifically influence the fact-checking process.

Existing literature reviews have concentrated primarily on technical and procedural aspects of fact-checking (Zhou & Zafarani, 2020) and address cognitive biases only partially:
- Oeberst and Imhoff (2023) propose generative mechanisms for subsets of cognitive biases.
- Walter, Cohen, Lance Holbert, and Morag (2020) address partisanship-related biases exclusively.
- Ruffo et al. (2023) review only the biases they deem most important.
- Wang et al. (2019) avoid the topic completely.
- Tucker et al. (2018) focus only on the context of political conversations.

Other recent reviews of cognitive biases contribute to other fields of study (Armstrong et al., 2023; Eberhard, 2023; Gomroki et al., 2023; Vyas et al., 2023). Existing reviews thus often overlook the underlying set of cognitive biases that can significantly affect the outcomes of the fact-checking activity.

Our review aims to fill these gaps. We believe it is necessary because cognitive biases are inherent in human judgments and, as a consequence, in the datasets used to train machine learning models for the fact-checking domain. These biases can subtly but profoundly influence the effectiveness and reliability of fact-checking processes. By identifying and understanding them, we can develop more robust and less biased fact-checking methodologies, whether human-based or automatic. This approach not only improves the accuracy of fact-checking but also contributes to the broader discussion on the reliability and trustworthiness of information.

Our primary motivation is to provide a comprehensive and systematic investigation of the cognitive biases that may manifest during the fact-checking process and compromise its effectiveness in real-world scenarios. The purpose of this review is therefore fourfold:
(i) to systematically identify the cognitive biases that are relevant to the fact-checking process;
(ii) to categorize these biases and give real-world examples that illustrate their impact on fact-checking;
(iii) to propose countermeasures that can help mitigate the risk of cognitive biases manifesting in a fact-checking context;
(iv) to provide the building blocks of a bias-aware fact-checking pipeline that helps minimize this risk.

To systematically collect and report biases, we adopt PRISMA, a methodology well grounded in the literature. Specifically, we aim to retrieve a single formulation for each bias considered and, where possible, a single reference that supports its framing in a fact-checking scenario. Our goal, therefore, is not a comprehensive list of references but a comprehensive list of biases.

In summary, our work aims to characterize the cognitive biases that may manifest during the fact-checking activity, offering a novel perspective that complements and extends the existing literature. Our proposal is not conclusive and should be regarded as a starting point for further investigation in this area. Nevertheless, we aim to provide valuable insights and practical guidance for researchers, practitioners, and policymakers working in fact-checking and information assessment. Furthermore, to our knowledge, this is the first work to provide a comprehensive set of countermeasures to prevent cognitive biases in the fact-checking process.

4. Methodology

We first introduce the PRISMA methodology, an approach to conducting high-quality systematic reviews and meta-analyses. We then describe how we adopt it to find existing literature about cognitive biases. The process has three stages:
1. Following our search strategy, we explore various information sources to find literature that addresses one or more cognitive biases.
2. From the literature found, we perform data collection by extracting one or more biases according to our eligibility criteria.
3. We filter the whole list of cognitive biases according to our selection process, retaining only those that might manifest while performing the fact-checking activity.

4.1. The PRISMA methodology

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) is an evidence-based minimum set of items for reporting in systematic reviews and meta-analyses. Moher, Liberati, Tetzlaff, and Altman (2009) originally proposed the approach in 2009 as a reformulation of the QUOROM guidelines (Moher et al., 1999). Page, McKenzie, et al. (2021) later proposed an updated version known as “The PRISMA 2020 Statement”. In the following, we describe and refer to this formulation.

PRISMA is a transparent approach that has been widely adopted in various research fields. It aims to help researchers conduct high-quality systematic reviews and meta-analyses. Its clear and structured framework facilitates the identification, assessment, and synthesis of relevant data, helping to ensure that the review process is rigorous, replicable, and unbiased. At its core, PRISMA consists of a checklist⁵ and a flow diagram,⁶ both publicly available.

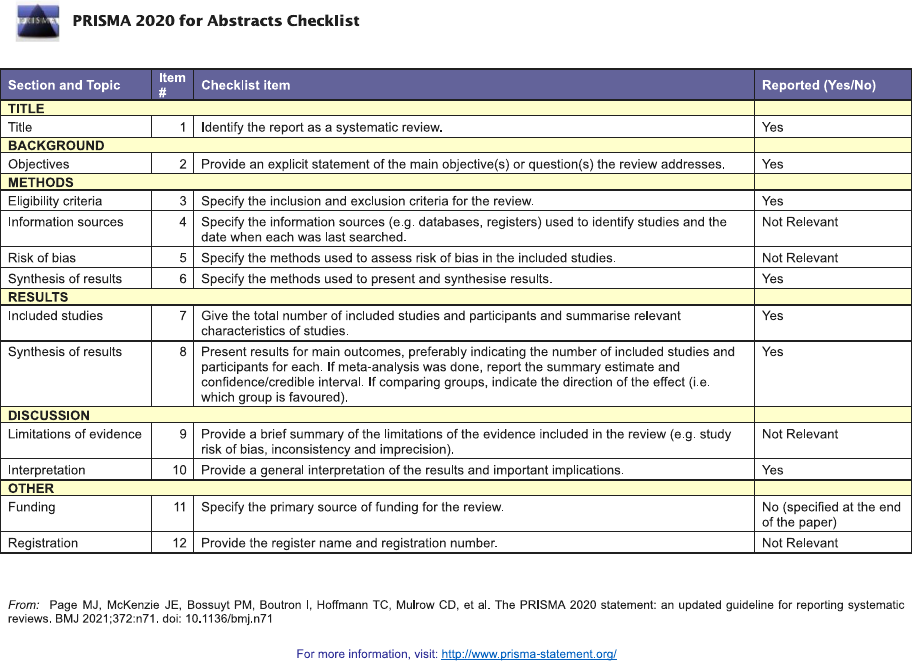

The PRISMA checklist is composed of 27 items addressing the introduction, methods, results, and discussion sections of a systematic review report; they are summarized in Table 2. Items 10, 13, 16, 20, 23, and 24 are further split into sub-items (not shown in the table). The flow diagram, on the other hand, depicts the flow of information through the different phases of a systematic review. It maps out the number of records identified, included, and excluded, together with the reasons for exclusion. It is available in two forms, depending on whether the review is a new contribution or an update of an existing one. Since the review we propose is a new contribution, we rely on the former version, reported in Fig. 1. Comparing the figure with Table 2 shows that the diagram provides further detail mostly on items 5 to 10 and item 16 of the checklist.

The checklist and the diagram should be used in accordance with the “Explanation and Elaboration Document” (Page, Moher, et al., 2021), which aims to enhance the use, understanding, and dissemination of the PRISMA 2020 statement. Several extensions⁷ have been developed to facilitate the reporting of different types or aspects of systematic reviews. The “PRISMA 2020 Extension for Abstracts”, published together with the overall statement (Page, McKenzie, et al., 2021), is a 12-item checklist that gives researchers a framework for condensing their systematic reviews into abstracts for journals or conferences. To summarize, the review proposed in this paper relies on four main PRISMA elements: (i) the checklist, (ii) the flow diagram, (iii) the checklist for abstracts, and (iv) the explanation and elaboration document of the PRISMA 2020 statement (Page, McKenzie, et al., 2021; Page, Moher, et al., 2021).

Given that our goal is to identify, from the whole set of cognitive biases described in the literature, those that might manifest in the fact-checking process, rather than to find all the research papers that address cognitive biases to some extent (see Section 3), not all the items of the PRISMA checklist need to be addressed. However, we recognize the importance of adhering to the original guidelines as much as possible. Thus, we start by detailing the checklist items that we did not address, either because they are not relevant to the goal of finding biases or simply because they are not needed. Specifically, we do not: compute effect measures (Item 12); study heterogeneity and robustness of the synthesized results (Item 13); present assessments of risk of bias for each included study (Item 18); perform statistical analyses (Item 20); present assessments of risk of bias due to missing results for each synthesis (Item 21); present assessments of certainty in the body of evidence for each outcome (Item 22); or provide registration information about the review (Item 24).
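As a minimal bookkeeping sketch, the omitted item numbers listed above can be cross-checked against the 27 checklist items of Table 2 to list what remains in scope. The item titles are taken from Table 2, while the names `PRISMA_ITEMS`, `OMITTED_ITEMS`, and `addressed_items` are hypothetical. Items with sub-items (such as Item 13) are treated as a whole here, which is a simplification; a finer-grained version could track sub-items separately.

```python
# PRISMA 2020 checklist items as listed in Table 2 (sub-items not shown).
PRISMA_ITEMS = {
    1: "Title", 2: "Abstract", 3: "Rationale", 4: "Objectives",
    5: "Eligibility Criteria", 6: "Information Sources", 7: "Search Strategy",
    8: "Selection Process", 9: "Data Collection Process", 10: "Data Items",
    11: "Study Risk of Bias Assessment", 12: "Effect Measures",
    13: "Synthesis Methods", 14: "Reporting Bias Assessment",
    15: "Certainty Assessment", 16: "Study Selection",
    17: "Study Characteristics", 18: "Risk of Bias in Studies",
    19: "Results of Individual Studies", 20: "Results of Syntheses",
    21: "Reporting Biases", 22: "Certainty of Evidence", 23: "Discussion",
    24: "Registration and Protocol", 25: "Support",
    26: "Competing Interests", 27: "Availability of Data",
}

# Items this review does not address, as enumerated in the text above.
OMITTED_ITEMS = {12, 13, 18, 20, 21, 22, 24}


def addressed_items(items, omitted):
    """Return the (number, title) pairs still in scope, in checklist order."""
    unknown = omitted - set(items)
    if unknown:
        raise ValueError(f"unknown checklist items: {sorted(unknown)}")
    return [(number, items[number]) for number in sorted(items) if number not in omitted]


if __name__ == "__main__":
    kept = addressed_items(PRISMA_ITEMS, OMITTED_ITEMS)
    print(f"{len(kept)} of {len(PRISMA_ITEMS)} items addressed")
    for number, title in kept:
        print(f"  Item {number}: {title}")
```

Running the script prints "20 of 27 items addressed" followed by the remaining items; the explicit check for unknown item numbers guards against typos when the omitted set is edited by hand.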

Most of the work needed to adopt PRISMA concerns adapting the inclusion and exclusion criteria that would typically be applied to research articles in a literature review, as well as the collection and selection process for the cognitive biases presented in the following; that is, how we approach items 5–10 and 13 reported in Table 2 and how we perform the processes described by the flow diagram shown in Fig. 1. This tailored adaptation allows us to follow PRISMA’s structured approach, which involves predefined eligibility criteria, search strategies, and data extraction and helps minimize the risk of errors in our review process, even though our final outcome (a list of biases rather than a list of studies) differs slightly from that of a typical review. Section 4.2 describes our eligibility criteria, information sources, and search strategy, while Section 4.3 details the data collection and selection processes. The full “PRISMA Abstract Checklist” and “PRISMA Checklist” are reported in Appendix A.

⁵ http://prisma-statement.org/PRISMAStatement/Checklist

⁶ http://prisma-statement.org/PRISMAStatement/FlowDiagram

⁷ http://prisma-statement.org/Extensions/

Table 2

The 27 items of the PRISMA checklist.

| Item # | Section/Topic |
|---|---|
| 1 | Title |
| 2 | Abstract |
| 3 | Rationale |
| 4 | Objectives |
| 5 | Eligibility Criteria |
| 6 | Information Sources |
| 7 | Search Strategy |
| 8 | Selection Process |
| 9 | Data Collection Process |
| 10 | Data Items |
| 11 | Study Risk of Bias Assessment |
| 12 | Effect Measures |
| 13 | Synthesis Methods |
| 14 | Reporting Bias Assessment |
| 15 | Certainty Assessment |
| 16 | Study Selection |
| 17 | Study Characteristics |
| 18 | Risk of Bias in Studies |
| 19 | Results of Individual Studies |
| 20 | Results of Syntheses |
| 21 | Reporting Biases |
| 22 | Certainty of Evidence |
| 23 | Discussion |
| 24 | Registration and Protocol |
| 25 | Support |
| 26 | Competing Interests |
| 27 | Availability of Data |

Source: Adapted from Page, McKenzie, et al. (2021).

Fig. 1. The PRISMA flow diagram for new systematic reviews which included searches of databases, registers and other sources.

Source: Adapted from Page, McKenzie, et al. (2021).

Fig. 2. Data collection and selection process.

4.2. Eligibility criteria, information sources, and search strategy

To build the list of cognitive biases that may manifest while performing the fact-checking process, we started by defining three eligibility criteria to decide whether to include a given bias:

1. Is the bias described in a peer-reviewed work in the literature?

2. Does the bias have a clear definition? Are its causes and domains of application explained?

3. Can we frame a fact-checking-related scenario that involves the bias, possibly supported by existing literature?

The PRISMA statement emphasizes the importance of striking a balance between precision and recall, depending on the goals of the review. With this in mind, we defined our eligibility criteria so as to identify all cognitive biases with the characteristics outlined above. Biases were excluded if they were not well-established, lacked a clear definition, or were not relevant to fact-checking.

Concerning information sources, more than 200 biases are listed on publicly available web pages. Specifically, Wikipedia lists 227 biases,⁸ while The Decision Lab, an applied research firm, provides a list of 103 biases.⁹ Other researchers have also compiled lists of cognitive biases. For instance, Dimara, Franconeri, Plaisant, Bezerianos, and Dragicevic (2020) identify 154 cognitive biases, and Hilbert (2012) lists 9. Our search strategy consisted of manual searches using each bias name as a query, followed by an exploration of the retrieved literature. The databases we used are Google Scholar, Scopus, PubMed, Wiley Online Library, ACL Anthology, and DBLP.

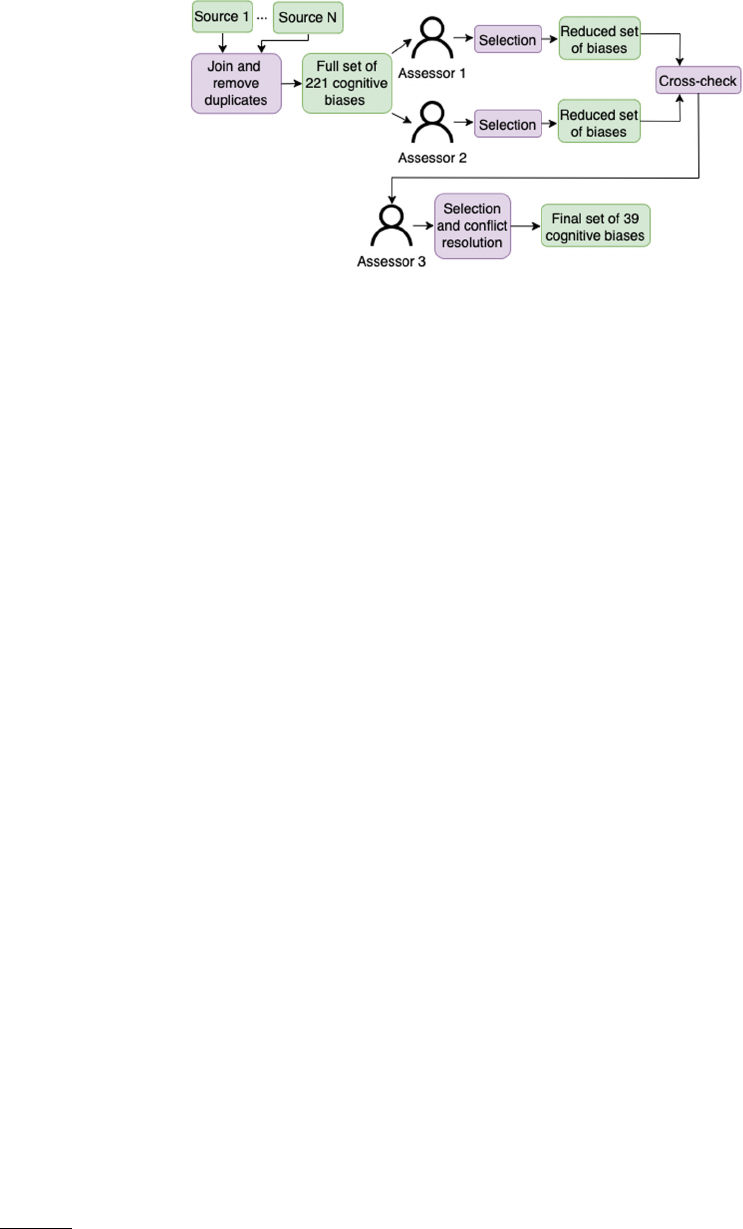

4.3. Data collection and selection processes

Our complete data collection and selection methodology, derived from the flow diagram shown in Fig. 1, is presented in Fig. 2. We consolidated the lists obtained from the information sources by removing duplicates and disambiguating each bias, obtaining a final set of 221 cognitive biases. Since a standard conceptualization or classification of biases is still debated (Gigerenzer & Selten, 2008; Hilbert, 2012), and since our objective is to maximize the number of cognitive biases identified, we keep two biases as separate entries even when the differences between them are subtle.
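The consolidation step above can be sketched as a merge of several source lists keyed on a normalized bias name, with a curated alias table standing in for disambiguation. This is a minimal illustration under assumptions: the normalization rule, the function names, and the `ALIASES` entries (taken from the “also called” pairs in Section 5) are hypothetical, and in the review itself disambiguation was performed manually rather than by code.

```python
import re

# Hypothetical alias table mapping alternative names to one canonical name.
# Entries mirror some of the "also called" pairs reported in Section 5.
ALIASES = {
    "forer effect": "barnum effect",
    "linda problem": "conjunction fallacy",
    "i-knew-it-all-along effect": "hindsight bias",
    "ostrich problem": "ostrich effect",
    "optimistic bias": "optimism bias",
}


def normalize(name: str) -> str:
    """Lowercase, unify curly apostrophes and en dashes, collapse whitespace, resolve aliases."""
    key = name.lower().replace("\u2019", "'").replace("\u2013", "-")
    key = re.sub(r"\s+", " ", key).strip()
    return ALIASES.get(key, key)


def consolidate(*sources):
    """Merge bias lists into a sorted list of unique canonical (lowercase) names."""
    return sorted({normalize(name) for source in sources for name in source})


wikipedia_like = ["Anchoring Effect", "Forer Effect", "Hindsight Bias"]
decision_lab_like = ["anchoring  effect", "Barnum Effect", "Linda Problem"]
print(consolidate(wikipedia_like, decision_lab_like))
```

The two toy lists are invented for illustration. Only true synonyms belong in the alias table: biases whose differences are subtle but real remain distinct keys, consistent with the choice of keeping them as separate entries.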

To select the cognitive biases that might manifest during the fact-checking process, we analyzed the 221 cognitive biases in our consolidated list one at a time, focusing on their definitions, causes, and domains of application, and evaluating each bias against the eligibility criteria described in Section 4.2. The selection process was carried out as follows. Two authors of the paper (referred to as Assessor 1 and Assessor 2) individually and independently examined the full set of 221 biases, analyzing each bias definition along with a collection of practical examples. They also justified the inclusion or exclusion of specific biases by citing examples of their manifestation in a fact-checking scenario. Then, Assessor 1 and Assessor 2 compared their respective lists, discussed any conflicts, and reached a consensus. To maximize recall, they chose to include a bias even if its likelihood of manifesting was relatively low. Finally, a third author (referred to as Assessor 3) reviewed the finalized, conflict-free list of biases to ensure its comprehensiveness and consistency. While the selection process inherently involves some degree of subjectivity, we believe that the combination of discussion, redundancy, and cross-checks makes our methodology robust and reliable for identifying relevant cognitive biases in the context of fact-checking.

⁸ https://en.wikipedia.org/wiki/List_of_cognitive_biases

⁹ https://thedecisionlab.com/biases/

The process detailed above led to a list of 39 cognitive biases that might manifest while performing fact-checking. To the best of our knowledge, this is the first time such a list of cognitive biases has been made public. It must be noted that the proposed list of cognitive biases should not be taken as final, but rather should be updated as new evidence on the effects of specific cognitive biases is published in the literature.
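The two-assessor procedure described above can be summarized as a small set computation: each assessor independently marks every bias as included or excluded, agreements are applied directly, and disagreements form the list of conflicts to be discussed. The sketch below is hypothetical: the names `Judgment`, `select_biases`, `conflicts`, and `resolve` are illustrative, the consensus discussion is modelled as a callback, the recall-oriented rule (include when in doubt) is encoded as its default, and the final review by Assessor 3 is not modelled.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Judgment:
    include: bool
    justification: str  # e.g., an example of the bias in a fact-checking scenario


def conflicts(assessor_1: Dict[str, Judgment], assessor_2: Dict[str, Judgment]) -> List[str]:
    """List the biases on which the two assessors disagree, to be discussed."""
    return sorted(n for n in assessor_1 if assessor_1[n].include != assessor_2[n].include)


def select_biases(
    assessor_1: Dict[str, Judgment],
    assessor_2: Dict[str, Judgment],
    resolve: Callable[[str, Judgment, Judgment], bool] = lambda name, a, b: True,
) -> Set[str]:
    """Combine two independent assessments of the same list of biases.

    Biases that both assessors include are kept and biases that both exclude are
    dropped; conflicts are settled by `resolve`, which by default includes the
    bias to favor recall.
    """
    if assessor_1.keys() != assessor_2.keys():
        raise ValueError("both assessors must judge the same set of biases")
    selected = set()
    for name in assessor_1:
        a, b = assessor_1[name], assessor_2[name]
        if a.include and b.include:
            selected.add(name)
        elif a.include != b.include and resolve(name, a, b):
            selected.add(name)
    return selected


a1 = {"bias_a": Judgment(True, "example 1"), "bias_b": Judgment(True, "example 2"),
      "bias_c": Judgment(False, "no scenario found")}
a2 = {"bias_a": Judgment(True, "example 3"), "bias_b": Judgment(False, "unclear"),
      "bias_c": Judgment(False, "not applicable")}
print(conflicts(a1, a2))              # ['bias_b']
print(sorted(select_biases(a1, a2)))  # ['bias_a', 'bias_b']
```

The bias names and justifications in the usage example are placeholders, not actual decisions from the review. Passing a stricter `resolve` (for instance, one that always returns `False`) would trade recall for precision.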

5. List of cognitive biases

In this section, we list in alphabetical order the 39 cognitive biases that might manifest while performing fact-checking, identified through the process described in Section 4. For each bias, we provide a reference to the literature that proposes a psychological explanation for it, a short description, and a situation in which the bias can manifest. When available, we also provide a fact-checking-related reference to support our framing. The full list of the 221 cognitive biases considered is reported in Appendix B.

B1. Affect Heuristic (Slovic, Finucane, Peters, & MacGregor, 2007). To rely on emotions, rather than concrete information, when making decisions. This allows one to reach conclusions quickly and easily, but can also distort reasoning and lead to suboptimal choices. This bias can manifest, for example, when the assessor likes the speaker of an information item.

B2. Anchoring Effect (Ni, Arnott, & Gao, 2019). To rely too heavily on one information item (typically the first one acquired) when making a decision. This bias can occur when the assessor inspects more than one source of information while assessing the truthfulness of an information item (Stubenvoll & Matthes, 2022).

B3. Attentional Bias (Bar-Haim, Lamy, Pergamin, Bakermans-Kranenburg, & van IJzendoorn, 2007). To have one’s perception distorted by recurring thoughts. This effect may occur when certain topics dominate news media over time, for example, for an assessor who is asked to evaluate the truthfulness of COVID-19-related information items (Lee & Lee, 2023).

B4. Authority Bias (also called Halo Effect) (Ries, 2006). To attribute greater accuracy to the opinion of an authority figure (regardless of its content) and to be more influenced by that opinion. This bias can manifest when the assessor is shown the speaker or organization behind the information item (Javdani & Chang, 2023).

B5. Automation Bias (Cummings, 2004). To over-rely on automated systems, to the point that their output may override correct decisions made by a human assessor. This bias can occur when the assessor is presented with the outcome of an automated system designed to help them make an informed decision on a given information item.

B6. Availability Cascade (Kuran & Sunstein, 1998). To attribute a higher plausibility to a belief just because it is public and more

“available”. This bias might occur when the information item presented to the assessor contains popular beliefs or popular

facts (Shatz, 2020a).

B7. Availability Heuristic (Groome & Eysenck, 2016). To overestimate the likelihood of events that are recent in memory. This bias can occur when assessors are evaluating recent information items (Hayibor & Wasieleski, 2009).

B8. Backfire Effect (Wood & Porter, 2019). To strengthen one’s original belief when presented with contrary evidence. This bias, which is based on a typical human reaction, can in principle always occur in fact-checking (Swire-Thompson, DeGutis, & Lazer, 2020).

B9. Bandwagon Effect (Kiss & Simonovits, 2014). To do (or believe) things because many other people do (or believe) the same. This bias can manifest, for example, when an assessor is asked to evaluate an information item related to recent or debated topics that receive high media coverage.

B10. Barnum Effect (also called Forer Effect) (Fichten & Sunerton, 1983). To fill the gaps in vague information by including

personal experiences or information. This bias can in principle always occur in fact-checking (Escola-Gascon, Dagnall,

Denovan, Drinkwater, & Diez-Bosch, 2023).

B11. Base Rate Fallacy (Welsh & Navarro, 2012). To focus on specific information that supports an information item while ignoring general (base-rate) information. This bias can manifest when assessors are asked to report the piece of text or the sources of information motivating their assessment.

B12. Belief Bias (Leighton & Sternberg, 2004). To attribute too much logical strength to someone’s argument because its conclusion is believable. This bias is most likely to occur when assessors are asked to evaluate factual information items (Porter & Wood, 2021).

B13. Choice-Supportive Bias (Kafaee, Marhamati, & Gharibzadeh, 2021). To remember one’s own choices as better than they actually were. This bias might occur when an assessor is asked to perform a task more than once, or to revise their judgment; it might prevent assessors from revising their initially submitted score (Lind, Visentini, Mäntylä, & Del Missier, 2017).

B14. Compassion Fade (Leighton & Sternberg, 2004). To act more compassionately towards a small group of victims than towards a large one. This bias can occur, for example, when the information item to be evaluated is related to minorities or tragic events (Thomas, Cary, Smith, Spears, & McGarty, 2018).

B15. Confirmation Bias (Nickerson, 1998). To search for, or focus on, information that confirms one’s prior beliefs. This bias can in principle always occur in fact-checking, for example, if the assessor receives a true information item that contradicts their prior beliefs, or if the assessor is asked to provide supporting evidence for their evaluation of such an information item.


B16. Conjunction Fallacy (also called Linda Problem) (Tversky & Kahneman, 1983). To assume that a conjunction of events is more probable than one of its constituent events. Tversky and Kahneman (1983) illustrated this effect with a well-known example. They presented the following description: “Linda is 31 years old, single, outspoken, and very bright. She majored in philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and also participated in antinuclear demonstrations.” They then asked which of the following alternatives is more probable: that (a) Linda is a bank teller (constituent event), or (b) Linda is a bank teller and is active in the feminist movement (conjunct event). Relying on intuitive judgments of probability, participants often concluded that the latter option was more likely than the former, even though a conjunction can never be more probable than either of its constituents. Thus, this bias can arise when an information item attributes multiple simultaneous occurrences to a specific causal event, a pattern often found in conspiracy theories related to COVID-19 (Wabnegger, Gremsl, & Schienle, 2021).

B17. Conservatism Bias (Luo, 2014). To revise one’s belief insufficiently when presented with new evidence. Note that this bias is different from B15. Confirmation Bias: the former concerns how much an existing belief is revised, while the latter concerns how new information is searched for and focused on. Like B15. Confirmation Bias, this bias can occur when the assessor is asked to provide supporting evidence for their evaluation of an information item.

B18. Consistency Bias (Clark & Kashima, 2007). To remember past events as resembling present behavior. This bias might occur when the assessor has evaluated an information item in the past and is asked to assess another information item from the same speaker and/or party.

B19. Courtesy Bias (Jones, 1963). To give a socially accepted answer to avoid offending anyone. This bias is often influenced by the assessor’s personal experience and background, as well as by the specific context in which they are providing their answer.

B20. Declinism (Etchells, 2015). To view the past with a positive connotation and the future with a negative one. This bias is related to the temporal aspects of the information items that the assessor is evaluating (Ralston, 2022).

B21. Dunning-Kruger Effect (Dunning, 2011). To overestimate one’s own competence due to a lack of knowledge and skill in a certain area. This bias typically affects non-experts, e.g., an untrained assessor who is overconfident about a given subject or topic. It is therefore more likely to manifest with non-expert assessors in general, such as crowd workers, than with expert fact-checkers such as journalists.

B22. Framing Effect (Malenka, Baron, Johansen, Wahrenberger, & Ross, 1993). To draw different conclusions from logically equivalent information items depending on the context, the alternatives, and the presentation method. This bias is likely to manifest, for example, when an information item describes a property with two complementary counterparts carrying positive and negative connotations, respectively, and presents it in terms of the share that belongs to one counterpart or the other (Lindgren et al., 2022). For example, suppose that a natural disaster kills 400 out of 1000 people, leaving 600 alive. An information item could describe this outcome by stating either that “60% of people survived the disaster” or that “40% of people did not survive the disaster”; that is, by framing the same fact positively or negatively.

B23. Fundamental Attribution Error (Harvey, Town, & Yarkin, 1981). To under-emphasize situational and environmental factors in explaining the behavior of an actor while over-emphasizing dispositional or personality factors. This bias is likely to manifest while fact-checking information items produced by politically aligned speakers or news outlets, as in the case of a politician who says that people are poor because they are lazy. Furthermore, its manifestation is influenced by age, so it may affect younger and older assessors differently (Follett & Hess, 2002).

B24. Google Effect (Brabazon, 2006). To forget information that can readily be found online using search engines. This bias can manifest when an assessor is required to use a search engine to find evidence and/or is asked to assess an information item at different points in time. For example, an assessor might forget part of an information item right after reading it, knowing that it can easily be retrieved again, if needed, by querying a search engine (Lurie & Mustafaraj, 2018).

B25. Hindsight Bias (also called “I-knew-it-all-along” Effect) (Roese & Vohs, 2012). To see past events as having been predictable at the time they happened. Since it may distort memories of what was known before an event, this bias may manifest when an assessor is required to evaluate an event after some time, or is asked to evaluate the same information item multiple times at different points in time (Hom, 2022).

B26. Hostile Attribution Bias (Pornari & Wood, 2010). To interpret someone’s behavior as hostile even when it is not. This bias can occur in assessors who have experienced discrimination from authority figures or the dominant social group. For example, a speaker from an under-represented ethnic group may remark that they perceive something as offensive, thus fueling hostility (Bushman, 2016).

B27. Illusion of Validity (Einhorn & Hogarth, 1978). To overestimate the accuracy of one’s own judgment when the available information is consistent. This bias can occur, for example, when an assessor works with a set of information items from a specific person that were previously found to be true, and predicts that subsequent items from the same person will have the same outcome.

B28. Illusory Correlation (Hamilton & Gifford, 1976). To perceive a correlation between uncorrelated events. This bias can manifest when an assessor works on multiple information items in a single task and perceives nonexistent patterns between them.

B29. Illusory Truth Effect (Newman, Schwarz, & Ly, 2020). To perceive an information item as true if it is easy to process or has been stated multiple times. This bias can manifest, for example, when straightforward or naive gold questions are used in a task to check for malicious assessors (Brashier, Eliseev, & Marsh, 2020).

B30. Ingroup Bias (Mullen, Brown, & Smith, 1992). To favor people belonging to one’s own group. This bias can manifest, for example, when assessors are required to work on information items related to their own political party, city, etc. (Shin & Thorson, 2017).


B31. Just-World Hypothesis (Lerner & Miller, 1978). To believe that the world is just. This bias can manifest, for example, when the assessor is working with information items related to major political institutions, as people who believe in a just world tend to view such institutions more favorably (Rubin & Peplau, 1975).

B32. Optimism Bias (also called Optimistic Bias) (Sharot, 2011). To be over-optimistic, underestimating the probability of undesirable outcomes and overestimating the probability of favorable ones. This bias can occur, for example, when the information item provides some kind of assessment of the risk of an event, such as the likelihood of getting infected by COVID-19 (Druică, Musso, & Ianole-Călin, 2020).

B33. Ostrich Effect (also called Ostrich Problem) (Karlsson, Loewenstein, & Seppi, 2009). To avoid potentially negative but useful information, such as feedback on progress, in order to escape psychological discomfort. This bias can occur when an assessor avoids evaluating, explicitly or not, an information item that has a negative connotation according to their own beliefs.

B34. Outcome Bias (Baron & Hershey, 1988). To judge a decision by its eventual outcome rather than by its quality at the time it was made. This bias can manifest when the information item under consideration is related to a past event (Robson, 2019).

B35. Overconfidence Effect (Dunning, Griffin, Milojkovic, & Ross, 1990). To be overly confident in one’s own answers. This effect can manifest when the assessor is an expert in the field, for example, an expert journalist fact-checking material related to their own writing, or a medical specialist assessing health-related information items.

B36. Proportionality Bias (also called Major Event/Major Cause Heuristic) (Leman & Cinnirella, 2007). To assume that big events have big causes. This innate human tendency may also help explain why some individuals accept conspiracy theories. This bias can occur when the factual information being assessed deals with the causes and effects of a particular event (Stall & Petrocelli, 2023).

B37. Salience Bias (Mullen et al., 1992). To focus on items that are more prominent or emotionally striking and ignore those that are unremarkable, even though this difference is irrelevant by objective standards. For example, an information item detailing the deaths of numerous infants is likely to receive more attention than one detailing a less emotionally striking fact. If both facts are presented in the same information item, the assessment of the more prominent fact might drive the overall assessment of the whole item.

B38. Stereotypical Bias (Heilman, 2012). To discriminate on the basis of a personal trait (e.g., gender). Like B30. Ingroup Bias, this bias can manifest when the assessor, especially a crowd worker, identifies with the group related to the information item they are assessing.

B39. Telescoping Effect (Thompson, Skowronski, & Lee, 1988). To displace recent events backward in time and remote events

forward in time, so that recent events appear more remote, and remote events, more recent. This bias might occur when the

information item presented contains temporal references.
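Two entries in the list above, B16. Conjunction Fallacy and B22. Framing Effect, rest on simple arithmetic that can be made explicit. The sketch below checks that the two framings in the B22 disaster example describe the same outcome, and encodes the conjunction rule behind B16: for any events A and B, P(A and B) ≤ P(A), because every outcome in which both occur is also one in which A occurs. The function names and the probability values in the usage lines are hypothetical, chosen only for illustration; they are not data from Tversky and Kahneman (1983).

```python
from fractions import Fraction
from typing import Tuple


def framings(deaths: int, population: int) -> Tuple[str, str]:
    """Return a positive and a negative framing of the same outcome (B22)."""
    if population <= 0 or not 0 <= deaths <= population:
        raise ValueError("need population > 0 and 0 <= deaths <= population")
    survived = Fraction(population - deaths, population)
    died = Fraction(deaths, population)
    # The two shares are complementary, so both sentences state one and the same fact.
    assert survived + died == 1
    return (
        f"{float(survived):.0%} of people survived the disaster",
        f"{float(died):.0%} of people did not survive the disaster",
    )


def violates_conjunction_rule(p_a: float, p_a_and_b: float) -> bool:
    """True if a judgment rates 'A and B' as more probable than 'A' alone (B16).

    Probability theory requires P(A and B) <= P(A), since every outcome in which
    both A and B occur is also an outcome in which A occurs.
    """
    if not (0.0 <= p_a <= 1.0 and 0.0 <= p_a_and_b <= 1.0):
        raise ValueError("probabilities must lie in [0, 1]")
    return p_a_and_b > p_a


print(framings(400, 1000))
# ('60% of people survived the disaster', '40% of people did not survive the disaster')
print(violates_conjunction_rule(p_a=0.05, p_a_and_b=0.10))  # True
```

Using `Fraction` keeps the complementarity check exact; the conversion to `float` happens only for display.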

6. Categorization of cognitive biases

Considering all 39 biases that might manifest while performing fact-checking at the same time can be challenging for researchers and practitioners who aim to study their manifestation and/or impact in fact-checking settings. Thus, a second level of aggregation can help structure the problem and facilitate further analysis, for instance by considering the type of fact-checking-related task. To this end, we further categorize the 39 biases from a task-based perspective, using the classification scheme proposed by Dimara et al. (2020), originally devised for cognitive biases affecting information visualization tasks. This scheme allows us to aggregate our initial list based on the psychological explanations of why biases might occur in a fact-checking-related context, as reported in Section 5.

The scheme by Dimara et al. for classifying cognitive biases works as follows. They first identify user tasks that might involve cognitive biases, obtaining a set of 7 task categories (Dimara et al., 2020, Section 3.4) through open card-sorting analysis (Wood & Wood, 2008). These user task categories are summarized in Table 3. Then, they focus on the relevance of cognitive biases to information visualization. Since the initial classification by user task included a fairly large number of biases in each category, they further refined the scheme by proposing a set of 5 sub-categories called flavors (Dimara et al., 2020, Section 3.4), which capture other types of similarities across cognitive biases related to how each bias affects human cognition. The flavors are summarized in Table 4.

We categorize our set of 39 biases that might manifest in the fact-checking activity by assessing which type of task they might affect and how they influence human cognition, that is, by assigning each of them a task/flavor combination according to the scheme by Dimara et al. (2020, Table 2). The categorization process involved evaluating each bias in our initial list against the seven task categories and five flavors. We first determined the fact-checking task each bias is most likely to influence (Table 3). Subsequently, we analyzed how each bias affects human cognition by aligning it with one of the flavors (Table 4). This two-level categorization provides a detailed understanding of how each bias could manifest in various aspects of fact-checking. Among our 39 cognitive biases, 35 are considered both by us and by Dimara et al. (albeit in two different contexts); for these, the two classifications agree. The remaining four biases were not considered by Dimara et al. in their classification: B18. Consistency Bias, B19. Courtesy Bias, B36. Proportionality Bias, and B37. Salience Bias. We analyzed these biases in depth to assign each of them to a task category and a flavor, assessing the nature and implications of each bias and determining the most relevant task and flavor based on its characteristics and impact on cognitive processes during fact-checking.


Table 3

Types of user tasks that may involve cognitive biases, as proposed by Dimara et al. (2020).

| Task | Description |
|---|---|
| Causal Attribution | Tasks involving an assessment of causality. |
| Decision | Tasks involving the selection of one over several alternative options. |
| Estimation | Tasks where people are asked to assess the value of a quantity. |
| Hypothesis Assessment | Tasks involving an investigation of whether one or more hypotheses are true or false. |
| Opinion Reporting | Tasks where people are asked to answer questions regarding their beliefs or opinions on political, moral, or social issues. |
| Recall | Tasks where people are asked to recall or recognize previous material. |
| Other | Tasks which are not included in one of the previous categories. |

Table 4

Phenomena that affect human cognition, as proposed by Dimara et al. (2020).

| Flavor | Description |
|---|---|
| Association | Cognition is biased by associative connections between information items. |
| Baseline | Cognition is biased by comparison with (what is perceived as) a baseline. |
| Inertia | Cognition is biased by the prospect of changing the current state. |
| Outcome | Cognition is biased by how well something fits an expected or desired outcome. |
| Self-Perspective | Cognition is biased by a self-oriented viewpoint. |

The task/flavor classification described in Table 5 provides a structured and detailed approach to understanding the multifaceted ways in which cognitive biases can influence the fact-checking process. We acknowledge that, unlike the selection of the 39 biases, for which a structured PRISMA-based approach was possible, this classification is necessarily more subjective, as it results from agreement between evaluators. By mapping each bias to specific fact-checking tasks and cognitive influences, we aim to offer a comprehensive framework that helps researchers and practitioners identify and address potential biases in their work.

To illustrate how the task/flavor scheme applies in a fact-checking context, consider a Decision task as defined by Dimara et al. (2020) (see Table 3). Since such tasks involve selecting one option among several alternatives, suppose that an assessor is asked to determine which of two information items is more truthful. Suppose further that both items were made by politicians, one of whom belongs to the governing party. In this case, B4. Authority Bias might lead the assessor to believe that membership in the governing party makes a speaker more trustworthy. Moreover, since reasoning (or cognition, in the terminology of Dimara et al.) is biased by an associative connection between two pieces of information, namely the speaker’s party and the item’s truthfulness, we conclude that the underlying flavor is Association.

7. List of countermeasures

The literature allows us to identify 11 countermeasures that can be employed in a fact-checking context to help prevent the manifestation of the cognitive biases outlined in Table 5. We detail each countermeasure (C1–C11) in the following.

To select the countermeasures, we proceed as follows. First, we inspect the literature to identify works aimed at addressing specific biases; second, from those works we select the proposed countermeasures that can be applied when performing the fact-checking activity. Note that this approach only details how to remove individual biases, and removing one bias by applying a countermeasure might result in the manifestation of another. For instance, Park et al. (2021) show that unexpected biases often arise in a fact-checking scenario; thus, there might not be a systematic way to safely remove all possible sources of bias at once. Hence, researchers and practitioners should aim for a good compromise between the risk of bias manifestation and the specific experimental setting. The 11 identified countermeasures are listed below; for each countermeasure, we cite the relevant literature examined.


Table 5

Categorization of cognitive biases, adapted from Dimara et al. (2020).

| Task | Association | Baseline | Inertia | Outcome | Self-Perspective |
|---|---|---|---|---|---|
| Causal Attribution | – | – | – | B26. Hostile Attribution Bias; B31. Just-World Hypothesis | B23. Fundamental Attribution Error; B30. Ingroup Bias |
| Decision | B4. Authority Bias; B5. Automation Bias; B22. Framing Effect | – | – | – | – |
| Estimation | B7. Availability Heuristic; B16. Conjunction Fallacy | B2. Anchoring Effect; B11. Base Rate Fallacy; B14. Compassion Fade; B21. Dunning-Kruger Effect; B35. Overconfidence Effect | B17. Conservatism Bias | B27. Illusion of Validity; B34. Outcome Bias | B32. Optimism Bias; B37. Salience Bias |
| Hypothesis Assessment | B6. Availability Cascade; B29. Illusory Truth Effect | – | – | B10. Barnum Effect; B12. Belief Bias; B15. Confirmation Bias; B28. Illusory Correlation | – |
| Opinion Reporting | – | B36. Proportionality Bias | B8. Backfire Effect | B9. Bandwagon Effect; B38. Stereotypical Bias | B19. Courtesy Bias |
| Recall | B24. Google Effect; B39. Telescoping Effect | – | B18. Consistency Bias | B13. Choice-Supportive Bias; B20. Declinism; B25. Hindsight Bias | – |
| Other | B3. Attentional Bias | – | – | B33. Ostrich Effect | – |

C1. Custom search engine. Researchers and practitioners should be extremely careful with the system supplied to assessors to help them retrieve supporting evidence, since such a system can be biased (Diaz, 2008; Mowshowitz & Kawaguchi, 2005; Otterbacher, Checco, Demartini, & Clough, 2018; Wilkie & Azzopardi, 2014). Researchers should employ a custom, controllable search engine when asking assessors to evaluate an information item, because assessors might be influenced by the score that a news agency or an online website has already assigned to the very same information item. Researchers can thus tune the search engine parameters to limit the bias that each assessor encounters during the fact-checking activity because of the sources of the results.
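As an illustration of what such control can look like, the following Python sketch post-filters the results shown to assessors, dropping pages from sources that might already publish a verdict on the item under assessment. The result format (dicts with a `url` key), the function name `filter_results`, and the blocked domains are hypothetical assumptions made for this example, not the setup of the cited works.

```python
from urllib.parse import urlparse


def filter_results(results, blocked_domains):
    """Drop results whose host is a blocked domain or one of its subdomains.

    Hypothetical helper: `results` is assumed to be a list of dicts with a "url" key.
    """
    blocked = {d.lower() for d in blocked_domains}
    kept = []
    for result in results:
        host = urlparse(result["url"]).hostname or ""  # .hostname is lowercased
        if any(host == d or host.endswith("." + d) for d in blocked):
            continue  # this source may already publish a verdict on the item
        kept.append(result)
    return kept


# Hypothetical search results for one information item.
results = [
    {"url": "https://www.factchecker.example/verdicts/123"},
    {"url": "https://news.example.org/report"},
    {"url": "https://notfactchecker.example/page"},
]
kept = filter_results(results, ["factchecker.example"])
print([r["url"] for r in kept])
# ['https://news.example.org/report', 'https://notfactchecker.example/page']
```

Matching on the host name, rather than on a substring of the URL, avoids accidentally dropping unrelated domains such as `notfactchecker.example`.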

C2. Inform assessors. Researchers should always inform assessors about the presence of any automatic (e.g., AI-based) system designed to support the assessment activity, for example by asking them to confirm or reject the system's output while using it, thus limiting B5. Automation Bias (Goddard, Roudsari, & Wyatt, 2011; Kupfer et al., 2023). This includes, for example, the presence of a search engine that helps them find evidence (Draws et al., 2022; Roitero, Soprano, Fan, et al., 2020; Roitero, Soprano, Portelli, et al., 2020; Soprano et al., 2021).

C3. Discussion. Researchers should allow synchronous discussion among assessors when possible. This matters because, when evaluating the truthfulness of an information item, each individual is more prone to accept information items that are consistent with their own set of beliefs (La Barbera et al., 2020; Lewandowsky et al., 2012; Roitero, Soprano, Fan, et al., 2020). Reimer, Reimer, and Czienskowski (2010) and Szpara and Wylie (2005) showed that a synchronous discussion between different assessors is effective in reducing their individual biases. Pitts, Coles, Thomas, and Smith (2002) and Zheng, Cui, Li, and Huang (2018) show that discussion among assessors improves the overall assessment quality.

C4. Engagement. It is important to put assessors in a good mood when they perform a fact-checking task. Cheng and Wu (2010) show that engaged assessors are less likely to experience both:

– B22. Framing Effect.


– B28. Illusory Correlation.

Moreover, Furnham and Boo (2011) show that if assessors are engaged they are less likely to experience:

– B2. Anchoring Effect.

– B33. Ostrich Effect.

C5. Instructions. Another important aspect is formulating an adequate set of instructions. Gillier, Chaffois, Belkhouja, Roth, and Bayus (2018) have shown that a set of instructions helps assessors come up with new ideas when performing a crowdsourcing task. Even though Gadiraju, Yang, and Bozzon (2017) explain that assessors can perform a task even with a sub-optimal understanding of the work requested, the clarity of task instructions should still be taken into account. Furthermore, assessors should be explicitly encouraged to be skeptical about the information they are evaluating (Lewandowsky et al., 2012). Indeed, Ecker, Lewandowsky, and Tang (2010) and Schul (1993) show that a pre-exposure warning (i.e., explicitly telling a person that they could be exposed to misleading information) reduces its overall impact on that person. Thus, showing a set of assessment instructions can be seen as a pre-exposure warning against the impact of misinformation on the assessor.

C6. Require evidence. Requiring assessors to provide supporting evidence for their judgments is another effective countermeasure with several advantages. It encourages the assessor to focus on verifiable facts. Lewandowsky et al. (2012) explain that this countermeasure increases the perceived familiarity with the information item, reinforcing the trustworthiness the assessor perceives in the item itself. They also show that reporting a small set of facts as evidence discourages possible criticism by other assessors, thus reinforcing the assessment provided. Jerit (2008) observes this phenomenon in public debates. Furthermore, asking assessors to come up with arguments supporting their assessment has been shown to reduce:

– B2. Anchoring Effect, as shown by Mussweiler, Strack, and Pfeiffer (2000).

– B11. Base Rate Fallacy, as shown by Kahneman and Tversky (1973).

– B22. Framing Effect, as shown by Cheng and Wu (2010), Kim, Goldstein, Hasher, and Zacks (2005).

– B27. Illusion of Validity, as shown by Kahneman and Tversky (1973).

– B28. Illusory Correlation, as shown by Matute, Yarritu, and Vadillo (2011).

However, requesting evidence may itself be a source of bias. Indeed, Luo (2014) and Wood and Porter (2019) show that such a request can lead to the manifestation of, respectively:

– B8. Backfire Effect.

– B17. Conservatism Bias.

Thus, the requester of the fact-checking activity should address this matter carefully.

C7. Randomized or constrained experimental design. Using a randomized or constrained experimental design helps reduce biases. In particular, different assessors should evaluate different information items. Moreover, each set of items should be evaluated in a different order, and items should be assigned to assessors so that the overlap between the items of any two assessors is minimal. If this constraint cannot be satisfied, a randomization process should minimize the chance of overlap between the item sets of different assessors (Ceschia et al., 2022; Hettiachchi, Kostakos, & Goncalves, 2022).
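A constrained assignment of this kind can be approximated with a simple greedy procedure. The Python sketch below is one possible heuristic and is not taken from the cited works; the function names `assign_items` and `max_pairwise_overlap`, the least-used-first rule, and the seeded random tie-breaking are all our assumptions. Each assessor receives `k` distinct items; each pick favors the item used least so far (so items stay evenly covered) and, among those, the one whose pick creates the smallest overlap with earlier assessors holding it; the finished batch is shuffled so that presentation order also varies across assessors.

```python
import random
from itertools import combinations


def assign_items(items, n_assessors, k, seed=0):
    """Greedily assign k distinct items to each assessor (heuristic sketch).

    Each pick prefers (1) the least-used item, keeping redundancy balanced,
    then (2) the item creating the smallest overlap with the earlier
    assessors that already hold it; remaining ties are broken at random.
    """
    items = list(items)
    if not 0 < k <= len(items):
        raise ValueError("k must be between 1 and the number of items")
    rng = random.Random(seed)
    usage = {item: 0 for item in items}
    batches, batch_sets = [], []
    for _ in range(n_assessors):
        batch = []
        shared = [0] * len(batches)  # overlap with each earlier assessor so far

        def cost(item):
            # Largest overlap this pick would produce with an earlier assessor.
            worst = max((shared[b] + 1 for b, prev in enumerate(batch_sets)
                         if item in prev), default=0)
            return (usage[item], worst, rng.random())

        for _ in range(k):
            best = min((i for i in items if i not in batch), key=cost)
            batch.append(best)
            usage[best] += 1
            for b, prev in enumerate(batch_sets):
                if best in prev:
                    shared[b] += 1
        rng.shuffle(batch)  # a different presentation order for each assessor
        batches.append(batch)
        batch_sets.append(set(batch))
    return batches


def max_pairwise_overlap(batches):
    """Largest number of items shared by any two assessors."""
    return max((len(set(x) & set(y)) for x, y in combinations(batches, 2)),
               default=0)


disjoint = assign_items(range(20), n_assessors=4, k=5)
print(max_pairwise_overlap(disjoint))  # 0: enough items for disjoint sets
crowded = assign_items(range(10), n_assessors=4, k=5)
print(max_pairwise_overlap(crowded))   # 3: every item must now be used twice
```

The greedy choice carries no optimality guarantee: in the second call a hand-built design can keep every pairwise overlap at 2 or below, whereas the heuristic reaches 3. When minimal overlap matters, an exact combinatorial search over assignments would be needed.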

C8. Redundancy and diversity. Redundancy should be employed when asking more than one assessor to fact-check a set of information items: the same information item should be evaluated by different assessors. Each item can then be characterized by a final score, computed by aggregating the individual scores provided by its assessors. In this way, the individual bias of each assessor is mitigated by the remaining assessors. If the population of assessors is diverse enough, one can ideally expect the fact-checking activity to be less biased. The population of assessors should thus be as varied as possible, in terms of both background and experience (Difallah, Filatova, & Ipeirotis, 2018).
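The aggregation step can be sketched as follows. No specific aggregation function is prescribed here; the Python sketch assumes, hypothetically, that each judgment is an `(assessor_id, item_id, score)` tuple on an ordinal 0 to 5 truthfulness scale, and offers the mean and the median as two simple options. The function name `aggregate_scores` and the sample data are illustrative only.

```python
from collections import defaultdict
from statistics import mean, median


def aggregate_scores(judgments, method="median"):
    """Collapse per-assessor scores into one final score per item.

    Hypothetical helper: `judgments` is an iterable of
    (assessor_id, item_id, score) tuples.
    """
    aggregators = {"mean": mean, "median": median}
    if method not in aggregators:
        raise ValueError(f"unknown method: {method}")
    per_item = defaultdict(list)
    for _assessor, item, score in judgments:
        per_item[item].append(score)
    return {item: aggregators[method](scores) for item, scores in per_item.items()}


# Three hypothetical assessors judging two statements on a 0-5 scale.
judgments = [
    ("a1", "s1", 0), ("a2", "s1", 1), ("a3", "s1", 5),
    ("a1", "s2", 4), ("a2", "s2", 4), ("a3", "s2", 3),
]
print(aggregate_scores(judgments))  # {'s1': 1, 's2': 4}
print(aggregate_scores(judgments, "mean"))
```

For `s1`, the median stays at 1 no matter how extreme the single outlying score of 5 is, whereas the mean is pulled up to 2; which aggregation is preferable depends on the scale used and on how many assessors judge each item.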

C9. Revision. Asking assessors to revise or double-check their answers, or even providing them with alternative labels, is a useful countermeasure to reduce many biases. In more detail, Cheng and Wu (2010), Kahneman (2011), Kahneman and Tversky (1973), Kim et al. (2005), Mussweiler et al. (2000), and Shatz (2020b) show that assessment revision helps reduce:

– B2. Anchoring Effect.

– B7. Availability Heuristic.

– B9. Bandwagon Effect.

– B11. Base Rate Fallacy.

– B22. Framing Effect.

Furthermore, Bollinger, Leslie, and Sorensen (2011), Cooper et al. (2014), Hettiachchi, Schaekermann, McKinney, and Lease (2021), and Mussweiler et al. (2000) show that providing feedback to assessors while they perform a task helps reduce biases such as:

– B2. Anchoring Effect.

– B3. Attentional Bias.


– B37. Salience Bias.

C10. Time. Researchers should be careful when setting the time available for each assessor to fact-check a given information item, and should leave the assessor an adequate amount of time. Granting the assessor either a small or a large amount of time has both advantages and disadvantages. For instance, one may assume that giving the assessor more time encourages careful consideration of the decision, thus helping to avoid the B2. Anchoring Effect. However, Furnham and Boo (2011) show that overthinking might actually increase this bias. On the other hand, Shatz (2020b) shows that assessors given an adequate amount of time experienced a reduced B9. Bandwagon Effect.

C11. Training. Dugan (1988), Kazdin (1977), Lievens (2001), Pell, Homer, and Roberts (2008), and Szpara and Wylie (2005) show