NBER WORKING PAPER SERIES

SAFETY REVIEWS ON AIRBNB:

AN INFORMATION TALE

Aron Culotta

Ginger Zhe Jin

Yidan Sun

Liad Wagman

Working Paper 31855

http://www.nber.org/papers/w31855

NATIONAL BUREAU OF ECONOMIC RESEARCH

1050 Massachusetts Avenue

Cambridge, MA 02138

November 2023, Revised January 2024

We are grateful to AirDNA for providing the data and to our home universities for financial

support. Marshall Van Alstyne, Matthias Hunold, Xiang Hui, Meng Liu, Peter Coles, Francine

Lafontaine, Ying Fan, Juan Pablo Atal, Juan Camilo Castillo, Sophie Calder-Wang, Yufeng

Huang, Zhe Yuan, Jisu Cao, and participants at the Luohan Academy Webinar, Washington

University at St. Louis, Boston University, University of Michigan, University of Pennsylvania,

the 2022 MaCCI annual conference, the 2022 IIOC annual conference, the 2022 INFORMS

Marketing Science and virtual conference, and the 2023 Strategy and Economics of Digital

Markets conference have provided constructive comments. Tejas Nazare, Nour Ben Ltaifa, and

Hunter Petrik provided excellent research assistance. The content and analyses in this paper

reflect the authors' own work and do not relate to any institution or organization with whom the

authors are affiliated. None of us has a financial relationship with Airbnb or competing short term

rental platforms. All rights reserved. All errors are our own. The views expressed herein are those

of the authors and do not necessarily reflect the views of the National Bureau of Economic

Research.

NBER working papers are circulated for discussion and comment purposes. They have not been

peer-reviewed or been subject to the review by the NBER Board of Directors that accompanies

official NBER publications.

© 2023 by Aron Culotta, Ginger Zhe Jin, Yidan Sun, and Liad Wagman. All rights reserved.

Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission

provided that full credit, including © notice, is given to the source.

JEL No. D83,L15,R3

ABSTRACT

Consumer reviews, especially those expressing concerns of product quality, are crucial for the

credibility of online platforms. However, reviews that criticize a product or service may also

dissuade buyers from using the platform, creating an incentive to blur the visibility of critical

reviews. Using Airbnb and official crime data in five major US cities, we find that both reviews

and self experiences concerning the safety of a listing's vicinity decrease guest bookings on the

platform. Counterfactual simulations suggest that a complete removal of vicinity safety reviews

(VSR) would hurt guests but increase revenues from reservations on Airbnb, with positive sorting

towards listings formerly with VSR. Conversely, incorporating VSR in a listing's overall ratings

or highlighting VSR could generate opposite effects. Either way, the interests of consumers are

not always aligned with the interests of a revenue-centric platform. Because VSR are more

closely correlated with official crime statistics in low-income and minority neighborhoods, our

findings suggest that suppressing or highlighting VSR would have different effects on different

neighborhoods.

Aron Culotta

School of Science and Engineering

Tulane University

New Orleans, LA 70118

United States

Ginger Zhe Jin

University of Maryland

Department of Economics

College Park, MD 20742-7211

and NBER

Yidan Sun

Illinois Institute of Technology

565 W. Adams St.

Chicago, IL 60661

Liad Wagman

Illinois Institute of Technology

565 W Adams, 4th Floor

Chicago, IL 60661

1 Introduction

Information design is crucial for online platforms. Take consumer feedback as an example: not only does it allow future buyers to discern high- and low-quality sellers, it also encourages good sellers to maintain high quality and motivates poor-performing sellers to improve quality. Arguably, the reputation mechanism is even more effective online than offline, because online platforms can gather consumer feedback in a standardized format, make it available globally, and aggregate it in a way that is salient and easy to digest and search, if they so choose (see reviews by Einav et al., 2016; Tadelis, 2016; Luca, 2017).

1

Critical consumer feedback (i.e., reviews criticizing a product or service) is particularly important for online reputation systems, whether it is a simple choice of positive/neutral/negative, a star rating, or detailed reviews with free-flowing text, photos, or even videos. It is likely that an e-commerce website that continuously lists all products or services as rated five stars or with 100% positive feedback would quickly lose credibility. Indeed, the literature has shown that consumers respond significantly to critical feedback, although consumers tend to under-report critical feedback. Many platforms try to encourage consumer feedback, including critical feedback, by offering status, coupons, and merchandise discounts. Some platforms also encourage sellers to respond to consumer reviews.

However, platforms face mixed incentives regarding critical consumer feedback. On the one hand, future buyers may compare listings on the focal platform and prefer those with no or less critical feedback. Such within-platform sorting may benefit the platform, as high-quality sellers often charge a higher price or enjoy a higher probability of selling. On the other hand, buyers always have an outside good in mind; observing many listings with critical feedback on a platform may dissuade buyers from using the platform at all. The danger of losing a potential buyer could motivate a platform to blur the visibility of critical consumer feedback, by keeping it private to the platform, deleting it after a short time of posting, or making it difficult to find despite public posting.

More specifically, critical consumer feedback may generate three information externalities on a digital platform: first, buyer A’s critical feedback on product listing X may deter herself and other buyers from buying X in the future. This “within-listing-across-buyer” effect is typical in a reputation system and is well-studied. Second, critical feedback regarding X may lead other buyers to infer that listings similar to X may have similar quality concerns even if they have not themselves received such feedback. This is a “cross-listing-cross-buyer” effect. Third, a poor experience with listing X may motivate buyer A to give critical feedback to X and reassess other buyers’ critical feedback towards other listings or even the whole feedback system. This “cross-listing-within-buyer” effect is often omitted because Bayesian updating assumes that learning from others’ experience is the same as learning from self experience if the information has the same accuracy. However, in practice, self-experience can be much more salient to an individual. Few researchers have quantified the second and third externalities explicitly; one exception is Nosko and Tadelis (2015), who show that buyers that have bought from a more reputable seller on eBay are more likely to return to the platform to transact with any sellers, above and beyond the likelihood to transact with the same seller that created that good experience.

1 Recent examples include YouTube, which has adopted a policy of hiding dislike counts on shared videos (see, e.g., https://rb.gy/xhhqnd), and Instagram, which has given users the option of hiding likes (see, e.g., https://rb.gy/tacuj5).

In this paper, we use user reviews about vicinity safety of short-term rentals to demonstrate the importance of these information externalities. Safety around a listing’s vicinity is an important dimension of quality given the listing’s physical location. The host of a listing cannot do much to change its vicinity safety but prior guests may comment on it in their reviews. Such reviews may inform other guests of the vicinity safety risk for nearby listings, which is a built-in cross-listing externality. Consumer reviews regarding vicinity safety are often of a critical tone because guests that have chosen to stay at a dwelling owned or managed by an anonymous host usually assume the neighborhood is reasonably safe. At the same time, almost no hosts would volunteer to discuss neighborhood safety in their listing descriptions, because any mention (even the phrase of “perfectly safe”) may call guest attention to safety concerns.

Using all Airbnb listings and their reviews in five major US cities (Atlanta, Chicago, Los Angeles, New Orleans, and New York City) from 2015/5 to 2019/12, we use a lexicon approach to identify safety reviews posted by Airbnb guests. We find that 0.51% of the 4.8 million guest reviews express concerns of safety, among which 48.08% are about safety issues near but outside the focal property (referred to as vicinity safety reviews, or VSR) rather than safety issues inside the property (referred to as listing safety reviews, or LSR). Further sentiment analysis suggests that VSR and LSR identified by our algorithm are significantly more negative in sentiments than an average review. Although VSR and LSR only account for a tiny fraction of guest reviews, 4.43% of listing-months ever have any VSR since May 2015, and 8.49% of listing-months ever have any VSR or LSR. These facts imply that safety concerns are noticed by guests, regardless of whether they relate to the actual dwelling or its nearby surroundings. At the same time, the low occurrence of VSR and LSR makes learning through self-experience a lengthy process. Thus, guests with safety concerns mostly rely on the platform’s online review system and/or external information.
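To make the lexicon idea concrete, the following is a minimal sketch of keyword-based review tagging. It is not the algorithm used in this paper: the keyword sets `SAFETY_TERMS` and `VICINITY_TERMS` and the function `classify_review` are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical keyword lists for illustration only; NOT the paper's lexicon.
SAFETY_TERMS = {"unsafe", "dangerous", "sketchy", "robbed", "mugged", "break-in"}
VICINITY_TERMS = {"neighborhood", "area", "street", "block", "outside", "nearby"}


def tokenize(text):
    """Lowercase the text and return its set of word tokens (hyphens kept)."""
    return set(re.findall(r"[a-z]+(?:-[a-z]+)*", text.lower()))


def classify_review(text):
    """Return 'VSR', 'LSR', or None for a single review text.

    A review is flagged as a safety review if it contains any safety term.
    It is labeled VSR if it also mentions the surroundings, otherwise LSR.
    """
    tokens = tokenize(text)
    if not tokens & SAFETY_TERMS:
        return None
    return "VSR" if tokens & VICINITY_TERMS else "LSR"


reviews = [
    "The neighborhood felt unsafe at night.",
    "The door lock was broken and we felt unsafe inside the room.",
    "Great location and a lovely host!",
]
labels = [classify_review(r) for r in reviews]
```

A plain keyword match of this kind ignores negation and context (for example, a review saying the area is "not dangerous at all" would still be flagged), which is one reason a sentiment check on the flagged reviews is a useful complement.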

Since guest feedback may reflect guests’ subjective opinion of their stay experience, we obtain (local) government-reported crime statistics for the five sample cities, by zip code and month. The data suggest that, as VSR accumulate slowly on Airbnb, the rank correlation between the normalized total count of VSR in a zip code up to a month t and the normalized official crime statistics of that zip code-month is increasing over time. For low-income or minority zip codes, the rank correlation can be as high as 0.75 by the end of our sample period (2019/12). This suggests that the VSR, though noisy and subjective, do reflect real safety risks in the related zip codes to some degree.
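As an illustration of the rank correlation described above, the sketch below computes a Spearman correlation across zip codes for a single month. The input numbers are made up, the function names are ours, and the inputs are assumed to be already normalized; the paper's exact normalization is not reproduced here.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation; undefined (division by zero) if x or y is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical data: one entry per zip code, for one month t.
cumulative_vsr = [0, 2, 5, 1, 9]      # normalized cumulative VSR up to month t
official_crime = [10, 40, 35, 20, 90]  # normalized official crime in month t
rho = spearman(cumulative_vsr, official_crime)
```

Repeating this calculation month by month would trace out how the correlation evolves as VSR accumulate.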

As expected, when we follow the same listings before and after they receive any VSR or LSR, there is a significant drop in the listing’s monthly occupancy rate as well as its average paid price per night. The effect is stronger for LSR (-2.58% in occupancy and -1.52% in price) than VSR (-1.82% in occupancy and -1.48% in price), but all are statistically significant with 99% confidence. These findings suggest that prospective guests are concerned about both listing and vicinity safety, and have different sensitivities to changes in these two types of safety reviews. In addition to this classical “within-listing-cross-buyer” effect in listing reputation, we also find a significant negative effect from VSR of nearby listings, where nearby listings are defined as those within a 0.3-mile radius of the focal listing according to Airbnb’s proxy longitude and latitude of each listing. This “cross-listing-cross-buyer” effect corresponds to the second information externality as mentioned above.
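The 0.3-mile neighborhood definition can be sketched as follows. This is an illustrative calculation under our own assumptions: great-circle (haversine) distance, a mean Earth radius of 3958.8 miles, and hypothetical field names (`id`, `lat`, `lon`, `has_vsr`); it is not the paper's code.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # mean Earth radius (assumed constant)


def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))


def share_nearby_with_vsr(focal, listings, radius_miles=0.3):
    """Share of OTHER listings within the radius that have any VSR.

    Returns None when the focal listing has no neighbors within the radius.
    """
    nearby = [
        l for l in listings
        if l["id"] != focal["id"]
        and haversine_miles(focal["lat"], focal["lon"], l["lat"], l["lon"]) <= radius_miles
    ]
    if not nearby:
        return None
    return sum(1 for l in nearby if l["has_vsr"]) / len(nearby)


# Hypothetical listings; coordinates stand in for Airbnb's proxy locations.
listings = [
    {"id": "A", "lat": 41.8800, "lon": -87.6300, "has_vsr": False},  # focal
    {"id": "B", "lat": 41.8830, "lon": -87.6300, "has_vsr": True},   # about 0.21 miles away
    {"id": "C", "lat": 41.8790, "lon": -87.6300, "has_vsr": False},  # about 0.07 miles away
    {"id": "D", "lat": 41.8900, "lon": -87.6300, "has_vsr": True},   # about 0.69 miles away
]
share = share_nearby_with_vsr(listings[0], listings)
```

Because the coordinates are proxies rather than exact addresses, any such radius-based measure is itself approximate.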

To document the third externality, we zoom into the guests that wrote VSR on Airbnb (referred to as VS guests). Compared to the guests that have used Airbnb with similar frequencies and booked similar listings (in terms of crime and VSR) but never write any VSR in our dataset, VS guests are 60.07% less likely to book future stays on Airbnb after posting the VSR. And when they do book on Airbnb, they tend to book in areas with fewer official crimes, fewer overall VSR, and a lower percentage of listings with any VSR. The learning is weaker if the focal listing that triggered the VS guest’s VSR had previously received any VSR from other guests, but even in this case, the VS guests are still 51.62% less likely to book future stays on Airbnb after posting their own VSR. This suggests that self experience is much more salient than reading other guests’ VSR; thus, the online review system is not fully effective as far as conveying all the information embedded in VSR.

Platform wide, we argue that these information externalities, especially VSR spillovers to nearby listings (the second externality) and VS guests’ strong reactions to their own vicinity safety experiences (the third externality), may undermine a platform’s incentives to post and highlight VSR as critical feedback. Interestingly, in a recent policy change effective December 11, 2019, Airbnb announced that, going forward, guest reviews about listings that include “content that refers to circumstances entirely outside of another’s control” may be removed by the platform.

2

This policy change, if strictly enforced, could imply that VSR are discouraged and may be subject to deletion by the platform while LSR are still permitted. To be clear, we find no evidence suggesting that Airbnb has omitted or deleted VSR in any systematic way after the 2019/12 policy. But the announcement itself suggests that Airbnb has broad discretion regarding the collection, posting, or removal of consumer reviews, especially those that include contents that the platform believes to be irrelevant or useless. Our analysis of VSR aims to shed light on the potential economic incentives behind a platform’s review policy.

To do so, we must incorporate listing competition because within- and cross-platform sortings have different implications for the platform. To account for listing competition, we obtain a dataset of competing entire-home VRBO listings and use a discrete choice model to estimate consumer utility from Airbnb entire-home listings, while treating VRBO listings in the same zip code-month as the outside good. We then use the structural estimates to quantify consumer surplus under the status quo of our sample (i.e., VSR are largely permitted) versus three counterfactual information regimes: eliminating all VSR (“no disclosure”), adjusting the rating of each listing to account for the number of VSR of the listing itself and nearby listings (“VSR-adjusted ratings”), or alerting all guests to the existing VSR and making them as informed as those that have written VSR themselves (“high alert”).

2 See, e.g., https://rb.gy/0pu5ck and https://rb.gy/9y6bum.

Compared to the status quo, we find that no disclosure of VSR would decrease consumer surplus in the market by 0.032% and increase revenues from reservations on Airbnb (gross booking value, or GBV) by 0.041%, with positive sorting towards listings formerly with VSR. Conversely, VSR-adjusted ratings would increase the market consumer surplus by 0.004% but decrease Airbnb’s GBV by 0.142%. High alert would increase the market consumer surplus by 3.065% to 4.144% and change Airbnb’s GBV by +0.301% (+$10.1 million) to -1.304% (-$44 million), depending on whether we allow listing price to change by 1% in response and whether we assume the high alert on vicinity safety also applies to the VSR for nearby listings. In short, the interests of consumers and Airbnb are not always aligned, because guest sorting from Airbnb to off-Airbnb alternatives would hurt Airbnb’s GBV with certainty but the within-Airbnb sorting between listings with and without VSR may increase or decrease Airbnb’s GBV depending on how sensitive guests are to pricing and perceived vicinity safety of listings.
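The distinction between within-platform and cross-platform sorting can be illustrated with a toy multinomial logit. This sketch is an assumption-laden simplification, not the structural model estimated in Section 6: the utility specification, the coefficients `alpha` and `gamma`, the listing attributes, and the function names are all hypothetical, and prices are held fixed.

```python
import math


def logit_shares(utilities):
    """Multinomial logit choice shares.

    Index 0 of the result is the outside good (utility normalized to 0);
    the remaining entries follow the order of `utilities`.
    """
    m = max([0.0] + list(utilities))  # subtract the max for numerical stability
    expu = [math.exp(u - m) for u in utilities]
    outside = math.exp(-m)
    denom = outside + sum(expu)
    return [outside / denom] + [e / denom for e in expu]


def mean_utility(listing, alpha, gamma, vsr_visible=True):
    """Hypothetical mean utility: quality minus price and VSR disutility."""
    vsr = listing["has_vsr"] if vsr_visible else 0
    return listing["quality"] - alpha * listing["price"] - gamma * vsr


def platform_revenue(listings, alpha, gamma, vsr_visible=True):
    """Expected booking revenue per prospective guest, prices held fixed."""
    utils = [mean_utility(l, alpha, gamma, vsr_visible) for l in listings]
    shares = logit_shares(utils)[1:]  # drop the outside good
    return sum(s * l["price"] for s, l in zip(shares, listings))


# Two hypothetical listings that differ only in whether they carry a VSR.
listings = [
    {"quality": 2.0, "price": 100.0, "has_vsr": 1},
    {"quality": 2.0, "price": 100.0, "has_vsr": 0},
]
alpha, gamma = 0.01, 0.5  # made-up price and VSR sensitivities

status_quo = platform_revenue(listings, alpha, gamma, vsr_visible=True)
no_disclosure = platform_revenue(listings, alpha, gamma, vsr_visible=False)
```

In this toy setup, hiding VSR raises the perceived utility of the listing with VSR, which pulls demand both from the other listing and from the outside good, so expected platform revenue rises. Whether guests are better off is a separate question, since perceived and realized utility diverge once information is withheld.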

Although the overall welfare effects are moderate (because VSR are rare in the data), they mask large distributional effects: more VSR transparency benefits the listings without VSR at the cost of the listings with VSR. Because listings with VSR are more likely to locate in low-income or minority neighborhoods, consumer sorting upon VSR transparency would generate sizable GBV shifts across hosts in different neighborhoods. These effects highlight a tradeoff between generating higher revenues and attracting hosts in low-income and minority areas on the one hand, which can enhance the economic impact of the platform in underserved neighborhoods, and providing additional value to guests on the other.

As detailed below, we contribute to the empirical literature of online feedback and seller reputation, and the rising literature of information design in online platforms. As information intermediaries, online platforms have more incentives than a traditional seller to alleviate information asymmetries between buyers and sellers. But they are still inherently different from a social planner, because they may put more weight on their own business interests than on the welfare of buyers and sellers on the platform, and they may not fully internalize the impact of their policies on competing platforms. Our empirical findings highlight these differences. We also document how the impact of a platform’s review policy may vary for neighborhoods of different incomes or with different minority representation, as being inclusive could be important for the platform or the social planner. These findings can help facilitate ongoing discussions as to what role and responsibility digital platforms should have as far as collecting and disseminating quality-related information online.

The rest of the paper is organized as follows. Section 2 reviews the related literature. Section 3 provides background regarding Airbnb’s review system. Section 4 describes the dataset, defines VSR and LSR, and provides summary statistics. Section 5 reports reduced-form evidence for the three information externalities of safety reviews. Section 6 incorporates all of these externalities into a structural demand model and predicts how listings’ GBV and consumer surplus would change under three counterfactual scenarios. Section 7 discusses the implications of our findings and future research directions.

2 Related Literature

Safety review is a type of buyer-to-seller feedback; thus, our study is directly related to the literature on online feedback and seller reputation.

The efficacy of online reputation depends on how consumers respond to buyer feedback posted on the platform. Researchers have shown that consumers are more likely to purchase from sellers with better buyer feedback and, conditional on purchase, are willing to pay more to reputable sellers (see reviews by Bajari and Hortacsu, 2004; Tadelis, 2016; Einav et al., 2016). Consistently, we find that having any safety reviews associated with a listing tends to negatively impact the occupancy and price of the listing because safety reviews dampen the listing’s reputation on Airbnb. The magnitude of this effect on the occupancy rate is comparable to a 70.18% reduction in the listing’s average guest ratings, confirming the finding in Chakravarty et al. (2010) that consumers are more responsive to critical feedback than to positive feedback.

Beyond the classical within-listing-across-buyer effect, we are one of the few that attempt to quantify the spillover effects of critical feedback. By definition, vicinity safety reviews may generate spillovers among listings in nearby geographies, should guests infer the overall safety of the vicinity from multiple nearby listings. We find that for a focal listing, a higher percentage of other nearby listings with VSR is negatively associated with the focal listing’s occupancy rate, as well as its price. This cross-listing-cross-buyer spillover has different implications for hosts and guests: hosts without VSR may suffer from the negative externality of nearby listings with VSR; but from a prospective guest’s perspective, this is a positive information externality that could help them make more informed choices ex ante. Hence, the information design optimal to the hosts or the platform can be different from that optimal to guests, a key point we examine in the counterfactual analysis.

While the cross-listing-cross-buyer spillover is specific to the nature of vicinity safety, we argue the cross-listing-within-buyer spillover of critical feedback is more generalizable to other online platforms. As shown by Nosko and Tadelis (2015), buyers that have had a good experience from a reputable seller on eBay are more likely to return to eBay for sales with any sellers. Similarly, we show that having a negative vicinity safety experience tends to motivate a guest to avoid booking any listings on Airbnb in our sample cities and, if she books again at all, to avoid both the listings and the areas that have any VSR. Compared to Nosko and Tadelis (2015), we show that the cross-listing-within-buyer spillover is not limited to the extensive margin (whether to return to the platform for future transactions); it also motivates the experienced buyer to adjust how she interprets the presence of VSR in other listings. Using a structural approach, we take a deep dive into the implications of these spillover effects for the welfare of guests, the revenue of hosts and the platform, and the distributional changes across different types of neighborhoods.

Most of the aforementioned literature of seller reputation is conditional on buyer feedback that online platforms aggregate and present to consumers. However, buyer feedback is under-provided partly because reviewers are not compensated for submitting reviews. For example, 64% of eBay transactions are rated by buyers in the sample studied by Hui et al. (2021), and 73.5% of New York City UberX trips are rated by passengers (Liu et al., 2021). In comparison, 44.6% of Airbnb trips in our sample have received feedback from guests, which is in line with the guest review rate reported by Fradkin et al. (2021) based on earlier Airbnb data in 2014.

Since accurate feedback is a public good subject to under-provision, many platforms attempt to encourage buyer feedback by offering status, coupons, and merchandise discounts (Li and Xiao, 2014; Cabral and Li, 2015; Li et al., 2020; Fradkin et al., 2015; Fradkin and Holtz, 2023). Some even encourage sellers to respond to consumer reviews. Proserpio and Zervas (2017) find that hotels responding to user online reviews enjoy a 0.12-star increase in ratings and a 12% increase in review volume. When hotels start responding, they tend to receive fewer but longer negative reviews because unsatisfied consumers become less likely to leave short indefensible reviews when hotels are likely to scrutinize them. Similarly, Chevalier et al. (2018) find that managerial responses stimulate consumers’ reviewing activity, especially the negative reviews that are seen as more impactful. This effect is reinforced by the fact that managers respond more frequently and in more detail to negative reviews. These findings suggest that allowing managerial response can be viewed as a platform policy that effectively highlights and addresses critical feedback. In contrast, the 2019 Airbnb policy that motivates this study, if fully implemented, could discourage buyers from providing critical feedback on certain quality dimensions such as vicinity safety, and thus exacerbate the public good problem of critical feedback.

The imperfect review rate is particularly problematic as far as critical feedback is concerned. Studies have shown that buyers tend to under-report bad experiences, with potential explanations that include fear of retaliation (Dellarocas and Wood, 2008), preference to leave the platform after a bad experience (Nosko and Tadelis, 2015), pressure to provide above-average ratings (Barach et al., 2020), and social connections to the rated sellers (Fradkin et al., 2015). For arguably rare bad experiences such as safety issues, the probability of observing pertinent feedback from prior buyers could be further reduced, simply because the chance of experiencing a safety issue is small in absolute terms, even if a neighborhood has safety risks. A platform policy that discourages VSR could reinforce an existing bias against critical feedback.

Another consequence of the bias against critical feedback is that safety reviews on any Airbnb listing accumulate slowly over time. This could affect the overall informativeness of safety reviews. As shown below, between 2015 and 2019, we observe a growing rank correlation between a zip code’s normalized cumulative VSR count and the zip code’s normalized official crime statistics in low-income and minority areas. This suggests that cumulative VSR do contain useful information regarding a zip code’s actual safety status, with informativeness that may increase over time. The rare occurrence of VSR further highlights the importance of cross-listing-cross-buyer and cross-listing-within-buyer spillovers, because they magnify the impact of the rare experiences and thus make the gradual accumulation of VSR more informative. In comparison, a few studies argue that online feedback systems may become less informative over time because of the aforementioned feedback bias reasons (Barach et al., 2020; Klein et al., 2009; Hui et al., 2021). Most of these studies infer feedback informativeness from the content of feedback or policy variations within the feedback system. Our approach is different, as we compare online feedback with a completely independent data source.

More broadly, our study contributes to the growing literature of information design in online platforms. Because feedback is under-provided and there is a selection against critical feedback, researchers have studied the design of feedback systems in terms of who is allowed to provide feedback (Klein et al., 2016; Mayzlin et al., 2014; Zervas et al., 2021), how to improve the authenticity of feedback (Wagman and Conitzer, 2008; Conitzer et al., 2010; Conitzer and Wagman, 2014), when the feedback is revealed to the public (Bolton et al., 2013; Fradkin et al., 2021), what kind of feedback is shown to the public, and how to aggregate historical feedback (Staats et al., 2017; Dai et al., 2018).

Interestingly, some platforms highlight critical consumer feedback, so that future consumers are aware of potential risks associated with the target seller or target product. An economic reason to do so is that many consumers on online platforms tend to be more responsive to critical feedback than to positive feedback (Chakravarty et al., 2010). Highlighting such feedback may hurt the sellers with critical feedback but divert buyers towards other sellers on the same platform with zero or not as much critical feedback. If this sorting effect generates more revenue for the platform or reinforces the platform’s reputation as far as honesty and transparency, the platform would have an incentive to highlight critical feedback.

In our setting, we offer a counterexample where a platform’s review policy has the potential to

discourage buyers from providing a specific type of critical feedback. The discouragement can occur

when a platform hides, obfuscates, or deletes critical feedback. To be clear, there are legitimate reasons

to do so in some situations: for example, a platform may find certain feedback fake, abusive, or misleading

ex post; omitting such feedback could make the information system more authentic and informative for

both buyers and sellers (Luca and Zervas, 2016; Chevalier and Mayzlin, 2006).

At the same time, theories have shown that platforms may be strategically motivated to omit certain information, including critical feedback. For instance, Kovbasyuk and Spagnolo (2018) explain why sometimes platforms seek to erase some historical bad records of sellers, in order to increase matching rates. Romanyuk and Smolin (2019) show that platforms such as Uber may seek to hide some buyer information (say, destination) prior to completing a buyer-seller match, because doing so prevents sellers from waiting for a specific type of next buyer, a behavior that would reduce the overall matching rate on the platform. These two papers differ in the direction of information withholding: the former withholds seller-relevant information from future buyers, while the latter withholds buyer-relevant information from future sellers. Both suggest that the party from whom the information is kept hidden may be worse off and the platform has an incentive to trade off their welfare loss against the welfare gain of the other side of the platform and the platform’s overall matching rate.

As shown in our counterfactual analysis, the platform may have economic incentives to downplay vicinity safety reviews, because the more guests are alerted about vicinity safety, the lower the matching rate for the whole platform. In theory, such incentives could be dominated by a sorting effect, if posting or highlighting VSR could direct buyers towards safer listings on the same platform and motivate the safer listings to increase their prices sufficiently high to compensate for the platform’s loss from a lower matching rate. Our counterfactual analysis suggests that this is not the case.

Finally, we are not the first to study safety issues regarding online short-term rental platforms. Suess et al. (2020) find that non-hosting residents with a higher emotional solidarity with Airbnb visitors are more supportive of Airbnb hosts, and residents hold different views about safety (“stranger danger”) and Airbnb depending on whether they have children in the household. Local planners pay attention to the impact of online short-term rentals on neighborhood noise, congestion, safety, and local housing markets (Gurran and Phibbs, 2017; Nieuwland and Van Melik, 2020; Kim et al., 2017). Zhang et al. (2021) show that regulations that negatively affect Uber/Lyft services may also negatively affect the demand for Airbnb. Han and Wang (2019) document a positive association between commercial house-sharing and the rise of crime rate in a city, while non-commercial house-sharing does not have this association. A number of studies find that an increase in Airbnb listings (but not reviews) relates to more neighborhood crimes in later years (Xu et al., 2019; Maldonado-Guzmán, 2020; Roth, 2021; Han et al., 2020; Filieri et al., 2021). More specifically, Airbnb clusters are found to correlate positively with property crimes such as robbery and motor vehicle theft, but negatively with violent crimes such as murder and rape. Also, Airbnb listings of the type in which guests may share a room with other unrelated guests are found to be more related to crimes (Xu et al., 2019; Maldonado-Guzmán, 2020) and to skirting local regulations (Jia and Wagman, 2020).

Our study complements this growing literature, by highlighting safety reviews, distinguishing vicinity and listing safety reviews, and documenting consumer responses to safety reviews or experiencing safety issues. Although we cannot identify the effect of Airbnb on local crime rates, our work helps quantify guest preferences regarding safety, and clarify how the interests of guests, different hosts and the platform diverge with respect to the disclosure of VSR. As shown in our counterfactuals, disclosing and highlighting VSR could encourage guests to shy away from potentially unsafe listings and disproportionately affect hosts in certain areas.


3 Background of Airbnb’s Review System

Over the past decade, short-term vacation rental markets have quickly

expanded worldwide. Airbnb, the

leading home-sharing marketplace, now offers 6.6 million active listings from over 4 million hosts in more

than 220 countries and regions.

3

As with any lodging accommodation, the specific location of a listing

can affect the experience of its guests. For instance, if a property is located in a relatively unsafe area,

crimes such as carjacking or burglary may be more likely. In Los Angeles, the number of victims of crimes

such as theft or burglary at short-term rental lodgings reportedly increased by 555% in 2017-2019.

4

As is

common in the lodging industry, guests, who may be traveling outside their home towns and are therefore

less familiar with local neighborhoods, are responsible for their own safety in the areas in which they

choose to stay. In particular, as with hotels, guests receive little to no protection from rental platforms

as far as crimes they may experience in a listing’s vicinity.

5

However, prior to making a reservation, potential guests may refer to a number of sources to gauge

the safety of a listing’s area — these sources include local news, crime maps, websites that summarize

neighborhoods

6

, and perhaps most readily linked to each listing, the listing’s reviews from prior guests.

7

Airbnb enables guests and hosts to blindly review each other after a guest’s stay.

8

In an effort to appease

hosts, and perhaps to encourage more listings across a larger number and variety of neighborhoods, a

recent Airbnb policy effective December 11, 2019 announced that, going forward, guest reviews about a

listing that include “content that refers to circumstances entirely outside of another’s control” may be

irrelevant and subject to removal.

9

This policy change implies that reviews about the safety of a listing’s

vicinity (“vicinity safety reviews” or VSR) may be deemed irrelevant and subject to removal, since such

a safety aspect is outside the control of the host. As detailed below, we compare the frequency of VSR

(as observed on Airbnb) from mid 2015 to the end of 2020 but find no evidence indicating that Airbnb

has enforced this policy post 2019/12 as far as vicinity safety is concerned. However, anecdotes suggest

that some reviews that touched on neighborhood safety have been removed.

10

The policy does not apply

3

See Airbnb’s official statistics as of December 31, 2022, available at https://news.airbnb.com/about-us/#:~:text=Airbnb%20was%20born%20in%202007,every%20country%20across%20the%20globe.

4

See, e.g., https://rb.gy/1eohbw.

5

See, e.g., https://rb.gy/nwetrv and https://rb.gy/wrqvy4.

6

See, e.g., https://www.neighborhoodscout.com/.

7

Reviews have been well established as having a potential effect on buyer decisions and sellers’ reputations, particularly

in the tourism industry (Schuckert et al., 2015). The literature also suggests that critical information in reviews in particular

can have an effect on guest decisions and be useful to platforms in distinguishing seller and product quality (Jia et al., 2021).

8

If one side does not review the other, the other’s review becomes visible after 14 days.

9

See, for example, https://rb.gy/0pu5ck and https://rb.gy/9y6bum.

10

For example, on Jan. 27, 2020, a tweet from “PatrickR0820” wrote “I used @Airbnb when we went to Atlanta for

the Panthers game. In my review I left numerous things that could be fixed as well as ‘the area that it is located in, is

pretty sketchy.’ My review and 4 other similar recent reviews were deleted because it wasn’t relevant.” Another tweet by

“AveryBrii” on May 18, 2021 stated: “@Airbnb is such a joke!!! we literally had a car stolen at the place we stayed at,

didn’t get refunded (which wahtever) & then i try to leave a review to inform others that it clearly was not a safe area

(cops told us this & other info that i tried to include) & they didn’t post.” A journalist also describes his experience on

Bloomberg Opinion: “Airbnb Took Down My Negative Review. Why?” (May 26, 2021 by Timothy L. O’Brien), accessed

at https://rb.gy/dxfkxw , on November 26, 2021.


to “listing safety reviews” (LSR), because these reviews are about the safety within the listed property,

which presumably can be more readily controlled and improved by the listing’s host.

It is difficult to pin down exactly why Airbnb adopted this new review policy in 2019/12. If Airbnb

believes that the main role of online reviews is to motivate hosts to provide high-quality services to guests,

review content regarding something outside the host’s control may not help in that regard. Anecdotes

suggest that hosts have complained about the harm they suffer from “irrelevant” reviews about the

vicinity of their listings,

11

and this policy change could be a way to address these complaints. Another

reason might be the concern of review accuracy: arguably, vicinity safety is a subjective feeling subject to

the reviewer’s prior and interpretation, and it is often difficult to prove correct or wrong. However, similar

accuracy concerns could apply to other review content, though the degree of objectiveness may vary. A

third reason may have something to do with the aspiration of being inclusive. Airbnb has advocated

for inclusive design, which is defined as “consciously designing products, services, and environments that

don’t create barriers to belonging.”

12

The same aspiration may have motivated Airbnb to adopt an anti-

discrimination policy, establish a permanent anti-discrimination team, and encourage designs and services

friendly to users with disabilities. To the extent that vicinity safety reviews are more present in low-income

or minority neighborhoods, the new review policy could be another effort to make the platform

more friendly to hosts in economically disadvantaged neighborhoods. The key question we address in

this paper is how the new policy, if fully implemented as far as VSR is concerned, would redistribute the

economic benefits and costs among hosts, guests, and the platform.

To be clear, Airbnb has adopted other methods to address neighborhood safety directly. For example,

Airbnb introduced a neighborhood support hotline in 2019/12

13

, around the same time as Airbnb adopted

the new review policy. This hotline is primarily intended to be a means for neighbors of Airbnb listings to

contact the platform in certain situations (e.g., in the event of a party taking place at a listed property).

In addition, since our main analysis sample ends in 2019/12 and we do not know how many guests that

left VSR in our sample would have used the hotline should the hotline exist at the time of the review,

we cannot predict how the hotline could counter some of the effects shown in our analysis. That being

said, hotline usage is ex post and is not visible to future guests, hence its impact on guests can be

fundamentally different from the impact of reviews visible under each listing on Airbnb.

Airbnb’s review system also allows guests to leave a 1-5 star rating by specific categories (cleanliness,

accuracy, check-in, communication, location, and value), in addition to leaving an overall rating and detailed

review. According to Airbnb’s response to a host’s question, location rating is meant to “help future

guests get a sense of the area and tends to reflect proximity to nearby destinations.”

14

Hence, location

11

Nina Medvedeva, “Airbnb’s Location Ratings as Anti-Black Spatial Disinvestment in Washington D.C.” Platypus: The

CASTAC Blog (March 16, 2021) accessed at https://rb.gy/ottzf9 .

12

See, e.g., https://rb.gy/eq7ltv.

13

See, e.g., https://rb.gy/sykoim.

14

See, e.g., https://rb.gy/qs13gh.


rating could capture many location-specific aspects such as local transit, nearby stores, neighborhood

walkability and noise, and may not be directly related to vicinity safety.

4 Data

Data of short-term rental listings. The main dataset we use has information on the set of short-term

rental listings that had been advertised on Airbnb from 2015/5 to 2019/12, and on VRBO from 2017/6

to 2019/12, in five US cities (Atlanta, Chicago, Los Angeles, New Orleans, and New York). The data was

acquired from AirDNA, a company that specializes in collecting Airbnb and VRBO data. For Airbnb

listings, this dataset includes the textual contents of all Airbnb listing reviews in those cities. We have

no access to reviews on VRBO. The original data from AirDNA extends to 2020/12 but demand for

short-term rentals has changed dramatically because of the COVID-19 pandemic, so our main analysis

uses data up to 2019/12 but we use data till 2020 to infer Airbnb’s (lack of) enforcement of its 2019/12

policy beyond 2019.

Each listing is identified by a unique property ID and comes with time-invariant characteristics such

as the listing zip code, listing’s property type (entire home, private room, shared room, or hotel room)

as well as the host’s unique identifier. Listings also have time-variant characteristics, including average

daily rate,

15

the number of reservations, days that are reserved by guests, occupancy rate,

16

number

of reviews, overall rating scores,

17

the listing’s Superhost status,

18

the listing’s guest-facing cancellation

policy,

19

the average number of words in the listing’s reviews, the number of listings in the same zip code,

and whether the listing is cross-listed on VRBO.

20

Our unit of observation is listing-month. We focus on “active listings” (listings whose calendars are

not indicated as ‘blocked’ in the dataset for an entire month), and exclude observations with an average

daily rate (ADR, akin to price per night) over $1000, as some hosts may set their rates prohibitively high in

lieu of blocking their calendars. We use regular monthly scrapes between 2015/5 and 2019/12 on Airbnb

(2017/6 to 2019/12 for VRBO). In total, the sample comprises 2,866,238 listing-month observations on

Airbnb, and 201,718 listing-month observations on VRBO.
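The sample restrictions above, together with the ADR and occupancy-rate definitions in footnotes 15 and 16, can be sketched as follows. This is a minimal illustration rather than the authors' code: the `ListingMonth` record, its field names, and the choice to keep months with no bookings (for which ADR is undefined) are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ListingMonth:
    # Hypothetical record layout; field names are illustrative only.
    revenue: float           # nightly rates plus cleaning fees for the month (USD)
    booked_nights: int       # nights reserved by guests
    available_nights: int    # nights open for booking but not reserved
    blocked_all_month: bool  # calendar marked 'blocked' for the entire month


def average_daily_rate(obs: ListingMonth) -> Optional[float]:
    """Revenue per reserved night; undefined when nothing was booked."""
    if obs.booked_nights == 0:
        return None
    return obs.revenue / obs.booked_nights


def occupancy_rate(obs: ListingMonth) -> Optional[float]:
    """Booked nights divided by booked plus available nights."""
    total = obs.booked_nights + obs.available_nights
    if total == 0:
        return None
    return obs.booked_nights / total


def in_sample(obs: ListingMonth, adr_cap: float = 1000.0) -> bool:
    """Keep active listing-months whose ADR does not exceed the cap."""
    if obs.blocked_all_month:
        return False
    rate = average_daily_rate(obs)
    # Assumption: months without bookings (no observed ADR) are kept.
    return rate is None or rate <= adr_cap
```

For instance, a listing-month with $1,500 of revenue over 10 booked nights and 20 further available nights has an ADR of $150 and an occupancy rate of one third, and it stays in the sample.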

Definition of safety reviews on Airbnb. We define two different types of safety reviews —

15

Average daily rate (ADR) is calculated by dividing the total revenue, including both nightly rates and cleaning fees,

earned by the host from reservations over a given month by the total number of nights in that month’s reservations.

16

Occupancy rate is calculated by dividing the number of booked nights by the sum of the available nights and booked

nights.

17

Overall rating scores are normalized to 0-10 range. Our dataset also includes location star ratings. Adding it as an extra

control variable does not change our main results, so we do not report it in this paper. Results are available upon request.

18

Superhost refers to a status badge related to metrics concerning a listing’s performance. Hosts who meet the following

criteria, evaluated quarterly, receive a Superhost designation: (i) Completed at least 10 reservations in the past 12 months;

(ii) maintained a high response rate and low response time; (iii) received primarily 5-star reviews; (iv) did not cancel guest

reservations in the past 12 months.

19

Cancellation policy could be strict, moderate, or flexible. For simplicity, we use a dummy variable to indicate whether a

listing’s cancellation policy is strict or not.

20

Only entire-home listings can be listed on both Airbnb and VRBO.


listing safety reviews (LSR) and vicinity safety reviews (VSR). LSR are those reviews that describe

issues pertaining to safety within a listing (e.g., “the listing is unsafe because there are fire hazards”,

“the listing is unsafe because of the slippery tub”, or “we saw mice in the kitchen three times during

our stay”). VSR contain information pertaining to the safety of the nearby vicinity or neighborhood

of the listing (e.g., “the neighborhood is not safe”, “shady neighborhood”, or “unsafe area”). While

there is considerable research regarding the use of machine learning for automated content analysis, these

methods typically require a large number of hand-labeled examples for training. We instead use a lexicon

approach due to its simplicity and transparency. Lexicons are also found to have high levels of precision

as compared to machine learning approaches (Zhang et al., 2014; Hutto and Gilbert, 2014), and have

been used extensively in the literature (Monroe et al., 2008; Dhaoui et al., 2017).

To identify a suitable set of keywords, we use an iterative approach, starting with terms such as

“unsafe,” “dangerous,” and “scary” and all of their synonyms, to obtain an initial keyword set; next, we

manually inspect reviews containing such keywords so as to identify additional keywords. We then select

keywords based on the accuracy of safety reviews.

More specifically, we conduct two iterations of manual labeling. In the first iteration, three research

assistants (comprising both male and female and different races) labeled 1.4K reviews that were generated

from the Lexicon approach algorithm with the initial keyword set for both LSR and VSR. While labeling,

for each review the reviewers identified (i) whether the review pertains to neighborhood and/or listing

safety, (ii) whether the review has a negative sentiment with respect to neighborhood and/or listing

safety, and (iii) three specific keywords that supported the reviewer’s decision in (i) and (ii). With these

human-labeled keywords, we obtain an updated list of vicinity and listing safety keywords such that the

percentage of critical reviews regarding vicinity safety (listing safety) in the 1.3K sample with such a

human-selected keyword is greater than 0% (10%).

In the second iteration of labeling, two research assistants (male and female) of different races labeled

3.1K reviews that were generated from the Lexicon approach algorithm with the updated keyword set

for both LSR and VSR, such that 5 reviews associated with each keyword were randomly selected. In

this iteration, reviewers labeled whether each review pertains to negative sentiment about vicinity and/or

listing safety. The final set of keywords is the one where each vicinity safety (listing safety) keyword has

a percentage of negative-sentiment vicinity safety (listing safety) reviews greater than or equal to 60%

from both reviewers’ second-iteration labeling results. After two iterations, we expanded the list to 41

vicinity safety keywords and 50 listing safety keywords, as delineated in Appendix Table A1.

21

The keyword lists developed above are not the only inputs we use to define vicinity or listing safety

reviews. As far as VSR, to improve precision and to ensure that the text is indeed describing issues

21

Most of the keywords appear relatively infrequently, and removing any one of them alone has little effect on the results.

For example, one may argue that “government housing” suggests a low-income area rather than vicinity safety issues.

Including it in our vicinity safety keyword list would only identify three more vicinity safety reviews and removing the

keyword has no qualitative impact on the results.


pertaining to the safety of a listing’s vicinity and not other aspects of a listing, we identified a list of 24

location keywords that tend to indicate a statement about the surrounding area (e.g., “neighborhood”,

“area”, “outside”) in Appendix Table A1. We then categorized the matching reviews into those in which

the vicinity safety keyword occurred within 20 words of a location keyword as vicinity safety reviews,

and those in which the listing safety keyword occurred outside of the 20-word context as listing safety

reviews.

22

Next, we selected 13 ‘negative’ keywords, and filtered out double-negative reviews where the

keyword occurs within 5 words of a safety keyword.
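The windowed matching rule for VSR described above can be sketched as follows. This is only an illustration under stated assumptions: the three small keyword sets are hypothetical stand-ins (the full lists are in Appendix Table A1, and the 13 ‘negative’ keywords are not enumerated in this section), the tokenizer is a simple regular expression, and multi-word keywords are not handled.

```python
import re

# Hypothetical subsets, for illustration only.
VICINITY_KEYWORDS = {"unsafe", "homeless", "yelling", "uneasy"}
LOCATION_KEYWORDS = {"neighborhood", "area", "outside"}
NEGATION_KEYWORDS = {"not", "never", "no"}  # assumed examples


def tokenize(text):
    """Lowercase the review and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def is_vicinity_safety_review(review, loc_window=20, neg_window=5):
    """Flag a review if a safety keyword lies within `loc_window` words of a
    location keyword and no negation keyword lies within `neg_window` words."""
    tokens = tokenize(review)
    loc_pos = [i for i, t in enumerate(tokens) if t in LOCATION_KEYWORDS]
    neg_pos = [i for i, t in enumerate(tokens) if t in NEGATION_KEYWORDS]
    for i, token in enumerate(tokens):
        if token not in VICINITY_KEYWORDS:
            continue
        near_location = any(abs(i - p) <= loc_window for p in loc_pos)
        negated = any(abs(i - p) <= neg_window for p in neg_pos)
        if near_location and not negated:
            return True
    return False
```

Under these assumptions, “The neighborhood is unsafe at night.” is flagged, whereas “We never felt unsafe in this area.” is filtered out by the negation window and “The tub was unsafe and slippery.” is not flagged because no location keyword is nearby.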

Overall, our approach resulted in 11.8k matched VSR and 12.8k matched LSR across the 5 sample

cities. In total, they account for 0.25% and 0.27% of all the observed Airbnb reviews respectively. From

2015/5 to 2019/12, only 4.43% of listings ever had any VSR, and only 8.49% of listings ever had any safety

reviews (VSR or LSR).

As shown in Appendix Figures A1 and A2, the top matching vicinity safety keywords are “unsafe”

(4,519), “homeless” (3,398), “yelling” (854), and “uneasy” (733), and the top matching listing safety

keywords are “worst” (1,803), “mold” (1,350), “stained” (1,172), and “filthy” (1,135). As an additional

validation check, we sampled several thousand matches at random, and manually labeled them as relevant

or not, finding 78.21% and 75.64% accuracy for vicinity safety keywords and listing safety keywords,

respectively.

23

The mislabeled data often used figurative language (“scary how perfect this neighborhood

is”) or used safety words in other contexts (e.g., “watched a scary movie on Netflix”). While any such

method will be imperfect, we did not find any evidence suggesting that the error rates were systematically

biased for some neighborhoods over others. However, we did restrict our keywords to English, so the

method will be less effective in areas with many non-English reviews.

To check whether the safety reviews defined above are indeed critical feedback as we intend to identify,

we employ a pre-trained NLP model from Hugging Face to determine the sentiment score of all reviews

24

. According to the analysis, the overall average sentiment score across all available reviews is 0.79.

Specifically, VSR show a relatively neutral average sentiment score of 0.06, while sentences containing

VSR safety keywords tend to have a negative average sentiment score of -0.31. In contrast, the non-VSR

reviews have an average sentiment score matching the overall average of 0.79. On the other hand, LSR

demonstrate a comparatively lower average sentiment score of -0.41, and sentences with safety keywords

within the LSR category have the most negative average sentiment score of -0.76. In comparison, the

22

While the 20-word window is arbitrary, a sensitivity analysis suggests no qualitative difference when using a slightly

longer or shorter window. Moreover, the average review had roughly 50 words, so this seemed to restrict to the 1-2 sentences

around the keyword match.

23

This indicates a 21.79% false-positive error rate for vicinity safety reviews (24.36% for listing safety reviews). Since our

lexicon approach aims to minimize the false-positive rate while allowing false negatives, the safety reviews identified by this

approach tend to make the estimated impact of safety reviews more conservative than the true effect.

24

The utilized model is a fine-tuned checkpoint of DistilBERT-base-uncased, accessible at

https://huggingface.co/distilbert-base-uncased-finetuned-sst-2-english. It demonstrates a noteworthy accuracy of 91.3% on the

development set. The sentiment scoring system ranges from -1 to 1, where a score of -1 indicates an extremely negative sentiment,

and a score of 1 indicates an extremely positive sentiment.


non-LSR reviews have an average sentiment score again aligning with the overall average of 0.79. These

patterns suggest that our Lexicon approach has successfully captured the negative sentiment when guests

comment on listing or vicinity safety issues during their stay.

Official crime and demographic statistics. A second dataset we collect covers official crime

records from databases tracking crimes in Chicago

25

, New Orleans

26

, New York City

27

, Atlanta

28

, and

Los Angeles.

29

These databases cover different types of crimes, including property-related crimes and

violent crimes. In terms of the geographical granularity of crimes, we consider crime events at the zip

code level. We also obtain median income and other demographic information at the zip code level from

2014, one year before our Airbnb sample period begins, from the United States Census Bureau

30

. We

make the assumption that the income and demographic information did not change significantly over

our sample period. Throughout the paper, we refer to a zip code as high-income (H) or low-income (L)

according to whether its average income is above or below the median of the city in which it is located. Similarly,

we refer to a zip code as minority (M) or white (W) according to whether its percentage of minorities in the

population is above or below the city median.
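A minimal sketch of this within-city median split is given below. The function name, the input layout (a dictionary keyed by city and zip code), and the treatment of a zip code exactly at the city median (assigned to the lower group) are assumptions for illustration; the text does not specify how ties are handled.

```python
from collections import defaultdict
from statistics import median


def label_by_city_median(values, high_label, low_label):
    """Label each (city, zip) as high or low relative to its own city's median.

    `values` maps (city, zip_code) -> a zip-level statistic such as income
    or minority share. Ties at the median receive `low_label` (an assumption).
    """
    by_city = defaultdict(list)
    for (city, _zip_code), value in values.items():
        by_city[city].append(value)
    cutoffs = {city: median(vals) for city, vals in by_city.items()}
    return {
        key: (high_label if value > cutoffs[key[0]] else low_label)
        for key, value in values.items()
    }
```

Calling `label_by_city_median(income, "H", "L")` on zip-level income would yield the high-income/low-income split, and the same call on minority shares with labels `"M"` and `"W"` would yield the minority/white split.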

Variable Definition. Above all, Appendix Table A2 defines the key variables used in this paper,

including listing attributes (such as price, occupancy rate, safety reviews, and ratings) and neighborhood

attributes (such as income, population, and crime statistics by zip code).

Summary of VSR and LSR on Airbnb. Table 1 summarizes the data at the listing-month level,

where vicinity safety (VS) Airbnb listings are defined as observations that have a positive number of

vicinity safety reviews (VSR) before the reporting month, while “normal” Airbnb listings do not have

any VSR before the reporting month. As the table indicates, about 4% of the total observations are

VS listings. On average, VS listings have higher occupancy rates, a higher number of reservations, a

higher fraction of Superhosts, and a higher number of reviews than normal listings. In contrast, the

nightly rates and overall rating of VS listings are lower on average than normal listings. The mean

number of cumulative VSR (aggregated up to the reporting month) is 0.06 across all Airbnb listings,

and the mean number of cumulative listing safety reviews (LSR) is 0.06. Appendix Figures A3 and

A4 demonstrate the distribution of VS keywords for four groups of zip codes (high-income, low-income,

white, and minority). Comparing high-income with low-income (and white with minority) groups, it

appears that the low-income (minority) group dominates the volume of VSR.

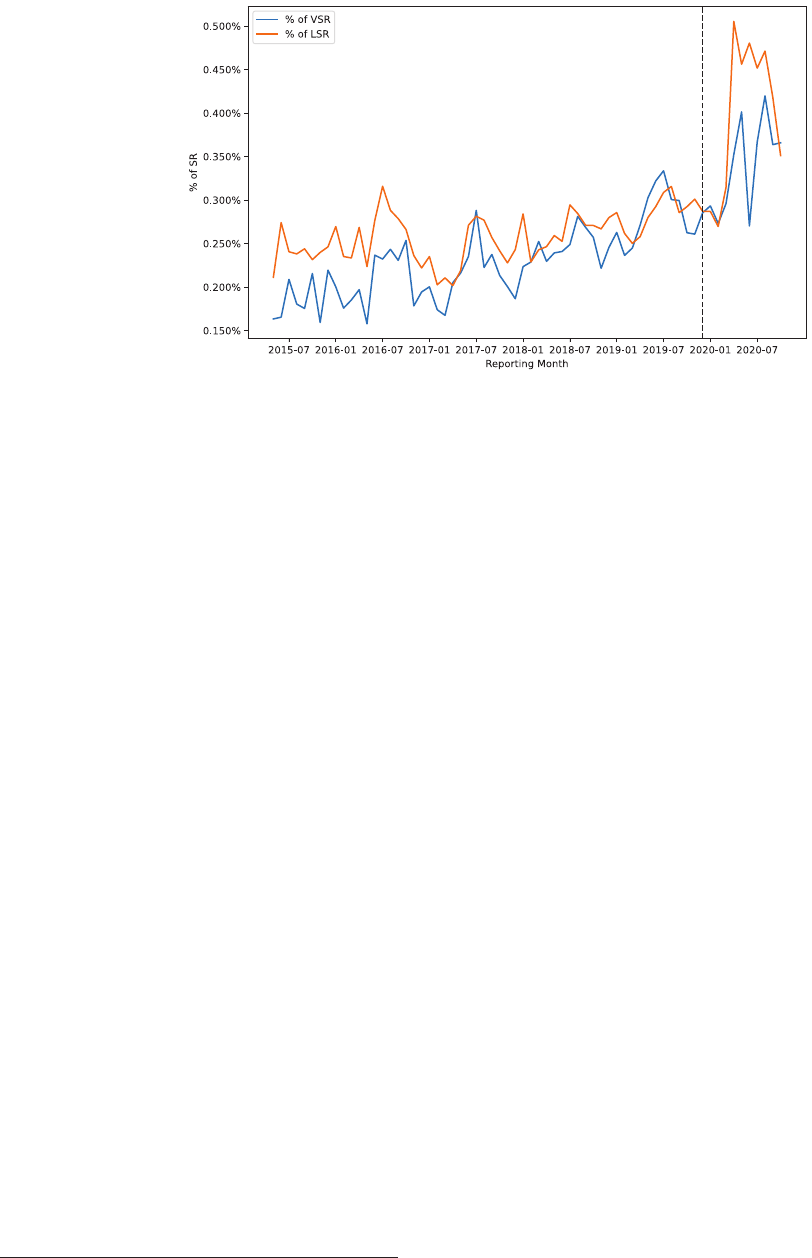

Did Airbnb enforce its new review policy after 2019/12? To infer whether Airbnb has enforced

its 2019/12 policy as far as vicinity and listing safety is concerned, Figure 1 displays the percentage of

VSR and LSR on Airbnb, as identified by our Lexicon method, from 2015/7 to 2020/12. While both VSR

25

Official crime data in Chicago: https://rb.gy/atjsss .

26

Official crime data in New Orleans: https://rb.gy/4vue82 .

27

Official crime data in New York City: https://rb.gy/iwrwp2 .

28

Official crime data in Atlanta: https://rb.gy/96txbl .

29

Official crime data in Los Angeles: https://rb.gy/tebnla .

30

See, e.g. , https://www.census.gov/data.html.


                                        All listings          VS listings           Normal listings
                                        (N=2,866,238)         (N=126,868)           (N=2,739,370)
VARIABLES                               mean       p50        mean       p50        mean       p50
occupancyrate                           0.56       0.64       0.68       0.78       0.56       0.64
occupancyrate dummy                     0.85       1.00       0.95       1.00       0.85       1.00
adr                                     164.69     125.51     134.15     106.31     166.10     126.67
No. of reservations                     3.77       3.00       5.76       5.00       3.68       3.00
No. of reservationdays                  14.16      14.00      18.56      21.00      13.95      14.00
lag VSR cumu dummy                      0.04       0.00       1.00       1.00       0.00       0.00
lag LSR cumu dummy                      0.05       0.00       0.20       0.00       0.04       0.00
lag VSR cumu                            0.06       0.00       1.34       1.00       0.00       0.00
lag LSR cumu                            0.06       0.00       0.26       0.00       0.05       0.00
lag VS listing radius pct               0.07       0.04       0.10       0.07       0.07       0.03
safety score (1-10, constructed by us)  4.96       5.09       2.83       2.33       5.06       5.23
ratingoverall (1-10)                    9.18       9.60       9.09       9.20       9.18       9.60
review utd                              33.71      15.00      93.02      70.00      30.96      14.00
No. of listing zip                      540.67     449.00     554.66     481.00     540.02     447.00
cross listing                           0.02       0.00       0.03       0.00       0.02       0.00
superhost                               0.23       0.00       0.26       0.00       0.23       0.00
strict cp                               0.50       0.00       0.58       1.00       0.49       0.00
ave wordcount cumu review               53.83      50.43      57.49      53.91      53.66      50.20
median income zip                       57,187     50,943     42,645     34,432     57,861     51,427
population zip                          48,158     45,747     42,514     36,654     48,419     46,025
white pct zip                           0.53       0.59       0.41       0.38       0.53       0.60
h zip                                   0.52       1.00       0.29       0.00       0.53       1.00
w zip                                   0.60       1.00       0.44       0.00       0.61       1.00
crime cumu                              19,435     9,650      31,230     14,205     18,889     9,475

Table 1: Summary Statistics of Airbnb Listings (2015/7-2019/12, unit of observation=listing-month)

and LSR have increased drastically after 2020/3, neither shows any discontinuous jumps from 2019/12

to 2020/2 as compared to the month-to-month fluctuation before 2019/12. The increase post 2020/3 is

likely driven by guests’ high attention to safety issues due to the pandemic rather than Airbnb’s change

of review policy in 2019/12, because that policy, if significantly enforced, should have led to a differential

reduction of VSR relative to LSR.

To double check, we have also examined the number of VSR/LSR removed in each quarter, by

comparing the reviews available on Airbnb from time to time. We find that almost all of the removed

VSR/LSR were from inactive listings. In short, we conclude that no evidence suggests Airbnb has enforced

its 2019/12 policy for VSR up to the end of 2020.

How do VSR correlate with official crime statistics? We also test the rank correlation between

the official crime records and VSR. Specifically, we use the percentile rank of normalized crime records

in each zip code-month within each city, calculated as the number of reported crime cases in a month,

divided by the size of the population in that zip code. For each month, we rank the normalized crime

data within each city, and determine the percentile crime rank of the zip code for that month. For

VSR, we use the percentile rank of the number of cumulative VSR in the zip code up to the reporting


Figure 1: Percentage of Vicinity Safety Reviews (VSR) and Listing Safety Reviews (LSR) on Airbnb Over Time

month.

31

We then test the percentile rank correlation index between the crime records and VSR in each

month, resulting in the time-series correlation trends depicted in Figure 2, which illustrates the correlation

trends for the four different groups of zip codes (high-income, low-income, white, and minority). Figure 2

indicates that the correlation in low-income and minority groups exhibits an increasing trend, suggesting

that the percentile rank of VSR in a zip code is more likely to reflect the actual crime reports in the zip

code over time in these areas.
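The rank-correlation calculation for a single city-month can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the percentile-rank convention (share of zip codes with a value at or below the given one) and the use of a Pearson correlation on those ranks are assumptions, as are the function names.

```python
import math


def percentile_ranks(values):
    """Share of observations at or below each value (ties share a rank)."""
    n = len(values)
    return [sum(1 for other in values if other <= v) / n for v in values]


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return float("nan")  # undefined when either series is constant
    return cov / math.sqrt(var_x * var_y)


def rank_correlation(crime_counts, populations, cumulative_vsr):
    """Correlate percentile ranks of per-capita crime and cumulative VSR
    across the zip codes of one city in one month."""
    crime_per_capita = [c / p for c, p in zip(crime_counts, populations)]
    return pearson(percentile_ranks(crime_per_capita),
                   percentile_ranks(cumulative_vsr))
```

Repeating this calculation month by month, separately for each group of zip codes, would produce a time series of the kind plotted in Figure 2.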

5 Reduced-form Effects of Safety Reviews

This section presents reduced-form evidence from listing-level

and guest-level analyses. Listing-level

analysis documents the within-listing-cross-buyer effects of safety reviews as well as the cross-listing-cross-buyer

effects of VSR. Guest-level analysis captures the cross-listing-within-buyer effects of VSR.

5.1 Listing-Level Analysis

Baseline results. We begin by assessing the effects of VSR and LSR by listing-month. Our hypothesis

is that if potential guests view VSR and LSR as a proxy for safety around or within a listing, such reviews

would reduce the guests’ willingness to book the listing. Our base specification is given by:

$$y_{j,t} = \alpha_j + \alpha_{k,t} + \delta X_{j,t} + \beta_1 Crime_{j,t-1} + \beta_2 LSR_{j,t-1} + \beta_3 VSR_{j,t-1} + \beta_4 VSRADIUS_{j,t-1} + \epsilon_{j,t}, \quad (1)$$

31

Due to data limitations, we assume that both records begin with clean slate (0 records) as of the beginning of our

dataset.


Figure 2: Correlation between the rank of normalized crime flow and the normalized total VSR

where the subscript $j,t$ denotes a listing $j$-month $t$ observation, $Crime_{j,t-1}$ is a log transformed variable that indicates

the normalized number of cumulative official crime reports since the start of the sample period for the

zip code where listing $j$ is located, $LSR_{j,t-1}$ and $VSR_{j,t-1}$ are two dummy variables that equal 1 if the

listing has at least one LSR and VSR, respectively, before month $t$, $VSRADIUS_{j,t-1}$ is the percentage of

listings that have at least one VSR within a 0.3-mile radius of listing $j$ prior to month $t$, $X_{j,t}$ are listing-level

controls (logged except for dummy variables), including the number of reviews, overall ratings,

cancellation policy, number of listings in the same zip code, cross-listing status (i.e., whether the listing

is also listed on VRBO), and whether the listing is hosted by a Superhost. The dependent variable $y_{j,t}$

is either the log of listing $j$’s average daily rate (ADR) in month $t$, or the log of listing $j$’s monthly

occupancy rate (calculated as log of 1 plus the occupancy rate).

32

Listing and City–year-month fixed effects are denoted by $\alpha_j$ and $\alpha_{k,t}$, respectively, where the city of listing $j$ is denoted by $k$. Standard

errors are clustered by Airbnb property ID. The primary assumption is that, within a listing, the presence

and timing of safety reviews are correlated with the true safety condition around or inside the listing

and do not reflect selective reporting, fake reviews, or other strategic reasons once we control for other

time-varying listing attributes.
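The construction of the two dependent variables, including the ADR extrapolation described in footnote 32, can be sketched as follows. This is an illustrative reading of the text, not the authors' code: months are represented as integer indices, the function names are hypothetical, and ties between equally distant months are broken by dictionary order, which the paper does not specify.

```python
import math
from typing import Dict, Optional


def impute_adr(adr: Dict[int, Optional[float]],
               list_price: Dict[int, Optional[float]]) -> Dict[int, Optional[float]]:
    """Fill missing monthly ADR with (mean ADR-to-listing-price ratio) x listing price."""
    ratios = [adr[m] / list_price[m] for m in adr
              if adr[m] is not None and list_price.get(m)]
    priced_months = [m for m, price in list_price.items() if price]
    if not ratios or not priced_months:
        return dict(adr)  # nothing to extrapolate from
    mean_ratio = sum(ratios) / len(ratios)
    filled = dict(adr)
    for month, value in adr.items():
        if value is None:
            # Use this month's listing price if present, else the nearest month's.
            nearest = min(priced_months, key=lambda p: abs(p - month))
            filled[month] = mean_ratio * list_price[nearest]
    return filled


def log_occupancy(occupancy_rate: float) -> float:
    """Dependent variable for occupancy: log of one plus the occupancy rate."""
    return math.log(1.0 + occupancy_rate)


def log_adr(adr_value: float) -> float:
    """Dependent variable for price: log of the average daily rate."""
    return math.log(adr_value)
```

For example, a listing with ADRs of 90 and 110 against a listing price of 100 in two booked months has a mean ratio of 1.0, so a month with no bookings and a listing price of 120 would be assigned an ADR of 120.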

Our main specifications in Table 2 indicate that both VSR and LSR significantly decrease a listing’s

price (ADR) and occupancy. Specifically, for an average Airbnb listing in our sample, having any VSR is

associated with a 1.82% reduction in the listing’s monthly occupancy rate and a 1.48% reduction in its

32

Some listing-month observations have an occupancy rate of 0 and consequently are missing an average reserved daily

rate in the dataset for those months, though the dataset does offer a separate “listing price” (i.e., a base rate) for those

listings. To extrapolate the ADR of these listings in the months in which they are missing, we calculate the mean ratio of

their ADR to their listing price in the months in which they are available, and multiply this average by the listing price in

the missing months (if available, or by using the listing price from the nearest month in which it is available).


average price per reserved night; having an LSR is associated with a 2.58% drop in occupancy and 1.52% in

price. LSR thus have a larger effect on price and occupancy than VSR, possibly because some prospective

guests have a specific geographic area (e.g., neighborhood) in mind, regardless of safety issues concerning

that area, whereas LSR describe safety issues that pertain to the listing itself. The percentage of listings

with VSR within a 0.3-mile radius is associated with lower prices and lower occupancy, suggesting that

guests may also infer vicinity safety from the VSR of nearby listings.
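As a reading aid (not part of the original analysis): in a log-linear specification, a dummy coefficient $\beta$ implies a proportional change of $e^{\beta} - 1$ in the outcome, which is close to $\beta$ itself when $\beta$ is small. Applying this to the log ADR coefficients reported in Column 2 of Table 2 gives values nearly identical to the percentages quoted above:

```latex
\begin{align*}
\text{VSR: } & 100\,(e^{-0.0148} - 1) \approx -1.47\% \quad (\text{vs. } 100\,\beta_3 = -1.48\%),\\
\text{LSR: } & 100\,(e^{-0.0152} - 1) \approx -1.51\% \quad (\text{vs. } 100\,\beta_2 = -1.52\%).
\end{align*}
```

Because the occupancy outcome is the log of one plus the occupancy rate, the same approximation there applies to one plus the occupancy rate rather than to the occupancy rate itself.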

                               (1)                   (2)
SAMPLE                         whole                 whole
MODEL                          OLS                   OLS
VARIABLES                      log occupancy rate    log adr
lag VSR cumu dummy             -0.0182***            -0.0148***
                               (0.00140)             (0.00219)
lag LSR cumu dummy             -0.0258***            -0.0152***
                               (0.00135)             (0.00210)
lag VS listing radius pct      -0.00859***           -0.00872**
                               (0.00253)             (0.00390)
lag log crime cumu norm        0.0693***             -0.0508***
                               (0.00826)             (0.0130)
lag log review utd             0.00420***            0.0117***
                               (0.000415)            (0.000678)
log No. of listing zip         -0.0212***            0.0146***
                               (0.00185)             (0.00289)
log rating overall             0.0257***             -0.00240
                               (0.00128)             (0.00200)
superhost                      0.0175***             0.00817***
                               (0.000586)            (0.000845)
cross listing                  0.0311***             -0.00564
                               (0.00278)             (0.00384)
strict cp                      0.000601              0.0123***
                               (0.000803)            (0.00126)
                               (0.0119)              (0.0185)
Observations                   2,866,238             2,866,238
R-squared                      0.559                 0.928

Note: *** p < 0.01, ** p < 0.05. All regressions control Time*City FE and Property ID FE, with standard errors
clustered by Property ID. The variable crime cumu is normalized by the population.

Table 2: Main Results of Reduced-form Listing-level Analysis of Airbnb Listings

In contrast, normalized official crime records are associated with lower prices but higher occupancy. A

potential explanation is that hosts are aware of safety issues in the areas of their listings, and proactively

lower their rates when their listings are located in relatively unsafe areas. These lower prices attract

more guest bookings, perhaps either because guests tend not to seek information about crimes in the

neighborhood or because they prioritize price. In particular, for the average Airbnb listing in our sample,

given a 1% increase in the normalized official crime records, the daily rate is 0.05% lower whereas the

occupancy rate is 0.07% higher.
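
As a worked illustration of how the coefficients in Table 2 map to the percentages quoted in the text, the following minimal sketch converts a coefficient on a 0/1 regressor in a log-outcome regression into a percentage change, using both the small-coefficient approximation (100·β), which reproduces the figures in the text, and the exact transformation 100·(exp(β) − 1). The function names are hypothetical and introduced only for this illustration, and the sketch assumes the outcome enters as a plain logarithm; only the coefficient values are taken from Table 2.

```python
import math


def approx_pct_change(beta: float) -> float:
    """Small-coefficient approximation: 100 * beta."""
    return 100.0 * beta


def exact_pct_change(beta: float) -> float:
    """Exact % change implied by a 0/1 regressor when the outcome is in logs."""
    return 100.0 * (math.exp(beta) - 1.0)


def elasticity_pct_change(beta: float, pct_increase: float) -> float:
    """Approximate % change in the outcome when a logged regressor rises by pct_increase %."""
    return beta * pct_increase


# Coefficients copied from Table 2: (log occupancy rate, log ADR).
DUMMY_COEFS = {
    "VSR": (-0.0182, -0.0148),
    "LSR": (-0.0258, -0.0152),
}
CRIME_COEFS = (0.0693, -0.0508)

if __name__ == "__main__":
    for label, (b_occ, b_adr) in DUMMY_COEFS.items():
        print(
            f"{label}: occupancy {approx_pct_change(b_occ):.2f}% approx / "
            f"{exact_pct_change(b_occ):.2f}% exact; "
            f"ADR {approx_pct_change(b_adr):.2f}% approx / "
            f"{exact_pct_change(b_adr):.2f}% exact"
        )
    occ, adr = (elasticity_pct_change(b, 1.0) for b in CRIME_COEFS)
    print(f"1% more normalized crime: occupancy {occ:+.2f}%, ADR {adr:+.2f}%")
```

For coefficients of this size the two conversions differ only in the second decimal place, so the approximation used in the text is innocuous here.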

Robustness. Our first robustness check tries to separate the extensive and intensive margins. Column 1

of Table 3 considers as the dependent variable a dummy that equals 1 when a listing’s occupancy

rate is positive and 0 otherwise. It reports a positive coefficient on $Crime_{j,t-1}$, suggesting that the

variable $Crime_{j,t-1}$ not only describes the relative crime status of a zip code, but may also capture the

relative guest traffic to the area, where areas with relatively high guest traffic (e.g., downtown areas) tend

to have a higher number of reported (normalized) crimes.

Comparing the coefficients on VSR and LSR for the whole-sample specifications (Table 2) to the

conditional sample with positive occupancy rates (Columns 2 and 6 of Table 3), we find that the

coefficients are similar but have somewhat higher magnitudes for the whole sample. One exception is that the

coefficient on $Crime_{j,t-1}$ becomes negative after we condition the sample on listings with any positive

occupancy rate, suggesting that the positive coefficient on this variable in the whole sample is driven by

the extensive margin only, whereas the intensive margin is consistent with the prior that bookings tend

to decline for listings located in a zip code with higher crime statistics over time.
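
The two margins discussed above can be sketched as follows. This is a minimal illustration under stated assumptions, not the estimation code: the record type `ListingMonth`, its field names, and the toy values are all hypothetical and do not reflect the AirDNA schema. The extensive margin uses a 0/1 outcome for any positive occupancy; the intensive margin restricts the sample to listing-months with positive occupancy.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ListingMonth:
    listing_id: str        # hypothetical field names, not the AirDNA schema
    month: str             # e.g. "2016-03"
    occupancy_rate: float  # share of available nights that were reserved


def extensive_margin_outcome(row: ListingMonth) -> int:
    """Dummy outcome: 1 if the listing had any reservation that month, else 0."""
    return 1 if row.occupancy_rate > 0 else 0


def intensive_margin_sample(rows: List[ListingMonth]) -> List[ListingMonth]:
    """Conditional sample: keep only listing-months with positive occupancy."""
    return [row for row in rows if row.occupancy_rate > 0]


# Toy panel for illustration only; the values are made up.
panel = [
    ListingMonth("a", "2016-01", 0.00),
    ListingMonth("a", "2016-02", 0.35),
    ListingMonth("b", "2016-01", 0.80),
    ListingMonth("b", "2016-02", 0.00),
    ListingMonth("c", "2016-01", 0.10),
]

dummies = [extensive_margin_outcome(row) for row in panel]
conditional = intensive_margin_sample(panel)
share_positive = len(conditional) / len(panel)
```

In Table 3, the conditional sample contains 2,441,566 of the 2,866,238 listing-months, or roughly 85% of the whole sample.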

                                    (1)             (2)             (3)             (4)             (5)             (6)             (7)
SAMPLE                              whole           occ>0           review utd<=13  review utd>13   whole           whole           whole
MODEL                               OLS             OLS             OLS             OLS             OLS             OLS             OLS

VARIABLES                           occupancy       log             log             log             log             log             log
                                    rate dummy      occupancy rate  occupancy rate  occupancy rate  occupancy rate  occupancy rate  occupancy rate

lag VSR cumu dummy                  -0.0132***      -0.0129***      -0.0212***      -0.0100***      -0.0180***      -0.0155***      -0.0152***
                                    (0.00155)       (0.00119)       (0.00541)       (0.00146)       (0.00140)       (0.00140)       (0.00139)
lag LSR cumu dummy                  -0.0131***      -0.0213***      -0.0362***      -0.0173***      -0.0251***      -0.0228***      -0.0224***
                                    (0.00153)       (0.00114)       (0.00458)       (0.00142)       (0.00135)       (0.00136)       (0.00136)
lag VS listing radius pct           -0.0100**       -0.00575**      -0.00760**      -0.00334        -0.00864***     -0.00848***     -0.00847***
                                    (0.00416)       (0.00238)       (0.00378)       (0.00342)       (0.00253)       (0.00263)       (0.00263)
lag log crime cumu norm             0.180***        -0.0167**       0.219***        0.0118          0.0693***       0.0652***       0.0653***
                                    (0.0123)        (0.00734)       (0.0150)        (0.0106)        (0.00826)       (0.00874)       (0.00874)
lag log ave wordcount cumu review                                                                   -0.00890***
                                                                                                    (0.000744)
lag r sentiL cumu ave                                                                                               0.0251***
                                                                                                                    (0.00215)
lag r sentiN cumu ave                                                                                                               0.0228***
                                                                                                                                    (0.00120)
R-squared                           0.420           0.499           0.565           0.522           0.559           0.560           0.560

VARIABLES                                           log adr         log adr         log adr         log adr         log adr         log adr

lag VSR cumu dummy                                  -0.0126***      -0.00411        -0.0110***      -0.0150***      -0.0145***      -0.0146***
                                                    (0.00201)       (0.00726)       (0.00231)       (0.00219)       (0.00219)       (0.00219)
lag LSR cumu dummy                                  -0.0112***      -0.00152        -0.0124***      -0.0158***      -0.0150***      -0.0152***
                                                    (0.00189)       (0.00705)       (0.00218)       (0.00210)       (0.00210)       (0.00210)
lag VS listing radius pct                           -0.00848**      0.00263         -0.0140***      -0.00868**      -0.00993**      -0.00992**
                                                    (0.00337)       (0.00620)       (0.00478)       (0.00390)       (0.00387)       (0.00387)
lag log crime cumu norm                             -0.000974       -0.0600**       -0.0242         -0.0508***      -0.0490***      -0.0490***
                                                    (0.0120)        (0.0240)        (0.0168)        (0.0130)        (0.0135)        (0.0135)
lag log ave wordcount cumu review                                                                   0.00645***
                                                                                                    (0.00106)
lag r sentiL cumu ave                                                                                               0.00572*
                                                                                                                    (0.00293)
lag r sentiN cumu ave                                                                                                               -0.00124
                                                                                                                                    (0.00163)
R-squared                                           0.943           0.931           0.937           0.928           0.931           0.931

Observations                        2,866,238       2,441,566       1,370,655       1,495,583       2,866,238       2,655,504       2,655,504

Note: *** p < 0.01, ** p < 0.05, * p < 0.1. All regressions control Time*City FE and Property ID FE, with standard errors

clustered by Property ID.

Table 3: Robustness Checks for Reduced-form Listing Level Analysis of Airbnb Listings

We conduct a number of additional checks. First, we split the sample by whether a listing has an

above- or below-median number of reviews in a given month (median is 12), as a proxy for whether the

listing is in its early or later “stage” of taking guest reservations, since only staying guests can post a

review.³³ Another motivation for this partition is that prospective guests are more likely to notice safety

reviews (both VSR and LSR) when listings have a lower number of reviews. Indeed, Columns 3 and 4 of

Table 3 report that in the subsample of listings with 13 or fewer reviews, the negative effects of having any

VSR and LSR on occupancy rate (2.12% for VSR and 3.62% for LSR) are higher than the corresponding

negative effects for listings with more than 13 reviews (1.00% for VSR and 1.73% for LSR). However,

Columns 7 and 8 indicate that as far as listings’ daily rates are concerned, this comparison is reversed,

possibly because hosts of newer listings may still be in the process of identifying their pricing for those

listings. Second, we add additional controls for the average word count of a listing’s reviews.³⁴ As

Columns 5 and 9 of Table 3 indicate, the results do not qualitatively change from our main specifications

when incorporating the additional control.
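
The review-count partition can be sketched as below. This is an illustrative sketch only: the tuple layout, the function name `split_by_review_count`, and the toy rows are assumptions made for this example, while the cutoff of 13 reviews and the observation counts are taken from Table 3. Each listing-month is assigned to exactly one subsample, so a listing can move from the low-review to the high-review subsample as it accumulates reviews.

```python
from typing import Dict, List, Tuple

REVIEW_THRESHOLD = 13  # cutoff shown in Table 3 (review utd <= 13 vs. > 13)

# (listing_id, month, cumulative reviews to date); hypothetical layout.
Row = Tuple[str, str, int]


def split_by_review_count(
    rows: List[Row], threshold: int = REVIEW_THRESHOLD
) -> Dict[str, List[Row]]:
    """Assign each listing-month to exactly one subsample by reviews to date."""
    split: Dict[str, List[Row]] = {"low": [], "high": []}
    for row in rows:
        key = "low" if row[2] <= threshold else "high"
        split[key].append(row)
    return split


# Toy rows for illustration only; the values are made up.
rows = [
    ("a", "2016-01", 5),
    ("a", "2016-06", 13),
    ("a", "2016-12", 20),  # the same listing moves to the other subsample over time
    ("b", "2016-01", 40),
]
subsamples = split_by_review_count(rows)

# Observation counts reported in Table 3 for the two subsamples and the whole sample.
N_LOW, N_HIGH, N_WHOLE = 1_370_655, 1_495_583, 2_866_238
partition_is_exhaustive = (N_LOW + N_HIGH == N_WHOLE)
```

The observation counts in Columns 3 and 4 of Table 3 sum to the whole-sample count, consistent with the two subsamples partitioning the listing-months.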

Heterogeneous effects. We next explore a number of heterogeneous effects. Table A3 provides

summary statistics based on the type or area of a listing. In particular, the table reports different

normalized zip code crime levels for listings in these categories. We proceed with a similar empirical

methodology as in (Equation 1), but with different subsamples.

We begin by analyzing four groups of zip codes separately (high-income, low-income, white, and

minority). Table 4 shows that VSR have negative effects on occupancy rates across all four subsamples.

The negative effects of having any VSR on occupancy rates have higher magnitudes in high-income and

white zip codes (1.76% and 1.89%) than in low-income and minority zip codes (1.72% and 1.75%). A

similar comparison holds for LSR across income groups (2.63% vs. 2.47%), but not across white and

minority zip codes (2.51% vs. 2.65%). One potential explanation is that guests may have different prior beliefs

and different sensitivities to safety issues, and perhaps more so if their search targets a specific area that

they believe is relatively safe. Hosts in different areas may also react differently to VSR and LSR, based

on how they gauge guest perception and guest preferences.
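
To gauge how large the cross-group differences in the VSR coefficients are relative to their sampling error, one can compute a simple z-statistic for the difference between two subsample estimates. The sketch below is a back-of-the-envelope illustration, not a test reported in the paper: it assumes the two estimates are independent (the subsamples cover disjoint zip codes) and approximately normal, and the function name `coef_difference_z` is hypothetical. Coefficients and standard errors are copied from Columns 1 to 4 of Table 4.

```python
import math
from typing import Tuple


def coef_difference_z(
    b1: float, se1: float, b2: float, se2: float
) -> Tuple[float, float]:
    """z-statistic and two-sided normal p-value for H0: b1 == b2.

    Assumes the two estimates are independent (an assumption of this sketch).
    """
    z = (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# VSR coefficients and standard errors on log occupancy rate, copied from Table 4.
VSR_OCC = {
    "H": (-0.0176, 0.00257),
    "L": (-0.0172, 0.00168),
    "W": (-0.0189, 0.00215),
    "M": (-0.0175, 0.00185),
}

z_income, p_income = coef_difference_z(*VSR_OCC["H"], *VSR_OCC["L"])
z_race, p_race = coef_difference_z(*VSR_OCC["W"], *VSR_OCC["M"])
```

Under these assumptions both z-statistics are well below 1.96 in absolute value, so the ordering of VSR magnitudes across area types is best read as suggestive.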

We next consider subsamples comprising different listing types (entire home, private room, shared

room, and hotel room). Additional heterogeneous effects may arise here because, for instance, for guests

who seek partial spaces (private room, shared space) within a dwelling, safety issues may be more salient.

The results in Appendix Table A4 indeed show that the magnitude of the negative effects from having

any VSR and LSR on occupancy are larger for private rooms and shared spaces (2.10% and 3.01% for

VSR and 3.08% and 2.89% for LSR, respectively) in comparison with entire-home listings (1.61% for

VSR and 2.36% for LSR).

³³ To be clear, the same listing may be in both subsamples over time, but belong to only one of the subsamples in any

given month.

³⁴ Host responses to safety reviews are not observed in our data.


                            (1)             (2)             (3)             (4)             (5)             (6)             (7)             (8)
SAMPLE                      H               L               W               M               H               L               W               M
MODEL                       OLS             OLS             OLS             OLS             OLS             OLS             OLS             OLS

VARIABLES                   log             log             log             log             log adr         log adr         log adr         log adr
                            occupancy rate  occupancy rate  occupancy rate  occupancy rate

lag VSR cumu dummy          -0.0176***      -0.0172***      -0.0189***      -0.0175***      -0.0163***      -0.0138***      -0.0153***      -0.0136***
                            (0.00257)       (0.00168)       (0.00215)       (0.00185)       (0.00389)       (0.00267)       (0.00330)       (0.00295)
lag LSR cumu dummy          -0.0263***      -0.0247***      -0.0251***      -0.0265***      -0.0181***      -0.0123***      -0.0177***      -0.0114***
                            (0.00196)       (0.00187)       (0.00178)       (0.00207)       (0.00284)       (0.00307)       (0.00269)       (0.00335)
lag VS listing radius pct   -0.0117***      -0.00449        -0.00780**      -0.00942***     -0.00261        -0.0126**       -0.00308        -0.0122**
                            (0.00370)       (0.00346)       (0.00385)       (0.00335)       (0.00564)       (0.00535)       (0.00589)       (0.00516)
lag log crime cumu norm     0.0512***       0.171***        0.0427***       0.170***        -0.0496***      -0.0561***      -0.0478***      -0.0625**
                            (0.0111)        (0.0137)        (0.00950)       (0.0168)        (0.0179)        (0.0213)        (0.0150)        (0.0265)

Observations                1,484,474       1,381,764       1,716,774       1,149,464       1,484,474       1,381,764       1,716,774       1,149,464
R-squared                   0.552           0.569           0.551           0.573           0.921           0.924           0.919           0.925

Note: *** p < 0.01, ** p < 0.05, * p < 0.1. All regressions control Time*City FE and Property ID FE, with standard errors

clustered by Property ID.

Table 4: Reduced-form Listing-level Analysis of Airbnb Listings By Four Area Types

5.2 Guest-Level Analysis

We conduct guest-level analyses to test whether guests who leave any VSR (henceforth, VS guests) act

differently before and after they post their first VSR in comparison to otherwise similar guests who did

not leave any VSR. This aims to capture the cross-listing-within-buyer effect of VSR. To that end, we

assume that the first VSR that a VS guest posts for one of the listings in our sample (i.e., covering Airbnb

listings in the five cities we consider, with reviews beginning in May 2015) is the first VSR that this guest

posted. To reiterate, any such guests who have ever posted VSR in our sample are considered VS guests;

otherwise, they are treated as “normal” users. To ensure that the VS users have had some experience on

Airbnb prior to leaving their first VSR, we focus on the subset of VS users that left at least two reviews

in the five sample cities before leaving their first VSR.

In order to match VS users with normal users, we use a K-nearest neighbor (KNN) method to select

the two most similar control (normal) users for each treatment (VS) user. The user characteristics used

in the KNN method (as of the time of the treatment user’s first VSR) are the user’s number of prior

reviews, the average normalized crime reports in the cities in which the user stayed (based on their prior

reviews), the average number of VSR for listings for which the user left reviews, the average percentage

of overall VS listings in the same zip codes as well as in the 0.3-mile radius area as listings for which the