HHPC 10061

Verify this is the correct version before use.

Information Management Platform for

Data Analytics and Aggregation (IMPALA)

System Design Document

Human Health and Performance Directorate

Human Systems Engineering and Development Division

Configuration Controlled HHPD TRB

Verify that this is the correct version before use.

August 2016

Baseline

National Aeronautics and Space Administration

Lyndon B. Johnson Space Center

Houston, Texas 77058

HHPC 10061

CONTRACTOR APPROVAL SHEET

Information Management Platform for

Data Analytics and Aggregation (IMPALA)

System Design Document

Contract Number: T73062

PREPARED BY: //original signature on file// 08/16/2016

Akinyele Akinyelu DATE

LM Senior Architect

APPROVED: //original signature on file// 08/17/2016

Alan Ruter DATE

LM Project Manager

HHPC

APPROVED: //original signature on file// 08/22/2016

Ram Pisipati DATE

Project Integrator

HHPC

APPROVED: //original signature on file// 08/23/2016

Keith Kreutzberg DATE

Wyle Technical Representative

HHPC

NATIONAL AERONAUTICS AND SPACE ADMINISTRATION

Lyndon B. Johnson Space Center

Houston, Texas

HHPC 10061

NASA APPROVAL SHEET

Information Management Platform for

Data Analytics and Aggregation (IMPALA)

System Design Document

APPROVED: //original signature on file// 08/30/2016

Andrew Carnell DATE

Enterprise Architect/SF5

NASA

APPROVED: //original signature on file// 08/30/2016

Kathy Johnson-Throop DATE

Information Systems Architecture Branch Chief/SF5

NASA

NATIONAL AERONAUTICS AND SPACE ADMINISTRATION

Lyndon B. Johnson Space Center

Houston, Texas

HHPC 10061

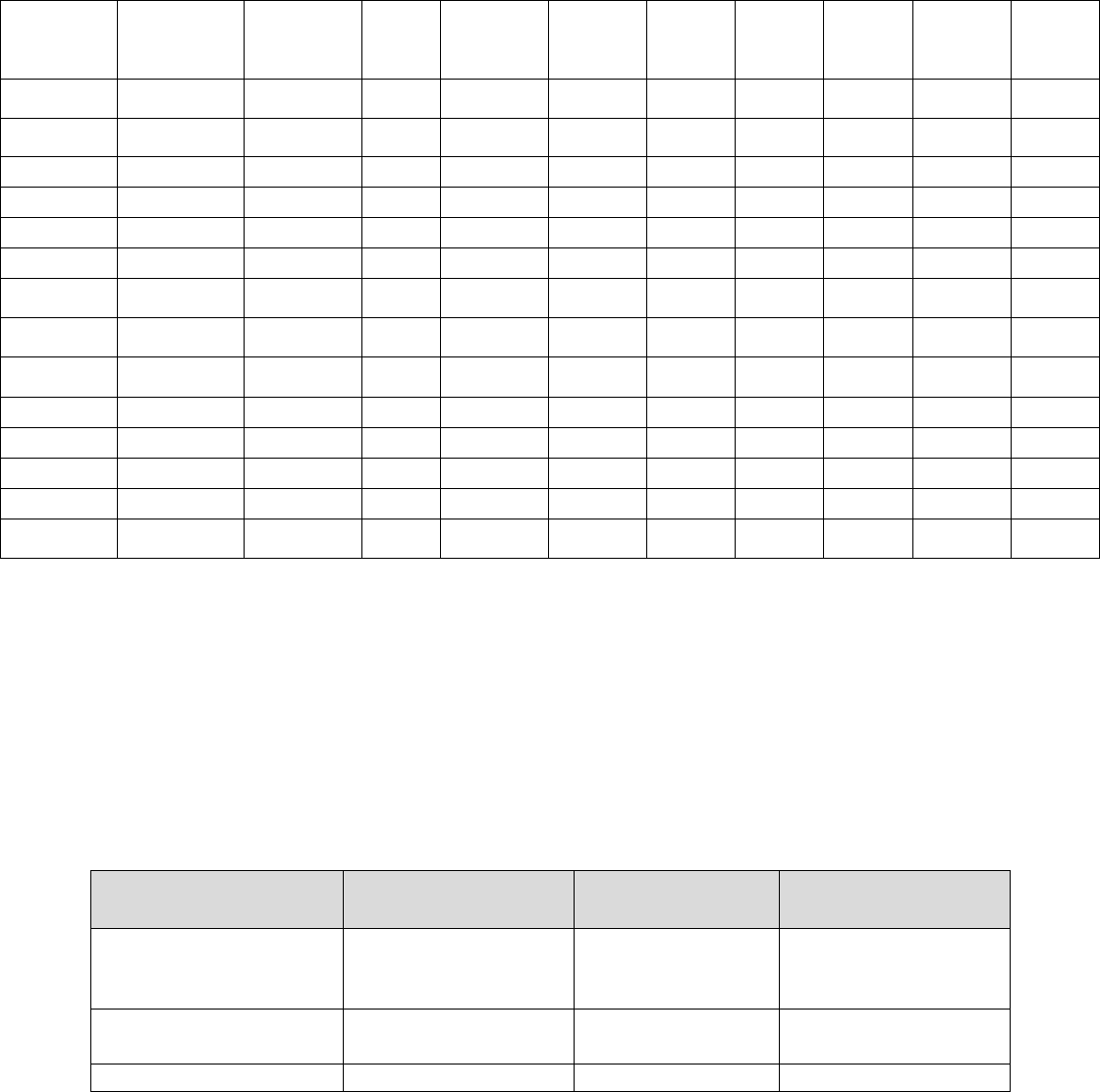

CHANGE HISTORY

REVISION/

CHANGE

DATE AUTHORIZATION DESCRIPTION OF CHANGE

— 8/30/2016 IMPALA TRB Baseline Release

HHPC 10061

i

Verify that this is the correct version before use.

TABLE OF CONTENTS

1.0 INTRODUCTION ...................................................................................................................................... 1-1

1.1 PURPOSE OF THE SYSTEM DESIGN DOCUMENT ............................................................................................. 1-1

2.0 GENERAL OVERVIEW AND DESIGN GUIDELINES/APPROACH ................................................................... 2-1

2.1 GENERAL OVERVIEW ................................................................................................................................... 2-1

2.2 ASSUMPTIONS/CONSTRAINTS/RISKS ............................................................................................................ 2-2

2.2.1 Assumptions ......................................................................................................................................... 2-2

2.2.2 Constraints ........................................................................................................................................... 2-3

3.0 DESIGN CONSIDERATIONS ...................................................................................................................... 3-1

3.1 GOALS ....................................................................................................................................................... 3-1

3.2 DEPENDENCIES ........................................................................................................................................... 3-1

4.0 OVERALL SYSTEM ARCHITECTURE ........................................................................................................... 4-1

4.1 LOGICAL ARCHITECTURE.............................................................................................................................. 4-2

4.1.1 Capture ................................................................................................................................................ 4-3

4.1.2 Transport.............................................................................................................................................. 4-4

4.1.3 Refine ................................................................................................................................................... 4-4

4.1.4 Store ..................................................................................................................................................... 4-5

4.1.5 Analyze ................................................................................................................................................. 4-5

4.1.6 Distribute ............................................................................................................................................. 4-6

4.1.7 Manage ................................................................................................................................................ 4-6

4.2 FUNCTIONAL ARCHITECTURE ....................................................................................................................... 4-7

4.3 INFRASTRUCTURE ARCHITECTURE .............................................................................................................. 4-10

4.4 NETWORK ARCHITECTURE ......................................................................................................................... 4-12

4.5 SECURITY ARCHITECTURE .......................................................................................................................... 4-14

5.0 DATA GOVERNANCE FRAMEWORK ......................................................................................................... 5-1

6.0 OPERATIONAL SCENARIOS...................................................................................................................... 6-1

6.1 END USER USAGE SCENARIOS ...................................................................................................................... 6-1

6.1.1 Data Ingestion...................................................................................................................................... 6-1

6.1.2 Profiling and Cataloging Data.............................................................................................................. 6-3

6.1.3 Manually Edit Data .............................................................................................................................. 6-4

6.1.4 Create, Manage and Share Data sets .................................................................................................. 6-7

6.2 ADMINISTRATOR USAGE SCENARIOS ............................................................................................................ 6-7

6.2.1 User Provisioning ................................................................................................................................. 6-7

6.2.2 System Monitoring ............................................................................................................................... 6-8

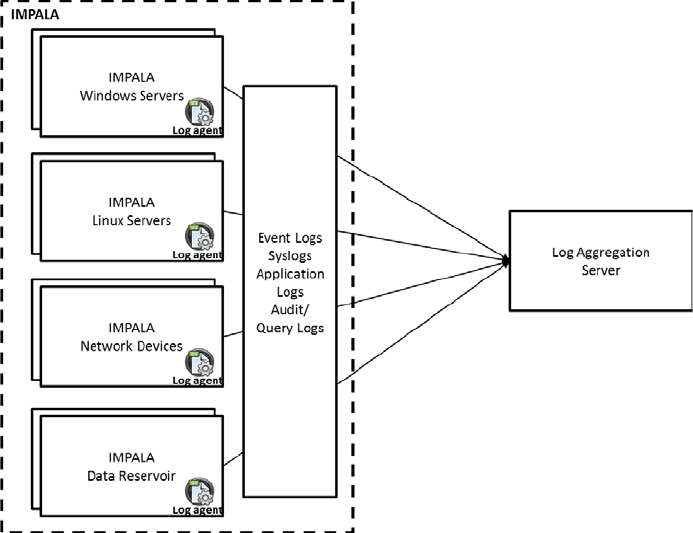

6.2.2.1 Log Aggregation ........................................................................................................................................... 6-8

6.2.2.2 Health and Availability Monitoring .............................................................................................................. 6-9

6.2.3 System Patching and Updates ........................................................................................................... 6-10

6.2.4 Backup & Recovery ............................................................................................................................ 6-10

6.2.4.1 Data Backup Schedule ................................................................................................................................ 6-10

6.2.4.2 Virtual Machine (VM) Backup .................................................................................................................... 6-12

7.0 DETAILED DESIGN ................................................................................................................................... 7-1

7.1 INFRASTRUCTURE DETAILED DESIGN ............................................................................................................ 7-1

7.1.1 Application Nodes ................................................................................................................................ 7-2

7.1.2 Enterprise Data Hub ............................................................................................................................. 7-4

7.1.2.1 Master Nodes ............................................................................................................................................... 7-5

HHPC 10061

ii

Verify that this is the correct version before use.

TABLE OF CONTENTS (Cont'd)

7.1.2.2 Data Nodes ................................................................................................................................................... 7-6

7.1.3 Storage Node ....................................................................................................................................... 7-7

7.1.4 System Software Specifications ........................................................................................................... 7-8

7.1.5 Power consumption ............................................................................................................................. 7-8

7.2 SECURITY DETAILED DESIGN ........................................................................................................................ 7-9

7.2.1 Perimeter Security .............................................................................................................................. 7-11

7.2.2 IMPALA Authentication Module ........................................................................................................ 7-11

7.2.3 IMPALA RBAC Module ........................................................................................................................ 7-14

7.2.4 IMPALA Encryption Module ............................................................................................................... 7-19

7.2.5 IMPALA Auditing ................................................................................................................................ 7-21

7.2.5.1 Navigator Audit .......................................................................................................................................... 7-21

7.2.5.2 Navigator Lineage ...................................................................................................................................... 7-22

7.2.5.3 Waterline ................................................................................................................................................... 7-22

7.2.5.4 Server Security Auditing ............................................................................................................................. 7-22

7.2.5.5 Anti-Virus Monitoring ................................................................................................................................ 7-22

8.0 INTERFACES ............................................................................................................................................ 8-1

8.1 INBOUND INTERFACES ................................................................................................................................. 8-1

8.2 OUTBOUND INTERFACES ............................................................................................................................. 8-1

APPENDIX A ACRONYMS AND ABBREVIATIONS ............................................................................................. A-1

LIST OF TABLES

TABLE 4.1-1 MAPPING OVERVIEW: USER ROLES TO IMPALA PLATFORM LAYERS ...................................................... 4-3

TABLE 4.5-1 SECURITY FUNCTION TO TOOL MAPPING ......................................................................................... 4-17

TABLE 6.1-1 SAMPLE USAGE SCENARIOS MAPPED TO IMPALA ROLES AND LAYERS ................................................... 6-1

TABLE 7.1.1-1 IMPALA VIRTUAL INFRASTRUCTURE SOFTWARE AND FUNCTIONS ....................................................... 7-2

TABLE 7.1.1-2 IMPALA APPLICATIONS AND FUNCTIONS .......................................................................................... 7-2

TABLE 7.1.1-3 IMPALA APPLICATION NODE CONFIGURATION .................................................................................. 7-4

TABLE 7.1.2.1-1 IMPALA MASTER NODE CONFIGURATION ........................................................................................ 7-5

TABLE 7.1.2.2-1 IMPALA DATA NODE CONFIGURATION ............................................................................................ 7-7

TABLE 7.1.3-1 IMPALA STORAGE NODE CONFIGURATION ...................................................................................... 7-7

TABLE 7.1.4-1 SERVER-SIDE OPERATING SYSTEMS AND COUNT ................................................................................. 7-8

TABLE 7.1.5-1 POWER CONSUMPTION ................................................................................................................... 7-8

TABLE 7.1.5-2 POWER CONSUMPTION (CONTINUED) .............................................................................................. 7-9

TABLE 7.2-1 PROTECTION POINT TO IMPALA MODULE MAPPING ......................................................................... 7-9

TABLE 7.2.2-1 IMPALA AUTHENTICATION SECURITY MODULE TERMINOLOGIES ...................................................... 7-13

TABLE 7.2.3-1 IMPALA COMPONENT ROLES AND PRIVILEGES ................................................................................ 7-14

TABLE 7.2.3-2 IMPALA SENTRY COMPONENTS AND FUNCTIONS ............................................................................ 7-17

TABLE 7.2.3-3 RECORD SERVICE COMPONENTS AND FUNCTIONS .......................................................................... 7-18

TABLE 7.2.4-1 IMPALA ENCRYPTION COMPONENTS AND FUNCTIONS ..................................................................... 7-20

HHPC 10061

iii

Verify that this is the correct version before use.

LIST OF FIGURES

FIGURE 4.1-1 IMPALA KEY ENABLERS AND PLATFORM LOGICAL ARCHITECTURE ................................................... 4-2

FIGURE 4.2-1 LAYERED FUNCTIONAL VIEW OF IMPALA PLATFORM ..................................................................... 4-7

FIGURE 4.3-1 OVERVIEW OF INFRASTRUCTURE ARCHITECTURE ......................................................................... 4-10

FIGURE 4.4-1 NETWORK ARCHITECTURE OVERVIEW ......................................................................................... 4-13

FIGURE 4.5-1 IMPALA SECURITY OVERVIEW .................................................................................................... 4-15

FIGURE 4.5-2 USER AUTHENTICATION PROCESS OVERVIEW ............................................................................... 4-16

FIGURE 6.1.1-1 DATA INGESTION FLOW ................................................................................................................ 6-2

FIGURE 6.1.2-1 DATA CATALOGING FLOW ............................................................................................................ 6-3

FIGURE 6.1.3-1 PERSISTENT MANUAL EDIT OF GENERATED DATA SET .................................................................... 6-5

FIGURE 6.1.3-2 IN-PLACE ROW/CELL MANUAL EDITS OF GENERATED DATASET ........................................................ 6-6

FIGURE 6.2.1-1 IMPALA USER PROVISIONING PROCESS ......................................................................................... 6-8

FIGURE 6.2.2.1-1 IMPALA LOG AGGREGATION PROCESS .......................................................................................... 6-9

FIGURE 6.2.4.1-1 IMPALA BACKUP SCHEDULE – SHORT TERM ................................................................................ 6-11

FIGURE 7.1-1 IMPALA PLATFORM AND HARDWARE POWER DETAILS .................................................................. 7-1

FIGURE 7.1.2-1 ENTERPRISE DATA HUB SERVICE LAYOUT ....................................................................................... 7-5

FIGURE 7.2-1 IMPALA SECURITY OVERVIEW .................................................................................................... 7-10

FIGURE 7.2.1-1 PERIMETER SECURITY ................................................................................................................. 7-11

FIGURE 7.2.2-1 IMPALA AUTHENTICATION FLOW DIAGRAM ................................................................................ 7-12

FIGURE 7.2.3-1 IMPALA AUTHORIZATION FLOW DIAGRAM ................................................................................. 7-16

FIGURE 7.2.4-1 IMPALA ENCRYPTION FLOW DIAGRAM ....................................................................................... 7-19

FIGURE 7.2.4-2 INTEGRATION BETWEEN THE IMPALA DATA RESERVOIR AND

THE IMPALA ENCRYPTION COMPONENTS .................................................................................... 7-21

HHPC 10061

1-1

Verify that this is the correct version before use.

1.0 INTRODUCTION

The System Design Document (SDD) is a compendium of three documents, providing a single source for

requirements, system design, and data design. The functional and non-functional requirements are

drawn from the Information Management Platform for Data Analytics and Aggregation (IMPALA)

System Requirements document. The three elements of requirements, user design, and data design

form the baseline from which to build a set of more technical system design specifications for the final

product, providing both high-level system design and low-level detailed design.

NOTE: For the remainder of this document, the Information Management Platform for Data

Analytics and Aggregation (IMPALA) is referred to as the IMPALA Platform.

The SDD delineates design goals and considerations, provides a high-level overview of the system

architecture, and describes the system data design, the human-machine interfaces, and operational

scenarios. The high-level system design is decomposed into low-level detailed design specifications for

each system component, including hardware, internal communications, software, system integrity

controls, and interfaces.

1.1 PURPOSE OF THE SYSTEM DESIGN DOCUMENT

The System Design document tracks the design activities that are performed to guide the integration,

installation, verification, and acceptance testing of the IMPALA Platform. The inputs to the design

document are derived from the activities recorded in Tasks 1 through 6 of the Statement of Work

(SOW), with the proposed technical solution being the completion of Phase 1-A. With the

documentation of the architecture of the IMPALA Platform and the installation steps taken, the SDD will

be a living document, capturing the details about capability enhancements and system improvements

to the IMPALA Platform to provide users in development of accurate and precise analytical models. The

IMPALA Platform infrastructure team, data architecture team, system integration team, security

management team, project manager, NASA data scientists and users are the intended audience of this

document.

The IMPALA Platform is an assembly of commercial-off-the-shelf (COTS) products installed on an

Apache-Hadoop platform. User interface details for the COTS products will be sourced from the COTS

tools vendor documentation. The SDD is a focused explanation of the inputs, design steps, and

projected outcomes of every design activity for the IMPALA Platform through installation and

validation.

HHPC 10061

2-1

Verify that this is the correct version before use.

2.0 GENERAL OVERVIEW AND DESIGN GUIDELINES/APPROACH

This section describes the principles and strategies used as guidelines in the design of and

implementation of the IMPALA Platform.

2.1 GENERAL OVERVIEW

Wyle Science, Technology, and Engineering Group (Wyle) is the prime contractor of NASA’s Human

Health and Performance Contract (HHPC), providing engineering, clinical, occupational health

surveillance, and flight hardware support to NASA’s Human Health and Performance Directorate

(HH&P). To meet its mission, HH&P collects, analyzes, and generates reports from a plethora of data in

support of crewmember occupational health surveillance and epidemiologic investigation activities,

laboratory testing, crew safety evaluations, scenario modeling, intelligence and evidence gathering,

environmental assessments, and medical countermeasures deployment.

While Wyle is currently able to meet our NASA customer’s data integration needs to meet mission

objectives with the current system and processes, NASA faces a number of process and technical

challenges.

• Inefficient, manual processes to gain access to internal and external data

• Legacy system challenges that prevent/limit automated integration of data from multiple

sources and a comprehensive view (and reuse) of laboratory, crew health records,

environmental and epidemiological data

• Lack of common processes, advanced analytics capability, and formats and controls needed to

effectively analyze, visualize, and share/reuse data across multiple systems and users

• Labor-intensive data request fulfillment processes that depend on manual data aggregation and

manual quality control reviews

• Limited or inefficient data visualization, management, governance and data sharing capabilities

(tools, skills and processes)

• Insufficient controls to store, process, and analyze longitudinal crewmember health data with

changes to metrics, data types, and units over time.

• Inability to apply descriptive, predictive, and prescriptive analytics tools across an array of

constantly changing data sets

• Inability to capture and retain meta-data on analysis and use of data, in principle, by any and all

users

A more effective method to manage astronaut health data and increase personnel productivity must be

developed. To meet the above challenges and needs, Wyle seeks to implement a data analytics

platform that will electronically integrate, manage, analyze, visualize, report on and create/share

datasets of surveillance, epidemiologic, laboratory, environmental and other data in an efficient, cost-

effective, and timely manner.

The envisioned IMPALA Platform will enable Wyle to standardize a core set of processes, metrics, and

capabilities across multiple monitoring and surveillance activities, human space flight-related research

HHPC 10061

2-2

Verify that this is the correct version before use.

programs, and data requests in a more efficient and consistent manner, as well as integrate new and

existing data types and unstructured data.

The goal of this project is to plan, design, build, test, and deploy an extensible, flexible, and modular

data integration, management, collaboration, analysis, and visualization platform in support of NASA

HH&P’s occupational health monitoring and surveillance activities, and human space flight-related

research activities.

2.2 ASSUMPTIONS/CONSTRAINTS/RISKS

2.2.1 Assumptions

The IMPALA Platform infrastructure is built on both hardware and software technologies that are

influenced by industry standards. Some of these standards are in a state of evolution. To promote the

portability of software applications and to reduce overall infrastructure costs, industry standards will be

used to implement functions where they are deemed appropriate.

The following items are design assumptions for IMPALA’s initial implementation.

1. The MEME network team will provide cabling between the IMPALA rack and the Mission

Extended Medical Enterprise (MEME) network.

2. End users laptops and /or workstations used in accessing the IMPALA platform will be provided

by NASA JSC and not as a not part of the IMPALA platform.

3. Upstream connectivity to the MEME network will provided by the MEME network team.

4. The MEME network and security team will provide security and system health monitoring tools

to ensure the health and security posture of the platform.

5. The IMPALA platform will leverage the existing MEME network virtual center metadata store,

which uses an external SQL database.

6. Infrastructure services such as Domain Name Server (DNS), Active Directory (AD) are not part of

the IMPALA platform but made available from the Information Resource Directorate (IRD)

through the Network Access Control Board (NACB).

7. The IMPALA platform will not automatically patch or update applications or operating systems.

Patching and updating schedule is dependent on the MEME environment.

8. Routable addresses used for end user communication are supplied by the MEME network team

and not a part of the IMPALA platform design.

9. The MEME network team, to cover all required IMPALA systems, will provide non-routable

addresses for internal (intra-IMPALA systems) rack communications.

10. Power consumption for each IMPALA rack is not to exceed 5000 watts.

11. The IMPALA platform websites and/or web services will be registered in the System for Tracking

and Registering Applications and Websites (STRAW) by the HH&P IT Security team.

HHPC 10061

2-3

Verify that this is the correct version before use.

2.2.2 Constraints

1. The IMPALA platform will only be accessible through NASA networks via approved, dedicated

connections or Virtual Private Network (VPN) [e.g., the MEME- Secure Socket Layer (SSL) VPN

appliance].

2. The NASA/Wyle Technical Review Board (TRB) must approve all software and hardware

technologies and products selected by the Contractor before implementation.

3. The IMPALA Platform constraints may be influenced by NASA JSC Security center policies,

procedures and protocols.

HHPC 10061

3-1

Verify that this is the correct version before use.

3.0 DESIGN CONSIDERATIONS

3.1 GOALS

The goals of the IMPALA system are to:

• Increase accessibility to accurate and actionable data without compromising security

• Increase confidence in data through defined data governance processes and controls

• Enable users to improve the quality of data analysis, reports, recommendations, and decisions

substantiated by data stored in the IMPALA Platform

• Seamlessly ingest, integrate, and manage clinical, life sciences, epidemiological, environmental,

laboratory and astronaut's longitudinal health monitoring and surveillance data

• Provide automated processes to extract, cleanse, validate, transform and curate data in

multiple formats from a variety of sources based on analyst defined rules and controls

• Extract and store metadata and establish relationship between known entities and fields in the

source data

• Provide a highly scalable and available infrastructure to store, process and analyze data as well

as continuously refresh the data from the source data

• Facilitate stakeholder collaboration and real-time data sharing internally and externally with

trusted partners, universities and other government agencies to facilitate collateral exploratory

analyses and hypothesis testing by trusted outsiders and for more extensive peer review

• Provide a secure single point of access to the data in the platform for all approved users across

multiple end user devices (mobile, desktop, laptop and tablet) with appropriate platform-

specific security protocols

• Comply with NASA's security requirements and be certified to operate within the NASA

environment and comply with federal requirements for security and handling of medical PII

• Interoperate with existing NASA and HHPD systems

• Analyze and visualize data from multiple data sources in a single unified view including data

from external investigators and programs

• Correlate, aggregate, create and share datasets from multiple data sources for analysis by

internal and external scientists as well as reuse these datasets as sources for future data

requests

• Provide advanced analytics capabilities to perform data mining, data exploration and discovery

that retains meta-data on analyses and uses of the data by all users

• Support timely processing and provisioning of new data sources for platform users

3.2 DEPENDENCIES

Implementing the IMPALA system is dependent upon the following:

• MEME Infrastructure team will provide the tools for monitoring the health of the IMPALA

platform.

• HHPIT security team will provide the tools such as the CIS benchmarking software for hardening

the IMPALA systems.

HHPC 10061

3-2

Verify that this is the correct version before use.

• HHPIT security team will provide the security tools such as log aggregation agents, and

processes for auditing the IMPALA platform.

• MEME Infrastructure team will provide Antivirus package and license instructions for agents

installed on the IMPALA servers.

• IMPALA virtual center database will be backed up by the MEME Infrastructure team post

deployment of the platform.

• The MEME Infrastructure team will execute updates and patching of the IMPALA platform’s

Operating Systems.

• A Domain Name System (DNS) will be provided by the MEME network for identifying servers

and service.

• JSC Active Directory will be provided by the MEME for user profiles and authentication, as well

as group membership.

• Access to the Launchpad application will be provided for PIV card authentication.

• MEME Network will be provide network connectivity for access to the IMPALA platform for

connectivity and access.

• MEME Infrastructure team will generate template for user requests through NASA Access

Management System (NAMS)

• MEME will provide the peripherals needed for testing the IMPALA platform within the JSC

environment (laptops, network connection to IMPALA platform)

HHPC 10061

4-1

Verify that this is the correct version before use.

4.0 OVERALL SYSTEM ARCHITECTURE

The IMPALA platform uses an open framework designed to make adding, updating or swapping

components easy. The IMPALA platform is also designed to ensure scalability (ability to grow to meet

the analytics needs of NASA), availability (ability to ensure both data and systems are available to users)

and security (ensuring that the both data and systems are protected against attacks or unauthorized

access.

The IMPALA platform provides the following additional benefits to the end-user:

• Ease of use

• Data Cataloging

• High Speed Search

• Collaboration

• Self-service Dashboards and reports

The IMPALA platform will reside within the MEME network, which in turn resides within the Johnson

Space Center (JSC) network. The sub-sections to follow provide an overview of the IMPALA platform

design from the following views:

• Logical

• Functional

• Infrastructure

• Network

• Security

These views will be described from a user’s perspective and provide functional descriptions of the

components within the platform. Section 7 of this document will expand on these descriptions at a

more technical level.

HHPC 10061

4-2

Verify that this is the correct version before use.

4.1 LOGICAL ARCHITECTURE

Figure 4.1-1 IMPALA Key Enablers and Platform Logical Architecture

HHPC 10061

4-3

Verify that this is the correct version before use.

As depicted in Figure 4.1-1, the IMPALA Platform enables the NASA community of users to operate

within seven (7) key logical layers: Capture, Transport, Refine, Store, Analyze, Distribute, and Manage

Data, using the suite of components within the platform. It also supports the governance of data and

collaboration amongst the users. This section gives a brief description of the seven (7) logical layers, the

functions performed within the layer and the user community’s interaction with each layer. Table 4.1-1

provides a brief overview of the different user role groupings, as well as how they interact with the

layers of the IMPALA platform.

TABLE 4.1-1 MAPPING OVERVIEW: USER ROLES TO IMPALA PLATFORM LAYERS

Layers IMPALA Roles Function

Capture

Data Owners

Identify data sources to be ingested into IMPALA

Transport

Developers

Connect to multiple data sources

Build ETL workflows to ingest data into IMPALA data reservoir

at scale

Apply common rule-based transformations to data on ingest

Refine

Data Scientists, Data

Owners, Data Stewards

Profile data for anomalies and correct

Catalog the data by tagging

Address exceptions/errors identified during data ingest

Store

Administrator

Ensure the creations and proper access control for data landing

zones

Ensure health of data reservoir

Ensure accurate logging and auditing

Analyze

Data Scientists, Data

Analysts

Data set creation and management

Statistical and machine-learning analysis

Generation of dashboards and reports

Exploration of data

Searching, mining and ad-hoc querying

Distribute

Data Scientists, Data

Analysts

Publish insights

Package and publish generated data sets

Manage

Administrators,

Developers, Data

Stewards

Continuous monitoring of IMPALA infrastructure health.

Ensure that data is appropriately cataloged within the data

reservoir

4.1.1 Capture

The capture layer is focused on identifying data sources that are used in servicing the functions in the

analyze layer. This layer is primarily process driven and requires domain knowledge to identify the data

sources needed. Data that will initially be captured from existing data sources within the NASA

environment, which includes the EMR (Electronic Medical Record), the LSAH (MEDB Lifetime

HHPC 10061

4-4

Verify that this is the correct version before use.

Surveillance of Astronaut Health) and MEDB Sharepoint. Data sources in the capture layer are

technically outside the IMPALA platform. IMPALA interfaces with these data sources to ingest data.

The IMPALA platform is extensible and able to capture data from other types of data sources. The

platform also supports connectivity and capture/identification of relational data sources such as

ORACLE, Microsoft SQL Server, and Microsoft Access, as well as data located in file shares, cloud based

data stores and local files of varying formats (XML, CSV, JSON, PDF, and more).

The process of identifying these data sources is a collaborative effort between the HH&P IT and the

Domain subject matter experts (SMEs). The SMEs define the data of interest and the HH&P IT teams

identify the appropriate data source within the environment containing the requested data.

During this capture layer, multiple data sources are identified for transport (import) into the IMPALA

platform.

4.1.2 Transport

The transport layer of the IMPALA platform focuses on the movement of data from the production data

sources, present and future, to the IMPALA platform data store. Where the Capture layer focuses on

the identification of the data, the Transport layer contains the pipeline for bringing the data into the

IMPALA platform.

The IMPALA platform’s transport layer is not just limited to RDBMS tools previously listed (ORACLE,

Access, and MS SQL), it enables connection to any relational data source that supports ODBC or JDBC

connectors, connection to file shares and connection to data residing in the cloud.

The HHP&IT team will leverage the functions within this layer to build, test and deploy data capture

jobs. These data capture jobs will be scheduled to connect and load data from the data sources (EMR,

LSAH, etc.). As the list of production data sources grows beyond the initial data sources, this team will

support the design and development of the required data capture jobs. This team also manages the

interface to the production data source. The interfaces for relational data stores include ODBC and

JDBC as well as the credentials to connect to these production data stores. The IMPALA platform uses

built-in functions, such as secure copy or secure file transfer protocols, to import non-relational data

sources such as file. The data capture jobs are orchestrated using the Pentaho engine. Within this

engine, business rules are defined to apply static transformations to the data as it is transported into

the data reservoir.

4.1.3 Refine

In the IMPALA refine layer, data that is ingested through the transport layer are refined (selected,

standardized, tagged, categorized, summarized) and enriched either manually or automated. Users are

able to review ingested data and apply transformations or error cleaning logic to it. SMEs are also able

to use their knowledge to enrich the data with tags, labels and comments, as well as creating newly

derived fields. The data in this layer is transient, which mean it is not in its final state. It is also

important to note that all transformations or profiling functions applied to the data in this layer are

performed against an internal IMPALA copy of the original data (from the Transport layer), not the data

source.

In this layer the users, Developers, Data Owners, Data Stewards, Data Scientists and Data Analysts,

crowd-source (i.e collaborate) on their knowledge of the domain to create profiling jobs that enable the

HHPC 10061

4-5

Verify that this is the correct version before use.

cataloging of data going into the IMPALA store. These jobs can then be scheduled or called on demand

by components of the Transport layer.

The greatest benefit of the work done in this layer is that it allows for the improved efficacy of searches

against and analysis of data performed by users such as data scientists, and data analysts. The SME

community tags each data table and field that passes through the refine layer with common terms used

by end users as defined/refined.

4.1.4 Store

The IMPALA store layer provides the landing zone for captured, transported and refined data. This

landing zone is the distributed IMPALA data reservoir. Three (3) key principals govern the design of this

layer:

• Scalability

The IMPALA store layer is built using the Apache Hadoop platform. Apache Hadoop is an open

source software platform for distributed storage and processing of very large data sets on

server clusters built from commodity hardware. Because it is built on a cluster of servers,

scaling to match growth in data is as simple as adding more server hard drives or adding

another server. As the data analysis needs of NASA grow, the data size will grow. The IMPALA

platform store layer ensures that when this growth occurs the system can accommodate it.

• Redundancy

The distributed storage design of IMPALA’s Apache Hadoop data store layer ensures that every

block of data is replicated 3 times across the cluster of servers. This means that even with the

loss of a server or a hard drive, the system continues to function as normal. Built in

management modules, described in section 7, ensure that when a hardware failure occurs, an

administrator is notified. Once the server or hard drive is repaired/replaced, data is copied back

to the server/drive as it resumes as part of the cluster. In addition, this redundancy allows data

processing on a given segment of the data to be performed on any of its available copies,

reducing data processing bottlenecks and improving performance on large datasets.

• Performance

The Apache Hadoop platform supports distributed processing and the IMPALA platform design

leverages this feature by ensuring that all end user jobs/functions, such as searching, enriching,

cataloging and transforming data, are performed by all the nodes/servers in parallel in the

cluster.

With these three (3) key principals, the IMPALA platform is ensured a quick response time as well as the

confidence of data safety.

Another key function of the store layer is its ability to store data of all types. Relational databases or

file-based data such as pdfs, CSVs, DOCs, XMLs, JPGs, PNGs and more can be stored and coexist within

the IMPALA store layer. They can also be blended together.

With this layer, the users of the IMPALA platform gain access to one aggregated data source with a

variety of data types and they are able to process and use these datasets for analytics and search.

4.1.5 Analyze

The analyze layer leverages the processing power of the store layer and provides components that

enable searching and mining of data, ad-hoc querying at scale, collaborative data request fulfillment,

HHPC 10061

4-6

Verify that this is the correct version before use.

reports generation and data exploration. The data analysis and visualization tools provided with the

IMPALA platform work with the Apache Hadoop platform to handle the distributed processing

intrinsically, providing the end users with familiar functions for joining, searching, querying, and

analyzing their datasets. The user interfaces in this layer are all web-based and many provide drag and

drop capabilities and immediate feedback on a sample of the data.

NASA data scientists and analysts use the components of this layer to consume data, deliver insights,

manage relationships between different data sources, and create and edit data sets for fulfilling data

requests.

The users access this layer through a web-based portal after authenticating against the JSC active

directory domain. The IMPALA platform uses role based access control to ensure that a user is only

allowed to access or analyze the data for which they have been granted permission.

4.1.6 Distribute

Data sets created in the analyze layer may distributed or published to other users using the components

within this layer. Internal JSC users can authenticate into the IMPALA platform and view or download

generated data sets, analytic results, or visualizations.

4.1.7 Manage

In this layer, the Data Stewards and Developers develop scripts for data loading and transformation,

cataloging of data, and tagging data based on domain knowledge. These scripts are tested and then

packaged for use in the capture, transport and refine layers. The packaged scripts are accessible as web

services or through command line interfaces.

Administrators use the components of this layer to manage access and privacy of data.

HHPC 10061

4-7

Verify that this is the correct version before use.

4.2 FUNCTIONAL ARCHITECTURE

Figure 4.2-1 Layered Functional View of IMPALA platform

HHPC 10061

4-8

Verify that this is the correct version before use.

This section describes the functional purpose of each layer mentioned in the Logical Architecture

(section 4.1). It also briefly describes how the users will gain access to and interact with the platform.

The IMPALA platform will leverage the existing Johnson Space Center (JSC) NASA Access Management

System (NAMS) and process to provision users (i.e., Users will request access to IMPALA through

NAMS). An IMPALA review board reviews user access requests for approval. The IMPALA administrator

creates the user profiles and provisions the appropriate role within the IMPALA system after the NAMS

request is approved.

Based on the current MEME infrastructure, users with appropriate credentials interact with the IMPALA

platform by connecting to the JSC network from an onsite workstation or through the JSC VPN and

authenticating into the IMPALA platform.

The IMPALA portal is the web-based graphical user interface (GUI) used for accessing all the

components described in the Logical Architecture view. Access to this interface is through a web

browser on the user’s desktop workstation.

Authenticated and approved users are able to create information dashboards and visualizations,

connect to multiple data sources, define and generate a data catalog, search for data and wrangle (or

clean) data pre or post search. Each component behind the IMPALA portal is web-based and provides

drag and drop or point and click capabilities for ease of use.

The transport functional layer enables the hydration of data into the data reservoir from initial data

sources identified such as the EMR (Electronic Medical Record) or the LSAH (Lifetime Surveillance of

Astronaut Health), which reside on two (2) different relational databases (RDBMS) – Oracle and

Microsoft SQL Server (MS SQL) in the capture process. Data is processed, cleaned, and profiled during

import for the purpose of search, analytics or reporting.

Developers create Extract, Transform and Load (ETL) jobs in the transport layer using Pentaho Data

Integration tools to move/copy data. These jobs, once validated, are scheduled to ensure the data

reservoir contains fresh data. The transport layer also functions as an orchestration engine by allowing

external processes such as transformation scripts built in the refine layer, using Trifacta, to be called

outside of the standard copy of data.

The refine functional layer provides components such as Trifacta and Pentaho to create and apply rule-

based data transformations before load. Transformations created and packaged in Trifacta for

repeatable cleansing of data are referred to as static transformations. These static transformations are

provided as steps in the transport layer mentioned above.

The analyze functional layer described in section 4.1.5 consists of the components that:

• MASH reports, Dashboard, Data Catalog, Search and Data Wrangling

• enable the creation of repeatable workflows that pull data from the data reservoir,

• establish collaboration workspaces for different risk specializations,

• connect to and pull data from other data sources to enrich searches,

HHPC 10061

4-9

Verify that this is the correct version before use.

• create dashboards for reporting and informing flight surgeons and finally for creating datasets

that fulfill request for data.

The functionality within the analyze functional layer allows for the creation of MASH reports,

Dashboard, Data Cataloging, Searching and Data Wrangling as depicted in figure 4.2-1

Behind these components is the Distributed Data Reservoir (in the store layer). This component

supports the authentication and authorization within the IMPALA platform data reservoir. Each of the

services in the other layers must authenticate against the data reservoir in order to perform any

functions with the data reservoir. This authentication is controlled using Kerberos. Authorization at the

data level is accomplished via role-based access control (RBAC) using Sentry software. This layer also

enables the encryption of data at rest for privacy. Details on the authentication and encryption are

discussed section 7 of this of this document.

The IMPALA NAS (Network Attached Storage) will be used to backup the data reservoir. This process is

described in section 6.2.4.

The IMPALA platform leverages existing infrastructure management functionality within the MEME

network by providing system SNMP (Simple Network Management Protocol) traps to existing health

management tools such as SolarWinds. The MEME environment currently uses an agent-based log

aggregation tool for security auditing. To ensure compliance, these agents are installed on the MEME

servers for security auditing purposes. The MEME environment currently uses an agent-based tool, Dell

KACE, for inventory and system management. The servers within the IMPALA platform leverage the

Computer Information Security benchmark to ensure appropriate hardening of the servers.

The IMPALA platform provides an issue-tracking interface, using JIRA, that enables HHPIT and IMPALA

administrators to post issues within the platform. These issues are tracked through to resolution using

the IMPALA issue-tracking component (JIRA). JIRA also enables the tracking of updates to the IMPALA

platform by entering and tracking update requests for the new releases of functionality.

These layers work together to allow users of the IMPALA platform to work collaboratively to meet the

goals defined in section 3.1.

HHPC 10061

4-10

Verify that this is the correct version before use.

4.3 INFRASTRUCTURE ARCHITECTURE

Figure 4.3-1 Overview of Infrastructure Architecture

HHPC 10061

4-11

Verify that this is the correct version before use.

The IMPALA Platform is a self-contained rack located in the building 46 Data Center in room 300. It is

interconnected electronically with the MEME environment behind the MEME firewall. The sub-

components of the IMPALA platform are deployed as a combination of virtual machines and physical

servers. The user facing components are deployed as virtual machines across three physical hosts,

called application nodes. The IMPALA distributed data reservoir is deployed across six (6) physical

servers (2 Master Nodes and 4 Data Nodes). Communication between the application nodes and the

data nodes within the rack is through services on the Master nodes over a 10Gbe network.

The IMPALA rack contains four switches, two (2) 10Gbe switches in an HA configuration for intra-rack

communication and two (2) 1Gbe switches in an High Availability (HA) configuration for

user/management communication. The user/management switch connects to the MEME firewall for

user and management access. The IMPALA rack also contains an iSCSI Storage device used as a central

store for VMs and for backup of data within the reservoir. The backup strategy is outlined in the

Operational Scenario section.

Data from data sources such as the Electronic Medical Record (EMR), the Lifetime Surveillance of

Astronaut Health (LSAH) and Medical Evaluation Document part B (MEDB), as well as future data

sources, will be ingested into the IMPALA data nodes. Layout and landing zone information as well as

the governance processes for data loads are in the Data Governance Framework document.

As stated in section 4.2, the IMPALA Platform leverages the following existing processes and tools

within the MEME environment for health management and security posture:

• Configured SNMP traps for monitoring IMPALA Platform server components such as CPU, disk,

memory etc., as well as availability or uptime of the servers forwarded to the infrastructure

management tool provided by the MEME infrastructure team.

• Anti-virus agents, as provided by the MEME infrastructure team, are deployed on IMPALA

servers

• Server hardening leveraging the Center for Information Security (CIS) benchmarking

requirements as provided by the NASA IT security team

• Deployment of log aggregation agents as provided by the HHPIT security team for auditing

Patches to the operating system and upgrades of the individual components of the IMPALA platform

will adhere to the policies set forth by the NASA IT security team. Section 6.2.3 details the process for

patching the IMPALA platform.

The IMPALA Platform physical architecture supports high performance through the following means:

• Clustering

The data nodes are clustered enabling sharing of the workload across commodity hardware.

The Application server hosts are also clustered to ensure that the loss of one application server

does not lead to a drop in unavailability of any of the applications

• RAID Configuration

The OS hard disks for the servers within the IMPALA Platforms are in RAID-1 configuration to

ensure continued operations in the event of a hard disk failure. The storage device is

configured for RAID-6

HHPC 10061

4-12

Verify that this is the correct version before use.

• Distributed Storage

Each block of data is triple replicated across multiple disks and servers to ensure that the loss of

one drive or server does not lead to data loss

• Distributed Processors

The IMPALA Platform leverages the processors/cores across all the servers within the cluster to

ensure requests such as searching, data mining or analytics are processed fast and in a parallel

fashion

• Network Throughput

A 10Gbe intra-rack backbone ensures high speed data transfer between the master nodes, data

nodes and application nodes.

The local iSCSI storage device within the IMPALA rack serves the following purposes:

• VMDK (Virtual Machine Disk) Storage

Each application host (also known as ESXi servers) will host the virtual machines on which each

component (or application) runs. Each virtual machine (VM) will have its disk located on the

iSCSI storage devices. This design enables high availability within IMPALA’s virtual

infrastructure.

• Backup

Section 6.2.4 describes the backup process, schedule and methodology within the IMPALA

platform. The following general items will be backed up to the storage device by default:

o Application configuration files

o Application metadata information such as project files or metadata database generated

as a result of usages

4.4 NETWORK ARCHITECTURE

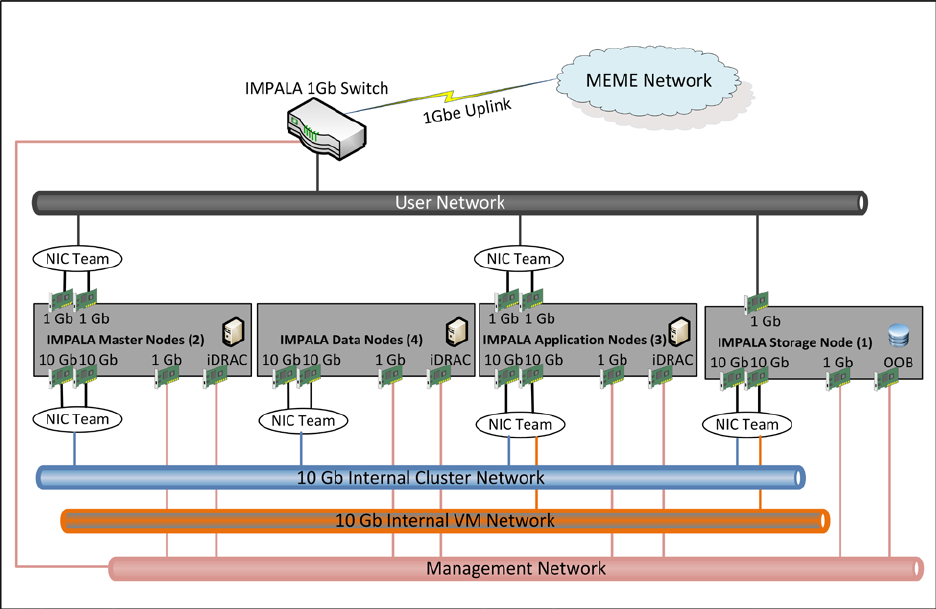

The IMPALA platform operates on four main networks:

• User Network

A 1Gbe network dedicated to end user communications. Two (2) IMPALA Top-of-Rack (TOR)

switch configured with HA support this network.

• Internal Cluster Network

A 10Gbe network dedicated to communications between the applications and services within

the IMPALA platform. Two 48 port 10Gbe switches support this communication. These

switches are in a High Availability (HA) configuration

1. Internal Virtual Machine (VM) Network

A 10Gbe network dedicated to communications between the virtual machines and storage unit

within the IMPALA platform.

• Management Network

A 1Gbe network dedicated to management of platform devices and monitoring the health of

the IMPALA platform. A 1Gbe network dedicated to out-of-band (OOB) management of the

servers within the IMPALA platform.

HHPC 10061

4-13

Verify that this is the correct version before use.

The connections to this network and how the IMPALA platform is connected to the MEME network is

depicted in Figure 4.4-1.

Figure 4.4-1 Network Architecture Overview

Users (Data Analysts, Data Scientist, Researchers, etc.,) interact with the IMPALA platform by

connecting to the JSC network from an onsite workstation or through the JSC VPN. Each server within

the rack is fitted with multiple Network Interface Cards (NICs), as depicted in figure 4.4-1.

The two-10Gbe NICs are configured in a NIC Team using NICS Teaming – a process of combining NICs

together for performance. These two NICs support communications on the 10Gbe Internal Cluster

Network. The Internal Cluster Network is used for communication between the applications located on

the virtual machines (VM) and the data reservoir nodes (master and data nodes). It is also used for

communication between the different components of the IMPALA data reservoir, for example,

communication between the resource manager and the data nodes.

The storage device and the application hosts (VM hosts) are also configured a 10Gbe NIC each. These

enable communication over the internal VM Network.

For communication on the User Network, each server is configured with two-1Gbe NICs also configured

in a NIC team for performance and high availability.

For communication over the Management Network, each server is configured with two-1Gbe NIC. The

first is for monitoring the IMPALA platform via management tools, as well as for patching and other

HHPC 10061

4-14

Verify that this is the correct version before use.

administrative functions that affect the OS of the servers. The second NIC is for Out-Of-Band (OOB)

communication using the iDRAC (integrated Dell Remote Access Controller). The iDRAC provides

functionality that helps in deploying, updating, monitoring and maintaining the servers with or without

a system management software agent.

4.5 SECURITY ARCHITECTURE

Security within the IMPALA platform is designed to protect the data via five (5) discrete layers:

Perimeter Security, Authentication, Authorization, Encryption and Policy.

HHPC 10061

4-15

Verify that this is the correct version before use.

Figure 4.5-1 IMPALA Security Overview

HHPC 10061

4-16

Verify that this is the correct version before use.

Perimeter security refers to the network controls that protect network access to the IMPALA platform.

The IMPALA platform is within the MEME environment, which is guarded by the MEME Firewall. For

any user to gain access to the MEME environment, they must first establish a secure Virtual Private

Network (VPN) connection.

Both users that access the IMPALA platform and services components that operate within the IMPALA

platform must authenticate to gain access to or perform any operation against the data. Users request

access to the IMPALA platform through the NAMS system. The MEME administrator based on defined

policies established in the data governance guide fulfills these requests. Users authenticate through the

NASA LaunchPad system (see Figure 4.5-2 below).

Figure 4.5-2 User Authentication process overview

Service accounts are created within the IMPALA platform for each component to communicate with the

data reservoir. These service accounts are created in Kerberos Key Distribution Center (KDC) so each

service has its own unique credentials (as keytabs) to access the data in the data reservoir and to

execute tasks against that data. This process is described in detail in section 7.

For authorization, the IMPALA system leverages a role-based access control system at the data and

component layers of the platform. It also leverages groups defined in active directory via a one way

trust between the NDC active directory and the Kerberos KDC. Sentry, described in more detail in

section 7, is used to assign privileges based on roles to each data entity within the IMPALA platform.

All data in the data reservoir is encrypted using AES-256 encryption. This ensures that malicious access

to the data does not lead to release of PII information.

Per the JSC/HHPIT security guidelines, the server OS’s are hardened using the Center for Internet

Security (CIS) guidelines to ensure a proper lockdown of the system. Antivirus and monitoring agents

are installed on all servers within the IMPALA platform. Logs for systems and application are configured

to be captured using a log aggregation tool specified by the HHPIT security team.

The table below shows a breakdown of each security function and which tool or component supports it.

HHPC 10061

4-17

Verify that this is the correct version before use.

TABLE 4.5-1 SECURITY FUNCTION TO TOOL MAPPING

Security Function

Provided by

Native/Leveraged

Perimeter Security

MEME Firewall/VPN

Leveraged

Access Control

Sentry

Native

Access Requests

NAMS

Leveraged

System Monitoring

SolarWinds

Leveraged

Security Audit Logging

Splunk

Leveraged

Application/System Audit Logging

Splunk

Leveraged

OS Hardening

CIS Benchmark

Native & Leveraged

Encryption of data at rest

Navigator Encrypt /AES-256

Native

Encryption of data in motion

TLS/SSL

Leveraged

Compliance Audit Support

Navigator Audit/Navigator

Lineage

Native

Key Management

KMS/KTS

Native

Malware & Harmful Code

Protection

TrendMicro

Leveraged

User Identity

NDC AD

Leveraged

Application Access

Kerberos

Native

User Access

Launchpad

Leveraged

Inventory/System Management

Dell KACE

Leveraged

Vulnerability Scanning

MVM

Leveraged

Software Vulnerability & Memory

Protection

EMET

Leveraged

HHPC 10061

5-1

Verify that this is the correct version before use.

5.0 DATA GOVERNANCE FRAMEWORK

A Data Governance Framework will be built for the NASA HHPC program to ensure clear communication

within the IMPALA team and across all organizations that it touches and to maintain scope and focus,

establish accountabilities, and define measurable successes. The details of the Data Governance

Framework are presented in the Data Governance Framework document.

HHPC 10061

6-1

Verify that this is the correct version before use.

6.0 OPERATIONAL SCENARIOS

The IMPALA Platform provides a single source of data for NASA data analysts, data scientists, and other

users to locate data, perform analysis, and share their investigations. The scope of functionality

provided by the tools in the IMPALA platform allows for a large number of operational scenarios. This

document will cover some of those operational scenarios most likely at the initial onset of use.

Initially, the IMPALA data reservoir is hydrated by scheduled Pentaho jobs that run either on demand or

on a scheduled basis (recurring daily or weekly) to refresh the data. On unsuccessful loads, there will be

exceptions and error logs generated. After data ingest, ETL engineers will use Trifacta to confirm data

or cleanse and transform it. Exception handling will also be handled at this stage and logged into the

system. After the data is imported and cleansed, it is ready for use by the Data Scientists and other end

users. Section 6.2, describes expected Operational Scenarios using the data imported into the IMPALA

platform.

6.1 END USER USAGE SCENARIOS

This section describes initial usage scenarios that portray end user experiences with the IMPALA

platform. These usage scenarios are grouped as End User and IT User scenarios. Table 6.1-1 is a

summary of some of the usage scenarios that are enabled by the IMPALA platform, which layer of the

platform they apply to, and which general IMPALA roles operate within those layers.

TABLE 6.1-1 SAMPLE USAGE SCENARIOS MAPPED TO IMPALA ROLES AND LAYERS

User Experience of Applies to Group Applicable IMPALA layer

Perform Data Ingestion at scale

Developer

IT User

Transport and Store

Profiling, Cataloging and managing data

Data Owner,

Data Steward

End User

Refine

Manually edit data

Data Steward,

Data Owner

End User

Analyze

Create, manage & share data sets

Data Scientist,

Data Owner,

Data Scientist

End User

Analyze and Distribute

6.1.1 Data Ingestion

The IMPALA platform leverages Pentaho Data Integrator (PDI) for ingesting data into the IMPALA data

reservoir. Pentaho Data Integrator enables developers on the HH&P IT team to create custom

workflow based jobs to pull data from the source, transform and push data into the data reservoir.

These jobs are executed on demand, or are scheduled, to hydrate the data reservoir from data sources

such as the EMR, LSAH, MEDB, and file shares hosting generated reports. The data ingest process

includes identifying data sources to ingest, identifying static transformations that need to be applied to

the data upon ingest, tracking of requests to create repeatable data ingest pipelines, creating and

executing repeatable pipelines.

HHPC 10061

6-2

Verify that this is the correct version before use.

Figure 6.1.1-1 Data Ingestion Flow

The Data Governance board identifies data sources that should be ingested into the IMPALA data

reservoir. Data scientists, Data Owner and Data Stewards define:

• Filters to ensure that data pulled into the data reservoir are relevant.

• Static transformations or business rules, like unit changes or dealing with nulls, that need to be

applied to the data upon ingest.

• Refresh intervals and other ingest parameters for the HH&P IT team to create data ingest

pipelines

The identified transformations, identified data sources, and ingest parameters are transformed into

requirements that are tracked within the JIRA component. The HH&P IT team reviews requirements

within JIRA and create:

• Sqoop jobs for reading data from a relational database and writing the data into IMPALA.

• Modular scripts used to transform the data using Trifacta and custom scripts.

• Workflows which orchestrate the connection to the data sources, the querying and filtering of

the data, and the transformation of the data as the data is pushed into the data reservoir.

In addition to creating workflows that are based on business rules (static transformations),

transformations created post initial data load using Trifacta can also the packaged and applied to future

ingests. The analysts reviews the data in the data reservoir and identifies necessary transformations

that need to be persisted. These transformations are then created using Trifacta and the ensuing script

is added to the data ingest workflow pipeline for future/subsequent data ingests.

HHPC 10061

6-3

Verify that this is the correct version before use.

6.1.2 Profiling and Cataloging Data

As part of a Data Governance framework, profiling and cataloging are organization techniques that

ensure data can be located and tracked within the data reservoir. One of the benefits of a data

reservoir include providing a single source for data of all types and origins. However, due to the size

and scope of the data reservoir capabilities, if organization techniques are not employed as the

reservoir fills, locating the desired information for analysis or visualizations can become increasingly

difficult. The Data Governance framework provides these processes to keep the data organized.

Figure 6.1.2-1 Data Cataloging Flow

The initial step for organization is determined by the folder structure used for storing data. The

incoming data is held in a landing zone within the IMPALA data reservoir while it is being profiled and

cataloged, then it is moved to a pre-established destination. Datasets and extracts created from the

original files can be tracked through the IMPALA platform using lineage and pedigree tools.

Profiling establishes metadata for incoming data on both the file and data element levels. The IMPALA

platform provides tools that perform automated profiling and tagging when the data is in the landing

zone within the IMPALA data reservoir. In addition to the tags provided by the tools, the user can

define business rules to customize tags based on the content of the incoming data. Once cataloging

and tagging jobs have been defined, a Pentaho Data Integrator workflow is defined and developed by a

developer to automate the process of cataloging and profiling when new data is placed into the landing

zone.

Cataloging the profiled data allows users to search the metadata and apply custom tags to quickly

locate files with specific content.

The Data Governance board provides the processes that will be followed for both automated and

customized profiling, cataloging, and file hierarchy. Collaboratively, Data scientists, Owners, Stewards

and Analysts define:

HHPC 10061

6-4

Verify that this is the correct version before use.

• Filters to ensure that data pulled into the data reservoir is relevant.

• Domains and tags for that data

• Filters and tags to define subject-area datasets

• Business rules for custom tagging

• Metadata searches

6.1.3 Manually Edit Data

There are many operational reasons for approved users to edit data in the data reservoir. The tools and

methods used to edit data depend on the length of time that the edit should persist, if at all they should

persist. There are generally three types of edits to data within the IMPALA platform, Persistent/Static

Transformation edits, Non-persistent/Analytic edits and In-place edits.

Persistent/Static transformation edits are applied to data prior to its landing in the data reservoir as

well as errors identified that need to be addressed for every data ingest after the initial load. The edits

that occur as data is being ingested the first time are applied using business rules built into Pentaho

workflows. The process for these edits is described in the steps below:

1. Subject Matter Expert/Analysts identifies a change that needs to be made to data before

ingestion based on historical knowledge or experience

2. A request is posted to the Developer to add business rules that edit the data in transit into the

data reservoir

3. Created business rules are inserted as steps in the data ingest workflow ensuring that the edits

are consistent and made on every data ingest

In other scenarios of persistent/static transformation edits, a subject matter expert or analyst identifies

error in the data while working with the data in the reservoir. In this scenario, the analysts uses Trifacta

to make edits to the data this process in-turn generates a scripts that can be called by the data ingest

orchestration workflow ensuring the transformation or edit is applied on subsequent ingests. This

process is depicted in Figure 6.1.3-1 below.

HHPC 10061

6-5

Verify that this is the correct version before use.

Figure 6.1.3-1 Persistent Manual Edit of Generated Data Set

Data that is generated and stored within the IMPALA data reservoir such as results of analysis or search

requests, are pulled into the wrangling tool, Trifacta. The analyst, who understands the data and the

edits that need to be made applies that transformation, executes the script, and validates the resulting

dataset. Execution of the script creates a new data set in the Data Reservoir with all the metadata

associated with the edit/transformation. A request is then sent to the ETL developer to add this script

to the data ingest process for persistent edits of data with the same structure.

Persistent edits or static transformations may include the following:

• Decomposing a compound field into multiple fields

• Unit conversions to standardize the measurement system for data of the same type (such as

dates, height, weight)

• Removing incorrect values

• Adding additional information

• Adding lookup or descriptive information

Tools used for persistent edits:

• Trifacta scripts integrated into the Pentaho workflow

Non-persisted edits generally describe edits that are made during the course of analyzing or dealing

with the data in the data reservoir. They are performed during analysis and modeling for a particular

purpose. These edits may be documented and shared with other users, but the edits only apply to the

data as it is being used in the analysis. In this type of edit, the data is not persisted and it is only used

for the duration of the analysis.

Types of edits performed during analysis may include:

HHPC 10061

6-6

Verify that this is the correct version before use.

• Removing null values

• Filtering outliers

• Deriving or calculating new fields

Tools used for edits during analysis:

• Trifacta scripts

• Alpine cleansing, filtering, or SQL functions

Regardless of which of the methods described above is used, the source data within the IMPALA

platform is never altered during editing. A copy of both the original data and the edited data are saved,

which allows for tracking and auditing of all data changes.

One last type of edit is in-place or row/cell level edits. These edits do not need to be persisted and do

not apply to the data-at-large. In this scenario, the analyst may recognize something as simple as a

misspelled word or unit on a particular row and cell within the generated dataset. In this scenario, an

out flow edit is required. First, the user must download the data set in a tabular form to their desktop.

Then using a tool such as excel, modify the row/cell with the errant data value. Once the error is

corrected, the data can then be uploaded back into the data reservoir in a location defined by the

governance process, if there is a requirement to track such change. Metadata that needs to apply to

this process is also defined in the data governance document. This metadata is used for auditing.

Figure 6.1.3-2 below is a depiction of this process.

Figure 6.1.3-2 In-Place Row/Cell Manual Edits of Generated Dataset

HHPC 10061

6-7

Verify that this is the correct version before use.

6.1.4 Create, Manage and Share Data sets

The main operational purpose of the IMPALA platform is to provide an environment that allows users to

access data from multiple data sources for data querying, creating data sets, assembling reports to

support requirements, performing analyses, and constructing dashboards and visualizations.

A user can create a new dataset by extracting, querying, editing, filtering, merging or joining existing

datasets within the IMPALA data reservoir. The resulting dataset will be stored in the IMPALA data

reservoir, where additional tags and metadata can be added. The IMPALA platform traces the lineage

of data elements and datasets, allowing the originating source(s) of the dataset to be traced using

Waterline or Cloudera Navigator, along with the modifications that have been made to the dataset.

The IMPALA system provides data collaboration, which allows users to share datasets, investigations,

and analysis within a secure environment. Frequently used queries can be shared with other team

members. In progress or completed investigations can be shared for review, comments, or posterity. In

particular, the methods and queries used to extract and cleanse a data set can be stored and shared

with other users, along with comments about how and why certain adjustments were made to the

dataset. Authorized team members will be able to re-use search queries, cleansing scripts or workflows

to fulfill similar data needs in the future. Over time, the shared analysis methods, SQL queries, and

search criteria will become a wealth of knowledge that can be used to rapidly onboard new team

members.

6.2 ADMINISTRATOR USAGE SCENARIOS

The Administrator manages the users and the applications that comprise the IMPALA platform. The

Administrator does not work directly with the data, and has a separate set of usage scenarios.

6.2.1 User Provisioning

The IMPALA platform relies on guidance from the data governance document and the MEME

infrastructure team to generate a NAMS request template for the IMPALA platform. The NAMS request

template will define, among other attributes, a list of IMPALA sponsors, a list of IMPALA registration

authorities, and a list of IMPALA roles.

HHPC 10061

6-8

Verify that this is the correct version before use.

Figure 6.2.1-1 IMPALA User Provisioning Process

A user requesting access to the IMPALA platform will complete a “Request New Application Account”

form within the NAMS web UI. As part of the form, the user selects the IMPALA roles to which they

wish to belong, as well as their sponsor. The request is forwarded via email to the IMPALA sponsor who

reviews the request. An approved request will generate an email request to the IMPALA registration

authority; this is the individual or individuals with super user rights within the IMPALA platform to

provision new users. The IMPALA registration authority creates an account or user profile within each