MySQL Performance Schema

Table of Contents

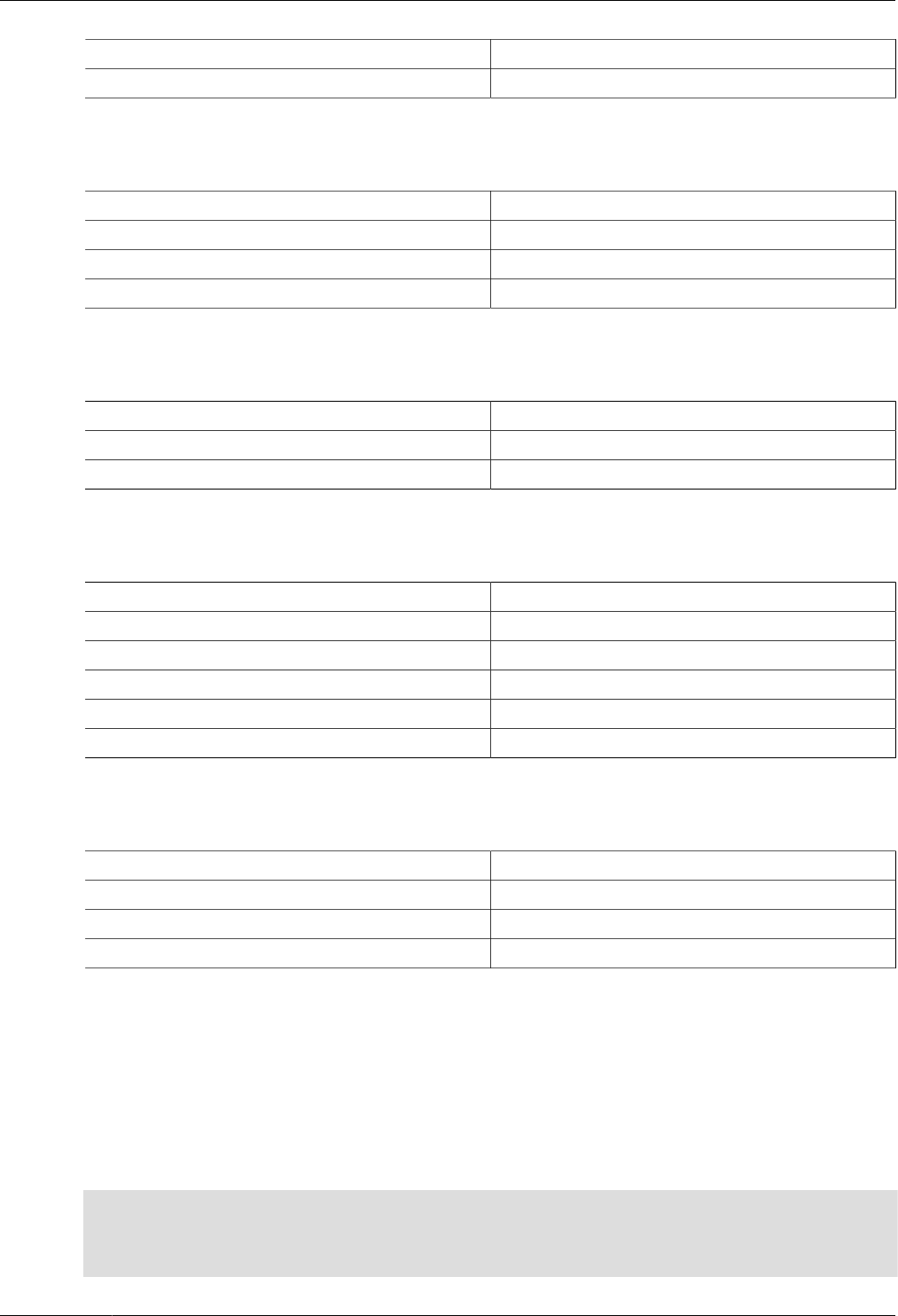

Preface and Legal Notices

1 MySQL Performance Schema

2 Performance Schema Quick Start

3 Performance Schema Build Configuration

4 Performance Schema Startup Configuration

5 Performance Schema Runtime Configuration

5.1 Performance Schema Event Timing

5.2 Performance Schema Event Filtering

5.3 Event Pre-Filtering

5.4 Pre-Filtering by Instrument

5.5 Pre-Filtering by Object

5.6 Pre-Filtering by Thread

5.7 Pre-Filtering by Consumer

5.8 Example Consumer Configurations

5.9 Naming Instruments or Consumers for Filtering Operations

5.10 Determining What Is Instrumented

6 Performance Schema Queries

7 Performance Schema Instrument Naming Conventions

8 Performance Schema Status Monitoring

9 Performance Schema General Table Characteristics

10 Performance Schema Table Descriptions

10.1 Performance Schema Table Reference

10.2 Performance Schema Setup Tables

10.2.1 The setup_actors Table

10.2.2 The setup_consumers Table

10.2.3 The setup_instruments Table

10.2.4 The setup_objects Table

10.2.5 The setup_timers Table

10.3 Performance Schema Instance Tables

10.3.1 The cond_instances Table

10.3.2 The file_instances Table

10.3.3 The mutex_instances Table

10.3.4 The rwlock_instances Table

10.3.5 The socket_instances Table

10.4 Performance Schema Wait Event Tables

10.4.1 The events_waits_current Table

10.4.2 The events_waits_history Table

10.4.3 The events_waits_history_long Table

10.5 Performance Schema Stage Event Tables

10.5.1 The events_stages_current Table

10.5.2 The events_stages_history Table

10.5.3 The events_stages_history_long Table

10.6 Performance Schema Statement Event Tables

10.6.1 The events_statements_current Table

10.6.2 The events_statements_history Table

10.6.3 The events_statements_history_long Table

10.6.4 The prepared_statements_instances Table

10.7 Performance Schema Transaction Tables

10.7.1 The events_transactions_current Table

10.7.2 The events_transactions_history Table

10.7.3 The events_transactions_history_long Table

10.8 Performance Schema Connection Tables

10.8.1 The accounts Table

10.8.2 The hosts Table

10.8.3 The users Table


10.9 Performance Schema Connection Attribute Tables

10.9.1 The session_account_connect_attrs Table

10.9.2 The session_connect_attrs Table

10.10 Performance Schema User-Defined Variable Tables

10.11 Performance Schema Replication Tables

10.11.1 The replication_connection_configuration Table

10.11.2 The replication_connection_status Table

10.11.3 The replication_applier_configuration Table

10.11.4 The replication_applier_status Table

10.11.5 The replication_applier_status_by_coordinator Table

10.11.6 The replication_applier_status_by_worker Table

10.11.7 The replication_group_members Table

10.11.8 The replication_group_member_stats Table

10.12 Performance Schema Lock Tables

10.12.1 The metadata_locks Table

10.12.2 The table_handles Table

10.13 Performance Schema System Variable Tables

10.14 Performance Schema Status Variable Tables

10.15 Performance Schema Summary Tables

10.15.1 Wait Event Summary Tables

10.15.2 Stage Summary Tables

10.15.3 Statement Summary Tables

10.15.4 Transaction Summary Tables

10.15.5 Object Wait Summary Table

10.15.6 File I/O Summary Tables

10.15.7 Table I/O and Lock Wait Summary Tables

10.15.8 Socket Summary Tables

10.15.9 Memory Summary Tables

10.15.10 Status Variable Summary Tables

10.16 Performance Schema Miscellaneous Tables

10.16.1 The host_cache Table

10.16.2 The performance_timers Table

10.16.3 The processlist Table

10.16.4 The threads Table

11 Performance Schema and Plugins

12 Performance Schema System Variables

13 Performance Schema Status Variables

14 Using the Performance Schema to Diagnose Problems

14.1 Query Profiling Using Performance Schema


Preface and Legal Notices

This is the MySQL Performance Schema extract from the MySQL 5.7 Reference Manual.

Licensing information—MySQL 5.7. This product may include third-party software, used under license. If you are using a Commercial release of MySQL 5.7, see the MySQL 5.7 Commercial Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Commercial release. If you are using a Community release of MySQL 5.7, see the MySQL 5.7 Community Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Community release.

Licensing information—MySQL NDB Cluster 7.5. This product may include third-party software, used under license. If you are using a Commercial release of NDB Cluster 7.5, see the MySQL NDB Cluster 7.5 Commercial Release License Information User Manual for licensing information relating to third-party software that may be included in this Commercial release. If you are using a Community release of NDB Cluster 7.5, see the MySQL NDB Cluster 7.5 Community Release License Information User Manual for licensing information relating to third-party software that may be included in this Community release.

Licensing information—MySQL NDB Cluster 7.6. If you are using a Commercial release of MySQL NDB Cluster 7.6, see the MySQL NDB Cluster 7.6 Commercial Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Commercial release. If you are using a Community release of MySQL NDB Cluster 7.6, see the MySQL NDB Cluster 7.6 Community Release License Information User Manual for licensing information, including licensing information relating to third-party software that may be included in this Community release.

Legal Notices

Copyright © 1997, 2024, Oracle and/or its affiliates.

License Restrictions

This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited.

Warranty Disclaimer

The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing.

Restricted Rights Notice

If this is software, software documentation, data (as defined in the Federal Acquisition Regulation), or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable:

U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed, or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software," "commercial computer software documentation," or "limited rights data" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed, or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. The terms governing the U.S. Government's use of Oracle cloud services are defined by the applicable contract for such services. No other rights are granted to the U.S. Government.

Hazardous Applications Notice

This software or hardware is developed for general use in a variety of information management applications. It is not developed or intended for use in any inherently dangerous applications, including applications that may create a risk of personal injury. If you use this software or hardware in dangerous applications, then you shall be responsible to take all appropriate fail-safe, backup, redundancy, and other measures to ensure its safe use. Oracle Corporation and its affiliates disclaim any liability for any damages caused by use of this software or hardware in dangerous applications.

Trademark Notice

Oracle, Java, MySQL, and NetSuite are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Intel and Intel Inside are trademarks or registered trademarks of Intel Corporation. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. AMD, Epyc, and the AMD logo are trademarks or registered trademarks of Advanced Micro Devices. UNIX is a registered trademark of The Open Group.

Third-Party Content, Products, and Services Disclaimer

This software or hardware and documentation may provide access to or information about content, products, and services from third parties. Oracle Corporation and its affiliates are not responsible for and expressly disclaim all warranties of any kind with respect to third-party content, products, and services unless otherwise set forth in an applicable agreement between you and Oracle. Oracle Corporation and its affiliates will not be responsible for any loss, costs, or damages incurred due to your access to or use of third-party content, products, or services, except as set forth in an applicable agreement between you and Oracle.

Use of This Documentation

This documentation is NOT distributed under a GPL license. Use of this documentation is subject to the following terms:

You may create a printed copy of this documentation solely for your own personal use. Conversion to other formats is allowed as long as the actual content is not altered or edited in any way. You shall not publish or distribute this documentation in any form or on any media, except if you distribute the documentation in a manner similar to how Oracle disseminates it (that is, electronically for download on a Web site with the software) or on a CD-ROM or similar medium, provided however that the documentation is disseminated together with the software on the same medium. Any other use, such as any dissemination of printed copies or use of this documentation, in whole or in part, in another publication, requires the prior written consent from an authorized representative of Oracle. Oracle and/or its affiliates reserve any and all rights to this documentation not expressly granted above.

Documentation Accessibility

For information about Oracle's commitment to accessibility, visit the Oracle Accessibility Program website at http://www.oracle.com/pls/topic/lookup?ctx=acc&id=docacc.

Access to Oracle Support for Accessibility

Oracle customers that have purchased support have access to electronic support through My Oracle Support. For information, visit


Chapter 1 MySQL Performance Schema

The MySQL Performance Schema is a feature for monitoring MySQL Server execution at a low level.

The Performance Schema has these characteristics:

• The Performance Schema provides a way to inspect internal execution of the server at runtime. It is implemented using the PERFORMANCE_SCHEMA storage engine and the performance_schema database. The Performance Schema focuses primarily on performance data. This differs from INFORMATION_SCHEMA, which serves for inspection of metadata.

• The Performance Schema monitors server events. An “event” is anything the server does that takes time and has been instrumented so that timing information can be collected. In general, an event could be a function call, a wait for the operating system, a stage of an SQL statement execution such as parsing or sorting, or an entire statement or group of statements. Event collection provides access to information about synchronization calls (such as for mutexes), file and table I/O, table locks, and so forth for the server and for several storage engines.

• Performance Schema events are distinct from events written to the server's binary log (which describe data modifications) and Event Scheduler events (which are a type of stored program).

• Performance Schema events are specific to a given instance of the MySQL Server. Performance Schema tables are considered local to the server, and changes to them are not replicated or written to the binary log.

• Current events are available, as well as event histories and summaries. This enables you to determine how many times instrumented activities were performed and how much time they took. Event information is available to show the activities of specific threads, or activity associated with particular objects such as a mutex or file.

• The PERFORMANCE_SCHEMA storage engine collects event data using “instrumentation points” in server source code.

• Collected events are stored in tables in the performance_schema database. These tables can be queried using SELECT statements like other tables.

• Performance Schema configuration can be modified dynamically by updating tables in the performance_schema database through SQL statements. Configuration changes affect data collection immediately.

• Tables in the Performance Schema are in-memory tables that use no persistent on-disk storage. The contents are repopulated beginning at server startup and discarded at server shutdown.

• Monitoring is available on all platforms supported by MySQL.

Some limitations might apply: The types of timers might vary per platform. Instruments that apply to storage engines might not be implemented for all storage engines. Instrumentation of each third-party engine is the responsibility of the engine maintainer. See also Restrictions on Performance Schema.

• Data collection is implemented by modifying the server source code to add instrumentation. There are no separate threads associated with the Performance Schema, unlike other features such as replication or the Event Scheduler.

The Performance Schema is intended to provide access to useful information about server execution while having minimal impact on server performance. The implementation follows these design goals:

• Activating the Performance Schema causes no changes in server behavior. For example, it does not cause thread scheduling to change, and it does not cause query execution plans (as shown by EXPLAIN) to change.

• Server monitoring occurs continuously and unobtrusively with very little overhead. Activating the Performance Schema does not make the server unusable.


• The parser is unchanged. There are no new keywords or statements.

• Execution of server code proceeds normally even if the Performance Schema fails internally.

• When there is a choice between performing processing during event collection initially or during event retrieval later, priority is given to making collection faster. This is because collection is ongoing whereas retrieval is on demand and might never happen at all.

• It is easy to add new instrumentation points.

• Instrumentation is versioned. If the instrumentation implementation changes, previously instrumented code continues to work. This benefits developers of third-party plugins because it is not necessary to upgrade each plugin to stay synchronized with the latest Performance Schema changes.
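The collection-versus-retrieval trade-off described above can be illustrated with a small Python sketch. It is a hypothetical model, not Performance Schema source code: the class name WaitSummary is invented, and its fields only borrow the COUNT_STAR, SUM_TIMER_WAIT, MIN_TIMER_WAIT, and MAX_TIMER_WAIT names that the summary tables use. Recording an event performs a few constant-time integer updates, while the average is derived only when a reader asks for it. Whether the server computes its own averages exactly this way is not stated here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class WaitSummary:
    """Hypothetical running aggregate for one instrument (not server code)."""

    count_star: int = 0
    sum_timer_wait: int = 0
    min_timer_wait: Optional[int] = None  # None until the first event arrives
    max_timer_wait: int = 0

    def record(self, timer_wait: int) -> None:
        """Collection path: constant-time updates, run for every event."""
        self.count_star += 1
        self.sum_timer_wait += timer_wait
        if self.min_timer_wait is None or timer_wait < self.min_timer_wait:
            self.min_timer_wait = timer_wait
        self.max_timer_wait = max(self.max_timer_wait, timer_wait)

    def avg_timer_wait(self) -> int:
        """Retrieval path: derived on demand, so an unread average costs nothing."""
        if self.count_star == 0:
            return 0
        return self.sum_timer_wait // self.count_star
```

Recording the waits 686322, 320535, and 339390 leaves count_star at 3 and sum_timer_wait at 1346247, and avg_timer_wait() returns 448749 only at the moment it is called.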

Note

The MySQL sys schema is a set of objects that provides convenient access to data collected by the Performance Schema. The sys schema is installed by default. For usage instructions, see MySQL sys Schema.


Chapter 2 Performance Schema Quick Start

This chapter briefly introduces the Performance Schema with examples that show how to use it. For additional examples, see Chapter 14, Using the Performance Schema to Diagnose Problems.

The Performance Schema is enabled by default. To enable or disable it explicitly, start the server with the performance_schema variable set to an appropriate value. For example, use these lines in the server my.cnf file:

[mysqld]
performance_schema=ON
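As a rough illustration of how such an option line is interpreted, the following Python sketch reads option-file text with the standard configparser module and reports whether the [mysqld] group enables the Performance Schema. It assumes MySQL's documented option conventions: dashes and underscores in option names are interchangeable, boolean options accept ON/TRUE/1 and OFF/FALSE/0, and a skip- prefix disables an option. It ignores !include directives, the loose- prefix, and precedence across multiple option files. The function name performance_schema_setting is hypothetical.

```python
import configparser
from typing import Optional

# Boolean spellings MySQL accepts for options such as performance_schema.
TRUE_VALUES = {"on", "true", "1"}
FALSE_VALUES = {"off", "false", "0"}


def performance_schema_setting(option_text: str) -> Optional[bool]:
    """Return True/False if [mysqld] sets performance_schema, else None.

    Simplified sketch: no !include handling, no loose- prefix, and only
    the text passed in is considered (no multi-file precedence).
    """
    parser = configparser.ConfigParser(
        allow_no_value=True, strict=False, interpolation=None
    )
    parser.read_string(option_text)
    if not parser.has_section("mysqld"):
        return None
    result: Optional[bool] = None
    for key, value in parser.items("mysqld"):
        name = key.replace("-", "_")  # dashes and underscores are equivalent
        if name == "skip_performance_schema":
            result = False
        elif name == "performance_schema":
            if value is None:
                result = True  # a bare boolean option name enables it
                continue
            text = value.strip().strip("'\"").lower()
            if text in TRUE_VALUES:
                result = True
            elif text in FALSE_VALUES:
                result = False
            else:
                raise ValueError(f"unrecognized value: {value!r}")
    return result
```

For the two-line file shown above, performance_schema_setting returns True; for a file without a [mysqld] group it returns None, meaning the server default applies.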

When the server starts, it sees performance_schema and attempts to initialize the Performance Schema. To verify successful initialization, use this statement:

mysql> SHOW VARIABLES LIKE 'performance_schema';
+--------------------+-------+
| Variable_name      | Value |
+--------------------+-------+
| performance_schema | ON    |
+--------------------+-------+

A value of ON means that the Performance Schema initialized successfully and is ready for use. A value of OFF means that some error occurred. Check the server error log for information about what went wrong.

The Performance Schema is implemented as a storage engine. If this engine is available (which you should already have checked earlier), you should see it listed with a SUPPORT value of YES in the output from the Information Schema ENGINES table or the SHOW ENGINES statement:

mysql> SELECT * FROM INFORMATION_SCHEMA.ENGINES
       WHERE ENGINE='PERFORMANCE_SCHEMA'\G
*************************** 1. row ***************************
      ENGINE: PERFORMANCE_SCHEMA
     SUPPORT: YES
     COMMENT: Performance Schema
TRANSACTIONS: NO
          XA: NO
  SAVEPOINTS: NO

mysql> SHOW ENGINES\G
...
      Engine: PERFORMANCE_SCHEMA
     Support: YES
     Comment: Performance Schema
Transactions: NO
          XA: NO
  Savepoints: NO
...

The PERFORMANCE_SCHEMA storage engine operates on tables in the performance_schema database. You can make performance_schema the default database so that references to its tables need not be qualified with the database name:

mysql> USE performance_schema;

Performance Schema tables are stored in the performance_schema database. Information about the structure of this database and its tables can be obtained, as for any other database, by selecting from the INFORMATION_SCHEMA database or by using SHOW statements. For example, use either of these statements to see what Performance Schema tables exist:

mysql> SELECT TABLE_NAME FROM INFORMATION_SCHEMA.TABLES
       WHERE TABLE_SCHEMA = 'performance_schema';
+------------------------------------------------------+
| TABLE_NAME                                           |
+------------------------------------------------------+
| accounts                                             |
| cond_instances                                       |
...
| events_stages_current                                |
| events_stages_history                                |
| events_stages_history_long                           |
| events_stages_summary_by_account_by_event_name       |
| events_stages_summary_by_host_by_event_name          |
| events_stages_summary_by_thread_by_event_name        |
| events_stages_summary_by_user_by_event_name          |
| events_stages_summary_global_by_event_name           |
| events_statements_current                            |
| events_statements_history                            |
| events_statements_history_long                       |
...
| file_instances                                       |
| file_summary_by_event_name                           |
| file_summary_by_instance                             |
| host_cache                                           |
| hosts                                                |
| memory_summary_by_account_by_event_name              |
| memory_summary_by_host_by_event_name                 |
| memory_summary_by_thread_by_event_name               |
| memory_summary_by_user_by_event_name                 |
| memory_summary_global_by_event_name                  |
| metadata_locks                                       |
| mutex_instances                                      |
| objects_summary_global_by_type                       |
| performance_timers                                   |
| replication_connection_configuration                 |
| replication_connection_status                        |
| replication_applier_configuration                    |
| replication_applier_status                           |
| replication_applier_status_by_coordinator            |
| replication_applier_status_by_worker                 |
| rwlock_instances                                     |
| session_account_connect_attrs                        |
| session_connect_attrs                                |
| setup_actors                                         |
| setup_consumers                                      |
| setup_instruments                                    |
| setup_objects                                        |
| setup_timers                                         |
| socket_instances                                     |
| socket_summary_by_event_name                         |
| socket_summary_by_instance                           |
| table_handles                                        |
| table_io_waits_summary_by_index_usage                |
| table_io_waits_summary_by_table                      |
| table_lock_waits_summary_by_table                    |
| threads                                              |
| users                                                |
+------------------------------------------------------+

mysql> SHOW TABLES FROM performance_schema;
+------------------------------------------------------+
| Tables_in_performance_schema                         |
+------------------------------------------------------+
| accounts                                             |
| cond_instances                                       |
| events_stages_current                                |
| events_stages_history                                |
| events_stages_history_long                           |
...

The number of Performance Schema tables increases over time as implementation of additional instrumentation proceeds.

The name of the performance_schema database is lowercase, as are the names of tables within it. Queries should specify the names in lowercase.

To see the structure of individual tables, use SHOW CREATE TABLE:


mysql> SHOW CREATE TABLE performance_schema.setup_consumers\G
*************************** 1. row ***************************
       Table: setup_consumers
Create Table: CREATE TABLE `setup_consumers` (
  `NAME` varchar(64) NOT NULL,
  `ENABLED` enum('YES','NO') NOT NULL
) ENGINE=PERFORMANCE_SCHEMA DEFAULT CHARSET=utf8

Table structure is also available by selecting from tables such as INFORMATION_SCHEMA.COLUMNS or by using statements such as SHOW COLUMNS.

Tables in the performance_schema database can be grouped according to the type of information in them: current events, event histories and summaries, object instances, and setup (configuration) information. The following examples illustrate a few uses for these tables. For detailed information about the tables in each group, see Chapter 10, Performance Schema Table Descriptions.
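The grouping can be roughly approximated from table names alone. The following Python sketch is a heuristic based on the naming patterns visible in the earlier table list: setup_ prefixes, _instances suffixes, _summary_ in the name, and events_..._current or events_..._history tables. It is an assumption for illustration, not an official classification, and the function name table_group is hypothetical. Tables such as accounts or threads fall into an "other" bucket.

```python
def table_group(name: str) -> str:
    """Guess a performance_schema table's group from its name (heuristic)."""
    if name.startswith("setup_"):
        return "setup"
    if name.endswith("_instances"):
        return "instances"
    if "_summary_" in name:
        return "summary"
    if name.startswith("events_") and name.endswith("_current"):
        return "current"
    if name.startswith("events_") and "_history" in name:
        return "history"
    return "other"  # e.g. accounts, hosts, threads, metadata_locks
```

The order of the checks matters. For example, file_summary_by_instance ends in "_instance" rather than "_instances", so it is classified by its "_summary_" component.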

Initially, not all instruments and consumers are enabled, so the Performance Schema does not collect all events. To turn all of them on and enable event timing, execute two statements (the row counts may differ depending on the MySQL version):

mysql> UPDATE performance_schema.setup_instruments
       SET ENABLED = 'YES', TIMED = 'YES';
Query OK, 560 rows affected (0.04 sec)

mysql> UPDATE performance_schema.setup_consumers
       SET ENABLED = 'YES';
Query OK, 10 rows affected (0.00 sec)
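The two statements matter because, conceptually, an event is recorded only when its instrument is enabled and a consumer wants it, and it carries timing only when the instrument is timed. The Python sketch below is a deliberately simplified model of that pre-filtering. The Instrument class and collect function are hypothetical, and the model treats consumers as a single on/off switch, ignoring the consumer hierarchy described in Section 5.7, “Pre-Filtering by Consumer”.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass
class Instrument:
    enabled: bool  # models setup_instruments.ENABLED = 'YES'
    timed: bool    # models setup_instruments.TIMED = 'YES'


def collect(
    instruments: Dict[str, Instrument],
    consumer_enabled: bool,
    event_name: str,
    start_ps: int,
    end_ps: int,
) -> Optional[Tuple[str, Optional[int]]]:
    """Return (EVENT_NAME, TIMER_WAIT) if the event would be stored, else None."""
    instrument = instruments.get(event_name)
    if instrument is None or not instrument.enabled:
        return None  # disabled instrument: nothing is produced
    if not consumer_enabled:
        return None  # no enabled consumer: nowhere to store the event
    # Untimed instruments still produce events, but with NULL (None) timings.
    timer_wait = end_ps - start_ps if instrument.timed else None
    return event_name, timer_wait
```

Setting every ENABLED and TIMED value to 'YES', as the UPDATE statements do, corresponds to the case where every call returns a tuple with a non-None duration.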

To see what the server is doing at the moment, examine the events_waits_current table. It contains one row per thread showing each thread's most recent monitored event:

mysql> SELECT *
       FROM performance_schema.events_waits_current\G
*************************** 1. row ***************************
            THREAD_ID: 0
             EVENT_ID: 5523
         END_EVENT_ID: 5523
           EVENT_NAME: wait/synch/mutex/mysys/THR_LOCK::mutex
               SOURCE: thr_lock.c:525
          TIMER_START: 201660494489586
            TIMER_END: 201660494576112
           TIMER_WAIT: 86526
                SPINS: NULL
        OBJECT_SCHEMA: NULL
          OBJECT_NAME: NULL
           INDEX_NAME: NULL
          OBJECT_TYPE: NULL
OBJECT_INSTANCE_BEGIN: 142270668
     NESTING_EVENT_ID: NULL
   NESTING_EVENT_TYPE: NULL
            OPERATION: lock
      NUMBER_OF_BYTES: NULL
                FLAGS: 0
...

This event indicates that thread 0 was waiting for 86,526 picoseconds to acquire a lock on THR_LOCK::mutex, a mutex in the mysys subsystem. The first few columns provide the following information:

• The ID columns indicate which thread the event comes from and the event number.

• EVENT_NAME indicates what was instrumented, and SOURCE indicates which source file (and line) contains the instrumented code.

• The timer columns show when the event started and stopped and how long it took. If an event is still in progress, the TIMER_END and TIMER_WAIT values are NULL. Timer values are approximate and expressed in picoseconds. For information about timers and event time collection, see Section 5.1, “Performance Schema Event Timing”.
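Because TIMER_WAIT is the difference between TIMER_END and TIMER_START in picoseconds, the value in the example row can be checked by hand: 201660494576112 - 201660494489586 = 86526. The Python helpers below (hypothetical names timer_wait and format_picoseconds) reproduce that subtraction, treat a missing TIMER_END as an in-progress event, and scale picoseconds to a readable unit. The sys schema offers a comparable SQL function, sys.format_time().

```python
from typing import Optional

# Picoseconds per unit, largest first.
_UNITS = ((10**12, "s"), (10**9, "ms"), (10**6, "us"), (10**3, "ns"))


def timer_wait(timer_start: int, timer_end: Optional[int]) -> Optional[int]:
    """Duration in picoseconds; None (NULL) while the event is in progress."""
    if timer_end is None:
        return None
    return timer_end - timer_start


def format_picoseconds(ps: int) -> str:
    """Render a picosecond count using the largest unit that is at least 1."""
    for scale, unit in _UNITS:
        if ps >= scale:
            return f"{ps / scale:.2f} {unit}"
    return f"{ps} ps"
```

For the row above, timer_wait(201660494489586, 201660494576112) returns 86526, which format_picoseconds renders as "86.53 ns".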


The history tables contain the same kind of rows as the current-events table but have more rows and show what the server has been doing “recently” rather than “currently.” The events_waits_history and events_waits_history_long tables contain the most recent 10 events per thread and the most recent 10,000 events, respectively. These sizes are controlled by the performance_schema_events_waits_history_size and performance_schema_events_waits_history_long_size system variables. For example, to see information for recent events produced by thread 13, do this:

mysql> SELECT EVENT_ID, EVENT_NAME, TIMER_WAIT
       FROM performance_schema.events_waits_history
       WHERE THREAD_ID = 13
       ORDER BY EVENT_ID;
+----------+-----------------------------------------+------------+
| EVENT_ID | EVENT_NAME                              | TIMER_WAIT |
+----------+-----------------------------------------+------------+
|       86 | wait/synch/mutex/mysys/THR_LOCK::mutex  |     686322 |
|       87 | wait/synch/mutex/mysys/THR_LOCK_malloc  |     320535 |
|       88 | wait/synch/mutex/mysys/THR_LOCK_malloc  |     339390 |
|       89 | wait/synch/mutex/mysys/THR_LOCK_malloc  |     377100 |
|       90 | wait/synch/mutex/sql/LOCK_plugin        |     614673 |
|       91 | wait/synch/mutex/sql/LOCK_open          |     659925 |
|       92 | wait/synch/mutex/sql/THD::LOCK_thd_data |     494001 |
|       93 | wait/synch/mutex/mysys/THR_LOCK_malloc  |     222489 |
|       94 | wait/synch/mutex/mysys/THR_LOCK_malloc  |     214947 |
|       95 | wait/synch/mutex/mysys/LOCK_alarm       |     312993 |
+----------+-----------------------------------------+------------+

As new events are added to a history table, older events are discarded if the table is full.
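
One way to picture the two history tables is as fixed-size ring buffers: one buffer per thread for events_waits_history and a single shared buffer for events_waits_history_long. The Python sketch below models that with collections.deque(maxlen=...), assuming the default sizes quoted above. It illustrates the documented discard-oldest behavior only and is not how the server stores rows.

```python
from collections import defaultdict, deque

HISTORY_SIZE = 10           # assumed per-thread size of events_waits_history
HISTORY_LONG_SIZE = 10_000  # assumed global size of events_waits_history_long

# One bounded buffer per thread, plus one shared bounded buffer.
history = defaultdict(lambda: deque(maxlen=HISTORY_SIZE))
history_long = deque(maxlen=HISTORY_LONG_SIZE)


def record(thread_id: int, event_id: int) -> None:
    """Append a finished event; a full deque drops its oldest entry."""
    history[thread_id].append(event_id)
    history_long.append((thread_id, event_id))


for event_id in range(1, 13):  # thread 13 produces 12 events
    record(13, event_id)
for event_id in range(1, 4):   # thread 7 produces 3 events
    record(7, event_id)

print(list(history[13]))  # [3, 4, 5, 6, 7, 8, 9, 10, 11, 12]
print(list(history[7]))   # [1, 2, 3]
print(len(history_long))  # 15
```

Because each thread has its own buffer in this model, a busy thread cannot push another thread's rows out of events_waits_history, but it can do so in events_waits_history_long.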

Summary tables provide aggregated information for all events over time. The tables in this group summarize event data in different ways. To see which instruments have been executed the most times or have taken the most wait time, sort the events_waits_summary_global_by_event_name table on the COUNT_STAR or SUM_TIMER_WAIT column, which correspond to a COUNT(*) or SUM(TIMER_WAIT) value, respectively, calculated over all events:

mysql> SELECT EVENT_NAME, COUNT_STAR
       FROM performance_schema.events_waits_summary_global_by_event_name
       ORDER BY COUNT_STAR DESC LIMIT 10;
+---------------------------------------------------+------------+
| EVENT_NAME                                        | COUNT_STAR |
+---------------------------------------------------+------------+
| wait/synch/mutex/mysys/THR_LOCK_malloc            |       6419 |
| wait/io/file/sql/FRM                              |        452 |
| wait/synch/mutex/sql/LOCK_plugin                  |        337 |
| wait/synch/mutex/mysys/THR_LOCK_open              |        187 |
| wait/synch/mutex/mysys/LOCK_alarm                 |        147 |
| wait/synch/mutex/sql/THD::LOCK_thd_data           |        115 |
| wait/io/file/myisam/kfile                         |        102 |
| wait/synch/mutex/sql/LOCK_global_system_variables |         89 |
| wait/synch/mutex/mysys/THR_LOCK::mutex            |         89 |
| wait/synch/mutex/sql/LOCK_open                    |         88 |
+---------------------------------------------------+------------+

mysql> SELECT EVENT_NAME, SUM_TIMER_WAIT
       FROM performance_schema.events_waits_summary_global_by_event_name
       ORDER BY SUM_TIMER_WAIT DESC LIMIT 10;
+----------------------------------------+----------------+
| EVENT_NAME                             | SUM_TIMER_WAIT |
+----------------------------------------+----------------+
| wait/io/file/sql/MYSQL_LOG             |     1599816582 |
| wait/synch/mutex/mysys/THR_LOCK_malloc |     1530083250 |
| wait/io/file/sql/binlog_index          |     1385291934 |
| wait/io/file/sql/FRM                   |     1292823243 |
| wait/io/file/myisam/kfile              |      411193611 |
| wait/io/file/myisam/dfile              |      322401645 |
| wait/synch/mutex/mysys/LOCK_alarm      |      145126935 |
| wait/io/file/sql/casetest              |      104324715 |
| wait/synch/mutex/sql/LOCK_plugin       |       86027823 |
| wait/io/file/sql/pid                   |       72591750 |
+----------------------------------------+----------------+

These results show that the THR_LOCK_malloc mutex is “hot,” both in terms of how often it is used and the amount of time that threads wait attempting to acquire it.


Note

The THR_LOCK_malloc mutex is used only in debug builds. In production builds it is not hot because it is nonexistent.
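
To make the COUNT_STAR and SUM_TIMER_WAIT definitions concrete, this Python sketch groups the ten events_waits_history rows for thread 13 shown earlier by EVENT_NAME and computes COUNT(*) and SUM(TIMER_WAIT) for each group. It works on that small sample only, so its numbers differ from the global summary output above, which covers all recorded events.

```python
from collections import defaultdict

# The events_waits_history rows for thread 13 shown earlier:
# (EVENT_NAME, TIMER_WAIT in picoseconds).
rows = [
    ("wait/synch/mutex/mysys/THR_LOCK::mutex", 686322),
    ("wait/synch/mutex/mysys/THR_LOCK_malloc", 320535),
    ("wait/synch/mutex/mysys/THR_LOCK_malloc", 339390),
    ("wait/synch/mutex/mysys/THR_LOCK_malloc", 377100),
    ("wait/synch/mutex/sql/LOCK_plugin", 614673),
    ("wait/synch/mutex/sql/LOCK_open", 659925),
    ("wait/synch/mutex/sql/THD::LOCK_thd_data", 494001),
    ("wait/synch/mutex/mysys/THR_LOCK_malloc", 222489),
    ("wait/synch/mutex/mysys/THR_LOCK_malloc", 214947),
    ("wait/synch/mutex/mysys/LOCK_alarm", 312993),
]

count_star = defaultdict(int)      # COUNT(*) per EVENT_NAME
sum_timer_wait = defaultdict(int)  # SUM(TIMER_WAIT) per EVENT_NAME
for name, timer_wait in rows:
    count_star[name] += 1
    sum_timer_wait[name] += timer_wait

# The instrument executed most often in this sample, with its totals.
top = max(count_star, key=count_star.get)
print(top, count_star[top], sum_timer_wait[top])
# wait/synch/mutex/mysys/THR_LOCK_malloc 5 1474461
```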

Instance tables document what types of objects are instrumented. An instrumented object, when used by the server, produces an event. These tables provide event names and explanatory notes or status information. For example, the file_instances table lists instances of instruments for file I/O operations and their associated files:

mysql> SELECT *
       FROM performance_schema.file_instances\G
*************************** 1. row ***************************
 FILE_NAME: /opt/mysql-log/60500/binlog.000007
EVENT_NAME: wait/io/file/sql/binlog
OPEN_COUNT: 0
*************************** 2. row ***************************
 FILE_NAME: /opt/mysql/60500/data/mysql/tables_priv.MYI
EVENT_NAME: wait/io/file/myisam/kfile
OPEN_COUNT: 1
*************************** 3. row ***************************
 FILE_NAME: /opt/mysql/60500/data/mysql/columns_priv.MYI
EVENT_NAME: wait/io/file/myisam/kfile
OPEN_COUNT: 1
...

Setup tables are used to configure and display monitoring characteristics. For example, setup_instruments lists the set of instruments for which events can be collected and shows which of them are enabled:

mysql> SELECT * FROM performance_schema.setup_instruments;
+---------------------------------------------------+---------+-------+
| NAME                                              | ENABLED | TIMED |
+---------------------------------------------------+---------+-------+
...
| stage/sql/end                                     | NO      | NO    |
| stage/sql/executing                               | NO      | NO    |
| stage/sql/init                                    | NO      | NO    |
| stage/sql/insert                                  | NO      | NO    |
...
| statement/sql/load                                | YES     | YES   |
| statement/sql/grant                               | YES     | YES   |
| statement/sql/check                               | YES     | YES   |
| statement/sql/flush                               | YES     | YES   |
...
| wait/synch/mutex/sql/LOCK_global_read_lock        | YES     | YES   |
| wait/synch/mutex/sql/LOCK_global_system_variables | YES     | YES   |
| wait/synch/mutex/sql/LOCK_lock_db                 | YES     | YES   |
| wait/synch/mutex/sql/LOCK_manager                 | YES     | YES   |
...
| wait/synch/rwlock/sql/LOCK_grant                  | YES     | YES   |
| wait/synch/rwlock/sql/LOGGER::LOCK_logger         | YES     | YES   |
| wait/synch/rwlock/sql/LOCK_sys_init_connect       | YES     | YES   |
| wait/synch/rwlock/sql/LOCK_sys_init_slave         | YES     | YES   |
...
| wait/io/file/sql/binlog                           | YES     | YES   |
| wait/io/file/sql/binlog_index                     | YES     | YES   |
| wait/io/file/sql/casetest                         | YES     | YES   |
| wait/io/file/sql/dbopt                            | YES     | YES   |
...

To understand how to interpret instrument names, see Chapter 7, Performance Schema Instrument Naming Conventions.

To control whether events are collected for an instrument, set its ENABLED value to YES or NO. For example:

mysql> UPDATE performance_schema.setup_instruments
       SET ENABLED = 'NO'
       WHERE NAME = 'wait/synch/mutex/sql/LOCK_mysql_create_db';

The Performance Schema uses collected events to update tables in the performance_schema database, which act as “consumers” of event information. The setup_consumers table lists the available consumers and which are enabled:

mysql> SELECT * FROM performance_schema.setup_consumers;
+----------------------------------+---------+
| NAME                             | ENABLED |
+----------------------------------+---------+
| events_stages_current            | NO      |
| events_stages_history            | NO      |
| events_stages_history_long       | NO      |
| events_statements_current        | YES     |
| events_statements_history        | YES     |
| events_statements_history_long   | NO      |
| events_transactions_current      | NO      |
| events_transactions_history      | NO      |
| events_transactions_history_long | NO      |
| events_waits_current             | NO      |
| events_waits_history             | NO      |
| events_waits_history_long        | NO      |
| global_instrumentation           | YES     |
| thread_instrumentation           | YES     |
| statements_digest                | YES     |
+----------------------------------+---------+

To control whether the Performance Schema maintains a consumer as a destination for event information, set its ENABLED value.

For more information about the setup tables and how to use them to control event collection, see Section 5.2, “Performance Schema Event Filtering”.

There are some miscellaneous tables that do not fall into any of the previous groups. For example, performance_timers lists the available event timers and their characteristics. For information about timers, see Section 5.1, “Performance Schema Event Timing”.


Chapter 3 Performance Schema Build Configuration

The Performance Schema is mandatory and always compiled in. It is possible to exclude certain parts of the Performance Schema instrumentation. For example, to exclude stage and statement instrumentation, do this:

$> cmake . \
         -DDISABLE_PSI_STAGE=1 \
         -DDISABLE_PSI_STATEMENT=1

For more information, see the descriptions of the DISABLE_PSI_XXX CMake options in MySQL Source-Configuration Options.

If you install MySQL over a previous installation that was configured without the Performance Schema (or with an older version of the Performance Schema that has missing or out-of-date tables), the performance_schema tables may not have the structure the server expects. One indication of this issue is the presence of messages such as the following in the error log:

[ERROR] Native table 'performance_schema'.'events_waits_history'
has the wrong structure
[ERROR] Native table 'performance_schema'.'events_waits_history_long'
has the wrong structure
...

To correct that problem, perform the MySQL upgrade procedure. See Upgrading MySQL.

To verify whether a server was built with Performance Schema support, check its help output. If the Performance Schema is available, the output mentions several variables with names that begin with performance_schema:

$> mysqld --verbose --help
...
  --performance_schema
                      Enable the performance schema.
  --performance_schema_events_waits_history_long_size=#
                      Number of rows in events_waits_history_long.
...

You can also connect to the server and look for a line that names the PERFORMANCE_SCHEMA storage engine in the output from SHOW ENGINES:

mysql> SHOW ENGINES\G
...
      Engine: PERFORMANCE_SCHEMA
     Support: YES
     Comment: Performance Schema
Transactions: NO
          XA: NO
  Savepoints: NO
...

If the Performance Schema was not configured into the server at build time, no row for PERFORMANCE_SCHEMA appears in the output from SHOW ENGINES. You might see performance_schema listed in the output from SHOW DATABASES, but it has no tables and cannot be used.

A line for PERFORMANCE_SCHEMA in the SHOW ENGINES output means that the Performance Schema is available, not that it is enabled. Whether it is enabled is determined at server startup, as described in the next section.


Chapter 4 Performance Schema Startup Configuration

For event collection to occur, the Performance Schema must be enabled at server startup.

Assuming that the Performance Schema is available, it is enabled by default. To enable or disable it explicitly, start the server with the performance_schema variable set to an appropriate value. For example, use these lines in your my.cnf file:

[mysqld]
performance_schema=ON

If the server is unable to allocate any internal buffer during Performance Schema initialization, the Performance Schema disables itself and sets performance_schema to OFF, and the server runs without instrumentation.

The Performance Schema also permits instrument and consumer configuration at server startup.

To control an instrument at server startup, use an option of this form:

--performance-schema-instrument='instrument_name=value'

Here, instrument_name is an instrument name such as wait/synch/mutex/sql/LOCK_open, and value is one of these values:

• OFF, FALSE, or 0: Disable the instrument

• ON, TRUE, or 1: Enable and time the instrument

• COUNTED: Enable and count (rather than time) the instrument

Each --performance-schema-instrument option can specify only one instrument name, but multiple instances of the option can be given to configure multiple instruments. In addition, patterns are permitted in instrument names to configure instruments that match the pattern. To configure all condition synchronization instruments as enabled and counted, use this option:

--performance-schema-instrument='wait/synch/cond/%=COUNTED'

To disable all instruments, use this option:

--performance-schema-instrument='%=OFF'

Exception: The memory/performance_schema/% instruments are built in and cannot be disabled at startup.

Longer instrument name strings take precedence over shorter pattern names, regardless of order. For information about specifying patterns to select instruments, see Section 5.9, “Naming Instruments or Consumers for Filtering Operations”.

An unrecognized instrument name is ignored. It is possible that a plugin installed later may create the instrument, at which time the name is recognized and configured.
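
The precedence rule can be sketched in Python as follows. This is a hypothetical model, not the server's option parser. It treats % as a wildcard for any sequence of characters (other pattern rules from Section 5.9 are not modeled), maps each value keyword to the ENABLED and TIMED settings it implies, and lets the longest matching name string win. The instrument name COND_example is made up for the illustration.

```python
import re
from typing import List, Optional, Tuple

# Value keywords from the text, mapped to the (ENABLED, TIMED) pair they imply.
# For OFF, TIMED is modeled as NO; it is irrelevant when ENABLED is NO.
VALUES = {
    "OFF": ("NO", "NO"), "FALSE": ("NO", "NO"), "0": ("NO", "NO"),
    "ON": ("YES", "YES"), "TRUE": ("YES", "YES"), "1": ("YES", "YES"),
    "COUNTED": ("YES", "NO"),
}


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Anchored regex for a name pattern; only the % wildcard is modeled."""
    parts = (re.escape(part) for part in pattern.split("%"))
    return re.compile("^" + ".*".join(parts) + "$")


def resolve(instrument: str, options: List[str]) -> Optional[Tuple[str, str]]:
    """Return (ENABLED, TIMED) for one instrument, or None if nothing matches.

    Among the options whose name string matches, the longest one wins,
    so the order in which the options are given does not matter.
    """
    best_name, best_value = None, None
    for option in options:
        name, _, value = option.rpartition("=")
        if pattern_to_regex(name).match(instrument):
            if best_name is None or len(name) > len(best_name):
                best_name, best_value = name, VALUES[value.upper()]
    return best_value


opts = ["wait/synch/cond/%=COUNTED", "%=OFF"]
print(resolve("wait/synch/cond/sql/COND_example", opts))        # ('YES', 'NO')
print(resolve("wait/synch/cond/sql/COND_example", opts[::-1]))  # ('YES', 'NO')
print(resolve("wait/synch/mutex/sql/LOCK_open", opts))          # ('NO', 'NO')
```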

To control a consumer at server startup, use an option of this form:

--performance-schema-consumer-consumer_name=value

Here, consumer_name is a consumer name such as events_waits_history, and value is one of these values:

• OFF, FALSE, or 0: Do not collect events for the consumer

• ON, TRUE, or 1: Collect events for the consumer

For example, to enable the events_waits_history consumer, use this option:

--performance-schema-consumer-events-waits-history=ON

The permitted consumer names can be found by examining the setup_consumers table. Patterns are not permitted. Consumer names in the setup_consumers table use underscores, but for consumers set at startup, dashes and underscores within the name are equivalent.
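
A small Python sketch of how a consumer option maps to a setup_consumers row, following the rules above: dashes and underscores in the name are equivalent, patterns are rejected, and the value must be one of the listed keywords. parse_consumer_option is a hypothetical helper, and treating the keywords case-insensitively is an assumption of this sketch.

```python
from typing import Tuple

PREFIX = "--performance-schema-consumer-"
TRUE_VALUES = {"ON", "TRUE", "1"}
FALSE_VALUES = {"OFF", "FALSE", "0"}


def parse_consumer_option(option: str) -> Tuple[str, bool]:
    """Return (setup_consumers NAME, enabled) for one startup option."""
    if not option.startswith(PREFIX):
        raise ValueError(f"not a consumer option: {option}")
    name, sep, value = option[len(PREFIX):].partition("=")
    if not sep:
        raise ValueError(f"missing value: {option}")
    if "%" in name:
        raise ValueError("patterns are not permitted in consumer names")
    name = name.replace("-", "_")  # dashes and underscores are equivalent
    value = value.upper()          # assumption: keywords are case-insensitive
    if value in TRUE_VALUES:
        return name, True
    if value in FALSE_VALUES:
        return name, False
    raise ValueError(f"unrecognized value: {value}")


print(parse_consumer_option("--performance-schema-consumer-events-waits-history=ON"))
# ('events_waits_history', True)
print(parse_consumer_option("--performance-schema-consumer-events_waits_history_long=0"))
# ('events_waits_history_long', False)
```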

The Performance Schema includes several system variables that provide configuration information:

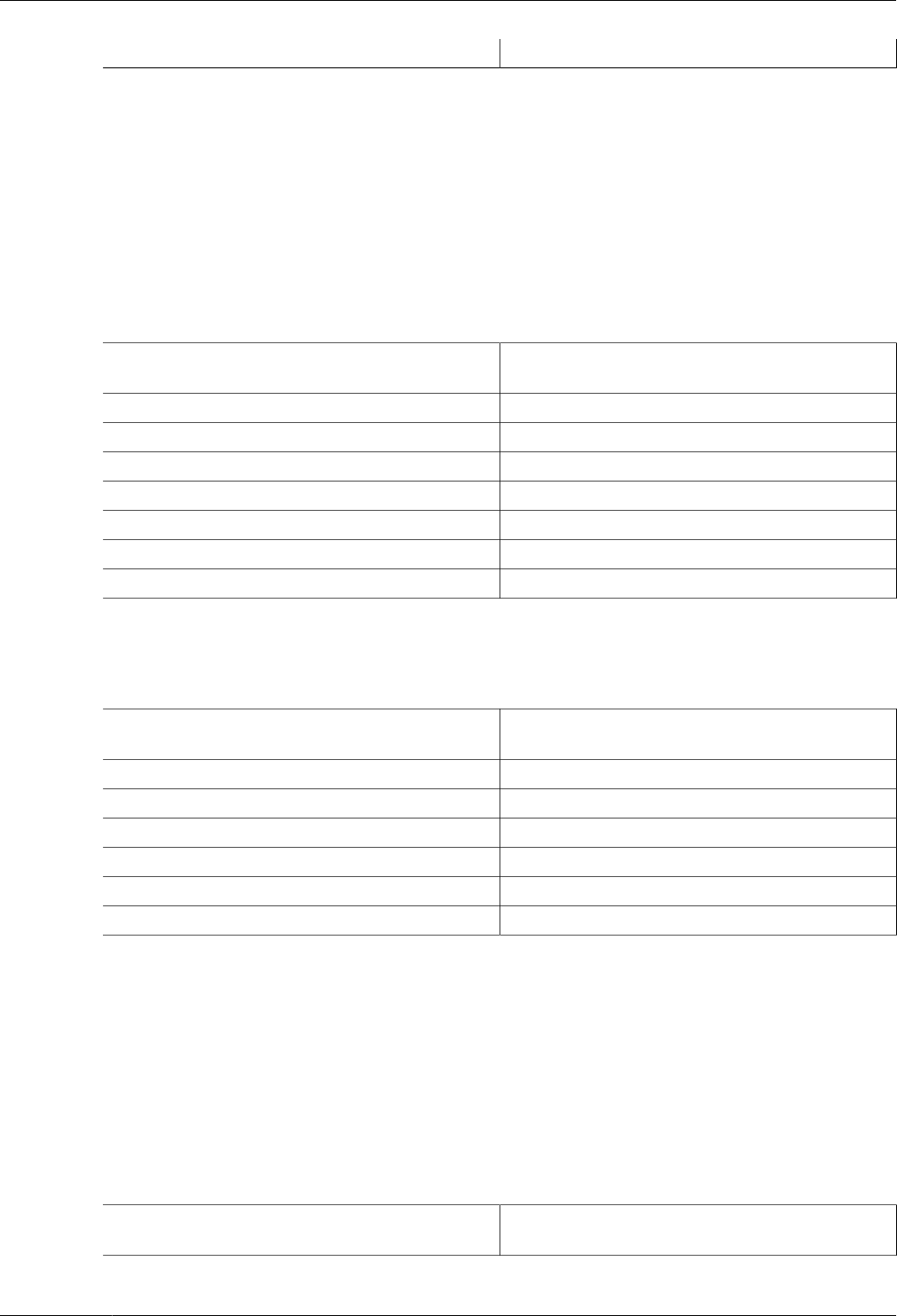

mysql> SHOW VARIABLES LIKE 'perf%';
+--------------------------------------------------------+---------+
| Variable_name                                          | Value   |
+--------------------------------------------------------+---------+
| performance_schema                                     | ON      |
| performance_schema_accounts_size                       | 100     |
| performance_schema_digests_size                        | 200     |
| performance_schema_events_stages_history_long_size     | 10000   |
| performance_schema_events_stages_history_size          | 10      |
| performance_schema_events_statements_history_long_size | 10000   |
| performance_schema_events_statements_history_size      | 10      |
| performance_schema_events_waits_history_long_size      | 10000   |
| performance_schema_events_waits_history_size           | 10      |
| performance_schema_hosts_size                          | 100     |
| performance_schema_max_cond_classes                    | 80      |
| performance_schema_max_cond_instances                  | 1000    |
...

The performance_schema variable is ON or OFF to indicate whether the Performance Schema is enabled or disabled. The other variables indicate table sizes (number of rows) or memory allocation values.

Note

With the Performance Schema enabled, the number of Performance Schema instances affects the server memory footprint, perhaps to a large extent. The Performance Schema autoscales many parameters to use memory only as required; see The Performance Schema Memory-Allocation Model.

To change the value of Performance Schema system variables, set them at server startup. For example, put the following lines in a my.cnf file to change the sizes of the history tables for wait events:

[mysqld]
performance_schema
performance_schema_events_waits_history_size=20
performance_schema_events_waits_history_long_size=15000

The Performance Schema automatically sizes the values of several of its parameters at server startup if they are not set explicitly. For example, it sizes the parameters that control the sizes of the wait event tables this way. In addition, the Performance Schema allocates memory incrementally, scaling its memory use to actual server load, instead of allocating all the memory it needs during server startup. Consequently, many sizing parameters need not be set at all. To see which parameters are autosized or autoscaled, use mysqld --verbose --help and examine the option descriptions, or see Chapter 12, Performance Schema System Variables.

For each autosized parameter that is not set at server startup, the Performance Schema determines how to set its value based on the values of the following system variables, which are treated as “hints” about how you have configured your MySQL server:

max_connections
open_files_limit
table_definition_cache
table_open_cache

To override autosizing or autoscaling for a given parameter, set it to a value other than −1 at startup. In this case, the Performance Schema assigns it the specified value.


Chapter 5 Performance Schema Runtime Configuration

Table of Contents

5.1 Performance Schema Event Timing
5.2 Performance Schema Event Filtering
5.3 Event Pre-Filtering
5.4 Pre-Filtering by Instrument
5.5 Pre-Filtering by Object
5.6 Pre-Filtering by Thread
5.7 Pre-Filtering by Consumer
5.8 Example Consumer Configurations
5.9 Naming Instruments or Consumers for Filtering Operations
5.10 Determining What Is Instrumented

Specific Performance Schema features can be enabled at runtime to control which types of event collection occur.

Performance Schema setup tables contain information about monitoring configuration:

mysql> SELECT TABLE_NAME FROM INFORMATION_SCHEMA.TABLES
       WHERE TABLE_SCHEMA = 'performance_schema'
       AND TABLE_NAME LIKE 'setup%';
+-------------------+
| TABLE_NAME        |
+-------------------+
| setup_actors      |
| setup_consumers   |
| setup_instruments |
| setup_objects     |
| setup_timers      |
+-------------------+

You can examine the contents of these tables to obtain information about Performance Schema monitoring characteristics. If you have the UPDATE privilege, you can change Performance Schema operation by modifying setup tables to affect how monitoring occurs. For additional details about these tables, see Section 10.2, “Performance Schema Setup Tables”.

To see which event timers are selected, query the setup_timers table:

mysql> SELECT * FROM performance_schema.setup_timers;
+-------------+-------------+
| NAME        | TIMER_NAME  |
+-------------+-------------+
| idle        | MICROSECOND |
| wait        | CYCLE       |
| stage       | NANOSECOND  |
| statement   | NANOSECOND  |
| transaction | NANOSECOND  |
+-------------+-------------+

The NAME value indicates the type of instrument to which the timer applies, and TIMER_NAME indicates which timer applies to those instruments. The timer applies to instruments whose names begin with an element matching the NAME value.
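
The prefix rule can be sketched in Python: take the first element of the instrument name (the text before the first /) and look it up in setup_timers. The mapping is copied from the output above; timer_for is a hypothetical helper, and returning None for a class with no setup_timers row is a choice of this sketch, not documented behavior.

```python
from typing import Optional

# setup_timers contents copied from the query output above.
SETUP_TIMERS = {
    "idle": "MICROSECOND",
    "wait": "CYCLE",
    "stage": "NANOSECOND",
    "statement": "NANOSECOND",
    "transaction": "NANOSECOND",
}


def timer_for(instrument: str) -> Optional[str]:
    """Look up the timer by the first element of the instrument name."""
    first_element = instrument.split("/", 1)[0]
    return SETUP_TIMERS.get(first_element)


print(timer_for("wait/synch/mutex/sql/LOCK_open"))  # CYCLE
print(timer_for("stage/sql/init"))                  # NANOSECOND
print(timer_for("idle"))                            # MICROSECOND
```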

To change the timer, update the TIMER_NAME value. For example, to use the NANOSECOND timer for wait instruments:

mysql> UPDATE performance_schema.setup_timers
       SET TIMER_NAME = 'NANOSECOND'
       WHERE NAME = 'wait';
mysql> SELECT * FROM performance_schema.setup_timers;
+-------------+-------------+
| NAME        | TIMER_NAME  |
+-------------+-------------+
| idle        | MICROSECOND |
| wait        | NANOSECOND  |
| stage       | NANOSECOND  |
| statement   | NANOSECOND  |
| transaction | NANOSECOND  |
+-------------+-------------+

For discussion of timers, see Section 5.1, “Performance Schema Event Timing”.

The setup_instruments and setup_consumers tables list the instruments for which events can be collected and the types of consumers for which event information actually is collected, respectively. Other setup tables enable further modification of the monitoring configuration. Section 5.2, “Performance Schema Event Filtering”, discusses how you can modify these tables to affect event collection.

If there are Performance Schema configuration changes that must be made at runtime using SQL statements and you would like these changes to take effect each time the server starts, put the statements in a file and start the server with the init_file system variable set to name the file. This strategy can also be useful if you have multiple monitoring configurations, each tailored to produce a different kind of monitoring, such as casual server health monitoring, incident investigation, application behavior troubleshooting, and so forth. Put the statements for each monitoring configuration into their own file and specify the appropriate file as the init_file value when you start the server.

5.1 Performance Schema Event Timing

Events are collected by means of instrumentation added to the server source code. Instruments time events, which is how the Performance Schema provides an idea of how long events take. It is also possible to configure instruments not to collect timing information. This section discusses the available timers and their characteristics, and how timing values are represented in events.

Performance Schema Timers

Two Performance Schema tables provide timer information:

• performance_timers lists the available timers and their characteristics.

• setup_timers indicates which timers are used for which instruments.

Each timer row in setup_timers must refer to one of the timers listed in performance_timers.

Timers vary in precision and amount of overhead. To see what timers are available and their characteristics, check the performance_timers table:

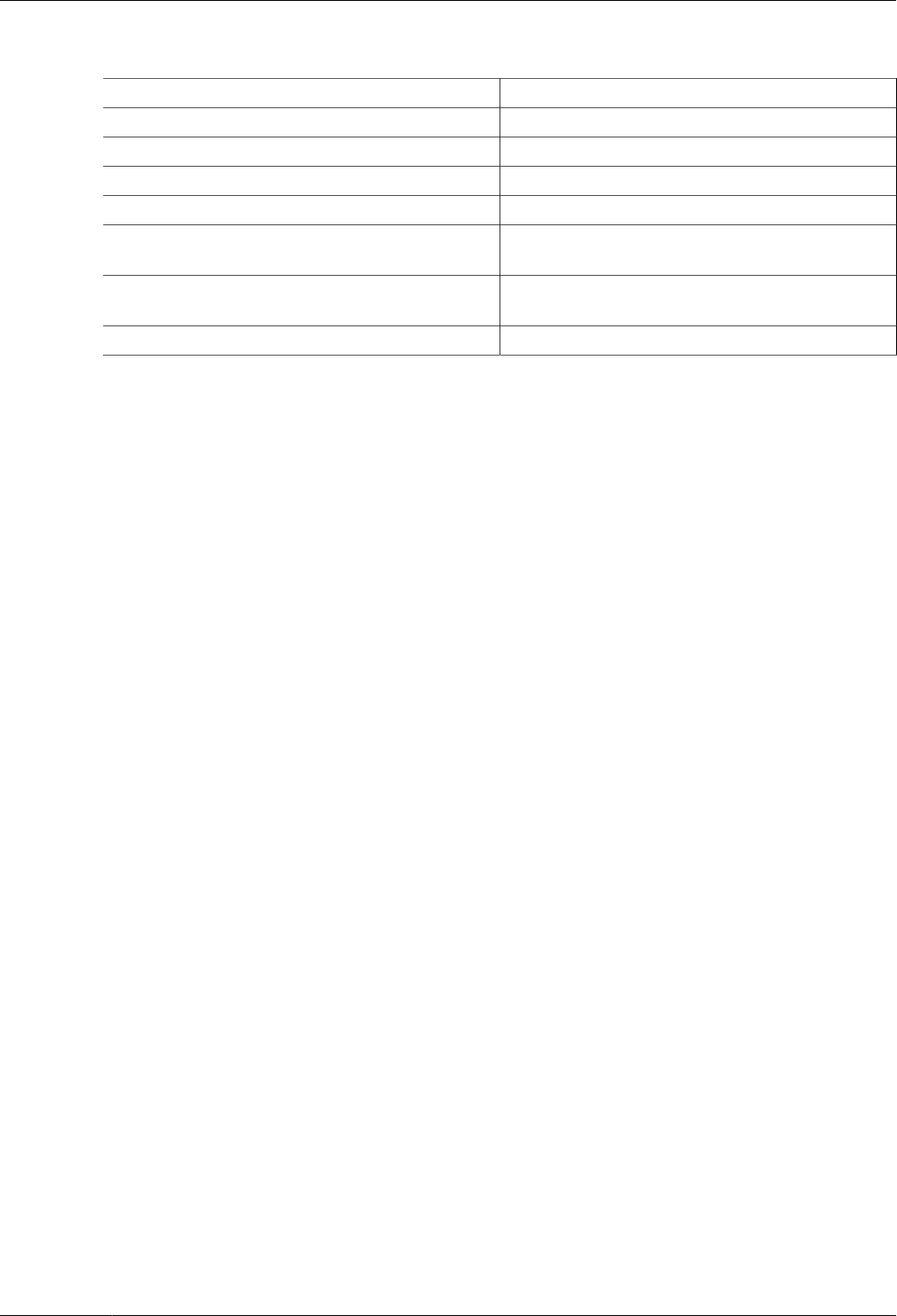

mysql> SELECT * FROM performance_schema.performance_timers;
+-------------+-----------------+------------------+----------------+
| TIMER_NAME  | TIMER_FREQUENCY | TIMER_RESOLUTION | TIMER_OVERHEAD |
+-------------+-----------------+------------------+----------------+
| CYCLE       |      2389029850 |                1 |             72 |
| NANOSECOND  |      1000000000 |                1 |            112 |
| MICROSECOND |         1000000 |                1 |            136 |
| MILLISECOND |            1036 |                1 |            168 |
| TICK        |             105 |                1 |           2416 |
+-------------+-----------------+------------------+----------------+

If the values associated with a given timer name are NULL, that timer is not supported on your platform. The rows that do not contain NULL indicate which timers you can use in setup_timers.

The columns have these meanings:

• The TIMER_NAME column shows the names of the available timers. CYCLE refers to the timer that is based on the CPU (processor) cycle counter.

• TIMER_FREQUENCY indicates the number of timer units per second. For a cycle timer, the frequency is generally related to the CPU speed. The value shown was obtained on a system with a 2.4GHz processor. The other timers are based on fixed fractions of seconds. For TICK, the frequency may vary by platform (for example, some use 100 ticks/second, others 1000 ticks/second).

• TIMER_RESOLUTION indicates the number of timer units by which timer values increase at a time. If a timer has a resolution of 10, its value increases by 10 each time.

• TIMER_OVERHEAD is the minimal number of cycles of overhead to obtain one timing with the given timer. The overhead per event is twice the value displayed because the timer is invoked at the beginning and end of the event.
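
To put the TIMER_OVERHEAD figures in perspective, this Python sketch doubles them to get the per-event cost and estimates what fraction of one core the timer reads alone would consume at a hypothetical rate of one million timed events per second, taking the sample CYCLE frequency as the core's clock rate. Because TIMER_OVERHEAD is a minimum, the results are lower bounds.

```python
# TIMER_OVERHEAD values (cycles per timer call) from the sample output above.
TIMER_OVERHEAD = {
    "CYCLE": 72,
    "NANOSECOND": 112,
    "MICROSECOND": 136,
    "MILLISECOND": 168,
    "TICK": 2416,
}
CPU_HZ = 2_389_029_850  # sample CYCLE frequency, used as the core's clock rate


def overhead_per_event(timer: str) -> int:
    """Minimal cycles per timed event: the timer is read at start and at end."""
    return 2 * TIMER_OVERHEAD[timer]


def core_share(timer: str, events_per_second: int, cpu_hz: int = CPU_HZ) -> float:
    """Fraction of one core spent only on reading the timer."""
    return overhead_per_event(timer) * events_per_second / cpu_hz


print(overhead_per_event("CYCLE"))               # 144
print(overhead_per_event("TICK"))                # 4832
print(round(core_share("CYCLE", 1_000_000), 3))  # 0.06
print(round(core_share("TICK", 1_000_000), 2))   # 2.02
```

Under these assumptions, CYCLE costs about 6% of one core at that rate, while TICK would need more than two cores just to read the timer.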

To see which timers are in effect or to change timers, access the setup_timers table:

mysql> SELECT * FROM performance_schema.setup_timers;
+-------------+-------------+
| NAME        | TIMER_NAME  |
+-------------+-------------+
| idle        | MICROSECOND |
| wait        | CYCLE       |
| stage       | NANOSECOND  |
| statement   | NANOSECOND  |
| transaction | NANOSECOND  |
+-------------+-------------+

mysql> UPDATE performance_schema.setup_timers
       SET TIMER_NAME = 'MICROSECOND'
       WHERE NAME = 'idle';
mysql> SELECT * FROM performance_schema.setup_timers;
+-------------+-------------+
| NAME        | TIMER_NAME  |
+-------------+-------------+
| idle        | MICROSECOND |
| wait        | CYCLE       |
| stage       | NANOSECOND  |
| statement   | NANOSECOND  |
| transaction | NANOSECOND  |
+-------------+-------------+

By default, the Performance Schema uses the best timer available for each instrument type, but you can select a different one.

To time wait events, the most important criterion is to reduce overhead, at the possible expense of the timer accuracy, so the CYCLE timer is the best choice.

The time a statement (or stage) takes to execute is generally orders of magnitude larger than the time it takes to execute a single wait. To time statements, the most important criterion is to have an accurate measure that is not affected by changes in processor frequency, so a timer that is not based on cycles is the best choice. The default timer for statements is NANOSECOND. The extra “overhead” compared to the CYCLE timer is not significant, because the overhead caused by calling a timer twice (once when the statement starts, once when it ends) is orders of magnitude smaller than the CPU time used to execute the statement itself. Using the CYCLE timer has no benefit here, only drawbacks.

The precision offered by the cycle counter depends on processor speed. If the processor runs at 1 GHz (one billion cycles/second) or higher, the cycle counter delivers sub-nanosecond precision. Using the cycle counter is much cheaper than getting the actual time of day. For example, the standard gettimeofday() function can take hundreds of cycles, which is an unacceptable overhead for data gathering that may occur thousands or millions of times per second.
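
The link between clock rate and precision is simply the cycle period, 10^12 / frequency picoseconds per cycle. A minimal Python sketch, using 1 GHz, the sample CYCLE frequency from performance_timers, and an assumed 4 GHz processor:

```python
def cycle_period_ps(cpu_hz: float) -> float:
    """Length of one CPU cycle in picoseconds (10**12 / frequency)."""
    return 1e12 / cpu_hz


print(cycle_period_ps(1e9))            # 1000.0, one nanosecond per cycle at 1 GHz
print(cycle_period_ps(2_389_029_850))  # about 418.6 on the sample 2.4GHz machine
print(cycle_period_ps(4e9))            # 250.0
```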

Cycle counters also have disadvantages:

• End users expect to see timings in wall-clock units, such as fractions of a second. Converting from cycles to fractions of seconds can be expensive. For this reason, the conversion is a quick and fairly rough multiplication operation.


• Processor cycle rate might change, such as when a laptop goes into power-saving mode or when a CPU slows down to reduce heat generation. If a processor's cycle rate fluctuates, conversion from cycles to real-time units is subject to error.

• Cycle counters might be unreliable or unavailable depending on the processor or the operating system. For example, on Pentiums, the instruction is RDTSC (an assembly-language rather than a C instruction) and it is theoretically possible for the operating system to prevent user-mode programs from using it.

• Some processor details related to out-of-order execution or multiprocessor synchronization might cause the counter to seem fast or slow by up to 1000 cycles.

MySQL works with cycle counters on x86 (Windows, macOS, Linux, Solaris, and other Unix flavors), PowerPC, and IA-64.

Performance Schema Timer Representation in Events

Rows in Performance Schema tables that store current events and historical events have three columns to represent timing information: TIMER_START and TIMER_END indicate when an event started and finished, and TIMER_WAIT indicates event duration.

The setup_instruments table has an ENABLED column to indicate the instruments for which to collect events. The table also has a TIMED column to indicate which instruments are timed. If an instrument is not enabled, it produces no events. If an enabled instrument is not timed, events produced by the instrument have NULL for the TIMER_START, TIMER_END, and TIMER_WAIT timer values. This in turn causes those values to be ignored when calculating aggregate time values in summary tables (sum, minimum, maximum, and average).
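
The ENABLED/TIMED rules and their effect on aggregate time values can be modeled in a few lines of Python. This is a hypothetical sketch rather than server code: produce and time_aggregates are illustrative names, the timer values are made up, and returning None when no timed values exist is a simplification of this model.

```python
from typing import List, Optional


def produce(enabled: bool, timed: bool, start_ps: int, end_ps: int) -> Optional[dict]:
    """Model what one instrumented operation contributes to an events table."""
    if not enabled:
        return None  # a disabled instrument produces no event at all
    if not timed:
        # enabled but untimed: the event exists, its timer columns are NULL
        return {"TIMER_START": None, "TIMER_END": None, "TIMER_WAIT": None}
    return {"TIMER_START": start_ps, "TIMER_END": end_ps, "TIMER_WAIT": end_ps - start_ps}


def time_aggregates(events: List[dict]) -> dict:
    """Sum/min/max/avg of TIMER_WAIT, skipping NULL (None) values."""
    waits = [e["TIMER_WAIT"] for e in events if e["TIMER_WAIT"] is not None]
    if not waits:
        return {"SUM": None, "MIN": None, "MAX": None, "AVG": None}  # model choice
    return {"SUM": sum(waits), "MIN": min(waits), "MAX": max(waits),
            "AVG": sum(waits) / len(waits)}


produced = [
    produce(True, True, 100, 1_300),     # timed: TIMER_WAIT = 1200
    produce(True, False, 2_000, 2_900),  # untimed: timer columns are None
    produce(True, True, 3_000, 4_800),   # timed: TIMER_WAIT = 1800
    produce(False, True, 5_000, 5_100),  # disabled: nothing produced
]
events = [e for e in produced if e is not None]
print(len(events))              # 3
print(time_aggregates(events))  # {'SUM': 3000, 'MIN': 1200, 'MAX': 1800, 'AVG': 1500.0}
```

The untimed event still appears as a row, but it does not pull the average down, because its NULL TIMER_WAIT is skipped.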

Internally, times within events are stored in units given by the timer in effect when event timing begins. For display when events are retrieved from Performance Schema tables, times are shown in picoseconds (trillionths of a second) to normalize them to a standard unit, regardless of which timer is selected.

Modifications to the setup_timers table affect monitoring immediately. Events already in progress may use the original timer for the begin time and the new timer for the end time. To avoid unpredictable results after you make timer changes, use TRUNCATE TABLE to reset Performance Schema statistics.

The timer baseline (“time zero”) occurs at Performance Schema initialization during server startup. TIMER_START and TIMER_END values in events represent picoseconds since the baseline. TIMER_WAIT values are durations in picoseconds.

Picosecond values in events are approximate. Their accuracy is subject to the usual forms of error associated with conversion from one unit to another. If the CYCLE timer is used and the processor rate varies, there might be drift. For these reasons, it is not reasonable to look at the TIMER_START value for an event as an accurate measure of time elapsed since server startup. On the other hand, it is reasonable to use TIMER_START or TIMER_WAIT values in ORDER BY clauses to order events by start time or duration.

The choice of picoseconds in events rather than a value such as microseconds has a performance basis. One implementation goal was to show results in a uniform time unit, regardless of the timer. In an ideal world this time unit would look like a wall-clock unit and be reasonably precise; in other words, microseconds. But to convert cycles or nanoseconds to microseconds, it would be necessary to perform a division for every instrumented event. Division is expensive on many platforms. Multiplication is not expensive, so that is what is used. Therefore, the time unit is an integer multiple of the highest possible TIMER_FREQUENCY value, using a multiplier large enough to ensure that there is no major precision loss. The result is that the time unit is “picoseconds.” This precision is spurious, but the decision enables overhead to be minimized.
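
A minimal Python sketch of the divide-once, multiply-per-event idea: a conversion factor for each timer is computed once, and every raw reading is then normalized to picoseconds with a single multiplication. The frequencies are the sample values from performance_timers; the float factor and the rounding are simplifications of this sketch, and the server's exact integer arithmetic may differ.

```python
PICOS_PER_SECOND = 10**12

# Sample TIMER_FREQUENCY values (units per second) from performance_timers.
TIMER_FREQUENCY = {
    "CYCLE": 2_389_029_850,
    "NANOSECOND": 1_000_000_000,
    "MICROSECOND": 1_000_000,
}

# The divisions happen once, when the factors are set up.
FACTOR = {name: PICOS_PER_SECOND / freq for name, freq in TIMER_FREQUENCY.items()}


def to_picoseconds(raw_units: int, timer: str) -> int:
    """Normalize a raw timer reading with a single multiplication."""
    return round(raw_units * FACTOR[timer])


print(to_picoseconds(86, "NANOSECOND"))  # 86000
print(to_picoseconds(3, "MICROSECOND"))  # 3000000
print(to_picoseconds(207, "CYCLE"))      # about 86,646 picoseconds
```

If the processor's real cycle rate drifts away from the frequency used to build the CYCLE factor, every conversion inherits that error, which is the drift described above.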

While a wait, stage, statement, or transaction event is executing, the respective current-event tables display current-event timing information:


events_waits_current
events_stages_current
events_statements_current
events_transactions_current

To make it possible to determine how long a not-yet-completed event has been running, the timer columns are set as follows:

• TIMER_START is populated.

• TIMER_END is populated with the current timer value.

• TIMER_WAIT is populated with the time elapsed so far (TIMER_END − TIMER_START).

Events that have not yet completed have an END_EVENT_ID value of NULL. To assess time elapsed so far for an event, use the TIMER_WAIT column. Therefore, to identify events that have not yet completed and have taken longer than N picoseconds thus far, monitoring applications can use this expression in queries:

WHERE END_EVENT_ID IS NULL AND TIMER_WAIT > N

Event identification as just described assumes that the corresponding instruments have ENABLED and TIMED set to YES and that the relevant consumers are enabled.
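
The same predicate, applied in Python to a few hypothetical rows, highlights the step that is easy to get wrong: expressing the threshold N in picoseconds (5 seconds is 5 × 10^12 ps). The rows and thread IDs are invented for the illustration.

```python
# Hypothetical events_statements_current rows; TIMER_WAIT is in picoseconds.
rows = [
    {"THREAD_ID": 40, "END_EVENT_ID": None, "TIMER_WAIT": 7_500_000_000_000},  # 7.5 s so far
    {"THREAD_ID": 41, "END_EVENT_ID": None, "TIMER_WAIT": 20_000_000_000},     # 0.02 s so far
    {"THREAD_ID": 42, "END_EVENT_ID": 311, "TIMER_WAIT": 9_000_000_000_000},   # completed
]

N = 5 * 10**12  # 5 seconds expressed in picoseconds


def long_running(rows, threshold_ps):
    """Python equivalent of: WHERE END_EVENT_ID IS NULL AND TIMER_WAIT > N"""
    return [r for r in rows
            if r["END_EVENT_ID"] is None and r["TIMER_WAIT"] > threshold_ps]


print([r["THREAD_ID"] for r in long_running(rows, N)])  # [40]
```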

5.2 Performance Schema Event Filtering

Events are processed in a producer/consumer fashion:

• Instrumented code is the source for events and produces events to be collected. The setup_instruments table lists the instruments for which events can be collected, whether they are enabled, and (for enabled instruments) whether to collect timing information:

mysql> SELECT * FROM performance_schema.setup_instruments;
+---------------------------------------------------+---------+-------+
| NAME                                              | ENABLED | TIMED |
+---------------------------------------------------+---------+-------+
...
| wait/synch/mutex/sql/LOCK_global_read_lock        | YES     | YES   |
| wait/synch/mutex/sql/LOCK_global_system_variables | YES     | YES   |
| wait/synch/mutex/sql/LOCK_lock_db                 | YES     | YES   |
| wait/synch/mutex/sql/LOCK_manager                 | YES     | YES   |
...

The setup_instruments table provides the most basic form of control over event production. To further refine event production based on the type of object or thread being monitored, other tables may be used as described in Section 5.3, “Event Pre-Filtering”.

• Performance Schema tables are the destinations for events and consume events. The setup_consumers table lists the types of consumers to which event information can be sent and whether they are enabled:

mysql> SELECT * FROM performance_schema.setup_consumers;
+----------------------------------+---------+
| NAME                             | ENABLED |
+----------------------------------+---------+
| events_stages_current            | NO      |
| events_stages_history            | NO      |
| events_stages_history_long       | NO      |
| events_statements_current        | YES     |
| events_statements_history        | YES     |
| events_statements_history_long   | NO      |
| events_transactions_current      | NO      |
| events_transactions_history      | NO      |
| events_transactions_history_long | NO      |
| events_waits_current             | NO      |
| events_waits_history             | NO      |
| events_waits_history_long        | NO      |
| global_instrumentation           | YES     |
| thread_instrumentation           | YES     |
| statements_digest                | YES     |
+----------------------------------+---------+
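Ordinary WHERE clauses on the setup tables show the producer and consumer sides of this arrangement at a glance. The following is a minimal sketch. It assumes a server with the Performance Schema enabled and an account that can read performance_schema tables. The mutex name pattern is only an example.

```sql
-- Consumer side: destinations that currently receive events
SELECT NAME
FROM performance_schema.setup_consumers
WHERE ENABLED = 'YES';

-- Producer side: enabled mutex instruments in the sql component,
-- and whether timing is collected for each one
SELECT NAME, TIMED
FROM performance_schema.setup_instruments
WHERE ENABLED = 'YES'
  AND NAME LIKE 'wait/synch/mutex/sql/%';
```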

Filtering can be done at different stages of performance monitoring:

• Pre-filtering. This is done by modifying Performance Schema configuration so that only certain types of events are collected from producers, and collected events update only certain consumers. To do this, enable or disable instruments or consumers. Pre-filtering is done by the Performance Schema and has a global effect that applies to all users.

Reasons to use pre-filtering:

• To reduce overhead. Performance Schema overhead should be minimal even with all instruments enabled, but perhaps you want to reduce it further. Or, if you do not care about event timings, you can disable the timing code to eliminate timing overhead.

• To avoid filling the current-events or history tables with events in which you have no interest. Pre-filtering leaves more “room” in these tables for rows from enabled instrument types. If you enable only file instruments with pre-filtering, no rows are collected for nonfile instruments. With post-filtering, nonfile events are collected, leaving fewer rows for file events.

• To avoid maintaining some kinds of event tables. If you disable a consumer, the server does not spend time maintaining destinations for that consumer. For example, if you do not care about event histories, you can disable the history table consumers to improve performance.

• Post-filtering. This involves the use of WHERE clauses in queries that select information from Performance Schema tables, to specify which of the available events you want to see. Post-filtering is performed on a per-user basis because individual users select which of the available events are of interest.

Reasons to use post-filtering:

• To avoid making decisions for individual users about which event information is of interest.

• To use the Performance Schema to investigate a performance issue when the restrictions to impose through pre-filtering are not known in advance.
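The difference between the two approaches can be sketched as follows. This assumes an account with the UPDATE privilege on performance_schema tables. The first statement is pre-filtering: it changes what the server maintains for everyone. The second is post-filtering: it only narrows what one query returns. The consumer and the statement instrument chosen here are illustrative.

```sql
-- Pre-filtering (global): stop maintaining the long wait history table
UPDATE performance_schema.setup_consumers
SET ENABLED = 'NO'
WHERE NAME = 'events_waits_history_long';

-- Post-filtering (per query): from the statements already collected,
-- show only the ten slowest SELECT statements in the recent history
SELECT THREAD_ID, EVENT_NAME, SQL_TEXT, TIMER_WAIT
FROM performance_schema.events_statements_history
WHERE EVENT_NAME = 'statement/sql/select'
ORDER BY TIMER_WAIT DESC
LIMIT 10;
```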

The following sections provide more detail about pre-filtering and provide guidelines for naming instruments or consumers in filtering operations. For information about writing queries to retrieve information (post-filtering), see Chapter 6, Performance Schema Queries.

5.3 Event Pre-Filtering

Pre-filtering is done by the Performance Schema and has a global effect that applies to all users. Pre-filtering can be applied to either the producer or consumer stage of event processing:

• To configure pre-filtering at the producer stage, several tables can be used:

• setup_instruments indicates which instruments are available. An instrument disabled in this table produces no events regardless of the contents of the other production-related setup tables. An instrument enabled in this table is permitted to produce events, subject to the contents of the other tables.

• setup_objects controls whether the Performance Schema monitors particular table and stored program objects.

• threads indicates whether monitoring is enabled for each server thread.

• setup_actors determines the initial monitoring state for new foreground threads.


• To configure pre-filtering at the consumer stage, modify the setup_consumers table. This determines the destinations to which events are sent. setup_consumers also implicitly affects event production: if a given event is not sent to any destination (that is, it is not consumed), the Performance Schema does not produce it.
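As an illustrative sketch, the statements below apply pre-filtering at each stage. The first two act on producers and the last acts on consumers. They assume the UPDATE privilege on the setup tables and on the threads table. The instrument pattern, thread type, and consumer are examples, not recommendations.

```sql
-- Producer stage: prevent a whole class of instruments from producing events
UPDATE performance_schema.setup_instruments
SET ENABLED = 'NO'
WHERE NAME LIKE 'wait/synch/%';

-- Producer stage: stop monitoring background (non-client) server threads
UPDATE performance_schema.threads
SET INSTRUMENTED = 'NO'
WHERE TYPE = 'BACKGROUND';

-- Consumer stage: send wait events to the current-events table
UPDATE performance_schema.setup_consumers
SET ENABLED = 'YES'
WHERE NAME = 'events_waits_current';
```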

Modifications to any of these tables affect monitoring immediately, with some exceptions:

• Modifications to some instruments in the setup_instruments table are effective only at server startup; changing them at runtime has no effect. This affects primarily mutexes, conditions, and rwlocks in the server, although there may be other instruments for which this is true.

• Modifications to the setup_actors table affect only foreground threads created subsequent to the modification, not existing threads.
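Changes to setup_actors do not reach threads that already exist. To change monitoring for a session that is already running, one approach is to update its row in the threads table directly. This is a minimal sketch. It assumes the account may update performance_schema.threads and that the session of interest is the current one, matched through CONNECTION_ID().

```sql
-- Stop monitoring the current (already existing) session
UPDATE performance_schema.threads
SET INSTRUMENTED = 'NO'
WHERE PROCESSLIST_ID = CONNECTION_ID();

-- Confirm the new state for this session
SELECT THREAD_ID, PROCESSLIST_USER, INSTRUMENTED
FROM performance_schema.threads
WHERE PROCESSLIST_ID = CONNECTION_ID();
```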

When you change the monitoring configuration, the Performance Schema does not flush the history tables. Events already collected remain in the current-events and history tables until displaced by newer events. If you disable instruments, you might need to wait a while before events for them are displaced by newer events of interest. Alternatively, use TRUNCATE TABLE to empty the history tables.
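For example, the wait history tables can be truncated to clear previously collected wait events after a configuration change. This sketch assumes an account permitted to truncate performance_schema tables. The other history tables (statements, stages, transactions) can be emptied the same way.

```sql
-- Discard wait events collected under the old configuration
TRUNCATE TABLE performance_schema.events_waits_history;
TRUNCATE TABLE performance_schema.events_waits_history_long;
```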

After making instrumentation changes, you might want to truncate the summary tables. Generally, the effect is to reset the summary columns to 0 or NULL, not to remove rows. This enables you to clear collected values and restart aggregation. That might be useful, for example, after you have made a runtime configuration change. Exceptions to this truncation behavior are noted in individual summary table sections.
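A reset-and-measure cycle might look like the following sketch. It uses the events_waits_summary_global_by_event_name summary table, which aggregates wait events per instrument. It assumes an account allowed to truncate that table. The ordering and LIMIT are arbitrary choices for readability.

```sql
-- Reset aggregated wait statistics (rows remain; counters return to 0)
TRUNCATE TABLE performance_schema.events_waits_summary_global_by_event_name;

-- ... run the workload of interest here ...

-- Read back the instruments that accumulated the most wait time
SELECT EVENT_NAME, COUNT_STAR, SUM_TIMER_WAIT
FROM performance_schema.events_waits_summary_global_by_event_name
WHERE COUNT_STAR > 0
ORDER BY SUM_TIMER_WAIT DESC
LIMIT 10;
```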

The following sections describe how to use specific tables to control Performance Schema pre-filtering.

5.4 Pre-Filtering by Instrument

The setup_instruments table lists the available instruments:

mysql> SELECT * FROM performance_schema.setup_instruments;
+---------------------------------------------------+---------+-------+
| NAME                                              | ENABLED | TIMED |
+---------------------------------------------------+---------+-------+
...
| stage/sql/end                                     | NO      | NO    |
| stage/sql/executing                               | NO      | NO    |
| stage/sql/init                                    | NO      | NO    |
| stage/sql/insert                                  | NO      | NO    |
...
| statement/sql/load                                | YES     | YES   |
| statement/sql/grant                               | YES     | YES   |
| statement/sql/check                               | YES     | YES   |
| statement/sql/flush                               | YES     | YES   |
...
| wait/synch/mutex/sql/LOCK_global_read_lock        | YES     | YES   |
| wait/synch/mutex/sql/LOCK_global_system_variables | YES     | YES   |
| wait/synch/mutex/sql/LOCK_lock_db                 | YES     | YES   |
| wait/synch/mutex/sql/LOCK_manager                 | YES     | YES   |
...
| wait/synch/rwlock/sql/LOCK_grant                  | YES     | YES   |
| wait/synch/rwlock/sql/LOGGER::LOCK_logger         | YES     | YES   |
| wait/synch/rwlock/sql/LOCK_sys_init_connect       | YES     | YES   |
| wait/synch/rwlock/sql/LOCK_sys_init_slave         | YES     | YES   |
...
| wait/io/file/sql/binlog                           | YES     | YES   |
| wait/io/file/sql/binlog_index                     | YES     | YES   |
| wait/io/file/sql/casetest                         | YES     | YES   |
| wait/io/file/sql/dbopt                            | YES     | YES   |
...

To control whether an instrument is enabled, set its ENABLED column to YES or NO. To configure whether to collect timing information for an enabled instrument, set its TIMED value to YES or NO. Setting the TIMED column affects Performance Schema table contents as described in Section 5.1, “Performance Schema Event Timing”.
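Both columns can be set in one statement, or TIMED can be changed on its own. This is a minimal sketch, assuming the UPDATE privilege on setup_instruments. The stage/sql/ pattern and the binlog file instrument are examples taken from the listing above.

```sql
-- Enable the SQL stage instruments and collect timing for them
UPDATE performance_schema.setup_instruments
SET ENABLED = 'YES', TIMED = 'YES'
WHERE NAME LIKE 'stage/sql/%';

-- Keep a file instrument enabled but stop timing it
UPDATE performance_schema.setup_instruments
SET TIMED = 'NO'
WHERE NAME = 'wait/io/file/sql/binlog';
```

Stage events are still written to a table only if a stage consumer, such as events_stages_current, is also enabled in setup_consumers.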

Modifications to most setup_instruments rows affect monitoring immediately. For some instruments, modifications are effective only at server startup; changing them at runtime has no effect. This affects primarily mutexes, conditions, and rwlocks in the server, although there may be other instruments for which this is true.

The setup_instruments table provides the most basic form of control over event production. To further refine event production based on the type of object or thread being monitored, other tables may be used as described in Section 5.3, “Event Pre-Filtering”.

The following examples demonstrate possible operations on the setup_instruments table. These changes, like other pre-filtering operations, affect all users. Some of these queries use the LIKE operator and a pattern to match instrument names. For additional information about specifying patterns to select instruments, see Section 5.9, “Naming Instruments or Consumers for Filtering Operations”.

• Disable all instruments:

UPDATE performance_schema.setup_instruments
SET ENABLED = 'NO';

Now no events are collected.

• Disable all file instruments, adding them to the current set of disabled instruments:

UPDATE performance_schema.setup_instruments
SET ENABLED = 'NO'
WHERE NAME LIKE 'wait/io/file/%';

• Disable only file instruments, enable all other instruments:

UPDATE performance_schema.setup_instruments
SET ENABLED = IF(NAME LIKE 'wait/io/file/%', 'NO', 'YES');

• Enable all but those instruments in the mysys library:

UPDATE performance_schema.setup_instruments
SET ENABLED = CASE WHEN NAME LIKE '%/mysys/%' THEN 'NO' ELSE 'YES' END;

• Disable a specific instrument:

UPDATE performance_schema.setup_instruments
SET ENABLED = 'NO'
WHERE NAME = 'wait/synch/mutex/mysys/TMPDIR_mutex';

• To toggle the state of an instrument, “flip” its ENABLED value:

UPDATE performance_schema.setup_instruments
SET ENABLED = IF(ENABLED = 'YES', 'NO', 'YES')
WHERE NAME = 'wait/synch/mutex/mysys/TMPDIR_mutex';

• Disable timing for all events:

UPDATE performance_schema.setup_instruments
SET TIMED = 'NO';
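After applying changes like these, it can help to confirm the resulting configuration. This is a minimal sketch that uses only the setup_instruments columns shown above. For the startup-only instruments described earlier, the configuration a query like this reports may not match what is actually being collected at runtime.

```sql
-- Summarize how many instruments are in each ENABLED/TIMED state
SELECT ENABLED, TIMED, COUNT(*) AS instruments
FROM performance_schema.setup_instruments
GROUP BY ENABLED, TIMED;

-- Check the state of one specific instrument
SELECT NAME, ENABLED, TIMED
FROM performance_schema.setup_instruments
WHERE NAME = 'wait/synch/mutex/mysys/TMPDIR_mutex';
```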

5.5 Pre-Filtering by Object

The setup_objects table controls whether the Performance Schema monitors particular table and stored program objects. The initial setup_objects contents look like this:

mysql> SELECT * FROM performance_schema.setup_objects;
+-------------+--------------------+-------------+---------+-------+
| OBJECT_TYPE | OBJECT_SCHEMA      | OBJECT_NAME | ENABLED | TIMED |
+-------------+--------------------+-------------+---------+-------+
| EVENT       | mysql              | %           | NO      | NO    |
| EVENT       | performance_schema | %           | NO      | NO    |
| EVENT       | information_schema | %           | NO      | NO    |
| EVENT       | %                  | %           | YES     | YES   |
| FUNCTION    | mysql              | %           | NO      | NO    |
| FUNCTION    | performance_schema | %           | NO      | NO    |
| FUNCTION    | information_schema | %           | NO      | NO    |
| FUNCTION    | %                  | %           | YES     | YES   |
| PROCEDURE   | mysql              | %           | NO      | NO    |
| PROCEDURE   | performance_schema | %           | NO      | NO    |
| PROCEDURE   | information_schema | %           | NO      | NO    |
| PROCEDURE   | %                  | %           | YES     | YES   |
| TABLE       | mysql              | %           | NO      | NO    |
| TABLE       | performance_schema | %           | NO      | NO    |
| TABLE       | information_schema | %           | NO      | NO    |
| TABLE       | %                  | %           | YES     | YES   |