Earned Value Management System (EVMS)

Program Analysis Pamphlet (PAP)

DCMA-EA PAM 200.1

October 2012

THE DCMA ENGINEERING AND ANALYSIS EXECUTIVE DIRECTOR IS RESPONSIBLE FOR ISSUANCE AND MAINTENANCE OF THIS DOCUMENT

DCMA

EVMS PROGRAM ANALYSIS PAMPHLET OCT 2012

DEPARTMENT OF DEFENSE

Defense Contract Management Agency

PAMPHLET

Earned Value Management System (EVMS) Program Analysis Pamphlet (PAP)

Engineering and Analysis DCMA-EA PAM 200.1

OPR: DCMA-EAVP October 29, 2012

1. PURPOSE. This EVMS Program Analysis Pamphlet (EVMSPAP) will serve as a primary reference for EVMS Specialists to properly generate EVM metrics, graphs, tables, and presentations supporting the creation of the Program Assessment Report (PAR). It outlines EVM key components spanning the integrated business management systems and the Integrated Master Schedule (IMS).

2. APPLICABILITY. This Pamphlet applies to all DCMA activities.

3. RELEASABILITY – UNLIMITED. This Pamphlet is approved for public release.

4. EFFECTIVE DATE. This Pamphlet is effective immediately.

Karron E. Small

Executive Director

Engineering and Analysis Directorate

Digitally signed by SMALL.KARRON.E.1229625957

DN: c=US, o=U.S. Government, ou=DoD, ou=PKI, ou=DLA, cn=SMALL.KARRON.E.1229625957

Date: 2012.11.06 15:20:53 -05'00'


TABLE OF CONTENTS

FOREWORD

1.0 INTRODUCTION

2.0 PROGRAM ASSESSMENT REPORT (PAR) EVM REQUIREMENTS

2.1 EXECUTIVE SUMMARY

2.2 INDEPENDENT ESTIMATE AT COMPLETION (IEAC)

2.3 SUMMARY TABLE

2.4 CONTRACT VARIANCE AND PERFORMANCE CHARTS

2.5 VARIANCE ANALYSIS

2.6 SCHEDULE ANALYSIS

2.7 BASELINE REVISIONS

2.8 EVM SYSTEM STATUS

2.9 EV ASSESSMENT POINT OF CONTACT (POC)

3.0 INTEGRATED DATA ANALYSIS PERFORMANCE INDICATORS

3.1 PERFORMANCE DATA ANALYSIS

3.1.1 COST

3.1.1.1 COST PERFORMANCE INDEX (CPI)

3.1.1.2 COST PERFORMANCE INDEX TREND

3.1.1.3 THE RATIO: “PERCENT COMPLETE” TO “PERCENT SPENT”

3.1.2 SCHEDULE

3.1.2.1 SCHEDULE PERFORMANCE INDEX (SPI)

3.1.2.2 SCHEDULE PERFORMANCE INDEX (SPI) TREND

3.1.2.3 CRITICAL PATH LENGTH INDEX (CPLI)

3.1.2.4 BASELINE EXECUTION INDEX (BEI)

3.1.3 ESTIMATE AT COMPLETION (EAC)

3.1.3.1 “COST PERFORMANCE INDEX” – “TO COMPLETE PERFORMANCE INDEX”

3.1.3.2 THE RATIO: “BUDGET AT COMPLETION” TO “ESTIMATE AT COMPLETION”

3.1.3.3 THE RATIO: “% COMPLETE” TO “% MANAGEMENT RESERVE USED”

3.2 DATA INTEGRITY ASSESSMENT

3.2.1 SYSTEM COMPLIANCE

3.2.1.1 SYSTEM COMPLIANCE STATUS RATING

3.2.2 BASELINE QUALITY

3.2.2.1 BASELINE INDICATOR

3.2.2.2 BASELINE REVISIONS INDEX

3.2.2.3 CONTRACT MODIFICATIONS

4.0 14 POINT SCHEDULE METRICS FOR IMS (PROJECT/OPEN PLAN, ETC.) ANALYSIS

4.1 LOGIC

4.2 LEADS

4.3 LAGS

4.4 RELATIONSHIP TYPES

4.5 HARD CONSTRAINTS

4.6 HIGH FLOAT

4.7 NEGATIVE FLOAT

4.8 HIGH DURATION

4.9 INVALID DATES

4.10 RESOURCES

4.11 MISSED TASKS

4.12 CRITICAL PATH TEST

4.13 CRITICAL PATH LENGTH INDEX (CPLI)

4.14 BASELINE EXECUTION INDEX (BEI)

5.0 DATA INTEGRITY INDICATORS

5.1 BCWS_cum > BAC

5.2 BCWP_cum > BAC

5.3 ACWP_cum WITH NO BAC

5.4 ACWP_cur WITH NO BAC

5.5 NEGATIVE BAC

5.6 ZERO BUDGET WPs

5.7 LEVEL OF EFFORT WITH SCHEDULE VARIANCE

5.8 BCWP WITH NO ACWP

5.9 COMPLETED WORK WITH ETC

5.10 INCOMPLETE WORK WITHOUT ETC

5.11 ACWP ON COMPLETED WORK

5.12 CPI-TCPI > 0.10

5.13 CPI-TCPI < -0.10

5.14 ACWP_cum > EAC

5.15 NEGATIVE BCWS_cum OR NEGATIVE BCWS_cur

5.16 NEGATIVE BCWP_cum OR NEGATIVE BCWP_cur

5.17 EVM COST TOOL TO IMS WORK PACKAGE COMPARISON

6.0 ADDITIONAL RESOURCES

7.0 APPENDIX: ACRONYM GLOSSARY


FOREWORD

The Defense Contract Management Agency (DCMA) performs three types of Earned Value

Management System (EVMS) functions: Compliance Reviews, System Surveillance, and

Program Analysis. The Agency has developed a suite of instructions to define and standardize

these functions. In addition, the EVMS Specialist Certification Program (ESCP) standardizes

the training and experience requirements for DCMA EVMS Specialists. The Major Program

Support (MPS) instruction (http://guidebook.dcma.mil/56/index.cfm) provides a framework for

Contract Management Office (CMO) multifunctional Program Support Teams (PST) and requires a Program Assessment Report (PAR). This EVMS Program Analysis Pamphlet (EVMSPAP) shall be used as a reference to generate EVM metrics, graphs, tables, and presentations.


1.0 INTRODUCTION

The intent of the EVMSPAP is to outline the key components of EVM analysis. An EVMS is an

integrated business management system consisting of the following five areas: Organization;

Planning, Scheduling & Budgeting; Accounting; Analysis & Management Reports; and

Revisions & Data Maintenance. Scheduling is a critical EVMS function. Therefore, this

Pamphlet also contains information regarding Integrated Master Schedule (IMS) analysis.

The purpose of the EVMSPAP is to provide a standard for conducting analysis and to ensure all

EVMS Specialists have the information required to approach EVM and the IMS analysis

consistently. An integral part of successful program management is reliable and accurate

information. Program managers and their teams perform best when they are well informed. The

goal of EVM program analysis is to provide consistent and timely insight into program status in order to enable timely, effective management decision making.

On June 19, 2012, the Integrated Program Management Report (IPMR) was released and

effectively combined and updated the Contract Performance Report (CPR) and the Integrated

Master Schedule (IMS) Data Item Descriptions (DID). The IPMR contains data for measuring

cost and schedule performance on Department of Defense (DOD) acquisition contracts. It is

structured around seven formats that contain the content and relationships required for electronic

submissions. The IPMR is effective for all new applicable contracts after July 1, 2012, but the CPR and the IMS DIDs remain in effect for preexisting contracts. Users of this document should recognize this transition to the IPMR when considering text references to the CPR or IMS and when applying the practices, procedures, and methods in support of EVM functions. The IPMR updated the DID requirements; Table 1 compares the CPR, IMS, and IPMR DID formats.

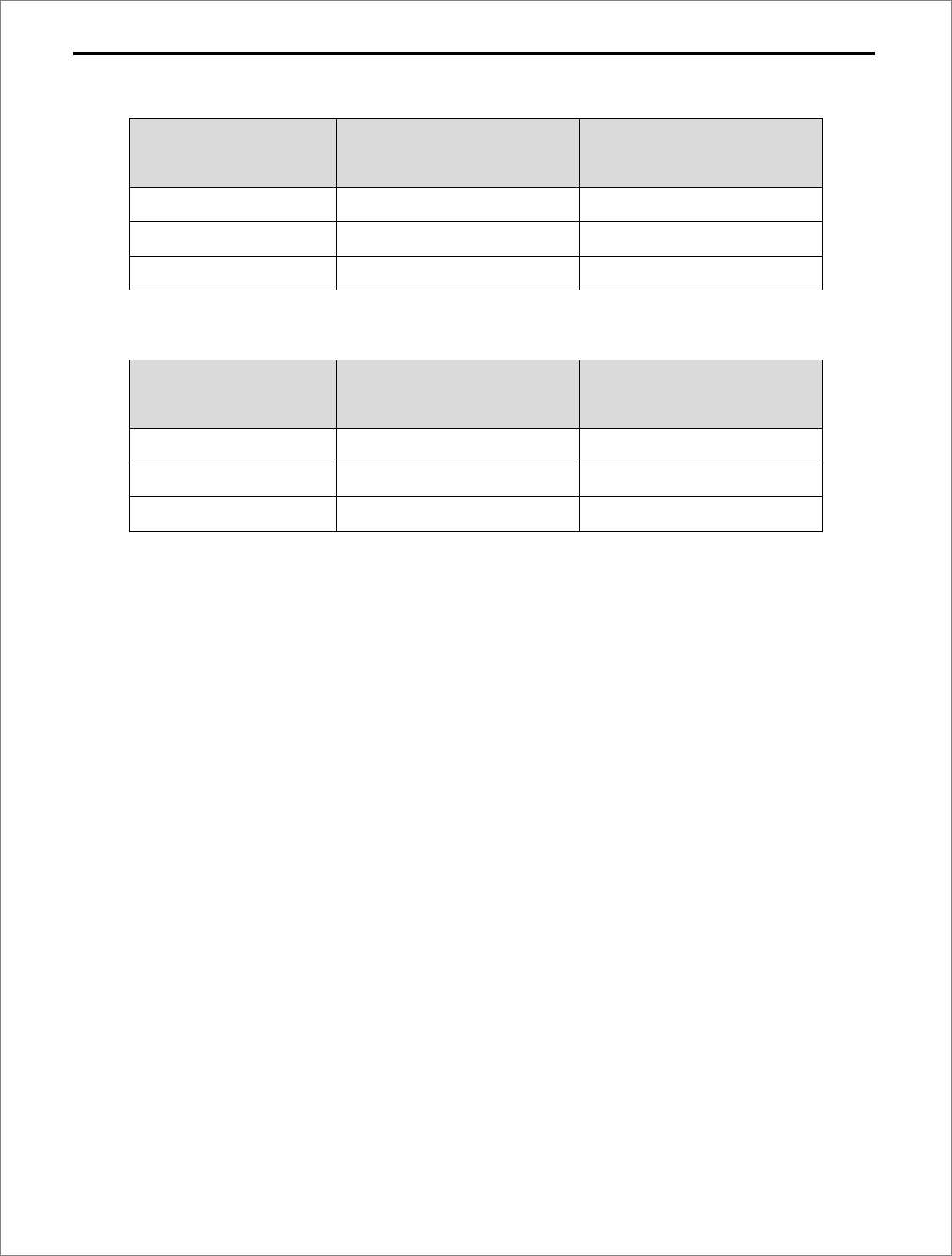

Table 1. Correlating the CPR DID and the IMS DID with the IPMR DID

| CPR DID & IMS DID | IPMR DID |
| --- | --- |
| CPR Format 1 | IPMR Format 1 |
| CPR Format 2 | IPMR Format 2 |
| CPR Format 3 | IPMR Format 3 |
| CPR Format 4 | IPMR Format 4 |
| CPR Format 5 | IPMR Format 5 |
| IMS | IPMR Format 6 |
| N/A | IPMR Format 7 |


2.0 PROGRAM ASSESSMENT REPORT (PAR) EVM REQUIREMENTS

The PAR is a product for DCMA customers containing functional input from all members of a

program support team (PST). One of those PST functions is EVMS. EVMS information is

contained in Section 4 and Annex A: Earned Value Report Template. The information requested

in Section 4 can be entirely derived from the Earned Value (EV) Report Template. If a program

has more than one contract, an EV Report will be generated for each contract and summarized

into a single Section 4 summary.

The following sections contain guidance for EVMS Specialists in the production of EV Reports

intended for Annex A of the PAR.

2.1 EXECUTIVE SUMMARY

The executive summary is intended to provide a brief synopsis for managers who may not have time to read the entire report. Avoid excessive detail. Instead, focus on broad trends or major issues that require DCMA or other government PM attention. Major programmatic issues related to cost, schedule, or Estimate at Completion (EAC) growth should be included. For example, an Over Target Baseline (OTB) or Nunn-McCurdy breach would be a significant issue to be addressed in the executive summary. A summary of metrics such as the Cost Performance Index (CPI) and the Schedule Performance Index (SPI), together with the top cost and schedule drivers, provides a useful assessment of overall contractor performance. Lastly, provide the latest EVM System Status, as this indicates the reliability of contractor reports generated by their EVMS.

2.2 INDEPENDENT ESTIMATE AT COMPLETION (IEAC)

An IEAC is DCMA’s forecast of the final total cost of the program. The EAC is an important

number used by program stakeholders. A program office relies on the EAC for securing

sufficient funding for the program. The DCMA IEAC represents an independent second opinion

of the final program costs. This provides the program office with important information to aid in

funding decisions by quantifying risk associated with both cost and schedule and evaluating

potential impacts if the current course of action is not changed.


The process of determining the IEAC begins with determining the upper and lower statistical or

mathematical bounds with the following equations:

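$$\text{EAC}_{\text{cpi}} = \text{ACWP}_{\text{cum}} + \frac{\text{BAC} - \text{BCWP}_{\text{cum}}}{\text{CPI}_{\text{cum}}}$$

$$\text{EAC}_{\text{composite}} = \text{ACWP}_{\text{cum}} + \frac{\text{BAC} - \text{BCWP}_{\text{cum}}}{\text{CPI}_{\text{cum}} \times \text{SPI}_{\text{cum}}}$$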

Typically the $\text{EAC}_{\text{cpi}}$ formula provides a lower bound, or the most optimistic IEAC. The $\text{EAC}_{\text{composite}}$ formula provides an upper bound, or the most pessimistic IEAC. This assumption is based on $\text{CPI}_{\text{cum}}$ and $\text{SPI}_{\text{cum}}$ being less than 1. If both of these metrics are greater than 1 then the reverse will be true, meaning $\text{EAC}_{\text{cpi}}$ will become the most pessimistic IEAC. These formulas are most accurate when the program is between 15% complete and 95% complete. Outside of this range the formulas may not provide accurate bounds.
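The two bounding formulas can be sketched in a few lines of Python. This is an illustrative sketch only, not a DCMA tool: the function name `ieac_bounds` and the sample dollar values are hypothetical. It also assumes the standard EVM definitions SPI_cum = BCWP_cum / BCWS_cum and percent complete = BCWP_cum / BAC, neither of which is defined in this section.

```python
def ieac_bounds(acwp_cum, bcwp_cum, bcws_cum, bac):
    """Return the statistical IEAC bounds and their inputs as a dict.

    Assumed (standard EVM, not defined in this section):
    SPI_cum = BCWP_cum / BCWS_cum and percent complete = BCWP_cum / BAC.
    """
    if min(acwp_cum, bcwp_cum, bcws_cum, bac) <= 0:
        raise ValueError("ACWP, BCWP, BCWS, and BAC must all be positive")

    cpi_cum = bcwp_cum / acwp_cum
    spi_cum = bcwp_cum / bcws_cum
    remaining_work = bac - bcwp_cum

    eac_cpi = acwp_cum + remaining_work / cpi_cum
    eac_composite = acwp_cum + remaining_work / (cpi_cum * spi_cum)

    percent_complete = bcwp_cum / bac * 100
    return {
        "eac_cpi": eac_cpi,
        "eac_composite": eac_composite,
        # Which formula is the optimistic one depends on whether the indices
        # are below 1, so take min/max instead of assuming an order.
        "lower": min(eac_cpi, eac_composite),
        "upper": max(eac_cpi, eac_composite),
        # The formulas are described as most accurate between 15% and 95% complete.
        "in_reliable_range": 15 <= percent_complete <= 95,
    }


# Hypothetical contract values, in thousands of dollars.
bounds = ieac_bounds(acwp_cum=500.0, bcwp_cum=400.0, bcws_cum=500.0, bac=1000.0)
print(bounds)
```

Taking the minimum and maximum of the two results keeps the bounds correctly ordered even when both indices exceed 1 and the roles of the two formulas reverse.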

The next step performs a detailed analysis of the contractor EAC by Work Breakdown Structure

(WBS) element at the lowest level available. This analysis involves determining the

reasonableness of the WBS level estimates with information gained from program surveillance

and other functional PST member input. This is the perfect place to make adjustments if the

contractor’s value does not appear reasonable. For a detailed discussion regarding analysis of a contractor’s EAC please refer to “Examining the Comprehensive Estimate-at-Completion” found in the references section of this Pamphlet.

A contractor’s most likely EAC is required to include some program risk factors. Review the

program risk registry and determine if the risks included by the contractor in their most likely

EAC are reasonable. These risks may present a consequence in terms of either cost or schedule.

Even schedule risks may end up presenting a cost risk in the end. However, it should be noted

that risks themselves might never result in a cost or schedule increase until the risk manifests

itself. Risk mitigation (abatement) on the other hand would need effort expended and result in

some cost and possible schedule impact. Any differences between the contractor’s estimate of

likely risks and DCMA’s assessment should be documented in terms of dollars.


Finally, roll up any adjustments made to individual WBS element EACs and any changes made

to risks to determine the value of the DCMA IEAC. Check the rolled-up value against the two

formula values discussed earlier in this section. The rolled-up value may fall outside of the

statistical formula bounds, but this should be considered a flag. If this occurs, double check your

adjustments and ensure they are properly documented.
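The roll-up and bounds check described above might look like the following sketch. The WBS numbers, dollar values, and function names are hypothetical, and the bounds are assumed to come from the two formulas discussed earlier in this section.

```python
def rolled_up_ieac(wbs_eacs, risk_adjustment=0.0):
    """Sum the adjusted WBS element EACs and add any net risk adjustment."""
    return sum(wbs_eacs.values()) + risk_adjustment


def outside_bounds(ieac, lower, upper):
    """Return True when the rolled-up IEAC falls outside the formula bounds."""
    return not (lower <= ieac <= upper)


# Hypothetical adjusted EACs by WBS element, in thousands of dollars.
adjusted_eacs = {"1.1": 700.0, "1.2": 600.0}
ieac = rolled_up_ieac(adjusted_eacs, risk_adjustment=50.0)

# Hypothetical bounds from the EAC_cpi and EAC_composite formulas.
if outside_bounds(ieac, lower=1250.0, upper=1437.5):
    print("Flag: re-check the adjustments and their documentation")
else:
    print("Rolled-up IEAC is within the formula bounds")
```

A value outside the bounds is not necessarily wrong; the check only marks where the adjustments deserve a second look.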

Some programs may have a Contract Funds Status Report (CFSR) requirement. CFSRs are

typically submitted to the program office on a quarterly basis. The CFSR provides a forecast of

the total program price at completion, to include any applicable fee. The contractor’s most likely

EAC reported on the monthly Contract Performance Report (CPR) should reconcile with the

forecast in the CFSR after taking into account the timing differences of the reports.

2.3 SUMMARY TABLE

This section of the PAR contains a summary table of metrics for the contract WBS (CWBS)

watch items identified in Section 2 of the PAR as well as other CWBS elements that require

management visibility. Appendix B of the PAR defines the assessment criteria for metrics in

terms of Green, Yellow, and Red. The following metrics are already defined in this Pamphlet

and can be found in the following sections:

2.2 INDEPENDENT ESTIMATE AT COMPLETION (IEAC)

3.1.1.1 COST PERFORMANCE INDEX (CPI)

3.1.1.3 THE RATIO: “PERCENT COMPLETE” TO “PERCENT SPENT”

3.1.2.1 SCHEDULE PERFORMANCE INDEX (SPI)

The remaining metrics are:

• Schedule Variance (SV)

• Schedule Variance (SV) Trend

• Cost Variance (CV)

• Cost Variance (CV) Trend

Schedule Variance (SV) is the difference between the dollars of budget earned {the Budgeted

Cost for Work Performed (BCWP)} and the dollars planned to be earned to date {the Budgeted

Cost for Work Scheduled (BCWS)}. BCWP and BCWS can be found on the CPR Format 1.

Due to the various techniques available for calculating the amount of budget earned (BCWP),


the SV metric should not be confused with a behind schedule or ahead of schedule condition. It

should be used as a general indicator of schedule performance and must be used in conjunction

with IMS analysis in accordance with this document as well as the DCMA Integrated Master

Schedule Assessment Guide to determine the true schedule status of the program. For example,

if the program incorporated extra time into the schedule, the current delays might be absorbed and the

program would finish on time and perhaps even ahead of schedule. However, if extra time is not

available, then any negative SV would almost certainly result in a slip. Similarly, a positive SV

doesn’t necessarily mean that the program will finish on time or ahead of schedule.

The formula for calculating SV is as follows:

Schedule Variance (SV) = Earned − Budgeted

or

SV = BCWP − BCWS

SV less than zero (0) indicates an unfavorable schedule variance and perhaps unfavorable

performance. Conversely, SV values greater than zero (0) indicate a favorable schedule

variance.

The SV Trend compares the metric for a specific reporting period (usually monthly) to the same metric in prior reporting periods. An SV trend is favorable if the SV increases in value over the course of multiple reporting periods (e.g., three months). Conversely, the SV trend is unfavorable if it decreases in value. Table 2 provides examples of a favorable trend while Table 3 provides examples of an unfavorable trend. Note that in Table 2 the metric for Project B shows a negative monthly status, although the trend is progressively less negative and therefore favorable. Table 3 shows the inverse for Project A: although the trend decreases unfavorably, the SV itself remains positive.


Table 2. Favorable SV Trends

| Reporting Period | Project A Schedule Variance (SV) | Project B Schedule Variance (SV) |
| --- | --- | --- |
| January | $6K | -$8K |
| February | $7K | -$7K |
| March | $8K | -$6K |

Table 3. Unfavorable SV Trends

| Reporting Period | Project A Schedule Variance (SV) | Project B Schedule Variance (SV) |
| --- | --- | --- |
| January | $8K | -$6K |
| February | $7K | -$7K |
| March | $6K | -$8K |
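The SV calculation and the trend rule can be sketched as follows, using the values from Tables 2 and 3 (in thousands of dollars). The function names and the "mixed" label for a series that neither rises nor falls consistently are assumptions made for illustration; the Pamphlet only defines the favorable and unfavorable cases.

```python
def schedule_variance(bcwp, bcws):
    """SV = BCWP - BCWS; a negative value is an unfavorable variance."""
    return bcwp - bcws


def variance_trend(values):
    """Classify a series of period variances, listed oldest first."""
    if len(values) < 2:
        raise ValueError("a trend needs at least two reporting periods")
    steps = [later - earlier for earlier, later in zip(values, values[1:])]
    if all(step > 0 for step in steps):
        return "favorable"
    if all(step < 0 for step in steps):
        return "unfavorable"
    return "mixed"  # assumed label; not defined in the Pamphlet


# January through March values from Table 2 and Table 3.
print(variance_trend([6, 7, 8]))     # Table 2, Project A
print(variance_trend([-8, -7, -6]))  # Table 2, Project B
print(variance_trend([8, 7, 6]))     # Table 3, Project A
print(variance_trend([-6, -7, -8]))  # Table 3, Project B
```

The trend depends only on the direction of change, which is why a series of negative SV values can still be classified as favorable.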

Cost Variance (CV) is the difference between the value of budget earned, or Budgeted Cost for Work Performed (BCWP), and the amount of costs incurred, or Actual Cost of Work Performed (ACWP). BCWP and ACWP can be found on the CPR Format 1. The formula for calculating CV is as follows:

Cost Variance (CV) = Earned − Actual

or

CV = BCWP − ACWP

A CV less than 0 indicates an unfavorable cost variance: more resources have been spent than budgeted to accomplish the work to date, and the project may currently be over budget. Conversely, a CV greater than 0 indicates a favorable cost variance: the work to date has cost less than budgeted, and the project is under budget. As with the SV discussed earlier, CV is a snapshot of current performance. The analyst must establish how these variances relate to and impact the whole program.

Similar to the SV Trend, the CV Trend is a comparison of the metric for a specific reporting

period (usually monthly) to the same metric in prior reporting periods. A CV trend is favorable


if the CV increases in value over the course of multiple reporting periods. Conversely, the CV

trend is unfavorable if it decreases in value. Examples are similar to those provided in the SV

Trend tables above.

2.4 CONTRACT VARIANCE AND PERFORMANCE CHARTS

This section of the PAR is a graphical view of various metrics depicting program performance

from program inception to date. These charts are presented as part of the Defense Acquisition Executive Summary (DAES) review, which is routinely conducted at the Office of the Secretary of Defense for programs determined to be high risk or high visibility.

The first chart, Figure 1 Contract Variance Chart, depicts the following:

• Cost Variance

• Schedule Variance

• Management Reserve Usage

• Contractor Variance at Completion

• Program Manager Variance at Completion

• 10% BCWP Thresholds

The Program Manager Variance at Completion (VAC) is calculated by subtracting the Program

Office EAC from the Budget at Completion (BAC) of the contract. The BAC can be found on

the CPR Format 1. VAC in general is calculated with the following formula:

Variance at Completion (VAC) = Budgeted − Estimated

or

VAC = BAC − EAC

The Variance at Completion identifies either a projected overrun (negative VAC) or a projected underrun (positive VAC). If a contract is projected to overrun, it means the total cost at completion will be greater than the budget.
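As a small illustration of the VAC formula and its sign convention, consider the sketch below. The function names, the "on budget" label for a zero VAC, and the sample values are hypothetical.

```python
def variance_at_completion(bac, eac):
    """VAC = BAC - EAC."""
    return bac - eac


def describe_vac(vac):
    """Translate the sign of VAC into the projected outcome."""
    if vac < 0:
        return "projected overrun"
    if vac > 0:
        return "projected underrun"
    return "on budget"  # assumed label for VAC == 0


# Hypothetical values in thousands of dollars: BAC from the CPR Format 1,
# EAC from the program office.
vac = variance_at_completion(bac=1000.0, eac=1250.0)
print(vac, describe_vac(vac))
```

The same function applies to the Contractor VAC by substituting the contractor's EAC for the program office EAC.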

The second chart, Figure 2 Contract Performance Chart, depicts the following:

• ACWP

• BCWS

• BCWP

• Total Allocated Budget (TAB)

• Contract Budget Base (CBB)

• Contractor EAC

• The program manager’s EAC over the period of performance of the contract.

Both Figure 1 and Figure 2 can be generated from wInsight or by using the Microsoft Excel file found in the Resource Page Tab to this Pamphlet (http://guidebook.dcma.mil/).

2.5 VARIANCE ANALYSIS

Variance Analysis is the identification and explanation of the top cost and schedule drivers and

typically involves cumulative information. Variance analysis employing current data may also

be useful in identifying emerging trends that may signal concern. The WBS elements that

significantly contribute to the program cost and schedule variance should be discussed in this

section of the PAR. The CPR Format 1 contains the cost and schedule variance by WBS

element. When available, a wInsight export file from the contractor’s EVMS may contain more detailed WBS level information. If using a CPR Format 1, ensure you have a Microsoft Excel electronic copy. If one is not available, populate a blank spreadsheet with the CPR information.

The first step in identifying top drivers is to calculate cost variance percent (CV%) and schedule

variance percent (SV%). The CV% metric quantifies the magnitude of the cost variance (CV) by

dividing CV by BCWP and multiplying by 100. The formula for calculating

CV% is as follows:

$$\text{CV\%} = \frac{\text{CV}}{\text{BCWP}} \times 100$$

A high CV% indicates significant variance magnitude. Both positive and negative CV, and

likewise CV%, are considered drivers.


SV% is similar in that the metric quantifies the magnitude of the schedule variance (SV). The

formula for calculating SV% is as follows:

$$\text{SV\%} = \frac{\text{SV}}{\text{BCWS}} \times 100$$

A high SV% indicates significant variance magnitude. Both positive and negative SV, and

likewise SV%, are considered drivers.

If using wInsight, the CV% and SV% fields are already calculated and available by inserting a new column and selecting these elements. If using a CPR Format 1, ensure you either have the MS Excel copy or have populated an MS Excel spreadsheet. Then use the formulas above to calculate the metric by WBS element.

Once the CV% and SV% metrics have been calculated, sort the WBS elements by CV% from

smallest to largest. If there are WBS elements with negative (unfavorable) CV% they will be

displayed at the top of the list. If there are WBS elements with positive (favorable) CV% they

will be displayed at the bottom of the list. Select the largest favorable and unfavorable cost

drivers and include them in the variance analysis section of the PAR. Likewise, sort the list by

SV% and select the largest favorable and unfavorable schedule drivers.
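For analysts working outside a spreadsheet, the calculate, sort, and select steps can be sketched in Python. The `WbsElement` class, the `top_drivers` helper, and the sample data are hypothetical, and the sketch assumes every element has nonzero BCWP and BCWS; elements with a zero denominator would need separate handling.

```python
from dataclasses import dataclass


@dataclass
class WbsElement:
    wbs: str
    bcws: float
    bcwp: float
    acwp: float

    @property
    def cv_pct(self):
        # CV% = (BCWP - ACWP) / BCWP * 100
        return (self.bcwp - self.acwp) / self.bcwp * 100

    @property
    def sv_pct(self):
        # SV% = (BCWP - BCWS) / BCWS * 100
        return (self.bcwp - self.bcws) / self.bcws * 100


def top_drivers(elements, metric, count=1):
    """Sort smallest to largest and return (unfavorable, favorable) drivers."""
    ranked = sorted(elements, key=lambda e: getattr(e, metric))
    unfavorable = [e for e in ranked if getattr(e, metric) < 0][:count]
    favorable = [e for e in reversed(ranked) if getattr(e, metric) > 0][:count]
    return unfavorable, favorable


# Hypothetical cumulative data by WBS element, in thousands of dollars.
elements = [
    WbsElement("1.1", bcws=100.0, bcwp=80.0, acwp=100.0),
    WbsElement("1.2", bcws=100.0, bcwp=110.0, acwp=100.0),
    WbsElement("1.3", bcws=200.0, bcwp=200.0, acwp=150.0),
]

for metric in ("cv_pct", "sv_pct"):
    worst, best = top_drivers(elements, metric)
    print(metric, [e.wbs for e in worst], [e.wbs for e in best])
```

Sorting in ascending order mirrors the spreadsheet procedure: unfavorable elements appear at the top of the list and favorable elements at the bottom.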

Once the top schedule drivers have been identified, identify any resulting impacts to the key

milestones in the IMS. In order to do this the WBS elements in the CPR must be correlated to

activities in the IMS. Typically, contractors include a WBS reference column in the IMS for this

purpose. Obtain a copy of the IMS for the same month as the CPR being analyzed. Microsoft

Project is a common software tool used for creating an IMS. Filter for the WBS elements

identified as top schedule drivers. A list of activities will be displayed with logic links. Follow

the successors of these tasks until you find the first major milestone in the logic chain. A few

examples of major milestones are the Preliminary Design Review (PDR) or the Critical Design

Review (CDR). This will be the key milestone impacted by the schedule variance.
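Following successors to the first major milestone amounts to a graph search over the IMS logic links. The sketch below uses a breadth-first search over a hypothetical dictionary of successor links. The task IDs, the data structure, and the reading of "first" as "fewest logic links away" are all assumptions; a real IMS would be exported from the scheduling tool.

```python
from collections import deque


def first_milestone(start_ids, successors, milestones):
    """Walk successor links breadth-first; return the first milestone reached."""
    queue = deque(start_ids)
    seen = set(start_ids)
    while queue:
        task = queue.popleft()
        if task in milestones:
            return task
        for nxt in successors.get(task, []):
            if nxt not in seen:  # guard against revisiting shared successors
                seen.add(nxt)
                queue.append(nxt)
    return None  # no milestone downstream of the driver tasks


# Hypothetical IMS logic: T1 -> T2 -> PDR -> T3 -> CDR.
successors = {"T1": ["T2"], "T2": ["PDR"], "PDR": ["T3"], "T3": ["CDR"]}
milestones = {"PDR", "CDR"}

print(first_milestone(["T1"], successors, milestones))
```

With these sample links the search stops at PDR because it is reached before CDR in the logic chain.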


2.6 SCHEDULE ANALYSIS

The area of schedule analysis is broad and diverse. For the purpose of the PAR, two metrics will

be calculated: Critical Path Length Index (CPLI) and Baseline Execution Index (BEI). See

section 3.1.2.3 of this Pamphlet for a detailed discussion regarding CPLI and section 3.1.2.4 for BEI.

2.7 BASELINE REVISIONS

The Baseline Revisions metric highlights changes made to the time-phased PMB (or budget) over the past 6-12 month period using the CPR Format 3. If the Format 3 has been tailored out, request a wInsight data file. A change of five percent or more is used as an early warning indicator that the program’s time-phasing and control of budget is volatile in the near term and that a significant departure from the original plan has occurred. This metric indicates how volatile the near-term baseline is over time. Substantial changes to the baseline time phasing may indicate the supplier has inadequate plans in place and the performance metrics may be unreliable. Change is inevitable, but the near-term plan should be firm and change control should be exercised.

The Baseline Revisions metric is measured using “end of period” data from the CPR Format 3.

The Format 3 of the CPR (end of period) projects the PMB for future periods, and it’s important

to ensure that only the End of Month (EOM) data is being used for this calculation. An example

of the calculation is provided in the Baseline Revisions Spreadsheet found in the Resource Page

Tab to this Pamphlet (http://guidebook.dcma.mil/) and is calculated using the following steps:

1. Format a spreadsheet with the report dates of the six CPRs being analyzed in Column A, Rows 2-7.

2. Format Row 1 of the spreadsheet with the forecast months, beginning with the date from Block 6, Column 4 of the Format 3 of the oldest of the six CPRs and ending with the date from Block 6, Column 9 of the Format 3 of the most recent of the six CPRs.

3. Input the values from “Six Month Forecast” in Block 6, Columns 4-9 of the oldest CPR Format 3 into Row 2, Columns B-G.

4. Continue inputting values from subsequent months’ CPRs, aligning the dates from Block 6, Columns 4-9 of CPR Format 3 to the dates in Row 1 of the spreadsheet.

5. For each of the past 6 periods identified in Row 1, identify the minimum and maximum values.

6. Calculate the Baseline Revision Percentage for each of the last 6 months using the following calculation:

$$\text{Baseline Revision (\%)} = \frac{\text{Maximum} - \text{Minimum}}{\text{Minimum}} \times 100$$

If this metric exceeds 5% there is high volatility in the near term plan, and it should be documented in the PAR as an issue. This metric can be generated/reported through wInsight.
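The spreadsheet steps reduce to a minimum/maximum comparison for each forecast month. The sketch below is illustrative only: the function names, month labels, and dollar values are hypothetical, and each list is assumed to hold the budget that successive CPR Format 3 submissions reported for the same forecast month.

```python
def baseline_revision_pct(forecasts):
    """(Maximum - Minimum) / Minimum * 100 for one forecast month."""
    lowest, highest = min(forecasts), max(forecasts)
    if lowest <= 0:
        raise ValueError("forecast values must be positive")
    return (highest - lowest) / lowest * 100


def volatile_months(table, threshold=5.0):
    """Return the months whose revision percentage exceeds the threshold."""
    flagged = {}
    for month, forecasts in table.items():
        pct = baseline_revision_pct(forecasts)
        if pct > threshold:
            flagged[month] = pct
    return flagged


# Hypothetical PMB forecasts (thousands of dollars) for two months, as
# reported in three successive CPR Format 3 submissions.
table = {
    "Jul": [100.0, 104.0, 100.0],
    "Aug": [100.0, 110.0, 120.0],
}
print(volatile_months(table))
```

In this sample, only the August forecast moves by more than 5% across submissions, so it alone would be documented in the PAR as an issue.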

2.8 EVM SYSTEM STATUS

The EVM System Status is important for stakeholders that depend on EVMS data. The System

Status indicates if the data generated by the contractor EVMS is reliable for management

decision making. The DCMA Standard Surveillance Instruction (SSI) used by CMOs and the Compliance Review Instruction (CRI) used by the Operations EVM Implementation Division outline what steps are necessary to ensure a contractor EVMS is in compliance with ANSI/EIA-748 Guidelines.

This section of the PAR requires the following information:

• The status of system acceptance by DCMA.

• EVM system surveillance results

• Status of open Corrective Action Requests (CAR) and ongoing Corrective Action

Plans (CAP)

The first bullet is a product of the CRI process and is represented by either a Letter of

Acceptance (LOA) or Advance Agreement (AA). The remaining bullets are products of the SSI

process. Take all of these items into consideration to determine the overall health of the

contractor’s EVMS.


2.9 EV ASSESSMENT POINT OF CONTACT (POC)

The official EV point of contact for each contractor site is maintained on a spreadsheet by the Engineering and Analysis EVM Division. Those POCs conduct system surveillance and produce the applicable documents per the SSI. If there is a separate POC for program analysis, list both names and contact information in this section of the PAR.

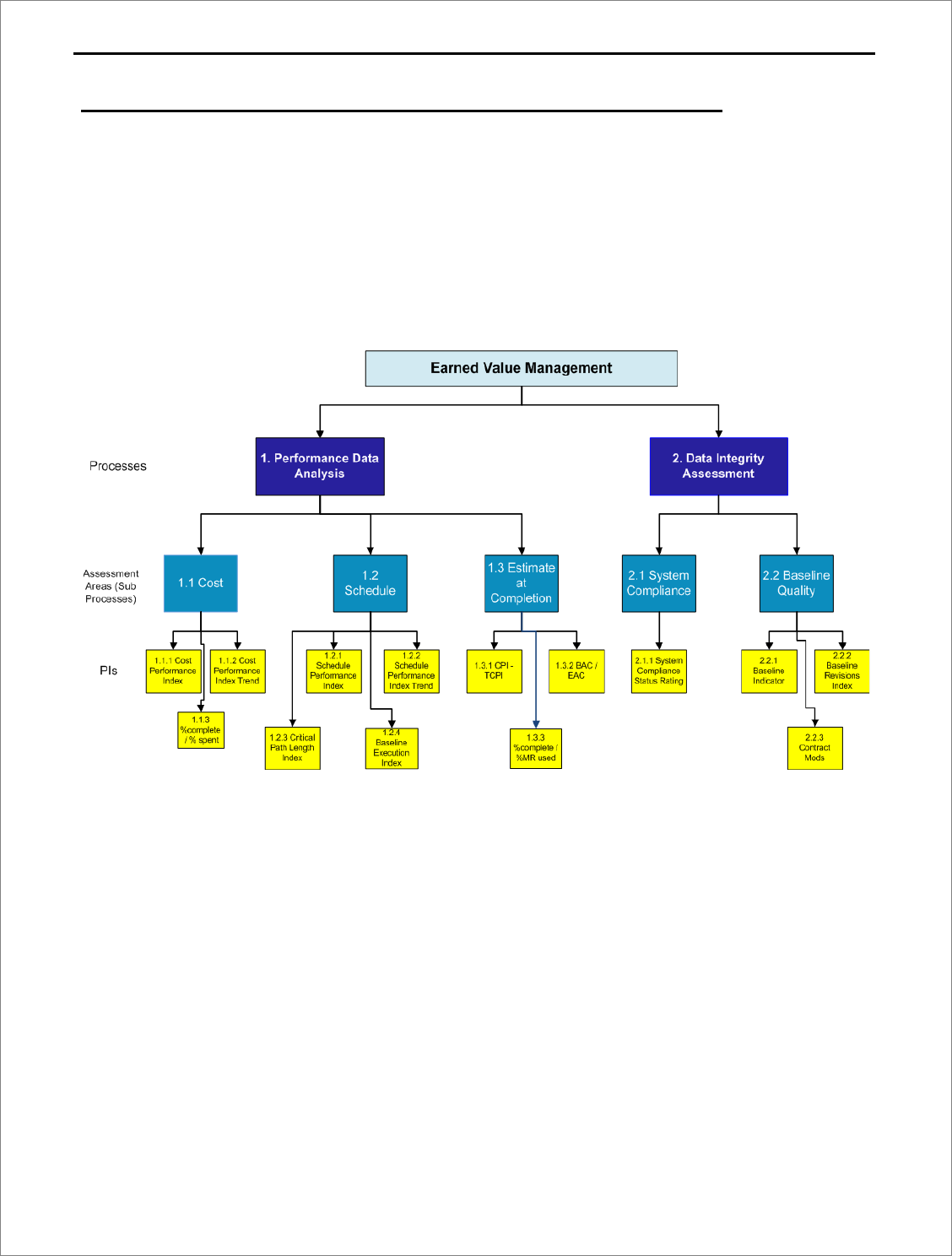

3.0 INTEGRATED DATA ANALYSIS PERFORMANCE INDICATORS

The integrated data analysis performance indicators (PIs) will be used by DCMA to quickly

determine program and overall supplier status with respect to EVMS and other functional areas.

The PIs will be located and maintained in Metrics Studio. Figure 1 below outlines the PIs

related to EVM. For ease of reference, the numbering scheme in section 3.0 of this Pamphlet

reflects the numbering scheme in Figure 1.

Figure 1: EVM Performance Indicators

3.1 PERFORMANCE DATA ANALYSIS

The performance indicators are grouped into two main categories: Performance Data Analysis

and Data Integrity Assessment. This section discusses Performance Data Analysis. As the

names suggest, these metrics relate to how well the program is performing with respect to cost,

schedule, and EAC management. These metrics reflect program execution with respect to the performance measurement baseline (PMB).

3.1.1 COST

The first subgroup of performance metrics is cost. The cost performance metrics selected for performance indicators are Cost Performance Index (CPI), CPI Trend, and the ratio of “% complete” to “% spent.”

3.1.1.1 COST PERFORMANCE INDEX (CPI)

The Cost Performance Index (CPI) is an efficiency factor representing the relationship between

the performance accomplished (BCWP) and the actual cost expended (ACWP).

The CPI for programs without an OTB is calculated as follows:

$$\text{Cost Performance Index}_{\text{cumulative}} = \frac{\text{Earned}}{\text{Actual}} \quad \text{or} \quad CPI_{cum} = \frac{BCWP_{cum}}{ACWP_{cum}}$$

An index of 1.00 or greater indicates that work is being accomplished at a cost equal to or below

what was planned. An index of less than 1.00 suggests work is accomplished at a cost greater

than planned. A cumulative index of less than 0.95 is used as an early warning indicator of cost

increase and should be investigated.

$BCWP_{cum}$ is found in block 8.g. column 8 of CPR Format 1 and $ACWP_{cum}$ in block 8.g. column 9. For programs with an OTB, a CPI calculated to be less than 0.95 also serves as a warning and should be investigated; this adjusted CPI is determined as follows:

$$CPI_{adj} = \frac{BCWP_{cum} - BCWP_{\text{prior to OTB}}}{ACWP_{cum} - ACWP_{\text{prior to OTB}}}$$

A single point adjustment (SPA) is a process that sets a contract’s existing cost and/or schedule

variances to zero and re-plans all the remaining work with the goal of completing the project on

schedule and on budget. Unlike an over-target baseline, the goal of an SPA is to develop a new

PMB that completes all the remaining work using only the remaining budget from the original

PMB. No additional (over-target) budget is added to the new PMB. With the SPA reducing the

variances to zero, the Cost Performance Index would equal 1.
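The cumulative and OTB-adjusted CPI calculations described in this section can be sketched as follows. The function names and the sample figures are hypothetical; only the formulas and the 0.95 threshold come from the text.

```python
CPI_WARNING_THRESHOLD = 0.95  # early warning level stated in this section


def cpi_cum(bcwp_cum, acwp_cum):
    """Cumulative CPI for a program without an OTB."""
    return bcwp_cum / acwp_cum


def cpi_adj(bcwp_cum, acwp_cum, bcwp_prior_to_otb, acwp_prior_to_otb):
    """Adjusted CPI for a program with an OTB: performance since the OTB only."""
    return (bcwp_cum - bcwp_prior_to_otb) / (acwp_cum - acwp_prior_to_otb)


def needs_investigation(cpi):
    """True when the index falls below the early warning threshold."""
    return cpi < CPI_WARNING_THRESHOLD


# Illustrative values only (same currency units throughout).
plain = cpi_cum(bcwp_cum=900.0, acwp_cum=1000.0)
adjusted = cpi_adj(bcwp_cum=900.0, acwp_cum=1000.0,
                   bcwp_prior_to_otb=500.0, acwp_prior_to_otb=600.0)
```

In this example the unadjusted index would be flagged, while the work performed since the OTB was accomplished at the planned cost.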

3.1.1.2 COST PERFORMANCE INDEX TREND

The CPI Trend is a comparison of the metric this reporting period (usually monthly) to the same

metric in prior reporting periods. A CPI trend is favorable if the CPI increases in value over the

course of multiple reporting periods. Conversely, the CPI trend is unfavorable if it decreases.

3.1.1.3 THE RATIO: “PERCENT COMPLETE” TO “PERCENT SPENT”

This metric is a ratio of two other metrics for the purpose of gauging the amount of budget spent in relation to the amount of work completed. The first part of this metric, the numerator, is Percent Complete (%comp). The formula to calculate %comp is as follows:

$$\text{Percent Complete (\%)} = \%comp = \frac{BCWP_{cum}}{BAC} \times 100$$

The value range of %comp is from 0% to 100%. It provides a measure of how far along the

program is toward project completion. The second part of the metric, the denominator, is

Percent Spent (%spent). The formula to calculate % spent is as follows:

$$\text{Percent Spent (\%)} = \%spent = \frac{ACWP_{cum}}{BAC} \times 100$$

The value range of %spent starts at 0% and since it tracks actual cost, theoretically has no limit.

It provides a measure of how far along the program is toward completion in terms of the budget

at completion. If %spent is over 100%, it indicates a cost over-run condition has been realized.

The combination of these two metrics results in the following formula:

$$\frac{\%comp}{\%spent} = \frac{BCWP_{cum} / BAC}{ACWP_{cum} / BAC} = \frac{BCWP_{cum}}{ACWP_{cum}} = \text{Cost Performance Index (CPI)}$$

When measured independently, %comp and %spent provide additional insight into program

performance. As shown above, the ratio of these two metrics results in the CPI.
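A short numeric check makes the identity concrete. The sketch below uses hypothetical function names and illustrative values; it computes %comp and %spent against the BAC and confirms that their ratio matches the CPI.

```python
def percent_complete(bcwp_cum, bac):
    """%comp: budgeted cost of work performed as a share of BAC."""
    return bcwp_cum / bac * 100


def percent_spent(acwp_cum, bac):
    """%spent: actual cost as a share of BAC (may exceed 100)."""
    return acwp_cum / bac * 100


# Illustrative values only.
bcwp, acwp, bac = 450.0, 500.0, 1000.0
pct_comp = percent_complete(bcwp, bac)
pct_spent = percent_spent(acwp, bac)
ratio = pct_comp / pct_spent   # BAC cancels out
cpi = bcwp / acwp              # same value as the ratio
```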

An alternative calculation for %spent sometimes employed in the industry compares the actual cost to the estimate of the total cost for the total contract (EAC). As noted in section 5.14, the EAC differs from the BAC in that it moves away from the earned value derived from the budgeted baseline and instead builds on the actual costs to date (ACWP) plus a forecasted cost to complete the program (ETC). As noted in the presentation of the Independent Estimate at Completion (IEAC) in Section 2.2, the estimate to complete is actually a range of values that attempts to predict the final program cost, and it may consequently be computed in accordance with a number of methodologies.

First, it could be based on an assumption that future work will be accomplished at the established budgeted rate. If actual performance to date is unfavorable, however, the inherent assumption that future performance will rise to the budgeted expectations may not be valid.

Secondly, in computing EAC, it could be assumed that the previous actual performance /

efficiency will continue into the future and the Cost Performance Index (CPI) may be a

reasonable modifier of the budgeted cost at completion to indicate the final program cost. Some

techniques for computing EAC consider both cost and schedule performance factors by

employing the SPI and the CPI collectively to modify the ETC which is then summed with the

actual cost. Variations on this technique weight SPI and CPI (75/25, 50/50, etc.) based on their

relative impact on project performance and thus their influence on future performance.

Other techniques include statistical approaches employing regression or Monte Carlo simulations, or a “bottoms-up” summation of EACs at different WBS levels. The different methodologies for computing EAC testify to an inherent flexibility of the metric to match the complexities of any given project and could, if employed properly and considered in light of their base assumptions, provide valuable tools for tracking and projecting project performance. However, this variability undermines EAC’s usefulness in computing percent spent in a consistent manner.

Consequently, DCMA EVMS Specialists consistently employ the Budget at Completion for

computing %spent.
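To show how much the EAC can move with the chosen methodology, the sketch below implements three commonly used index-based forms of the approaches described above. The exact formulas, function names, and default weights are assumptions for illustration; this section of the Pamphlet does not prescribe them.

```python
def eac_at_budgeted_rate(bac, bcwp_cum, acwp_cum):
    """Assumes remaining work is performed at the budgeted rate."""
    return acwp_cum + (bac - bcwp_cum)


def eac_cpi(bac, bcwp_cum, acwp_cum):
    """Assumes past cost efficiency (CPI) continues for the remaining work."""
    cpi = bcwp_cum / acwp_cum
    return acwp_cum + (bac - bcwp_cum) / cpi


def eac_weighted(bac, bcwp_cum, acwp_cum, bcws_cum,
                 cpi_weight=0.5, spi_weight=0.5):
    """Modifies the remaining work by a weighted blend of CPI and SPI."""
    cpi = bcwp_cum / acwp_cum
    spi = bcwp_cum / bcws_cum
    factor = cpi_weight * cpi + spi_weight * spi
    return acwp_cum + (bac - bcwp_cum) / factor


# Illustrative values only: three different answers from the same data.
estimates = (
    eac_at_budgeted_rate(1000.0, 400.0, 500.0),
    eac_cpi(1000.0, 400.0, 500.0),
    eac_weighted(1000.0, 400.0, 500.0, 450.0),
)
```

Because %spent computed against each of these estimates would differ, using the BAC as the denominator keeps the metric comparable across programs.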

3.1.2 SCHEDULE

The second subgroup of performance metrics is schedule. The schedule performance metrics

selected for performance indicators are the Schedule Performance Index (SPI), the SPI Trend,

the Critical Path Length Index (CPLI), and the Baseline Execution Index (BEI).

3.1.2.1 SCHEDULE PERFORMANCE INDEX (SPI)

The Schedule Performance Index (SPI) is an efficiency factor representing the relationship

between the performance achieved and the initial planned schedule. The SPI for programs

without an OTB is calculated as follows:

$$\text{Schedule Performance Index}_{\text{cumulative}} = \frac{\text{Earned}}{\text{Budgeted}} \quad \text{or} \quad SPI_{cum} = \frac{BCWP_{cum}}{BCWS_{cum}}$$

An index of 1.00 or greater indicates that work is being accomplished at a rate on or ahead of

what was planned. An index of less than 1.00 suggests work is being accomplished at a rate

below the planned schedule. An index of less than 0.95 is used as an early warning indication of schedule execution problems and should be investigated.

$BCWP_{cum}$ is found in block 8.g. column 8 of CPR Format 1 and $BCWS_{cum}$ in block 8.g. column 7. The SPI for programs with an OTB is calculated as:

$$SPI_{cum} = \frac{BCWP_{cum} - BCWP_{\text{cum @ time of OTB}}}{BCWS_{cum} - BCWS_{\text{cum @ time of OTB}}}$$
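Both SPI forms can be sketched the same way as the CPI. The function names and sample values below are hypothetical; the formulas and the 0.95 threshold are taken from this section.

```python
SPI_WARNING_THRESHOLD = 0.95  # early warning level stated in this section


def spi_cum(bcwp_cum, bcws_cum):
    """Cumulative SPI for a program without an OTB."""
    return bcwp_cum / bcws_cum


def spi_since_otb(bcwp_cum, bcws_cum, bcwp_at_otb, bcws_at_otb):
    """SPI for a program with an OTB: values at the time of the OTB are removed."""
    return (bcwp_cum - bcwp_at_otb) / (bcws_cum - bcws_at_otb)


# Illustrative values only.
overall = spi_cum(bcwp_cum=800.0, bcws_cum=1000.0)
since_otb = spi_since_otb(bcwp_cum=800.0, bcws_cum=1000.0,
                          bcwp_at_otb=500.0, bcws_at_otb=700.0)
flagged = since_otb < SPI_WARNING_THRESHOLD
```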

3.1.2.2 SCHEDULE PERFORMANCE INDEX (SPI) TREND

Similar to the CPI Trend, the SPI Trend is a comparison of the metric for this reporting period (usually monthly) to the same metric in prior reporting periods. An SPI trend is favorable if the SPI increases in value over the course of multiple reporting periods. Conversely, the SPI trend is unfavorable if it decreases in value.

3.1.2.3 CRITICAL PATH LENGTH INDEX (CPLI)

The Critical Path Length Index (CPLI) is a measure of the efficiency required to complete a

milestone on-time. It measures critical path “realism” relative to the baselined finish date, when

constrained. A CPLI of 1.00 means that the program must accomplish one day’s worth of work

for every day that passes. A CPLI less than 1.00 means that the program schedule is inefficient

with regard to meeting the baseline date of the milestone (i.e. going to finish late). A CPLI

greater than 1.00 means the program is running efficiently with regard to meeting the baseline

date of the milestone (i.e. going to finish early). The CPLI is an indicator of efficiency relating

to tasks on a milestone’s critical path (not to other tasks within the schedule). The CPLI is a

measure of the relative achievability of the critical path. A CPLI less than 0.95 should be

considered a flag and requires further investigation.

The CPLI requires determining the program schedule’s Critical Path Length (CPL) and the Total Float (TF). The CPL is the length in work days from time now until the next program milestone that is being measured. TF is the number of days a project can be delayed before delaying the project completion date. TF can be negative, which reflects that the program is behind schedule. The mathematical calculation of total float is generally accepted to be the difference between the “late finish” date and the “early finish” date (late finish minus early finish equals total float).

The formula for CPLI is as follows:

$$\text{Critical Path Length Index (CPLI)} = \frac{CPL + TF}{CPL}$$
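As a worked illustration of the formula, the sketch below computes the CPLI from a critical path length and total float, both in working days. The function name and the numbers are hypothetical.

```python
CPLI_FLAG_THRESHOLD = 0.95  # flag level stated in this section


def cpli(cpl_days, total_float_days):
    """CPLI = (CPL + TF) / CPL, with both inputs in working days."""
    if cpl_days <= 0:
        raise ValueError("critical path length must be positive")
    return (cpl_days + total_float_days) / cpl_days


# Illustrative case: 100 working days remain and the milestone
# carries 10 days of negative float.
index = cpli(cpl_days=100, total_float_days=-10)
flagged = index < CPLI_FLAG_THRESHOLD
```

With zero float the index is exactly 1.00; negative float pushes it below 1.00 and positive float above it.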

For programs that provide the IMS in a native schedule format, use the “Critical Path Guide” to determine the program’s critical path. Once the critical path has been identified, the CPL is calculated by inserting a new task into the IMS, with an actual start date of the IMS status date. The CPL is determined by inserting a number value in the duration of this new task until the finish date equals the finish date of the completion milestone identified by the critical path analysis. This is a trial and error process to get the correct duration for the CPL. Total Float for the completion milestone is recorded for conducting the CPLI calculation.

For programs that provide the IMS in a static format, the CPL is estimated by counting workdays between the start and finish. This can also be done by creating a new project file and entering the dates in the manner described in the Critical Path Guide. Total Float used for this method is the float for the completion milestone identified on the critical path. If the IMS is not delivered in the native software tool and is not independently verified, note the data used, the method of verification (or that verification could not be independently conducted), and the method used to assess the critical path.

In addition to recording the CPLI results, it is important to document any rationale for the

completion milestone chosen and the analysis method used to calculate the critical path. It is

also important to note if the final milestone or task in the schedule has a baseline finish date

beyond the contract period of performance (CPR Format 3, Block 5.k.).

3.1.2.4 BASELINE EXECUTION INDEX (BEI)

The Baseline Execution Index (BEI) metric is an IMS-based metric that calculates the efficiency

with which tasks have been accomplished when measured against the baseline tasks. In other

words, it is a measure of task throughput. The BEI provides insight into the realism of program

cost, resource, and schedule estimates. It compares the cumulative number of tasks completed to

the cumulative number of tasks with a baseline finish date on or before the current reporting

period. BEI does not provide insight into tasks completed early or late (before or after the

baseline finish date), as long as the task was completed prior to time now. See the Hit Task

Percentage metric below for further insight into on-time performance. If the contractor

completes more tasks than planned, then the BEI will be higher than 1.00 reflecting a higher task

throughput than planned. Tasks missing baseline finish dates are included in the denominator. A BEI less than 0.95 should be considered a flag and requires additional investigation. The BEI is calculated as follows:

$$BEI_{cum} = \frac{\text{Total \# of Tasks Complete}}{\text{Total \# of Tasks with Baseline Finish On or Before Now} + \text{Total \# of Tasks Missing Baseline Finish Date}}$$

The BEI is always compared against the Hit Task Percentage. The Hit Task Percentage is a metric that compares the number of tasks completed early or on time to the number of tasks with a baseline finish date within a given fiscal month. This metric can never exceed a value of 1, since the metric assesses the status of tasks with a baseline finish date within a single fiscal month.

The following are definitions to become familiar with when calculating the BEI metric:

• Total Tasks: The total number of tasks with detail level work associated with them.

• Baseline Count: The number of tasks with a baseline finish date on or before the reporting period end.

Begin by exporting or copying the IMS data to an MS Excel Worksheet. Include the Unique ID,

Task Name, EV Method, Duration, Summary, Actual Finish, Baseline Finish and Finish

Variance fields from the IMS file. Employ the following steps:

1. Filter out Level of Effort (LOE) tasks, summary tasks, and zero duration tasks

(milestones).

2. Filter the Baseline Finish data to include only dates up to the current reporting period of

the IMS.

3. Subtotal the number of tasks as the “Baseline Count”.

4. Undo the filter on Baseline Finish.

5. Filter the Actual Finish data to include only dates up to the current reporting period of the

IMS.

6. Subtotal the number of tasks as the “Total number of tasks completed”.

7. Undo the filter on Actual Finish.

8. Divide the “Total number of tasks completed” by the “Baseline Count” to get the BEI

value.
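The spreadsheet steps above can also be expressed as a short script. This is a minimal sketch under stated assumptions: the `Task` record and its field names are hypothetical stand-ins for the exported IMS columns, and tasks missing a baseline finish date are added to the denominator as the text of this section describes.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    # Hypothetical record mirroring the exported IMS fields.
    ev_method: str                   # e.g. "LOE" or "Discrete"
    duration_days: float
    is_summary: bool
    baseline_finish: Optional[date]
    actual_finish: Optional[date]


def bei(tasks, period_end):
    # Step 1: drop LOE, summary, and zero-duration (milestone) tasks.
    detail = [t for t in tasks
              if t.ev_method != "LOE" and not t.is_summary and t.duration_days > 0]
    # Steps 2-3: "Baseline Count" = baseline finish on or before period end.
    baseline_count = sum(1 for t in detail
                         if t.baseline_finish is not None
                         and t.baseline_finish <= period_end)
    # Tasks missing a baseline finish date are included in the denominator.
    missing_baseline = sum(1 for t in detail if t.baseline_finish is None)
    # Steps 5-6: tasks actually finished on or before period end.
    completed = sum(1 for t in detail
                    if t.actual_finish is not None
                    and t.actual_finish <= period_end)
    denominator = baseline_count + missing_baseline
    if denominator == 0:
        raise ValueError("no baselined tasks due in this period")
    # Step 8: completed tasks divided by the baseline count.
    return completed / denominator
```

A task finished ahead of a later baseline date still counts in the numerator, which is how the BEI can exceed 1.00.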

The Hit Task Percentage is calculated as follows:

$$\text{Hit Task \%} = \frac{\text{Total \# of tasks complete on or before task baseline date}}{\text{\# of tasks with baseline finish date within current reporting period}}$$

Steps:

1. Using the same spreadsheet used to calculate the BEI, clear all filters and reapply filters

to remove all LOE, summary and zero duration (milestone) tasks.

2. Filter the baseline finish less than or equal to current reporting month end.

3. Subtotal these tasks as “Current Period Baseline Count” tasks.

4. Filter (within above filter) the actual finish less than or equal to baseline finish date.

5. Subtotal these tasks as “Actual Hit”.

6. Divide the “Actual Hit” number by the “Current Period Baseline Count” number to get the Current Hit Task Percentage.
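The Hit Task calculation can be sketched in the same style. The `Task` record and field names are hypothetical, and the sketch follows the formula's denominator (baseline finish dates that fall within the current reporting period), which is an interpretive assumption; the result is returned as a fraction between 0 and 1.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    # Hypothetical record mirroring the exported IMS fields.
    ev_method: str                   # e.g. "LOE" or "Discrete"
    duration_days: float
    is_summary: bool
    baseline_finish: Optional[date]
    actual_finish: Optional[date]


def hit_task_ratio(tasks, period_start, period_end):
    # Step 1: drop LOE, summary, and zero-duration (milestone) tasks.
    detail = [t for t in tasks
              if t.ev_method != "LOE" and not t.is_summary and t.duration_days > 0]
    # Steps 2-3: tasks baselined to finish within the reporting period.
    due = [t for t in detail
           if t.baseline_finish is not None
           and period_start <= t.baseline_finish <= period_end]
    if not due:
        raise ValueError("no tasks baselined to finish in this period")
    # Steps 4-5: of those, tasks finished on or before their baseline date.
    hits = sum(1 for t in due
               if t.actual_finish is not None
               and t.actual_finish <= t.baseline_finish)
    # Step 6: "Actual Hit" divided by the current period baseline count.
    return hits / len(due)
```

Because the numerator is a subset of the denominator, the result cannot exceed 1.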

3.1.3 ESTIMATE AT COMPLETION (EAC)

The third subgroup of performance metrics is estimate at completion (EAC). The EAC metrics selected for performance indicators are CPI-TCPI, the ratio of BAC to EAC, and the ratio of %comp to %MR used.

3.1.3.1 “COST PERFORMANCE INDEX” – “TO COMPLETE PERFORMANCE INDEX”

This metric compares the Cost Performance Index ($CPI_{cum}$) to the To Complete Performance Index ($TCPI_{EAC}$). This metric gauges the realism of the contractor’s Latest Revised Estimate (LRE) or Estimate at Completion (EAC) and is most useful when the program is at least 15% complete and less than 95% complete. A mathematical difference of 0.10 or greater is used as an early warning indication that the contractor’s forecasted completion cost could possibly be unrealistic, stale, or not updated recently.

A $CPI_{cum}$ – $TCPI_{EAC}$ difference greater than or equal to 0.10 (using the absolute value of the difference) should be considered a flag. To begin, it is first necessary to calculate the $CPI_{cum}$. Follow the same method described in section 3.1.1.1 of this Pamphlet. The $CPI_{cum}$ formula is repeated here for convenience:

$$\text{Cost Performance Index } (CPI_{cum}) = \frac{BCWP_{cum}}{ACWP_{cum}}$$

$TCPI_{EAC}$ reflects the work remaining divided by the cost remaining as follows:

$$\text{To Complete Performance Index } (TCPI_{EAC}) = \frac{BAC - BCWP_{cum}}{EAC - ACWP_{cum}}$$

In the case of an OTB, replace Budget at Completion (BAC) with the Total Allocated Budget

(TAB). The EAC used in this formula is the contractor’s most likely EAC from Block 6.c. of the

header information in the CPR Format 1.

The $CPI_{cum}$ and the $TCPI_{EAC}$ are compared to evaluate the realism of the supplier’s EAC and to evaluate the reasonableness of using past efficiencies to predict future efficiencies. It is possible that the nature of the work has changed, thus making predictions based on past performance unjustified.
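The comparison can be sketched as follows. Function names and sample values are hypothetical; for a program with an OTB, the TAB would be passed in place of the BAC, as noted above.

```python
def tcpi_eac(bac, bcwp_cum, eac, acwp_cum):
    """Work remaining divided by cost remaining under the contractor's EAC."""
    return (bac - bcwp_cum) / (eac - acwp_cum)


def cpi_tcpi_flag(bac, bcwp_cum, acwp_cum, eac, threshold=0.10):
    """True when |CPI_cum - TCPI_EAC| meets or exceeds the 0.10 threshold."""
    cpi = bcwp_cum / acwp_cum
    tcpi = tcpi_eac(bac, bcwp_cum, eac, acwp_cum)
    return abs(cpi - tcpi) >= threshold


# Illustrative values only: the program has run at a CPI of 0.80 but the
# EAC implies an efficiency of about 1.09 on the remaining work.
flag = cpi_tcpi_flag(bac=1000.0, bcwp_cum=400.0, acwp_cum=500.0, eac=1050.0)
```

A flag here suggests that the EAC assumes a much better efficiency than has been demonstrated to date and warrants a closer look.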

3.1.3.2 THE RATIO: “BUDGET AT COMPLETION” TO “ESTIMATE AT COMPLETION”

The budget at completion (BAC) and estimate at completion (EAC) are values that can be found on the CPR Format 1. This metric divides BAC by EAC to determine a ratio. A ratio value above 1.0 indicates the program is estimated to complete under budget. A ratio value of less than 1.0 indicates a projected cost over-run.

3.1.3.3 THE RATIO: “% COMPLETE” TO “% MANAGEMENT RESERVE USED”

This metric divides the program percent complete (%comp) by the percentage of management reserve (%MR) used to date. It provides insight into how quickly the MR is being depleted. If the rate of MR usage is high, it may indicate the original performance measurement baseline did not contain the necessary budget for accomplishing the contract statement of work. Since this metric is a ratio of two different metrics, those components must be calculated first. To calculate %comp use the following formula:

$$\text{Percent Complete} = \%comp = \frac{BCWP_{cum}}{BAC} \times 100$$

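To calculate %MR, use the following formula (multiplied by 100 so that it is expressed on the same percentage scale as %comp):

$$\text{Percent Management Reserve} = \%MR = \frac{\text{Total amount of MR used}}{\text{Total amount of MR added to the program}} \times 100$$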

Keep in mind that MR may be added or removed based on contractual actions. So it is important

to account for all the MR debits and credits when calculating this metric. It is not simply the

current value of MR divided by the original value of MR. In fact, if significant credits have been

made to MR since program inception, the current MR value might actually be greater than the

original value, even if some MR was debited.

Now take the ratio of %comp and %MR:

$$\text{Ratio} = \frac{\text{Percent Complete}}{\text{Percent Management Reserve}} = \frac{\%comp}{\%MR}$$

The resulting value should be equal to 1.0 ± 0.1. A value greater than 1.0 indicates that the

Management Reserve is possibly being too conservatively withheld, while a value less than 1.0

indicates that there may not be enough Management Reserve to support the program through

completion.
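A small sketch of this ratio follows. The function names and values are hypothetical, and both components are expressed as percentages so the ratio can be compared against 1.0 ± 0.1; the MR totals are assumed to already account for all debits and credits.

```python
def percent_complete(bcwp_cum, bac):
    return bcwp_cum / bac * 100


def percent_mr_used(mr_used_total, mr_added_total):
    """MR used as a percentage of all MR ever added to the program."""
    return mr_used_total / mr_added_total * 100


def comp_to_mr_ratio(bcwp_cum, bac, mr_used_total, mr_added_total):
    return (percent_complete(bcwp_cum, bac)
            / percent_mr_used(mr_used_total, mr_added_total))


# Illustrative values only: 40% complete with 50% of the MR consumed.
ratio = comp_to_mr_ratio(bcwp_cum=400.0, bac=1000.0,
                         mr_used_total=50.0, mr_added_total=100.0)
within_band = 0.9 <= ratio <= 1.1
```

Here the ratio falls below the band, suggesting MR is being consumed faster than work is being completed.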

3.2 DATA INTEGRITY ASSESSMENT

This section of the EVM performance indicators discusses Data Integrity. As the name suggests,

these metrics determine the validity and accuracy of EVM data produced by the contractor for

management decision making. These metrics reflect the trustworthiness of EVM reports, such as

the CPR.

3.2.1 SYSTEM COMPLIANCE

As the Department of Defense Cognizant Federal Agency for EVMS compliance, DCMA

determines the system compliance of a contractor’s EVMS. System Compliance is one type of

data integrity assessment as it helps ensure the EVM data is valid.

3.2.1.1 SYSTEM COMPLIANCE STATUS RATING

The system compliance rating can be Approved, Disapproved, Not Evaluated, or Not Applicable.

See section 2.8 for a detailed discussion regarding the EVM system status. Official ratings can

be found in the Contractor Business Analysis Repository (CBAR) eTool.

3.2.2 BASELINE QUALITY

Baseline quality is a type of data integrity assessment that determines the quality of the initial

performance measurement baseline (PMB). The metrics indicate the amount of planning and

forethought placed into the PMB.

3.2.2.1 BASELINE INDICATOR

The Baseline Indicator assesses the health of the contractor’s Performance Measurement

Baseline (PMB). It is a qualitative metric that identifies the quality, completeness and adequacy

of the contractor’s Integrated Baseline Review (IBR) and any follow-on baseline reviews. The

following is a list of events that would adversely impact this indicator:

1. Initial IBR not conducted within 180 days from the contract award date.

2. Incomplete IBR conducted; cost, schedule, technical, resource and management risks not

addressed in the IBR.

3. Inadequate plan to address the program risks; risks can be assessed as high but the

supplier either needs to have a plan to mitigate the risk or account for it with a larger

Estimate at Completion (EAC).

4. Follow on IBRs not conducted within 180 days.

5. IBR not conducted after major scope changes, formal reprogramming/restructuring or re-baseline efforts.

6. Baseline does not capture the total scope of work.

7. Supplier does not have an executable, time-phased plan (resourced IMS).

8. Multiple, ineffective Over Target Baselines (OTBs) or incorrectly implemented OTBs

over the life of the program.

9. Excessive, ongoing baseline revisions.

Documents to review include the IBR briefs, action item resolution forms, and out-brief risks

and risk mitigation plans. With respect to IBR documentation, ensure that:

1. The date the IBR is conducted falls within the required time limit from contract award.

2. If high risks were identified at IBR:

a. Have the risks been mitigated? or

b. Have mitigation plans been developed? or

c. Have the risks been offset by budgetary and/or schedule considerations?

With respect to the IMS, ensure that:

1. All tasks have baseline start and baseline finish dates.

2. IMS has incorporated the Risk and Mitigation plans from IBR or subsequent re-plans.

3. Frank discussions with PI and EVMS Specialists are held to get their opinion of the

adequacy/scope of the IBR(s).

3.2.2.2 BASELINE REVISIONS INDEX

See section 2.7 for a detailed discussion regarding the baseline revisions index.

3.2.2.3 CONTRACT MODIFICATIONS

The Contract Modifications metric highlights changes made to the contract dollar value from the

time of award to the present. A delta of ten percent is used as an early warning indication that

the program’s technical requirements may not have been understood at the time of contract

award, that poor contracting practices may have been in place, and/or that the entire scope of the

contract may not have been entirely understood. This metric helps to identify when new

requirements have been added to the contract (requirements creep) or when existing

requirements have been modified extensively. A Contract Modifications percentage greater than

or equal to 10% should be considered a flag. The Contract Modification Calculation is

performed as follows:

$$\text{Contract Mods \%} = \frac{(\text{CPR Format 3 Block 5.e}) - (\text{CPR Format 3 Block 5.a})}{\text{CPR Format 3 Block 5.a}} \times 100$$
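The calculation can be sketched as follows. The parameter names simply echo the CPR Format 3 block labels used in the formula, and the dollar values are illustrative.

```python
CONTRACT_MODS_FLAG_PCT = 10.0  # flag level stated in this section


def contract_mods_pct(block_5a, block_5e):
    """Percentage change from CPR Format 3 Block 5.a to Block 5.e."""
    if block_5a == 0:
        raise ValueError("Block 5.a is zero; the percentage is undefined")
    return (block_5e - block_5a) / block_5a * 100


# Illustrative values only.
pct = contract_mods_pct(block_5a=50_000_000, block_5e=56_000_000)
flagged = pct >= CONTRACT_MODS_FLAG_PCT
```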

4.0 14 POINT SCHEDULE METRICS FOR IMS (PROJECT/OPEN PLAN, ETC.) ANALYSIS

The DCMA 14 Point Schedule Metrics were developed to identify potential problem areas with a

contractor’s IMS. This analysis should exclude Completed tasks, LOE tasks, Subprojects (called

Summary tasks in MS Project), and Milestones. These metrics provide the analyst with a

framework for asking educated questions and performing follow-up research. The identification

of a “red” metric is not in and of itself synonymous with failure but rather an indicator or a

catalyst to dig deeper in the analysis for understanding the reason for the situation.

Consequently, correction of that metric is not necessarily required, but it should be understood.

A detailed and comprehensive training course has been developed (Reference 3). The training

identifies the techniques (14 Point Assessment), tools, and reference materials used by EVMS

Specialists to accomplish schedule analysis requirements. After taking the course, students will

be able to determine whether schedules are realistic and perform schedule analysis using tools

such as MS Project and MS Excel. The Agency’s automated MS Project macro for performing the 14 Point Assessment is demonstrated and provided within the course presentation, which is available online at http://guidebook.dcma.mil/51/index.cfm#tools.

4.1 LOGIC

This metric identifies incomplete tasks with missing logic links. It helps identify how well or

poorly the schedule is linked together. Even if links exist, the logic still needs to be verified by

the technical leads to ensure that the links make sense. Any incomplete task that is missing a

predecessor and/or a successor is included in this metric. The number of tasks without predecessors and/or successors should not exceed 5%. An excess of 5% should be considered a flag. The formula for calculating this metric is as follows:

$$\text{Missing Logic \%} = \frac{\text{\# of tasks missing logic}}{\text{\# of incomplete tasks}} \times 100$$
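A minimal sketch of the missing logic check follows. The `Task` record is hypothetical, and the input list is assumed to already exclude completed tasks, LOE tasks, summary tasks, and milestones as described in section 4.0.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    # Hypothetical record: IDs of linked predecessor and successor tasks.
    predecessors: List[int] = field(default_factory=list)
    successors: List[int] = field(default_factory=list)


def missing_logic_pct(incomplete_tasks):
    """Percentage of incomplete tasks missing a predecessor and/or a successor."""
    if not incomplete_tasks:
        raise ValueError("no incomplete tasks to assess")
    missing = sum(1 for t in incomplete_tasks
                  if not t.predecessors or not t.successors)
    return missing / len(incomplete_tasks) * 100
```

The percentage only shows that links exist; whether each link makes sense still has to be verified by the technical leads.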

4.2 LEADS

This metric identifies the number of logic links with a lead (negative lag) in predecessor relationships for incomplete tasks. The critical path and any subsequent analysis can be adversely affected by using leads. The use of leads distorts the total float in the schedule and may cause resource conflicts. Per the IMS Data Item Description (DID), negative time is not demonstrable and should not be encouraged. Using MS Excel, count the number of “Leads” that are found. Leads should not be used; therefore, the goal for this metric is 0.

$$\text{Leads \%} = \frac{\text{\# of logic links with leads}}{\text{\# of logic links}} \times 100$$

4.3 LAGS

This represents the number of lags in predecessor logic relationships for incomplete tasks. The critical path and any subsequent analysis can be adversely affected by using lags. Per the IMS DID, lag should not be used to manipulate float/slack or to restrain the schedule. Using MS Excel, count the number of “Lags” that are found. The number of relationships with lags should not exceed 5%.

$$\text{Lags \%} = \frac{\text{\# of logic links with lags}}{\text{\# of logic links}} \times 100$$

4.4 RELATIONSHIP TYPES

The metric provides a count of incomplete tasks containing each type of logic link. The Finish-to-Start (FS) relationship type (“once the predecessor is finished, the successor can start”) provides a logical path through the program and should account for at least 90% of the relationship types being used. The Start-to-Finish (SF) relationship type is counter-intuitive (“the successor can’t finish until the predecessor starts”) and should only be used very rarely and with detailed justification. By counting the number of Start-to-Start (SS), Finish-to-Finish (FF), and Start-to-Finish (SF) relationship types, the % of Finish-to-Start (FS) relationship types can be calculated.

$$\text{\% of FS Relationship Types} = \frac{\text{\# of logic links with FS Relationships}}{\text{\# of logic links}} \times 100$$
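The three link-based metrics in sections 4.2 through 4.4 share the same denominator, so they can be computed in one pass. The `Link` record and its field names are hypothetical, and the list is assumed to contain only the predecessor links of incomplete tasks.

```python
from dataclasses import dataclass


@dataclass
class Link:
    # Hypothetical record for one predecessor relationship.
    rel_type: str     # "FS", "SS", "FF", or "SF"
    lag_days: float   # negative = lead, positive = lag, zero = neither


def link_metrics(links):
    """Return Leads %, Lags %, and % of FS relationship types."""
    total = len(links)
    if total == 0:
        raise ValueError("no logic links to assess")
    leads = sum(1 for link in links if link.lag_days < 0)
    lags = sum(1 for link in links if link.lag_days > 0)
    fs = sum(1 for link in links if link.rel_type == "FS")
    return {
        "leads_pct": leads / total * 100,   # goal: 0
        "lags_pct": lags / total * 100,     # should not exceed 5%
        "fs_pct": fs / total * 100,         # should be at least 90%
    }
```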

4.5 HARD CONSTRAINTS

This is a count of incomplete tasks with hard constraints in use. Using hard constraints [Must-Finish-On (MFO), Must-Start-On (MSO), Start-No-Later-Than (SNLT), & Finish-No-Later-Than (FNLT)] may prevent tasks from being moved by their dependencies and, therefore, prevent the schedule from being logic-driven. Soft constraints such as As-Soon-As-Possible (ASAP), Start-No-Earlier-Than (SNET), and Finish-No-Earlier-Than (FNET) enable the schedule to be logic-driven. Divide the total number of hard constraints by the number of incomplete tasks. The number of tasks with hard constraints should not exceed 5%.

$$\text{Hard Constraint \%} = \frac{\text{Total \# of incomplete tasks with hard constraints}}{\text{Total \# of incomplete tasks}} \times 100$$

4.6 HIGH FLOAT

An incomplete task with total float greater than 44 working days (2 months) is counted in this metric. A task with total float over 44 working days may be a result of missing predecessors and/or successors. If the percentage of tasks with excessive total float exceeds 5%, the network may be unstable and may not be logic-driven.

$$\text{High Float \%} = \frac{\text{Total \# of incomplete tasks with high float}}{\text{Total \# of incomplete tasks}} \times 100$$

4.7 NEGATIVE FLOAT

An incomplete task with total float less than 0 working days is included in this metric. It helps identify tasks that are delaying completion of one or more milestones. Tasks with negative float should have an explanation and a corrective action plan to mitigate the negative float. Divide the total number of tasks with negative float by the number of incomplete tasks. Ideally, there should not be any negative float in the schedule.

$$\text{Negative Float \%} = \frac{\text{Total \# of incomplete tasks with negative float}}{\text{Total \# of incomplete tasks}} \times 100$$

4.8 HIGH DURATION

An incomplete task that has a baseline duration greater than 44 working days (2 months) and a baseline start date within the detail planning period or rolling wave is included in this metric. It helps to determine whether or not a task can be broken into two or more discrete tasks rather than one. In addition, it helps to make tasks more manageable, which provides better insight into cost and schedule performance. Divide the number of incomplete tasks with high duration by the total number of incomplete tasks. The number of tasks with high duration should not exceed 5%.

$$\text{High Duration \%} = \frac{\text{Total \# of incomplete tasks with high duration}}{\text{Total \# of incomplete tasks}} \times 100$$
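The task-based percentages in sections 4.5 through 4.8 all divide by the number of incomplete tasks, so one pass over the task list covers them. The `Task` record, its field names, and the constraint abbreviations used as codes are hypothetical stand-ins for whatever the scheduling tool exports.

```python
from dataclasses import dataclass

HARD_CONSTRAINTS = {"MFO", "MSO", "SNLT", "FNLT"}
HIGH_THRESHOLD_DAYS = 44  # working days (2 months)


@dataclass
class Task:
    # Hypothetical record for one incomplete task.
    constraint: str                  # e.g. "ASAP", "SNET", "MFO"
    total_float_days: float
    baseline_duration_days: float
    in_detail_planning_period: bool  # baseline start inside the rolling wave


def task_metrics(incomplete_tasks):
    total = len(incomplete_tasks)
    if total == 0:
        raise ValueError("no incomplete tasks to assess")

    def pct(count):
        return count / total * 100

    return {
        "hard_constraint_pct": pct(sum(
            1 for t in incomplete_tasks if t.constraint in HARD_CONSTRAINTS)),
        "high_float_pct": pct(sum(
            1 for t in incomplete_tasks
            if t.total_float_days > HIGH_THRESHOLD_DAYS)),
        "negative_float_pct": pct(sum(
            1 for t in incomplete_tasks if t.total_float_days < 0)),
        "high_duration_pct": pct(sum(
            1 for t in incomplete_tasks
            if t.baseline_duration_days > HIGH_THRESHOLD_DAYS
            and t.in_detail_planning_period)),
    }
```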

4.9 INVALID DATES

Incomplete tasks that have a forecast start/finish date prior to the IMS status date, or an actual start/finish date beyond the IMS status date, are included in this metric. A task should have forecast start and forecast finish dates in the future relative to the status date of the IMS (e.g. if the IMS status date is 8/1/09, the forecast date should be on or after 8/1/09). A task should not have an actual start or actual finish date that is in the future relative to the status date of the IMS (e.g. if the IMS status date is 8/1/09, the actual start or finish date should be on or before 8/1/09, not after 8/1/09). There should not be any invalid dates in the schedule.
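The two date rules can be sketched as a single check. The `Task` record is hypothetical, and the sketch assumes a forecast field is left empty (`None`) once the corresponding actual date has been recorded; a real IMS export may need that mapping done first.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Task:
    # Hypothetical record; a field is None when that date is not populated.
    forecast_start: Optional[date] = None
    forecast_finish: Optional[date] = None
    actual_start: Optional[date] = None
    actual_finish: Optional[date] = None


def has_invalid_dates(task, status_date):
    # Forecast dates must be on or after the status date.
    forecast_in_past = any(
        d is not None and d < status_date
        for d in (task.forecast_start, task.forecast_finish))
    # Actual dates must be on or before the status date.
    actual_in_future = any(
        d is not None and d > status_date
        for d in (task.actual_start, task.actual_finish))
    return forecast_in_past or actual_in_future


status = date(2009, 8, 1)  # the status date used in the example above
```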

4.10 RESOURCES

This metric provides verification that all tasks with durations greater than zero have dollars or

hours assigned. Some contractors may not load their resources into the IMS. The IMS DID (DI-

MGMT-81650) does not require the contractor to load resources directly into the schedule. If

the contractor does resource load their schedule, calculate the metric by dividing the number of

incomplete tasks without dollars/hours assigned by

the total number of incomplete tasks.

Missing Resource % = (Total # of incomplete tasks with missing resource / Total # of incomplete tasks) × 100
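A minimal sketch of this calculation follows. The dictionary keys are assumed names, not fields of any particular scheduling tool. The example treats a task as missing resources only when its duration is greater than zero and it carries neither dollars nor hours, and it uses all incomplete tasks as the denominator, as the formula states.

```python
def missing_resource_percent(tasks):
    """Return the Missing Resource % over incomplete tasks (0.0 if there are none)."""
    incomplete = [t for t in tasks if not t["complete"]]
    if not incomplete:
        return 0.0
    missing = [
        t for t in incomplete
        if t["duration_days"] > 0 and not t["dollars"] and not t["hours"]
    ]
    return 100.0 * len(missing) / len(incomplete)


# Illustrative data only; key names are assumptions.
ims_tasks = [
    {"name": "A", "complete": False, "duration_days": 5,  "dollars": 0,    "hours": 40},
    {"name": "B", "complete": False, "duration_days": 10, "dollars": 0,    "hours": 0},  # missing
    {"name": "C", "complete": False, "duration_days": 0,  "dollars": 0,    "hours": 0},  # zero duration
    {"name": "D", "complete": False, "duration_days": 3,  "dollars": 1000, "hours": 0},
    {"name": "E", "complete": True,  "duration_days": 8,  "dollars": 0,    "hours": 0},  # complete
]
missing_resource_pct = missing_resource_percent(ims_tasks)
print(f"Missing Resource % = {missing_resource_pct:.1f}")
```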

4.11 MISSED TASKS

A task is included in this metric if it was supposed to be completed already (baseline finish date on or before the status date) and its actual finish date or forecast finish date (early finish date) is after the baseline finish date, or its Finish Variance (Early Finish minus Baseline Finish) is greater than zero. This metric helps identify how well or poorly the schedule is meeting the baseline plan. To calculate this metric, divide the number of missed tasks by the baseline count, which excludes tasks that are missing baseline start or finish dates. The number of missed tasks should not exceed 5%.

Missed % = (# of tasks with actual/forecast finish date past baseline date / # of tasks with baseline finish dates on or before status date) × 100
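The following sketch shows one way to compute the metric. The dictionary keys and sample dates are assumptions for illustration. The example uses the actual finish when one exists and the forecast finish otherwise, and it drops tasks that lack a baseline start or finish date from the baseline count.

```python
from datetime import date


def missed_percent(tasks, status_date):
    """Return Missed % for tasks whose baseline finish is on or before the status date."""
    due = [
        t for t in tasks
        if t["baseline_start"] is not None
        and t["baseline_finish"] is not None
        and t["baseline_finish"] <= status_date
    ]
    if not due:
        return 0.0

    def finish(t):
        # Prefer the actual finish; fall back to the forecast (early) finish.
        return t["actual_finish"] or t["forecast_finish"]

    missed = [t for t in due if finish(t) is not None and finish(t) > t["baseline_finish"]]
    return 100.0 * len(missed) / len(due)


def task(bs, bf, actual=None, forecast=None):
    # Small helper for building illustrative rows.
    return {"baseline_start": bs, "baseline_finish": bf,
            "actual_finish": actual, "forecast_finish": forecast}


status = date(2009, 8, 1)
schedule = [
    task(date(2009, 6, 1), date(2009, 7, 1), actual=date(2009, 6, 28)),     # finished early
    task(date(2009, 6, 1), date(2009, 7, 15), actual=date(2009, 7, 20)),    # missed
    task(date(2009, 7, 1), date(2009, 7, 31), forecast=date(2009, 8, 10)),  # missed
    task(date(2009, 8, 1), date(2009, 9, 1), forecast=date(2009, 9, 1)),    # not yet due
    task(None, None, forecast=date(2009, 8, 20)),                           # no baseline
    task(date(2009, 7, 1), date(2009, 7, 10), actual=date(2009, 7, 10)),    # on time
]
missed_pct = missed_percent(schedule, status)
print(f"Missed % = {missed_pct:.1f}")
```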

4.12 CRITICAL PATH TEST

The purpose of this test is to check the integrity of the overall network logic and, in particular, the critical path. If the project completion date (or other milestone) is not delayed in direct proportion (assuming zero float) to the amount of intentional slip that is introduced into the schedule as part of this test, then there is broken logic somewhere in the network. Broken logic is the result of missing predecessors and/or successors on tasks where they are needed. The IMS passes the Critical Path Test if the project completion date (or other task/milestone) shows a negative total float number or a revised Early Finish date that is in direct proportion (assuming zero float) to the amount of intentional slip applied.
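The idea behind the test can be illustrated on a toy network. The sketch below is a simplified model, not a scheduling engine: it assumes finish-to-start links only, no calendars or constraints, an acyclic network, and durations in working days. The task names, the 100-day slip, and both function names are hypothetical.

```python
def early_finishes(durations, predecessors):
    """Forward pass: early finish (working days from project start) for every task."""
    finish = {}

    def ef(task):
        if task not in finish:
            start = max((ef(p) for p in predecessors.get(task, [])), default=0)
            finish[task] = start + durations[task]
        return finish[task]

    for task in durations:
        ef(task)
    return finish


def critical_path_test(durations, predecessors, slipped_task, end_task, slip_days=100):
    """Pass if the end task moves by exactly the slip applied to a zero-float task."""
    baseline = early_finishes(durations, predecessors)[end_task]
    slipped = dict(durations)
    slipped[slipped_task] += slip_days
    revised = early_finishes(slipped, predecessors)[end_task]
    return revised - baseline == slip_days


durations = {"A": 5, "B": 10, "C": 3, "End": 0}

# Sound logic: A -> B -> End and A -> C -> End; B is on the critical path.
good_links = {"B": ["A"], "C": ["A"], "End": ["B", "C"]}
sound_network_passes = critical_path_test(durations, good_links, "B", "End")

# Broken logic: B has lost its successor, so slipping B never reaches End.
broken_links = {"B": ["A"], "C": ["A"], "End": ["C"]}
broken_network_passes = critical_path_test(durations, broken_links, "B", "End")

print(sound_network_passes, broken_network_passes)
```

In the broken network the slip is absorbed silently, which is the symptom the test is designed to expose.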

4.13 CRITICAL PATH LENGTH INDEX (CPLI)

See section 3.1.2.3 for a detailed discussion regarding the critical path length index.

4.14 BASELINE EXECUTION INDEX (BEI)

See section 3.1.2.4 for a detailed discussion regarding the Baseline Execution Index.

5.0 DATA INTEGRITY INDICATORS

Data integrity indicators are metrics designed to provide confidence in the quality of the data

being reviewed. Many of the other metrics described in the EVMSPAP are designed to provide

insight into the performance of a program. The data integrity metrics are not primarily intended

for program performance information. If control accounts or work packages have one of the

conditions being tested for by these metrics, then the EVMS Specialist should investigate further.

5.1 BCWScum > BAC

The Budgeted Cost for Work Scheduled (BCWS) is the program budget time-phased over the period of performance. The summation of BCWS for all reporting periods should equal the budget at completion (BAC). In other words, BCWScum should equal BAC in the month the program is planned to complete. Both of these values can be found on the CPR Format 1. Because of this relationship, the value of BCWScum should never exceed BAC. Errors in the EVMS data can nevertheless produce this condition, which makes it necessary to perform this check. The calculation of this metric is simple. Compare the value of BCWScum to the value of BAC; if BCWScum is greater than BAC, consider this an error in the EVMS data and pursue corrective action. There is no plausible explanation for this condition. If the value of BCWScum is less than or equal to BAC, there is no issue.

5.2 BCWPcum > BAC

The Budgeted Cost for Work Performed (BCWP) is the amount of BCWS earned by the completion of work to date. Like the metric in section 5.1, BCWPcum may not exceed the value of BAC. The program is considered complete when BCWPcum equals BAC. The calculation of this metric is simple. Compare the value of BCWPcum to BAC. If BCWPcum is greater, then this is an error requiring corrective action. Otherwise there is no issue.
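The comparisons in sections 5.1 and 5.2 are the same test applied to two different columns, which the sketch below makes explicit. The row layout, key names, and dollar values are assumptions for illustration and are not taken from any real CPR.

```python
def exceeds_bac(elements, field):
    """Return the WBS ids whose cumulative `field` value is greater than BAC."""
    return [e["wbs"] for e in elements if e[field] > e["bac"]]


# Illustrative rows only.
cpr_rows = [
    {"wbs": "1.1", "bcws_cum": 400, "bcwp_cum": 380, "bac": 1000},
    {"wbs": "1.2", "bcws_cum": 520, "bcwp_cum": 500, "bac": 500},  # BCWScum > BAC
    {"wbs": "1.3", "bcws_cum": 300, "bcwp_cum": 310, "bac": 300},  # BCWPcum > BAC
    {"wbs": "1.4", "bcws_cum": 200, "bcwp_cum": 200, "bac": 200},  # complete, no issue
]

bcws_errors = exceeds_bac(cpr_rows, "bcws_cum")
bcwp_errors = exceeds_bac(cpr_rows, "bcwp_cum")
print("BCWScum > BAC:", bcws_errors)
print("BCWPcum > BAC:", bcwp_errors)
```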

5.3 ACWPcum WITH NO BAC

The actual cost of work performed (ACWP) is the total dollars spent on labor, material, subcontracts, and other direct costs in the performance of the contract statement of work. These costs are controlled by the accounting general ledger and should reconcile between the accounting system and EVMS. Work should only be performed if there is a clear contractual requirement. The BAC is required to be traceable to work requirements in the contract statement of work. If work is performed and ACWPcum is incurred without applicable BAC, there may be a misalignment between this work and the requirements of the contract. To test for this condition, review the CPR Format 1 or wInsight data for WBS elements containing ACWPcum but no BAC. If there are elements that meet these criteria, consider this an error that must be investigated. The contractor should provide justification and must take corrective action regardless of the reason.

5.4 ACWPcur WITH NO BAC

This metric is similar to the one in section 5.3. It differs in that the current reporting period actual costs (ACWPcur) are compared to the BAC. The calculation of this metric and the meaning of its results are identical to section 5.3.
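Both ACWP checks (sections 5.3 and 5.4) can be sketched with one helper that takes the column to test. The key names and values are assumptions, and "no BAC" is interpreted here as a BAC of zero.

```python
def acwp_with_no_bac(elements, acwp_field):
    """Return WBS ids that carry actual cost in `acwp_field` but have no BAC."""
    return [e["wbs"] for e in elements if e[acwp_field] != 0 and e["bac"] == 0]


# Illustrative rows only.
wbs_rows = [
    {"wbs": "2.1", "acwp_cum": 150, "acwp_cur": 20, "bac": 900},
    {"wbs": "2.2", "acwp_cum": 75,  "acwp_cur": 0,  "bac": 0},  # cumulative actuals only
    {"wbs": "2.3", "acwp_cum": 40,  "acwp_cur": 40, "bac": 0},  # cumulative and current
    {"wbs": "2.4", "acwp_cum": 0,   "acwp_cur": 0,  "bac": 0},  # nothing charged
]

cum_flags = acwp_with_no_bac(wbs_rows, "acwp_cum")
cur_flags = acwp_with_no_bac(wbs_rows, "acwp_cur")
print("ACWPcum with no BAC:", cum_flags)
print("ACWPcur with no BAC:", cur_flags)
```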

5.5 NEGATIVE BAC

BAC is the total budget assigned to complete the work defined within the contract. A negative total budget is not logical. To test for this condition, examine the CPR Format 1 or wInsight data for a BAC less than zero. This test should be performed at the reported WBS levels as well as the total program level. A BAC less than zero should be considered an error and corrective action must be taken by the contractor.

5.6 ZERO BUDGET WPs

Work packages (WPs) are natural subdivisions of control accounts (CAs). The summation of all

WP budgets within a given CA results in the CA budget. If there is a WP with a budget of zero,

it should be considered a flag because no work can be performed without budget. At a

minimum, there is no need for the WP with a BAC of zero. To test for this condition a detailed

EVMS report must be obtained from the contractor. The CPR Format 1 will not contain sufficient detail down to the WP level. The contractor should be prepared to export the EVMS data down to the WP level in a format compatible with the UN/CEFACT XML schema so that the DCMA EVM specialist can use wInsight to assess the program. It is important to understand at which levels of the WBS the contractor established CAs and WPs. Examine this report to determine if any WPs have a BAC of zero. Consider this condition an error that requires at least an explanation and perhaps corrective action.
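Once WP-level data is available, the budget checks in sections 5.5 and 5.6 reduce to classifying each BAC. The sketch below assumes the export has already been parsed into simple (control account, work package, BAC) tuples; the identifiers and values are hypothetical.

```python
def classify_bac(bac):
    """Label a budget value according to the tests in sections 5.5 and 5.6."""
    if bac < 0:
        return "negative BAC"
    if bac == 0:
        return "zero budget"
    return "ok"


# Illustrative (control account, work package, BAC) rows only.
work_packages = [
    ("CA-100", "WP-101", 5000),
    ("CA-100", "WP-102", 0),
    ("CA-200", "WP-201", -250),
    ("CA-200", "WP-202", 1200),
]

bac_flags = {
    wp: classify_bac(bac)
    for _, wp, bac in work_packages
    if classify_bac(bac) != "ok"
}
print(bac_flags)
```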

5.7 LEVEL OF EFFORT WITH SCHEDULE VARIANCE

Level of effort (LOE) is an earned value technique (EVT) used to calculate BCWP for work that is supportive in nature and produces no definable end product. See ANSI/EIA-748 EVMS Guideline 12 for more information on LOE requirements. The LOE technique automatically earns BCWP equal to the amount of BCWS for the reporting period. Since schedule variance (SV) is defined as the difference between BCWP and BCWS, work measured with the LOE EVT should have an SV of zero. BCWP is earned at the WP level. Therefore, a report with WP level detail and an indication of the EVT used is required to perform this metric. This report can be requested from the contractor as a wInsight export or in another electronic format, such as Microsoft Excel. Check all elements with an EVT of LOE for an SV other than zero. If this condition exists, consider it an error that requires corrective action.
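A short sketch of the check, using SV = BCWP - BCWS as defined above. The key names, the EVT labels other than LOE, and the values are assumptions for illustration.

```python
def loe_with_schedule_variance(elements):
    """Return {work package: SV} for LOE elements whose SV is not zero."""
    flagged = {}
    for e in elements:
        sv = e["bcwp"] - e["bcws"]  # schedule variance
        if e["evt"].upper() == "LOE" and sv != 0:
            flagged[e["wp"]] = sv
    return flagged


# Illustrative WP-level rows only.
wp_rows = [
    {"wp": "WP-301", "evt": "LOE",   "bcws": 100, "bcwp": 100},  # SV = 0, as expected
    {"wp": "WP-302", "evt": "LOE",   "bcws": 100, "bcwp": 80},   # SV = -20, flagged
    {"wp": "WP-303", "evt": "0/100", "bcws": 200, "bcwp": 0},    # discrete EVT, not tested
]

loe_flags = loe_with_schedule_variance(wp_rows)
print(loe_flags)
```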

5.8 BCWP WITH NO ACWP

Since work or materials must be paid for, it should not be possible to earn BCWP without incurring ACWP. This condition may nevertheless occur for elements using the LOE EVT. In that case, it would signify that the support work that was planned to occur is not occurring due to some delay. The delay is likely in the work the LOE function would support. Either way, this condition should be flagged and investigated to determine the root cause. This metric can be calculated using the CPR Format 1 or more detailed wInsight data. Inspect the elements on the report for any instance of BCWPcum with an ACWPcum equal to zero.
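The inspection can be sketched as a simple filter. The example also returns the EVT, where the data provides one, because the text singles out LOE as a likely source of this condition. The key names and values are assumptions.

```python
def bcwp_with_no_acwp(elements):
    """Return (WBS id, EVT) pairs that have earned value but no actual cost."""
    return [
        (e["wbs"], e.get("evt", "unknown"))
        for e in elements
        if e["bcwp_cum"] != 0 and e["acwp_cum"] == 0
    ]


# Illustrative rows only.
report_rows = [
    {"wbs": "3.1", "evt": "Percent complete", "bcwp_cum": 100, "acwp_cum": 90},
    {"wbs": "3.2", "evt": "LOE",              "bcwp_cum": 50,  "acwp_cum": 0},  # flagged
    {"wbs": "3.3", "evt": "LOE",              "bcwp_cum": 0,   "acwp_cum": 0},
    {"wbs": "3.4",                            "bcwp_cum": 30,  "acwp_cum": 0},  # flagged, EVT not reported
]

no_acwp_flags = bcwp_with_no_acwp(report_rows)
print(no_acwp_flags)
```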

5.9 COMPLETED WORK WITH ETC

Work is considered complete when the CA or WP BCWPcum equals BAC. The estimate to complete (ETC) is the to-go portion of the estimate at completion (EAC). The ETC should be zero if the work is complete, as there should be no projected future cost left to incur. A detailed report from the contractor's EVMS is required to calculate this metric. The report will need to have all the information contained in the CPR Format 1 in addition to providing the ETC by WBS element. Examine this report for completed elements (BCWPcum = BAC) with an ETC other than zero. This condition may exist if labor or material invoices are lagging behind and have not been paid yet. However, it still requires investigation to determine the root cause.
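A minimal sketch of this check follows. The key names and values are assumptions. The example also skips elements with a BAC of zero, on the assumption that those are handled by the zero budget test in section 5.6 rather than treated as completed work.

```python
def completed_with_etc(elements):
    """Return WBS ids that are complete (BCWPcum = BAC) but still carry an ETC."""
    return [
        e["wbs"]
        for e in elements
        if e["bac"] != 0 and e["bcwp_cum"] == e["bac"] and e["etc"] != 0
    ]


# Illustrative rows only.
etc_rows = [
    {"wbs": "4.1", "bac": 1000, "bcwp_cum": 1000, "etc": 0},    # complete, no ETC
    {"wbs": "4.2", "bac": 500,  "bcwp_cum": 500,  "etc": 25},   # complete with ETC, flagged
    {"wbs": "4.3", "bac": 800,  "bcwp_cum": 400,  "etc": 450},  # still in progress
]

etc_flags = completed_with_etc(etc_rows)
print(etc_flags)
```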